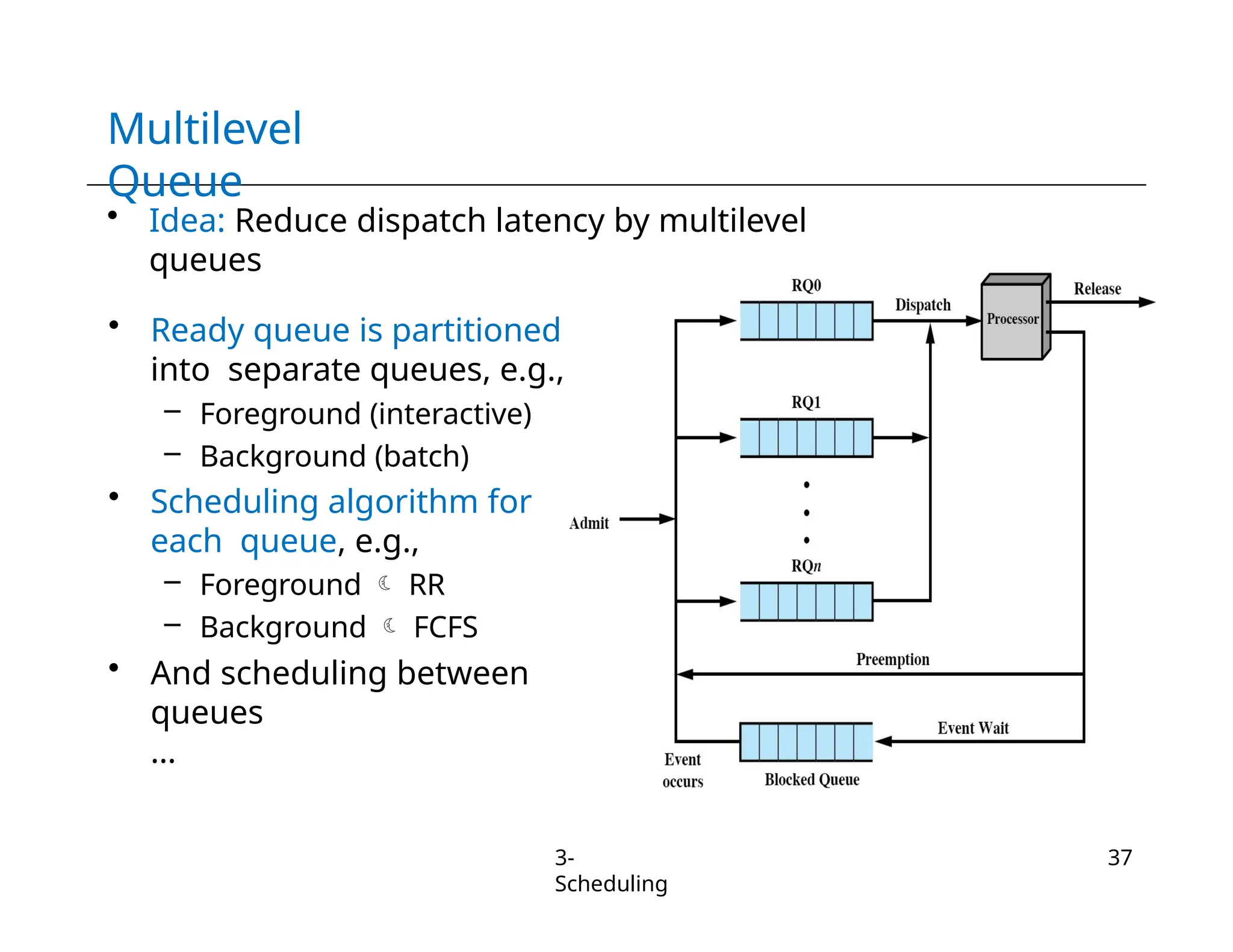

The document discusses various process scheduling techniques in operating systems, highlighting types of schedulers including long-term, medium-term, and short-term. It examines scheduling criteria and optimization goals such as CPU utilization, throughput, fairness, and response time while detailing specific algorithms like First-Come-First-Served (FCFS), Shortest Job First (SJF), and Round Robin. Additionally, the document addresses scheduling challenges such as prioritization, starvation, and the implementation of multilevel queues and feedback mechanisms.

![Shortest Remaining Time First: Example](https://image.slidesharecdn.com/chapter3-scheduling-241103122353-1af78d3e/75/Chapter-3-Operating-System-Scheduling-pptx-22-2048.jpg)

| Process | Arrival time | Burst time |
| --- | --- | --- |
| P1 | 0 | 8 |
| P2 | 1 | 4 |
| P3 | 2 | 9 |
| P4 | 3 | 5 |

Gantt chart:

| P1 | P2 | P4 | P1 | P3 |
| --- | --- | --- | --- | --- |
| 0 to 1 | 1 to 5 | 5 to 10 | 10 to 17 | 17 to 26 |

Average waiting time: [(10-1) + (1-1) + (17-2) + (5-3)]/4 = 26/4 = 6.5
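The Shortest Remaining Time First example above can be checked with a small simulation. The following is a minimal sketch, not the slide author's implementation. The names `Process` and `srtf` are hypothetical, time is assumed to advance in integer units, and ties in remaining time are assumed to go to the process that arrived earlier (the slide does not specify a tie-break rule).

```python
from dataclasses import dataclass


@dataclass
class Process:
    name: str
    arrival: int  # arrival time, in integer time units (assumption)
    burst: int    # total CPU time required, must be positive


def srtf(processes):
    """Simulate preemptive Shortest Remaining Time First, one time unit per step.

    Returns (gantt, waiting):
      gantt   - list of (name, start, end) segments in execution order
      waiting - dict mapping process name to total waiting time
    """
    if any(p.burst <= 0 for p in processes):
        raise ValueError("burst times must be positive")

    remaining = {p.name: p.burst for p in processes}
    finish = {}
    gantt = []
    t = 0
    while len(finish) < len(processes):
        # Processes that have arrived and still need CPU time.
        ready = [p for p in processes if p.arrival <= t and remaining[p.name] > 0]
        if not ready:
            t += 1  # CPU idles until the next arrival
            continue
        # Pick the shortest remaining time; tie-break on earlier arrival (assumption).
        cur = min(ready, key=lambda p: (remaining[p.name], p.arrival))
        # Extend the last Gantt segment if the same process keeps running.
        if gantt and gantt[-1][0] == cur.name and gantt[-1][2] == t:
            gantt[-1] = (cur.name, gantt[-1][1], t + 1)
        else:
            gantt.append((cur.name, t, t + 1))
        remaining[cur.name] -= 1
        t += 1
        if remaining[cur.name] == 0:
            finish[cur.name] = t
    # Waiting time = turnaround time minus burst time.
    waiting = {p.name: finish[p.name] - p.arrival - p.burst for p in processes}
    return gantt, waiting


if __name__ == "__main__":
    procs = [Process("P1", 0, 8), Process("P2", 1, 4),
             Process("P3", 2, 9), Process("P4", 3, 5)]
    gantt, waiting = srtf(procs)
    print(gantt)    # [('P1', 0, 1), ('P2', 1, 5), ('P4', 5, 10), ('P1', 10, 17), ('P3', 17, 26)]
    print(waiting)  # {'P1': 9, 'P2': 0, 'P3': 15, 'P4': 2}
    print(sum(waiting.values()) / len(waiting))  # 6.5
```

Stepping one time unit at a time keeps the preemption logic obvious: the scheduler re-evaluates the ready queue at every tick, so an arriving process with a shorter remaining time (P2 at time 1) takes over immediately. An event-driven version that only re-evaluates on arrivals and completions would be faster for long bursts, but yields the same schedule.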