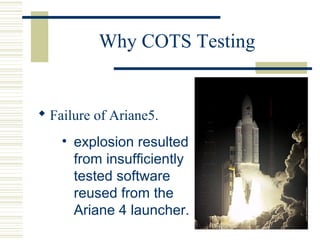

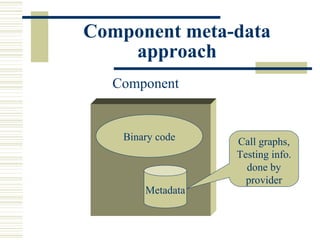

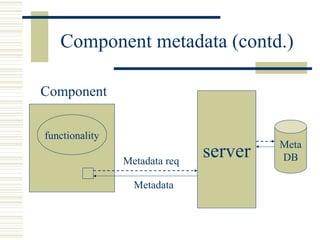

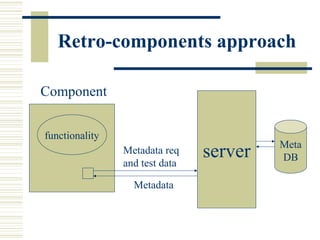

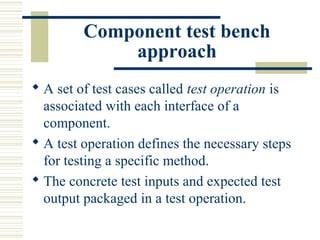

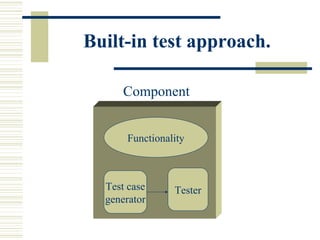

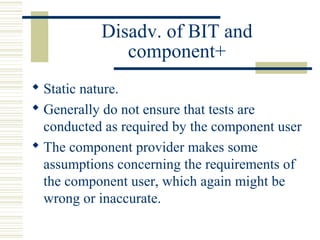

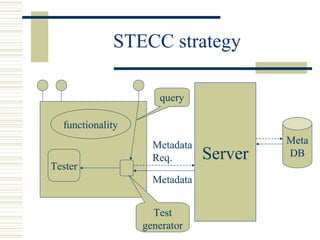

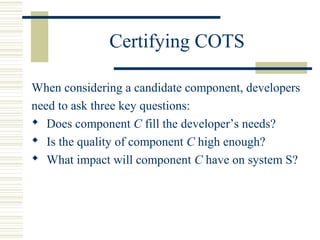

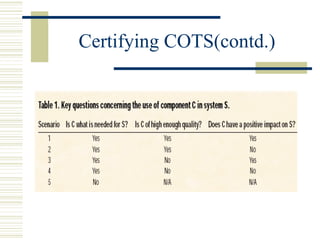

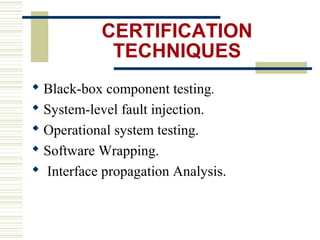

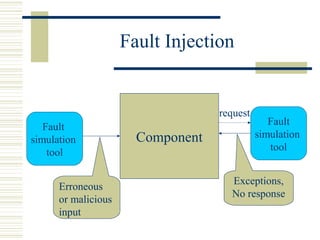

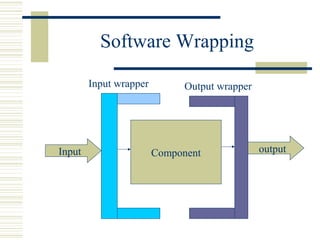

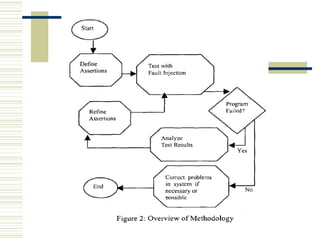

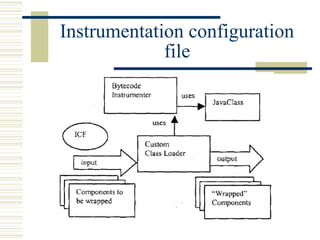

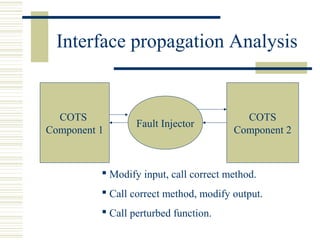

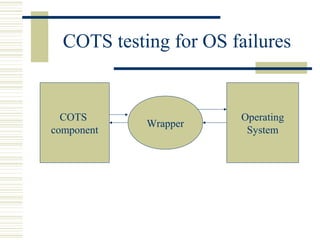

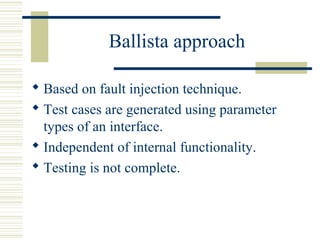

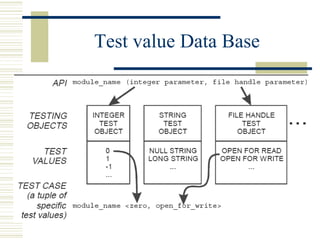

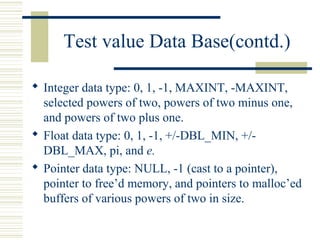

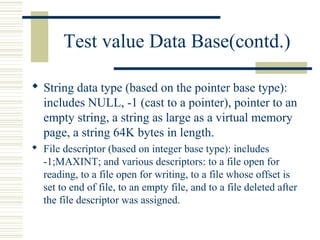

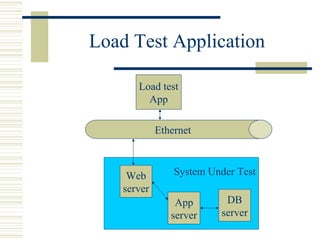

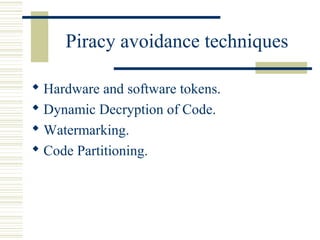

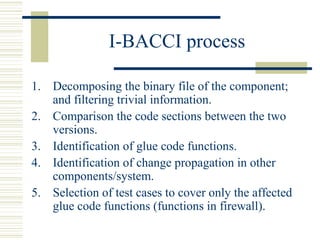

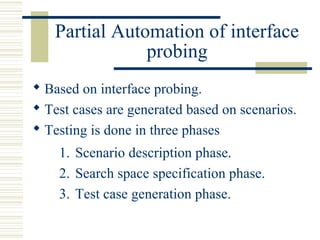

The document discusses techniques for testing commercial off-the-shelf (COTS) components. It describes evaluation and selection methods such as the Analytic Hierarchy Process, and approaches for supplying testing information about a component, such as the component metadata approach. It also covers levels of testing, including unit and integration testing, and types of testing, including functionality, reliability, and security testing.
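
As an illustration of how the Analytic Hierarchy Process can support COTS selection, the sketch below derives criterion weights from a pairwise comparison matrix and uses them to rank two candidates. It is a minimal sketch under stated assumptions, not the procedure from the slides: the criteria, the comparison values, the candidate names, and the per-criterion scores are all hypothetical, and the weights are approximated with the row geometric-mean method rather than the principal eigenvector. A full AHP would also compare the candidates pairwise under each criterion and check the consistency of the judgments.

```python
import math


def ahp_priorities(matrix):
    """Approximate AHP priority weights with the row geometric-mean method."""
    n = len(matrix)
    # Geometric mean of each row of the pairwise comparison matrix.
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    # Normalize so the weights sum to 1.
    return [g / total for g in geo_means]


# Hypothetical criteria and pairwise judgments (assumed for illustration).
# pairwise[i][j] says how much more important criterion i is than criterion j.
criteria = ["functionality", "reliability", "security"]
pairwise = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 2.0],
    [1 / 5, 1 / 2, 1.0],
]
weights = ahp_priorities(pairwise)

# Hypothetical per-criterion scores for two candidate components,
# listed in the same order as `criteria`.
candidates = {
    "ComponentA": [0.6, 0.3, 0.5],
    "ComponentB": [0.4, 0.7, 0.5],
}

# Overall score of a candidate is the weighted sum of its criterion scores.
totals = {
    name: sum(w * s for w, s in zip(weights, scores))
    for name, scores in candidates.items()
}
best = max(totals, key=totals.get)

for name, total in totals.items():
    print(f"{name}: {total:.3f}")
print("Preferred candidate:", best)
```

Because functionality is judged more important than the other two criteria in this made-up matrix, the candidate that scores higher on functionality comes out ahead even though it scores lower on reliability.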