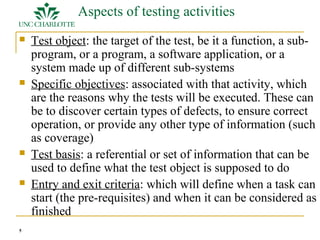

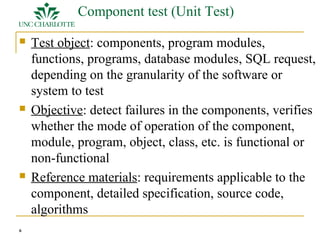

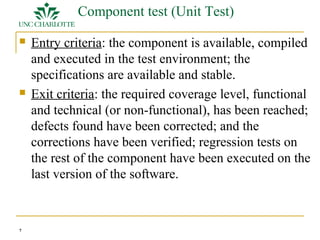

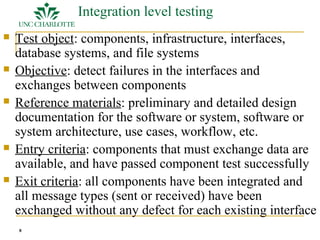

This document discusses the different levels of software testing: component tests, integration tests, system tests, and acceptance tests. For each level, it describes the test object, the objectives, the reference materials (test basis), and the entry and exit criteria. Component tests focus on individual modules in isolation, integration tests check the interfaces and interactions between components, system tests evaluate the fully integrated system as a whole, and acceptance tests determine whether the software meets user requirements.