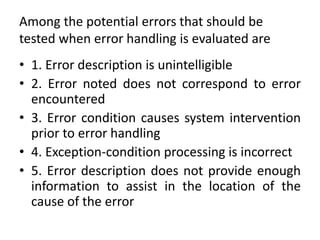

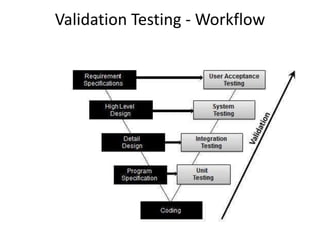

Unit testing exercises individual software modules or components in isolation. It verifies that information flows correctly into and out of each module and that the module's data retains its integrity. Common errors at this level include incorrect arithmetic (for example, misapplied operator precedence), operations on mixed data types, incorrect initialization, and loss of precision. Test cases should also target errors in logic and in comparisons. Validation testing checks that the software meets its requirements, typically by involving customers in the testing. System testing integrates the software with the other elements of the system and checks recovery, security, performance under stress, and the response to abnormal situations.
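The unit-level error classes listed above can be made concrete with a small test suite. The following is only a minimal sketch: the functions `average` and `in_range` are hypothetical modules invented for illustration, not taken from the slides, and the tests use Python's standard `unittest` module.

```python
import math
import unittest


def average(values):
    """Hypothetical module under test: arithmetic mean of a non-empty list."""
    if not values:
        raise ValueError("values must not be empty")
    total = 0.0  # explicit initialization; a wrong start value is a classic unit-level bug
    for v in values:
        total += v
    return total / len(values)


def in_range(x, low, high):
    """Hypothetical module under test: True if low <= x <= high (inclusive)."""
    return low <= x <= high


class UnitLevelTests(unittest.TestCase):
    def test_interface_returns_float(self):
        # Information flowing out of the module has the expected type.
        self.assertIsInstance(average([1, 2, 3]), float)

    def test_input_data_keeps_integrity(self):
        # The module must not modify the caller's data.
        data = [3, 1, 2]
        average(data)
        self.assertEqual(data, [3, 1, 2])

    def test_mixed_data_types(self):
        # Integers and floats in the same input.
        self.assertEqual(average([1, 2.5]), 1.75)

    def test_precision(self):
        # Binary floating point cannot represent 0.1 or 0.2 exactly,
        # so compare with a tolerance instead of ==.
        self.assertTrue(math.isclose(average([0.1, 0.2]), 0.15))

    def test_abnormal_input_is_rejected(self):
        with self.assertRaises(ValueError):
            average([])

    def test_comparison_boundaries(self):
        # Off-by-one comparison errors (< instead of <=) show up at the edges.
        self.assertTrue(in_range(1, 1, 10))
        self.assertTrue(in_range(10, 1, 10))
        self.assertFalse(in_range(0, 1, 10))
        self.assertFalse(in_range(11, 1, 10))
```

Saved to a file, the suite can be run with `python -m unittest <file>`. Each test targets one of the error classes named in the paragraph, so a failure points directly at the kind of defect involved.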