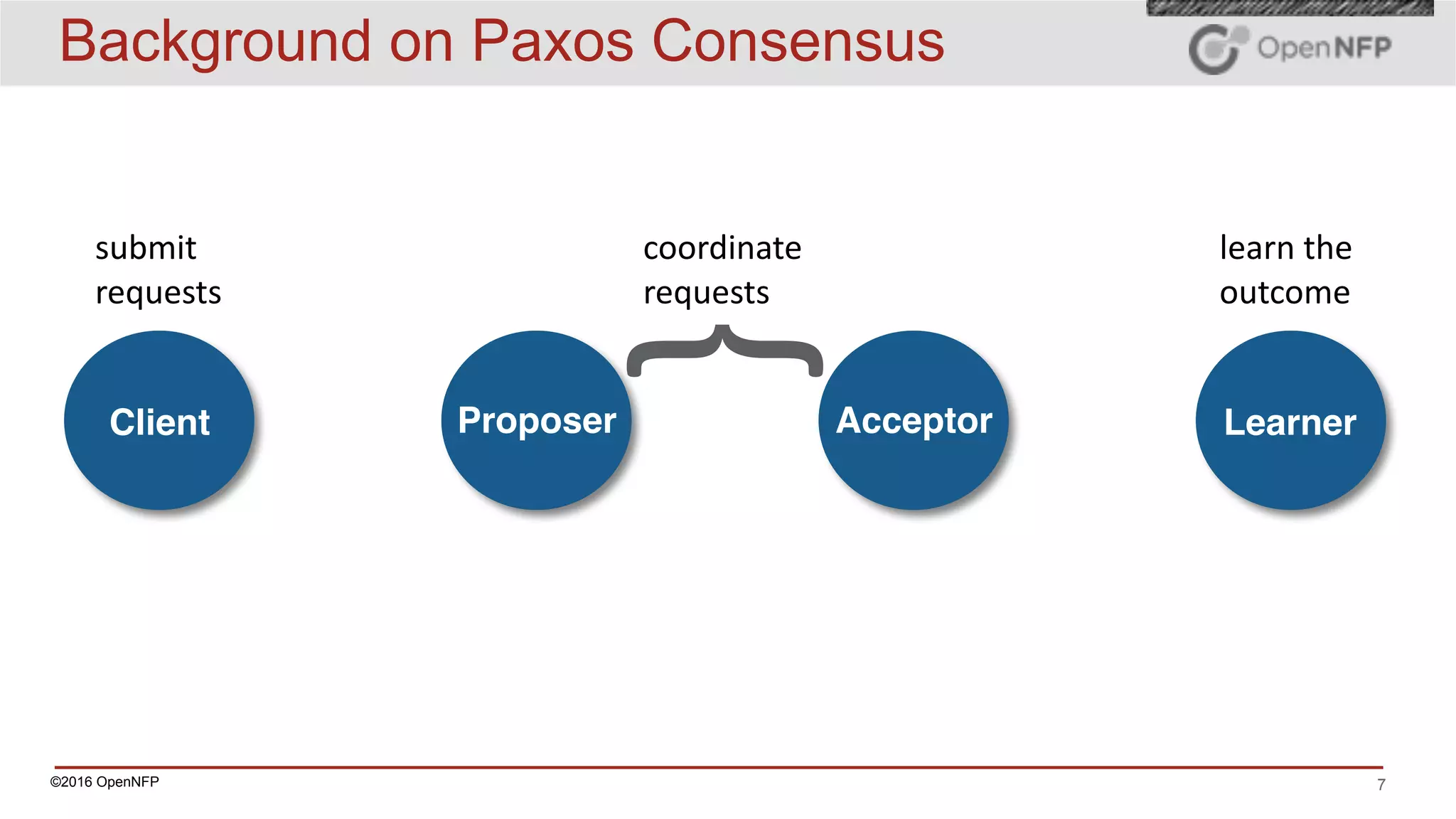

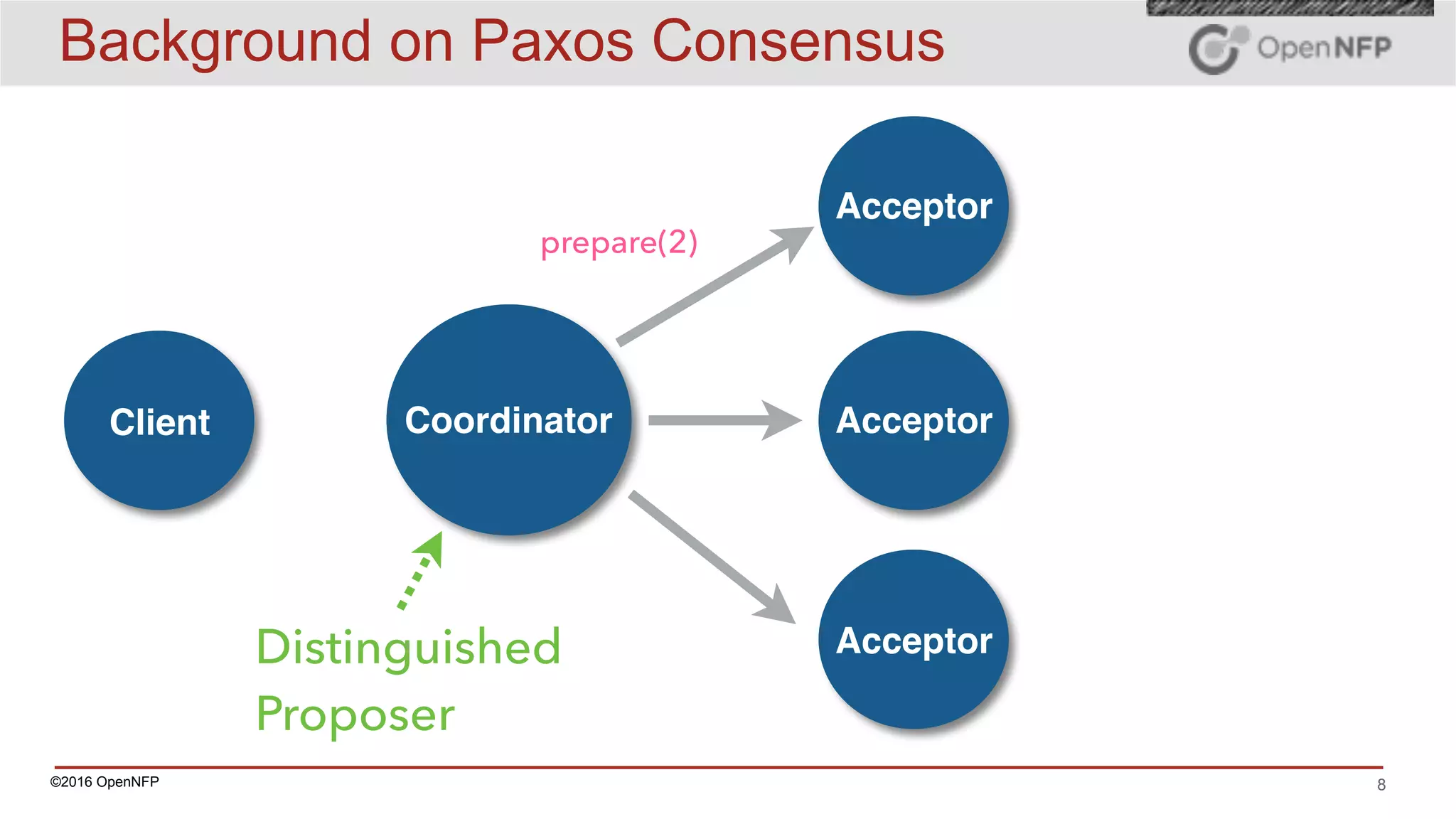

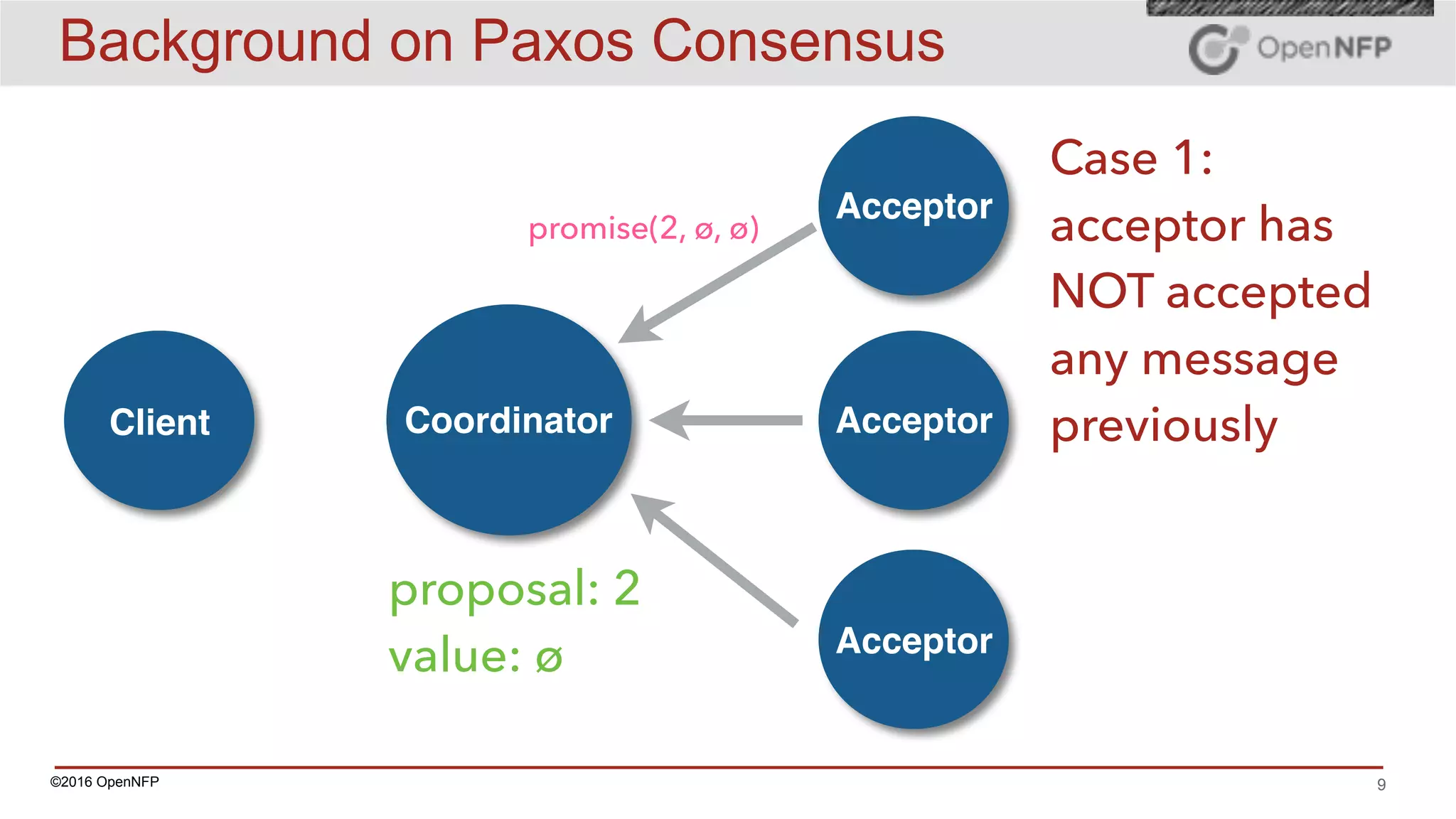

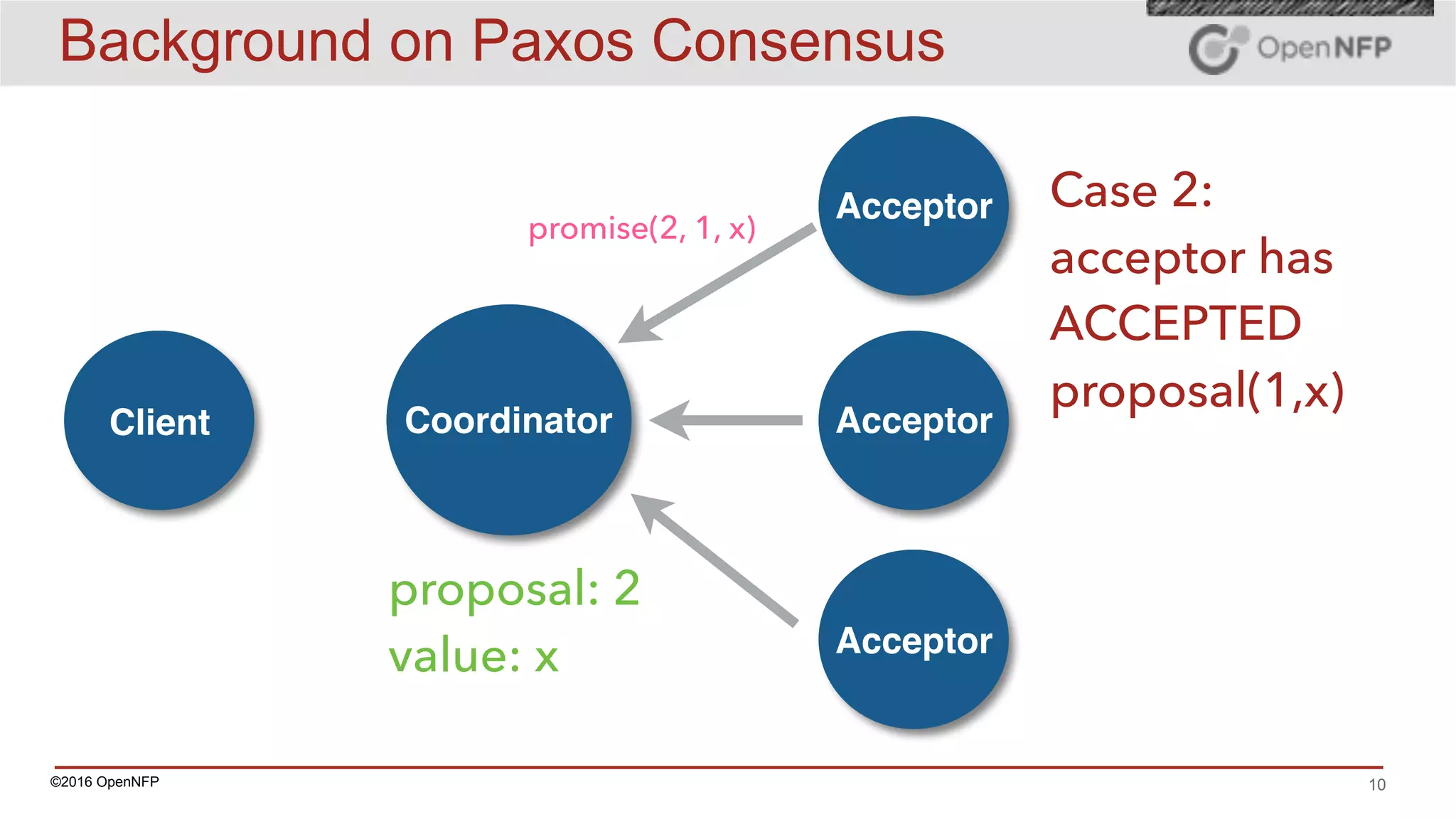

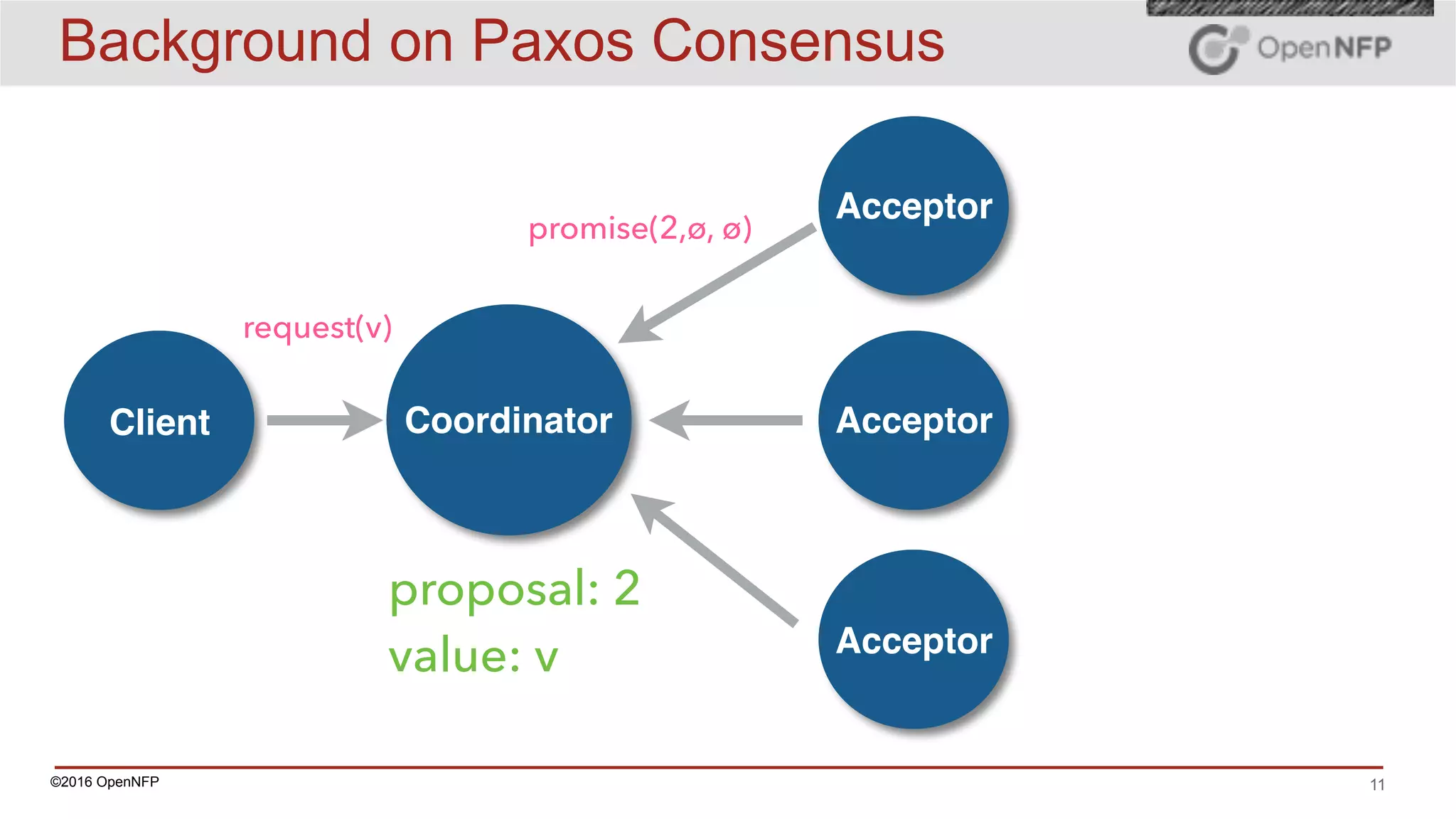

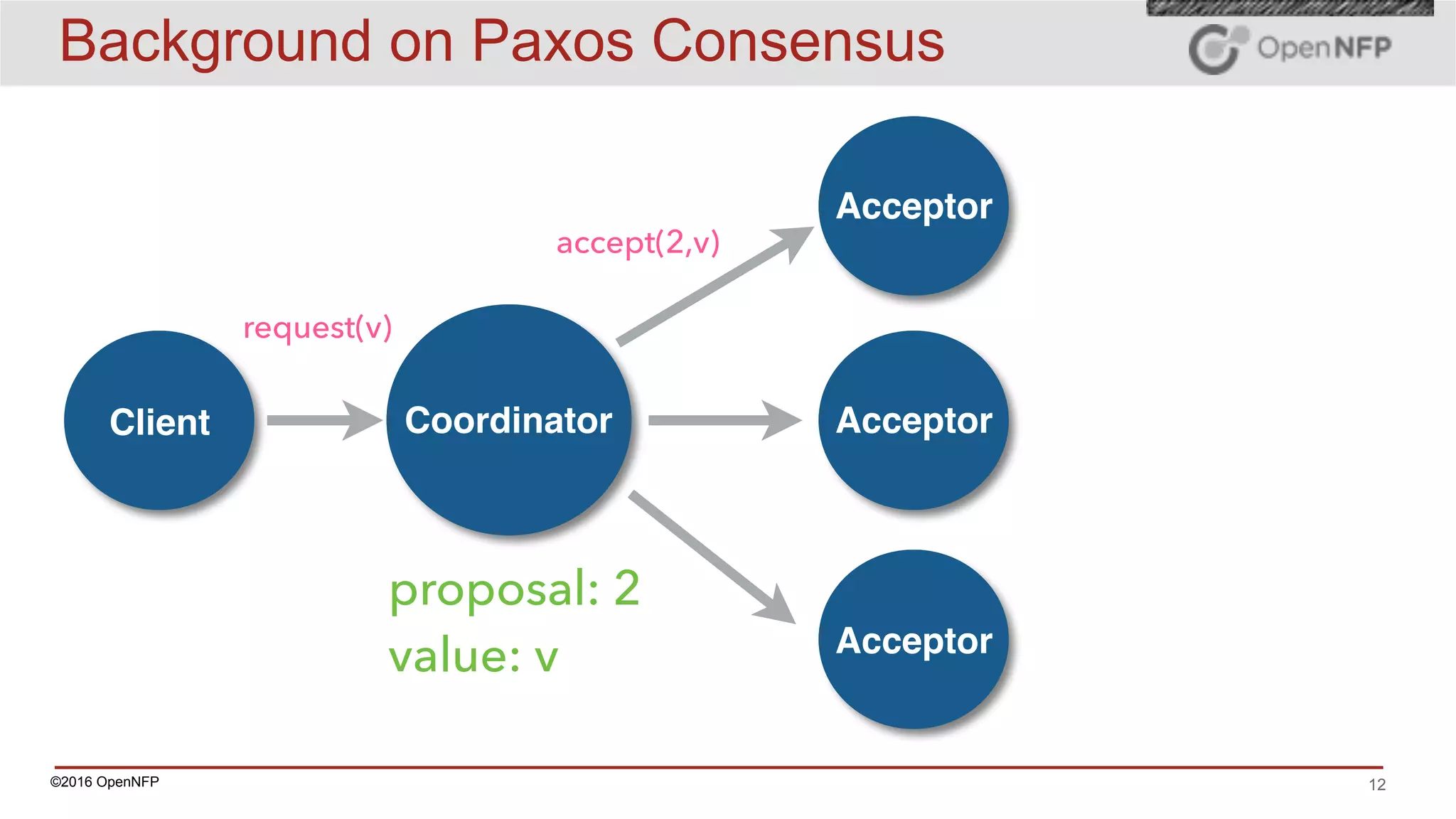

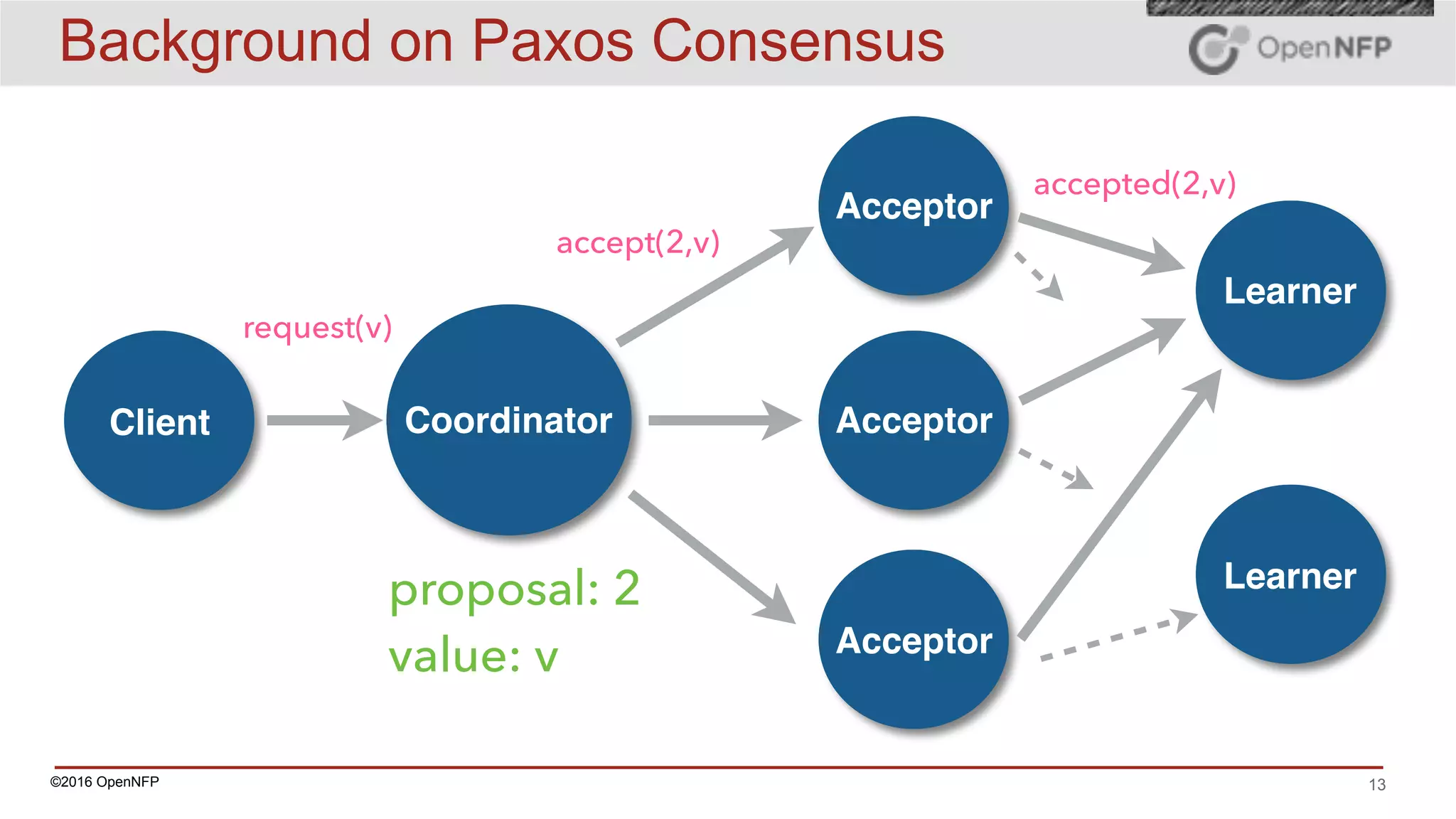

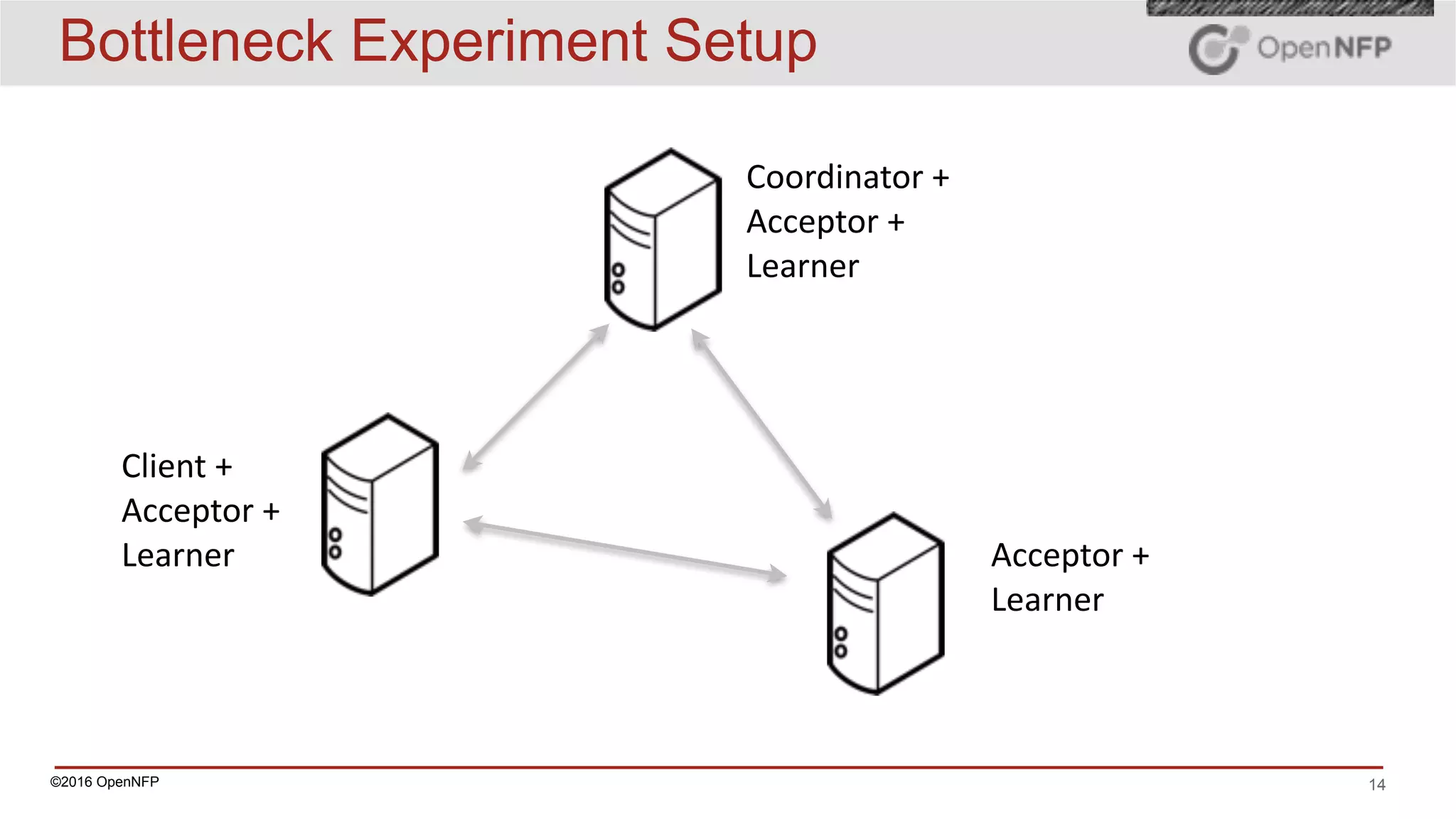

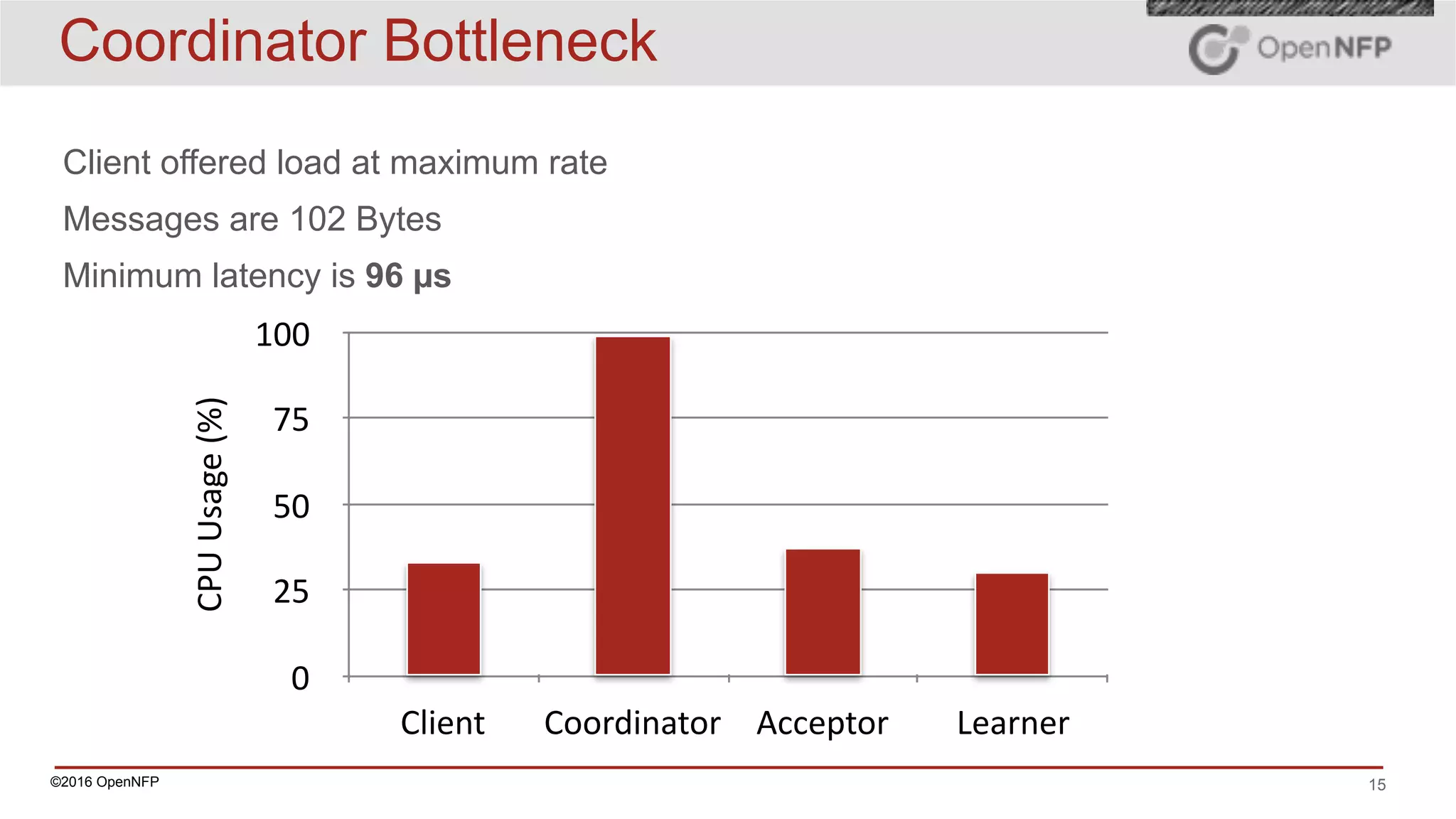

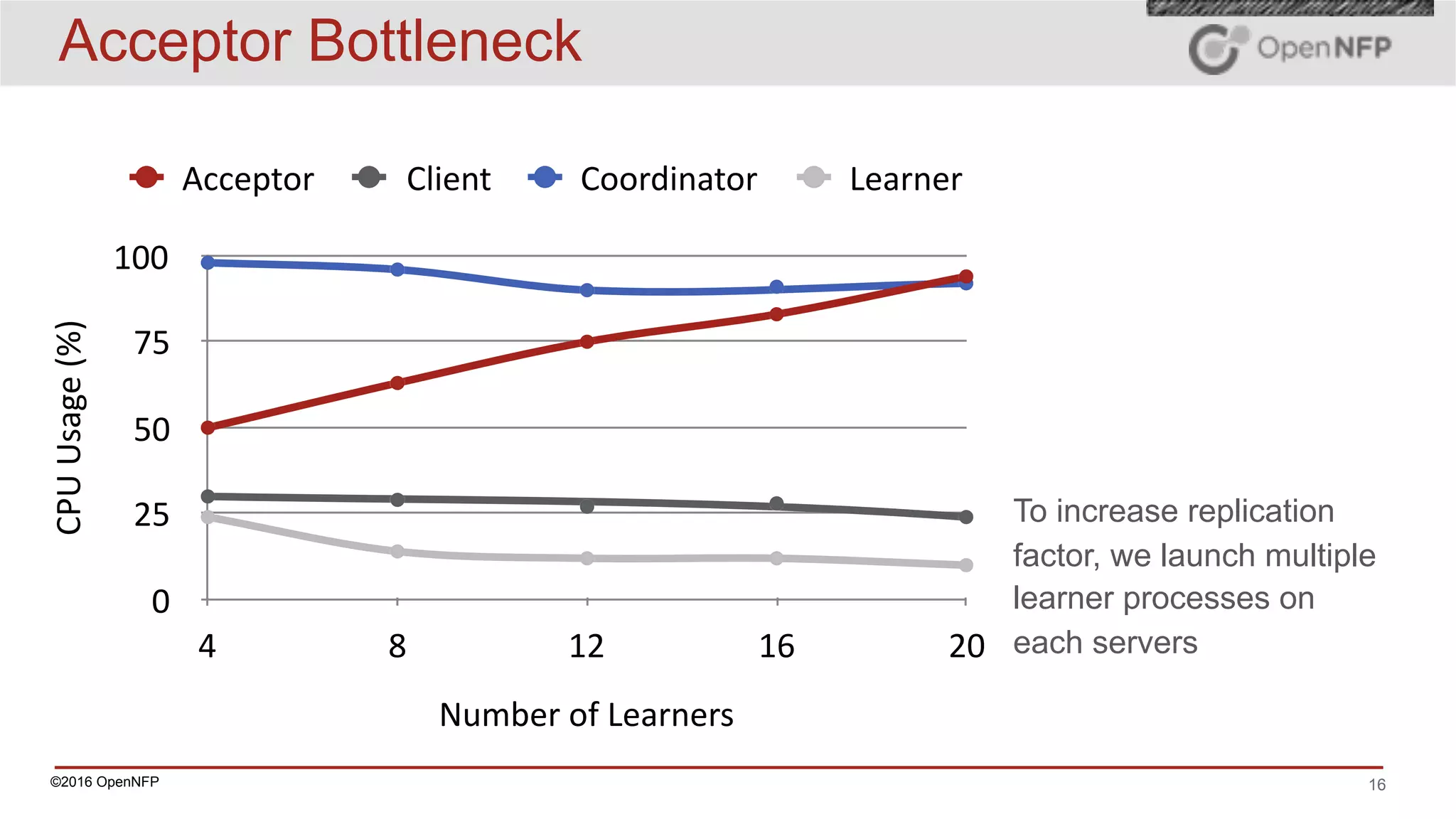

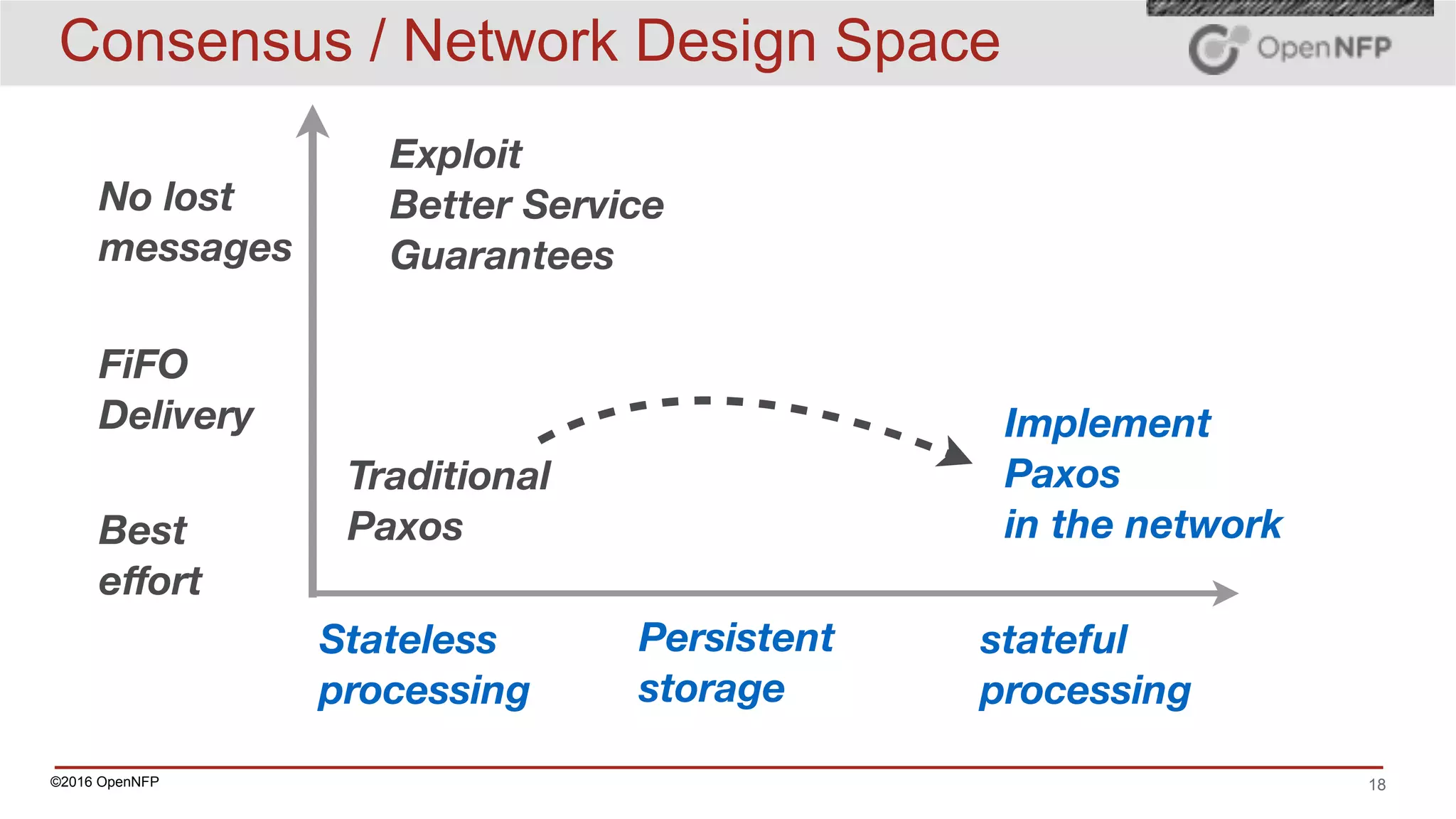

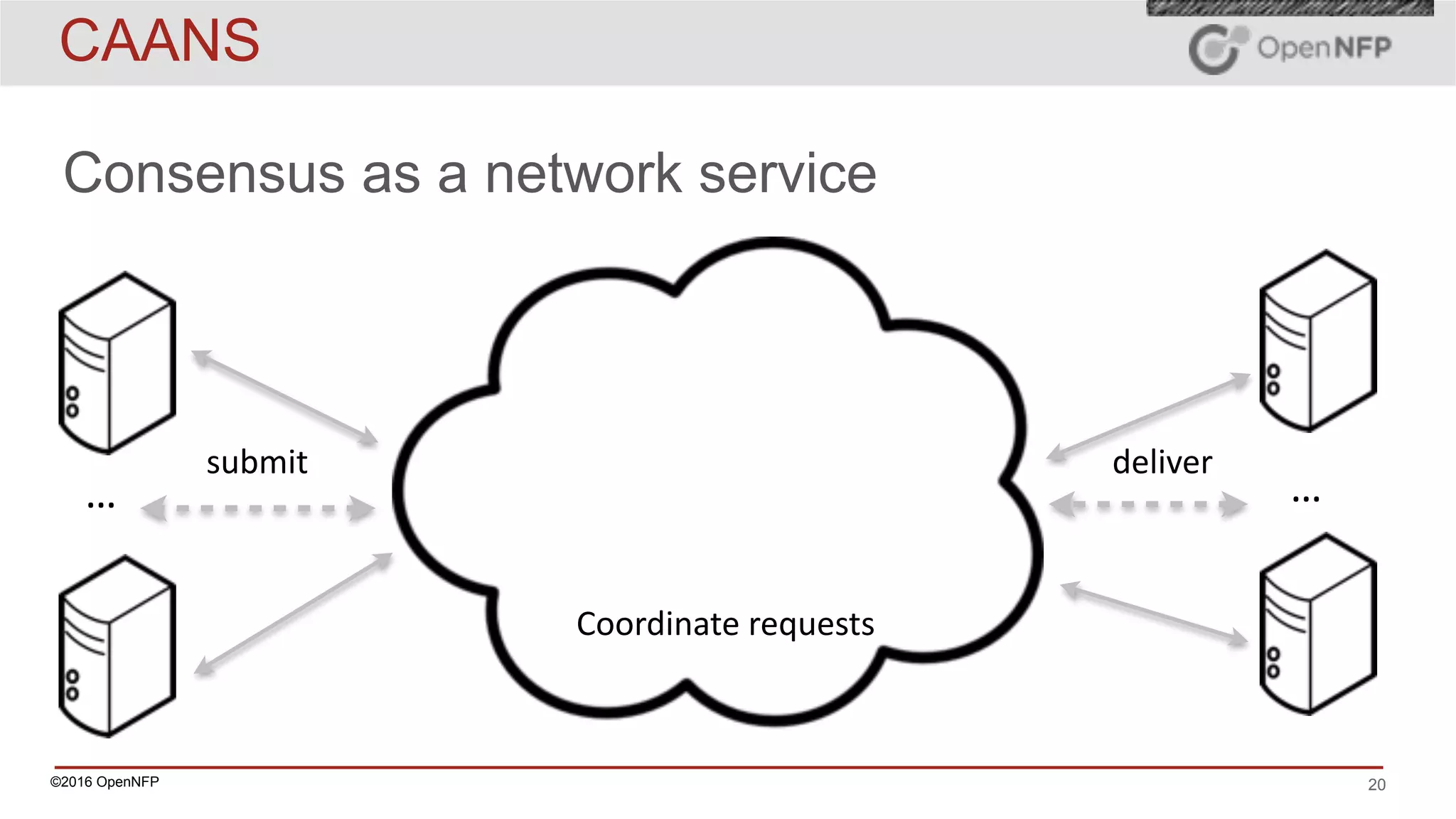

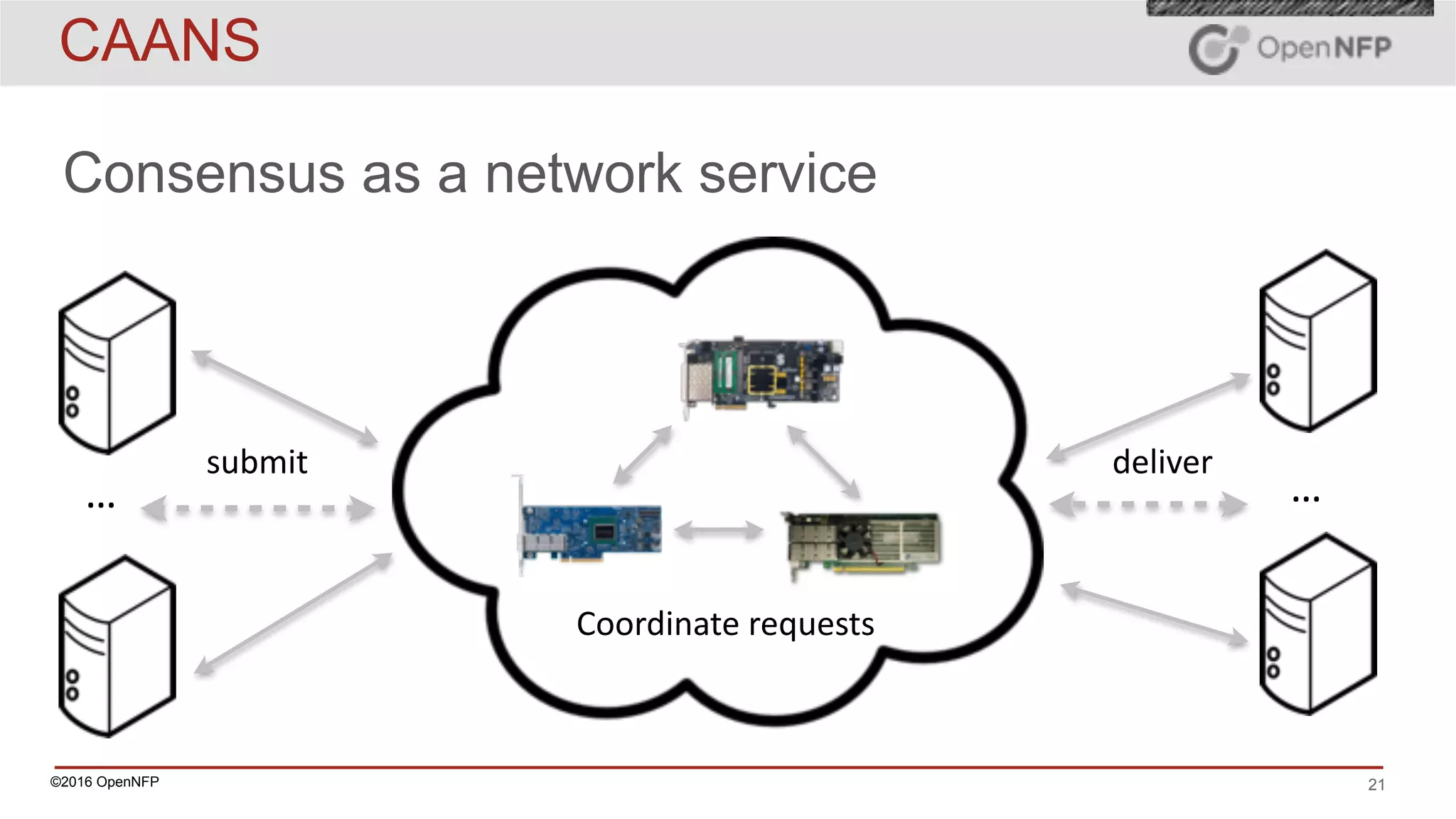

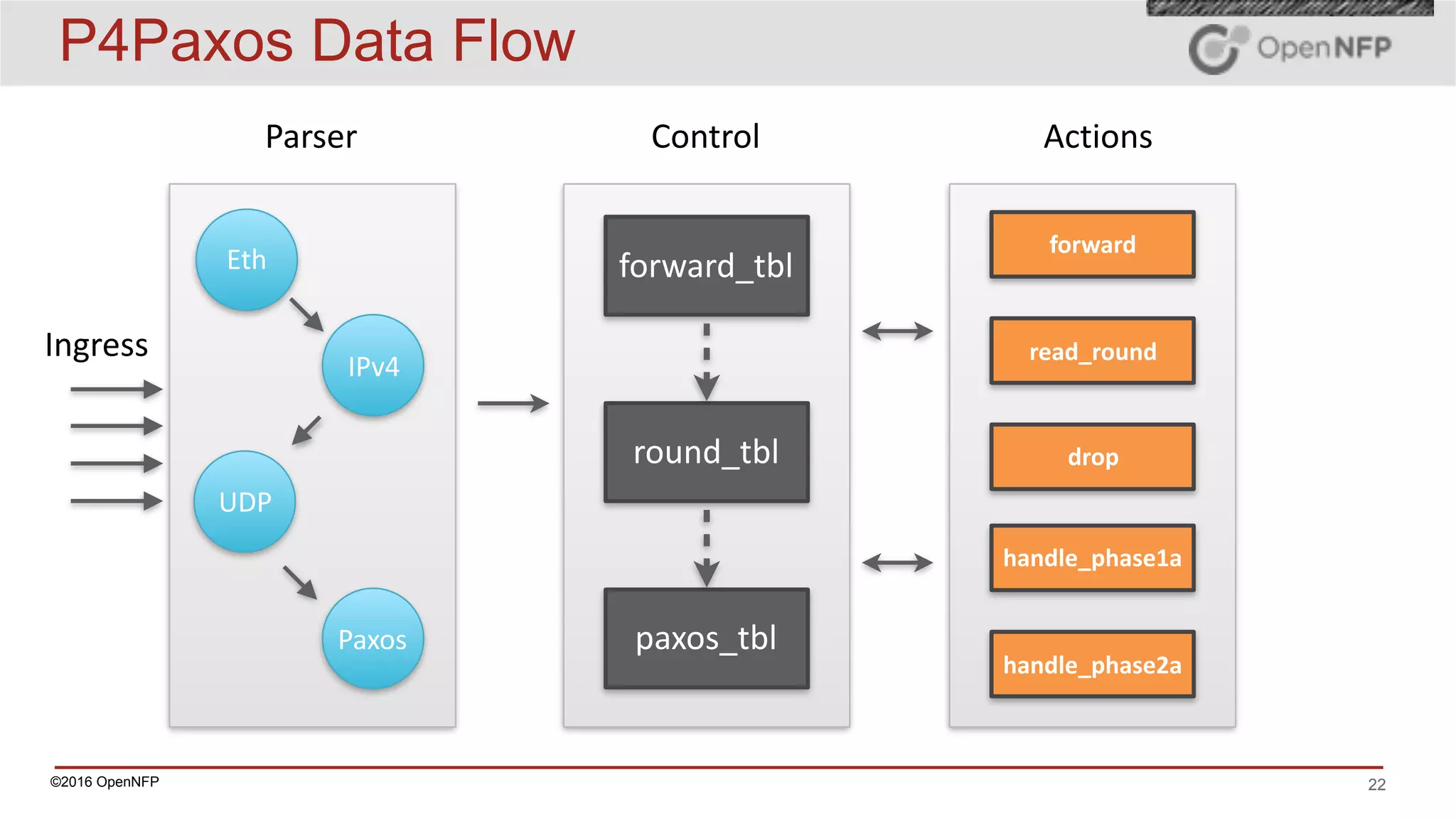

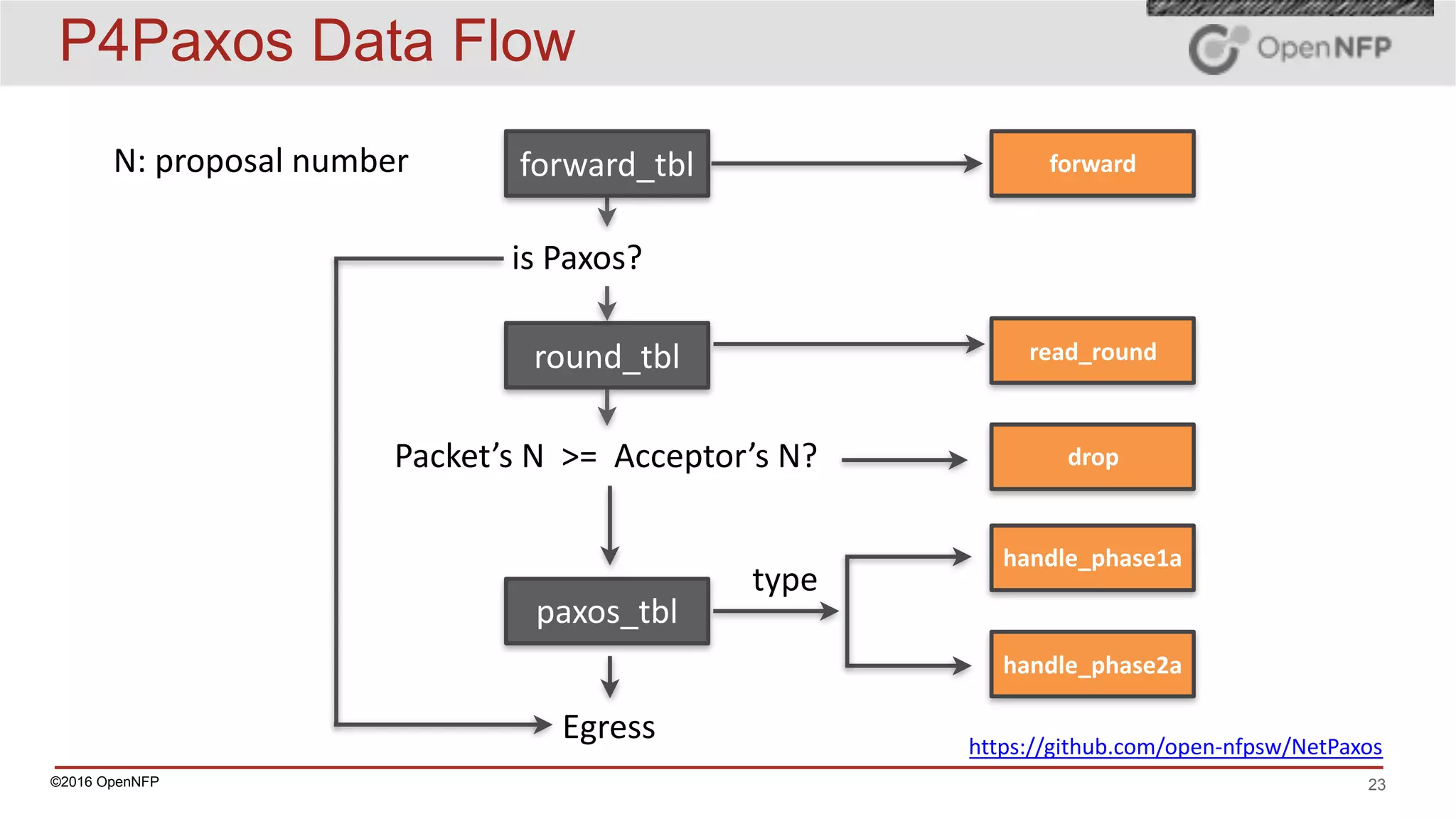

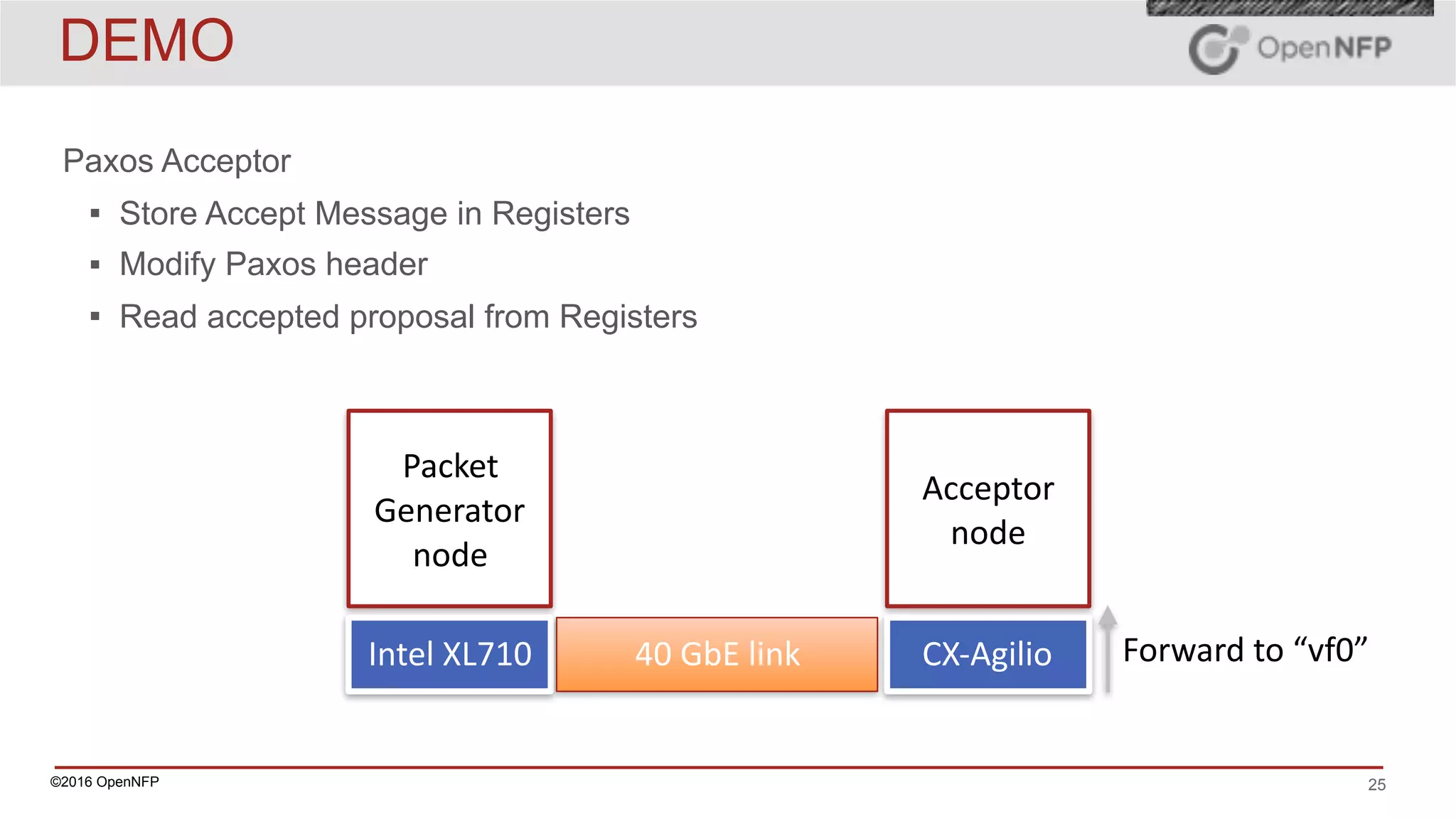

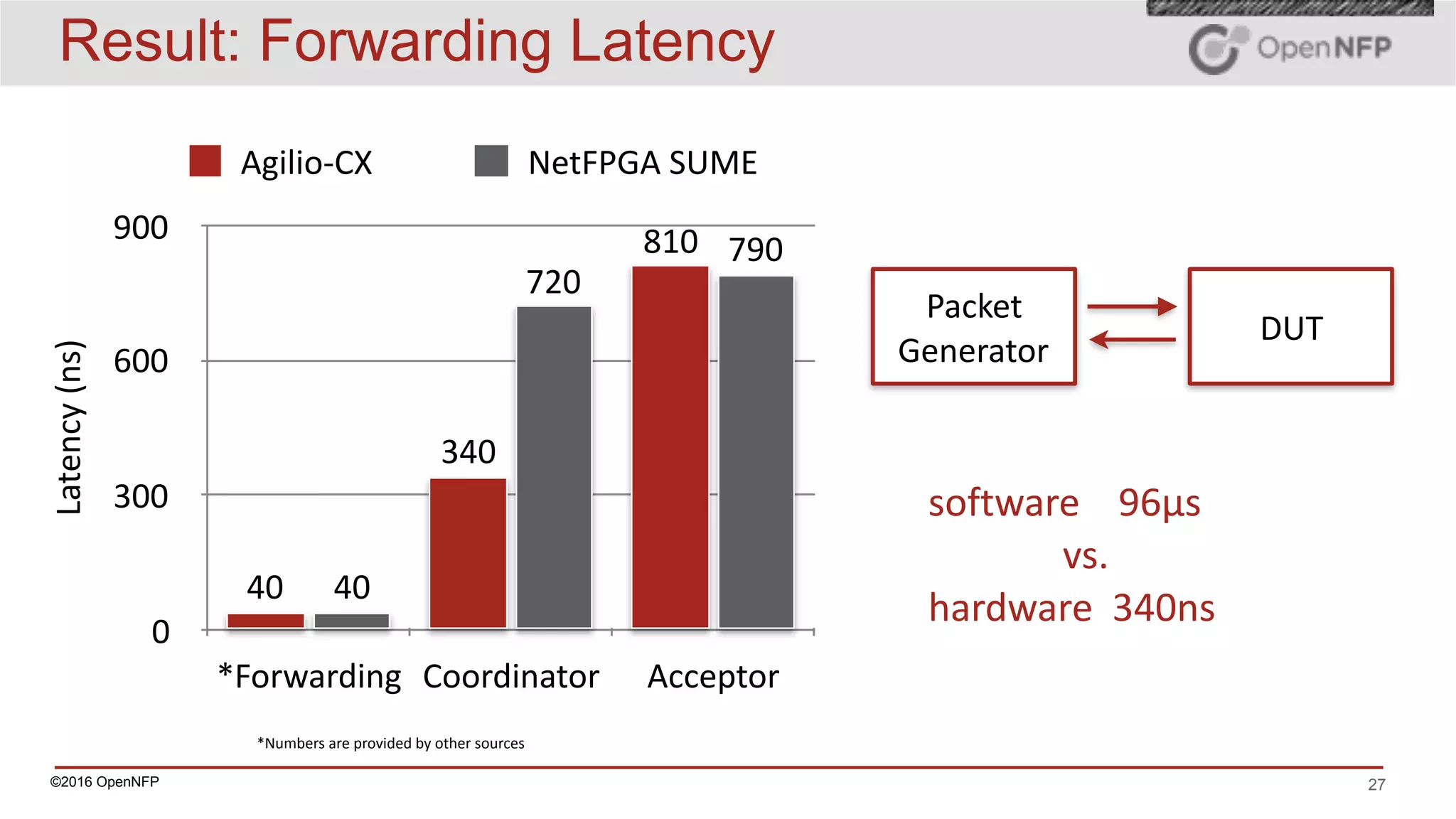

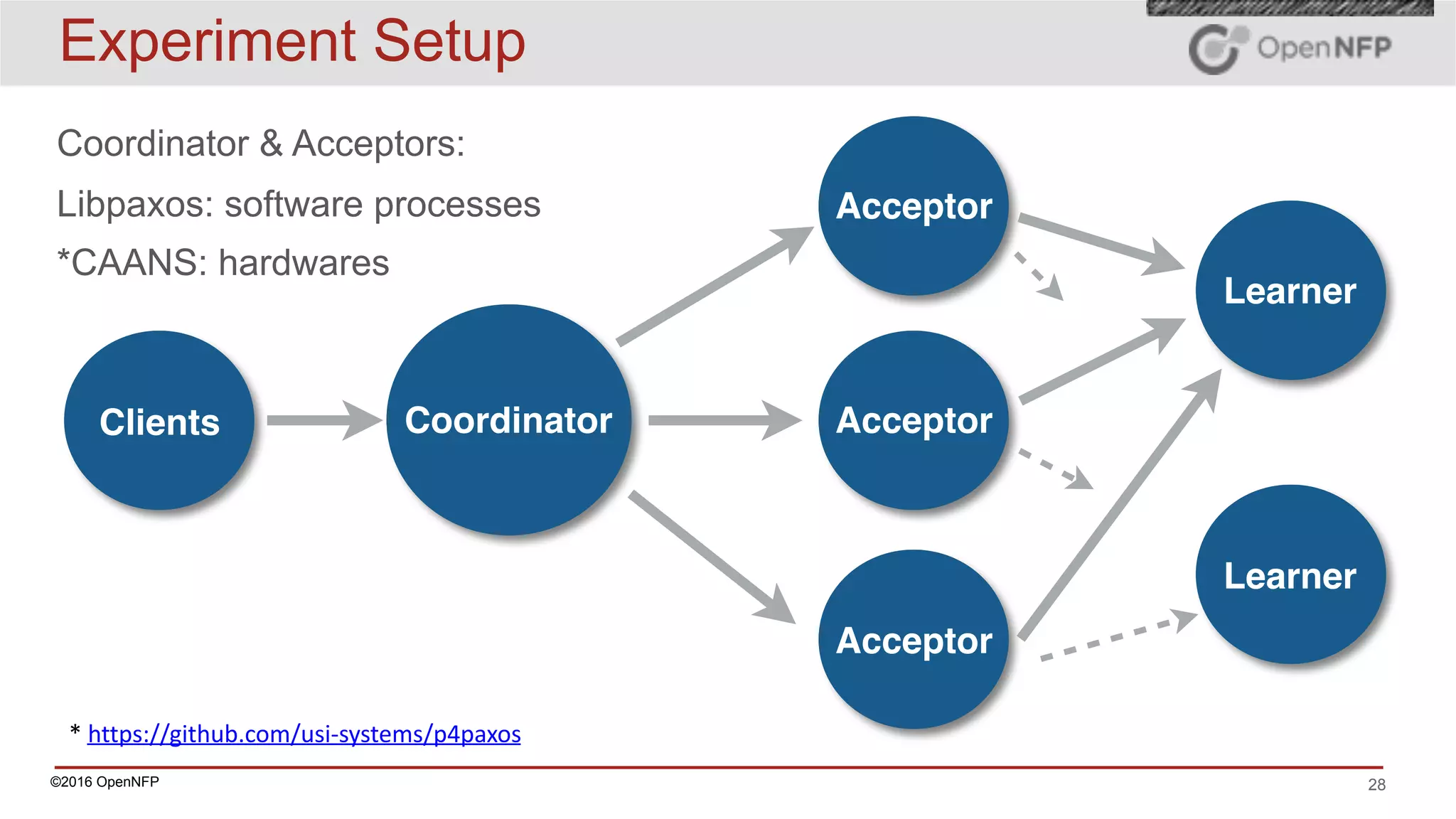

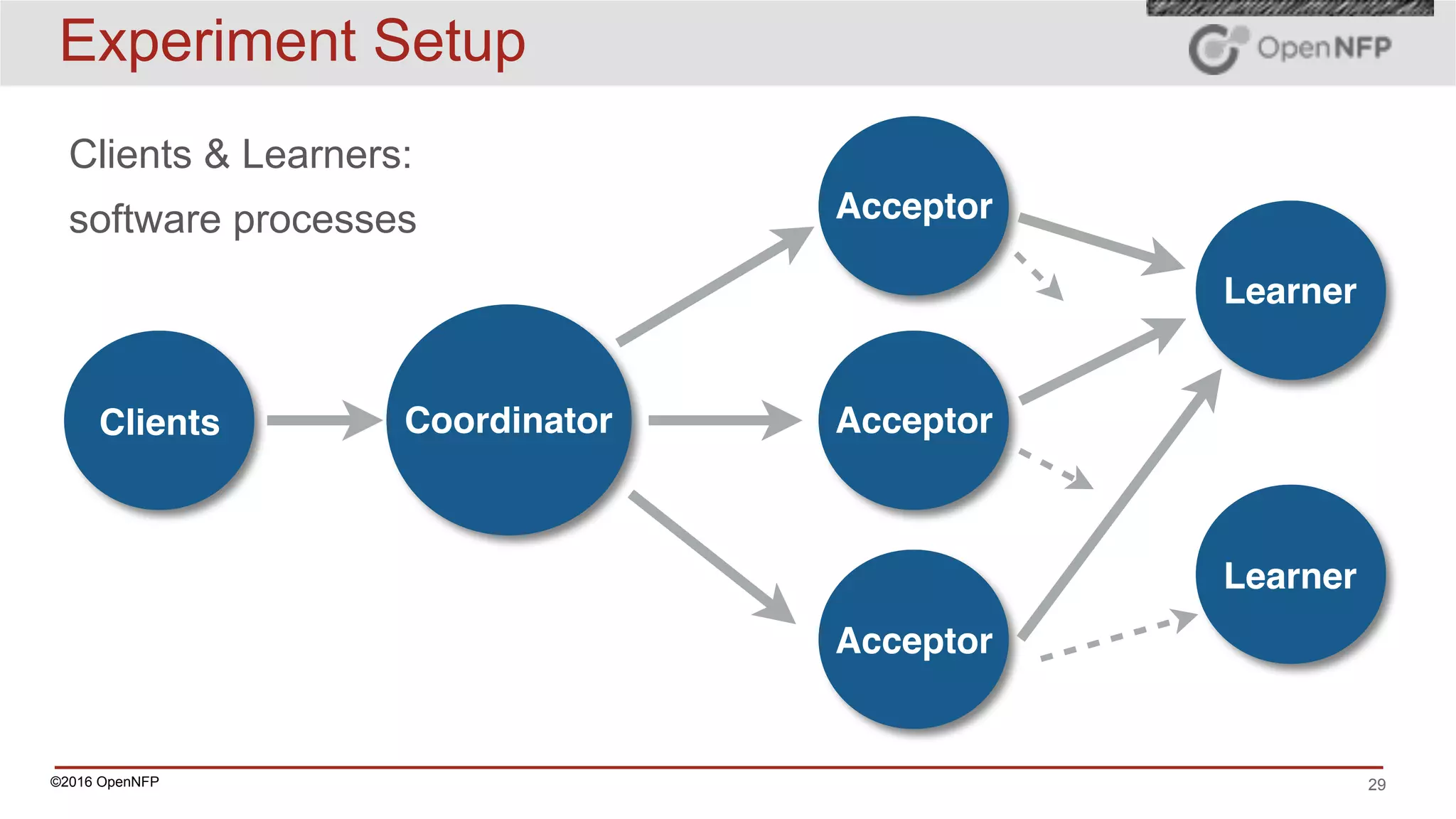

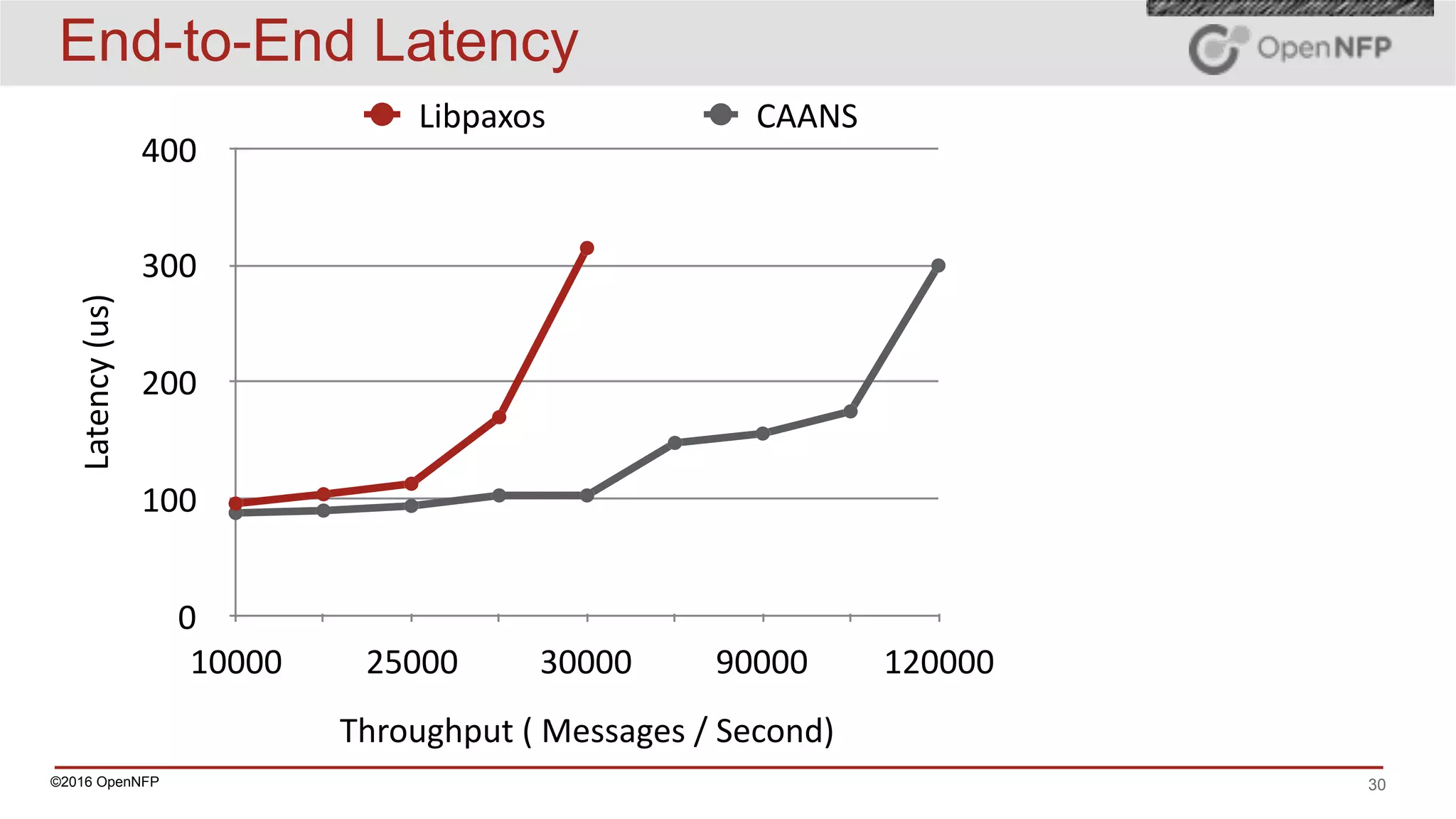

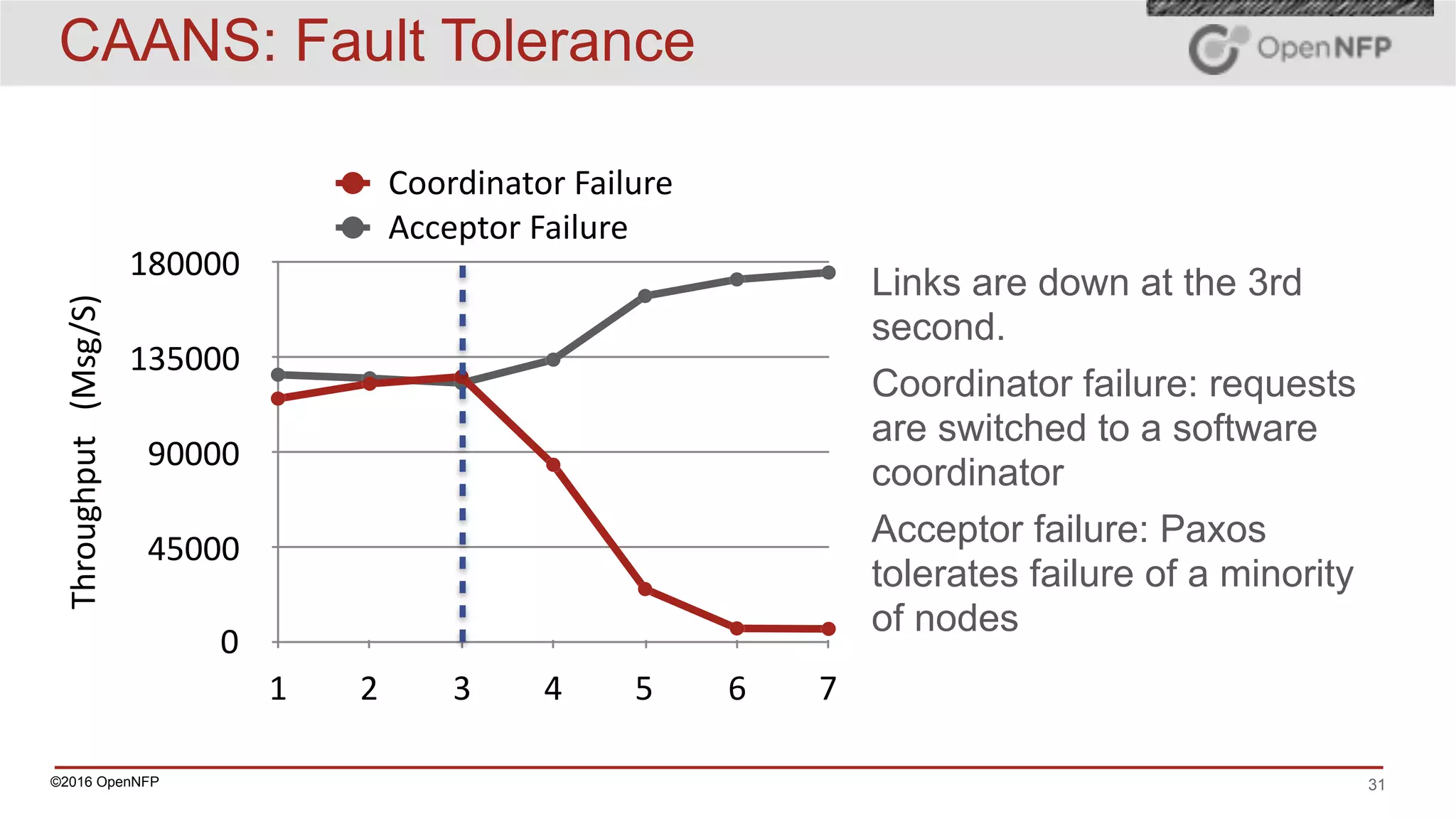

The document presents CAANS (Consensus as a Network Service), a framework that runs consensus protocols such as Paxos inside network devices to improve the performance and fault tolerance of distributed systems. It explains why consensus matters in distributed computing, describes the performance benefits of implementing it in hardware, and outlines future work on improving learner performance and achieving broader deployment. Experimental results show lower latency and higher throughput with CAANS than with traditional software implementations.