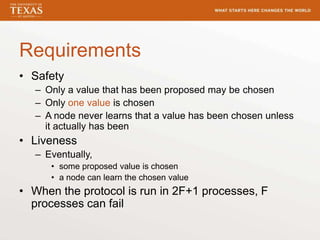

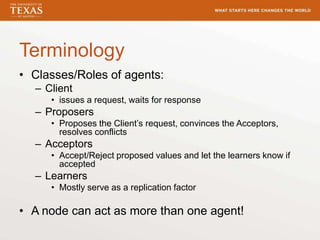

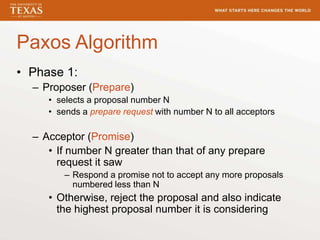

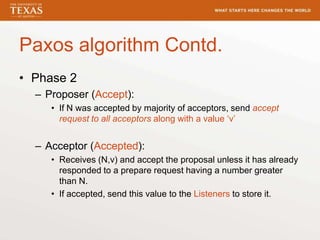

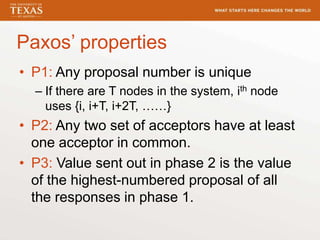

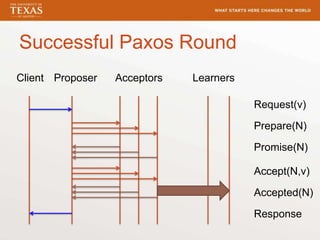

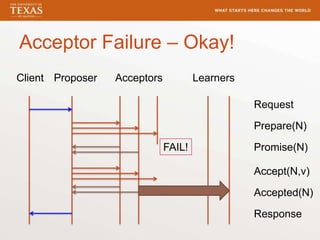

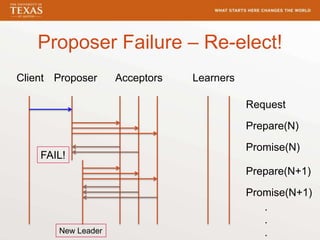

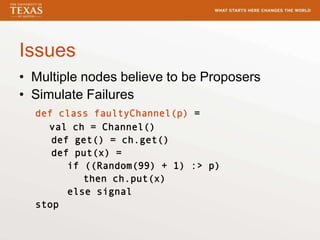

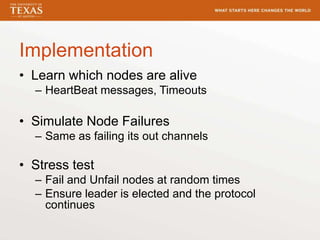

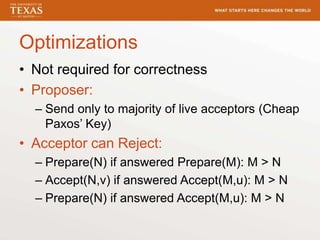

This document summarizes an implementation of the classic Paxos consensus algorithm in Orc. It describes the key aspects of Paxos, including phases 1 and 2, properties such as the uniqueness of proposal numbers, and how consensus is reached even when proposers or acceptors fail. It also discusses optimizations that improve efficiency but are not required for correctness.
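
To make the two phases concrete, here is a minimal single-decree Paxos sketch. It is written in Python rather than Orc and is not taken from the implementation summarized here. The names `Acceptor`, `propose`, and `Ballot` are hypothetical, message passing is replaced by direct method calls, and a failed acceptor is modeled by an `alive` flag. Proposal numbers are assumed to be `(round, proposer_id)` pairs, which is one common way to keep them unique across proposers.

```python
from dataclasses import dataclass
from typing import Any, List, Optional, Tuple

# Assumption: a proposal number is (round, proposer_id), so two proposers
# can never produce the same number.
Ballot = Tuple[int, int]


@dataclass
class Acceptor:
    promised: Optional[Ballot] = None              # highest ballot promised
    accepted: Optional[Tuple[Ballot, Any]] = None  # last (ballot, value) accepted
    alive: bool = True                             # False models a crashed acceptor

    def prepare(self, ballot: Ballot):
        """Phase 1: promise to ignore lower ballots and report any accepted value."""
        if not self.alive:
            return None
        if self.promised is None or ballot > self.promised:
            self.promised = ballot
            return ("promise", self.accepted)
        return None  # a higher or equal ballot was already promised

    def accept(self, ballot: Ballot, value: Any) -> bool:
        """Phase 2: accept the value unless a higher ballot has been promised."""
        if not self.alive:
            return False
        if self.promised is None or ballot >= self.promised:
            self.promised = ballot
            self.accepted = (ballot, value)
            return True
        return False


def propose(acceptors: List[Acceptor], ballot: Ballot, value: Any) -> Optional[Any]:
    """Run both phases once; return the chosen value, or None if no majority."""
    majority = len(acceptors) // 2 + 1

    # Phase 1: collect promises from a majority of acceptors.
    replies = [r for r in (a.prepare(ballot) for a in acceptors) if r is not None]
    if len(replies) < majority:
        return None

    # If any acceptor already accepted a value, adopt the one with the
    # highest ballot instead of the proposer's own value.
    prior = [acc for _, acc in replies if acc is not None]
    if prior:
        value = max(prior, key=lambda p: p[0])[1]

    # Phase 2: ask the acceptors to accept the (possibly adopted) value.
    acks = sum(a.accept(ballot, value) for a in acceptors)
    return value if acks >= majority else None


# Usage: three acceptors, one of which has failed.
acceptors = [Acceptor() for _ in range(3)]
acceptors[2].alive = False
first = propose(acceptors, (1, 1), "A")   # a majority of 2 still decides
second = propose(acceptors, (2, 2), "B")  # later proposer must adopt the chosen value
stale = propose(acceptors, (1, 3), "C")   # ballot below the promised one is rejected
```

In this sketch the second proposer ends up with the first proposer's value because phase 1 reveals that a majority already accepted it. That is how an already chosen value is preserved across competing proposers. Retries with a higher round, timeouts, and learners are left out.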