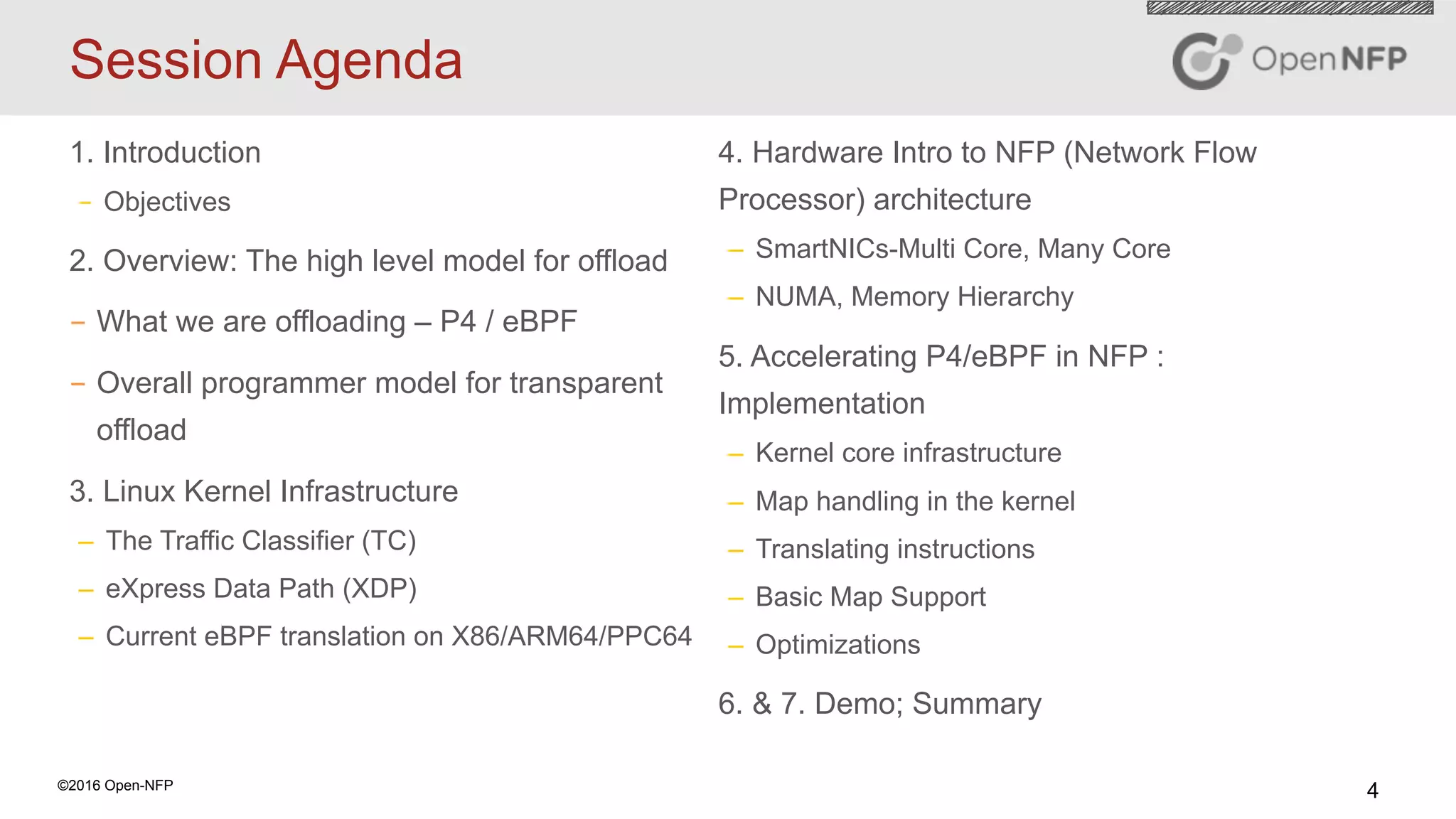

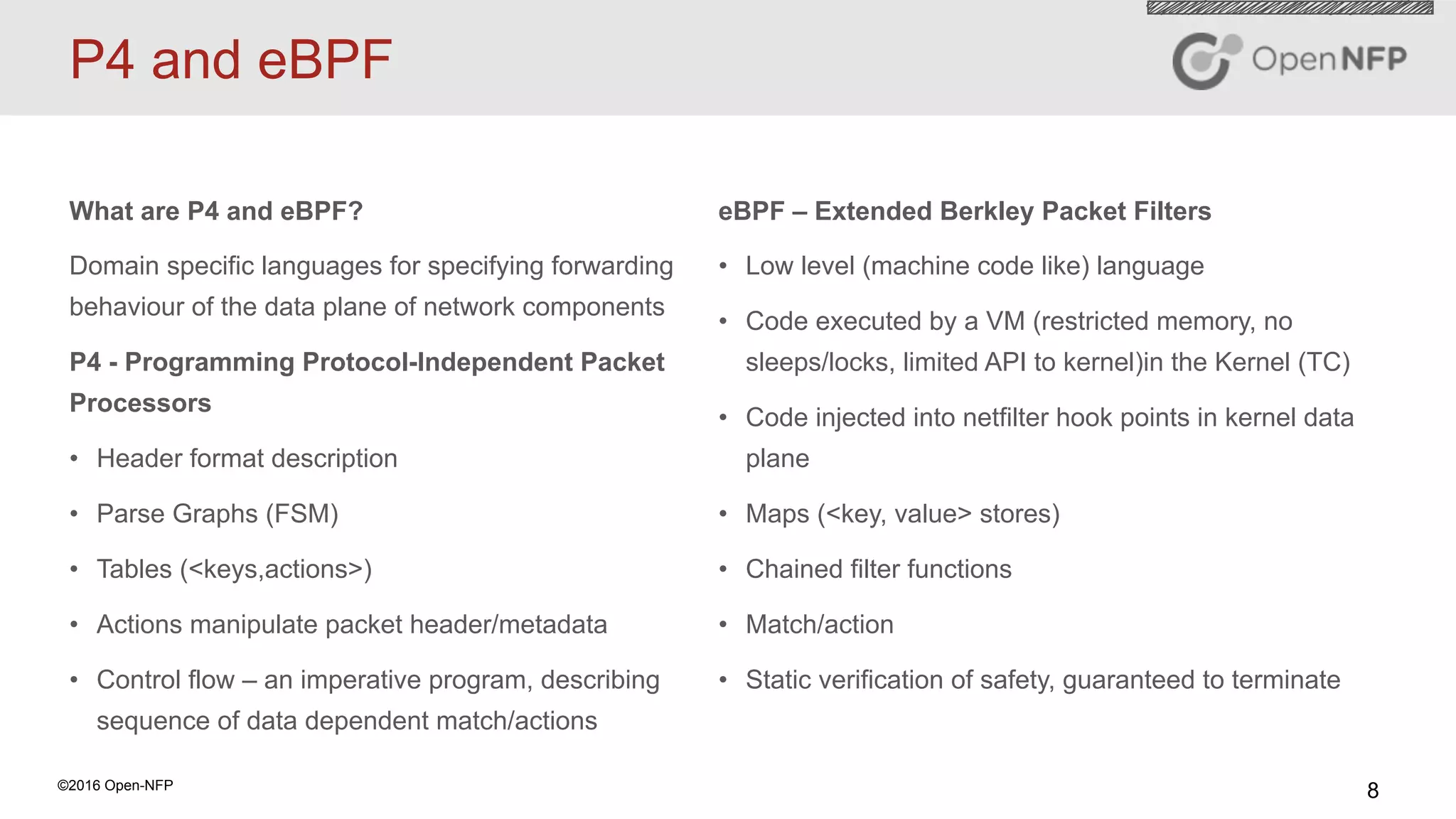

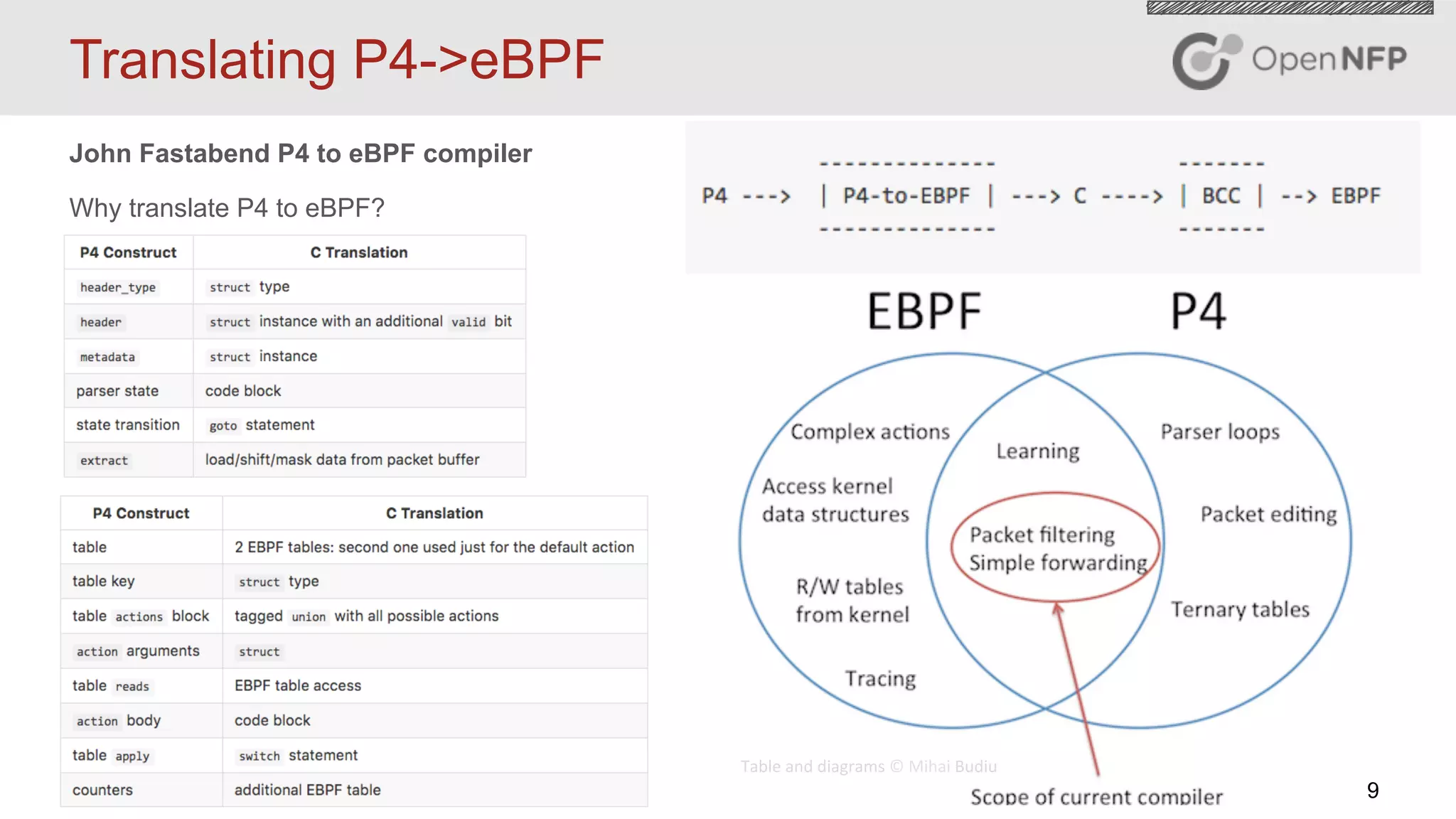

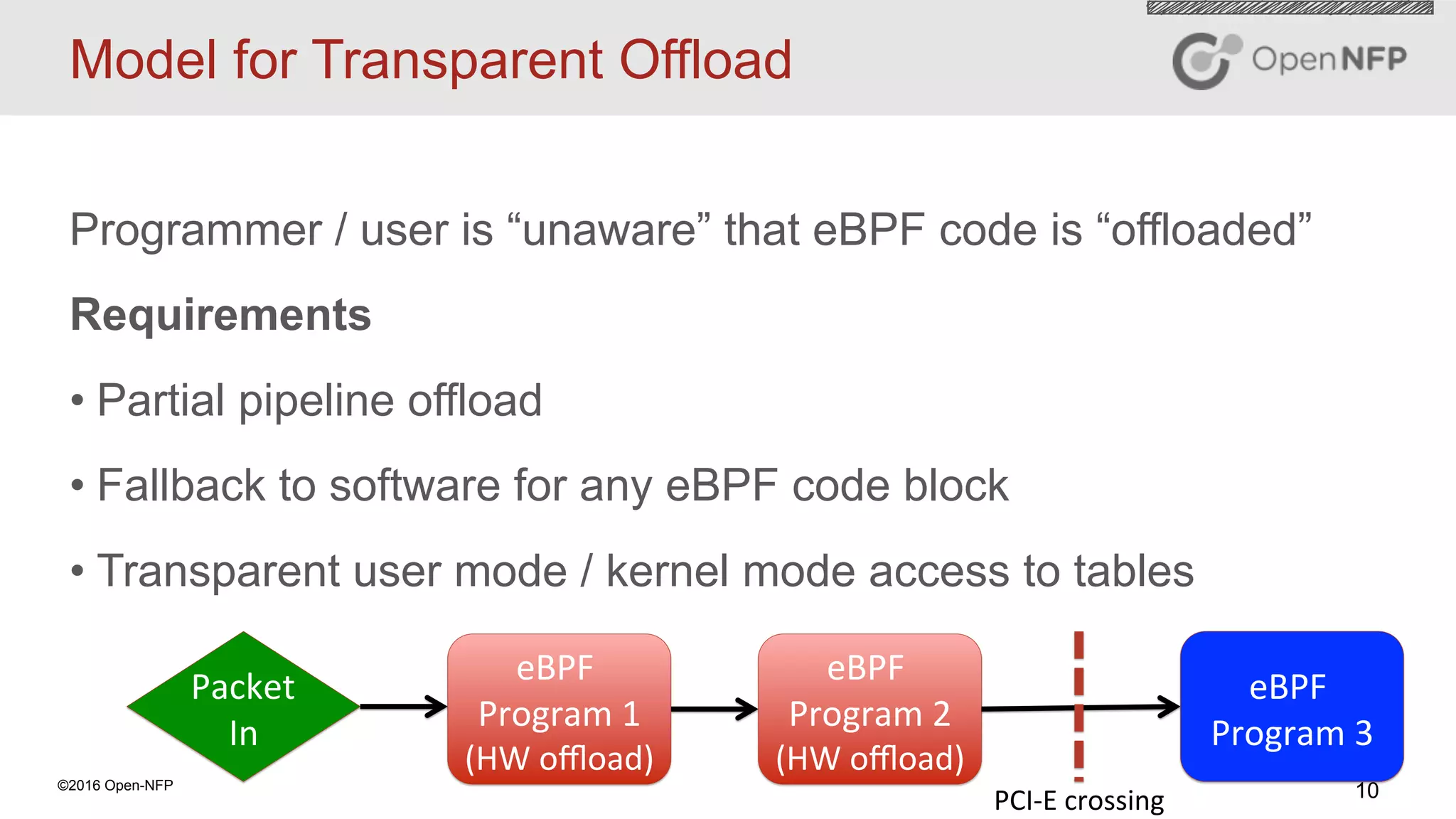

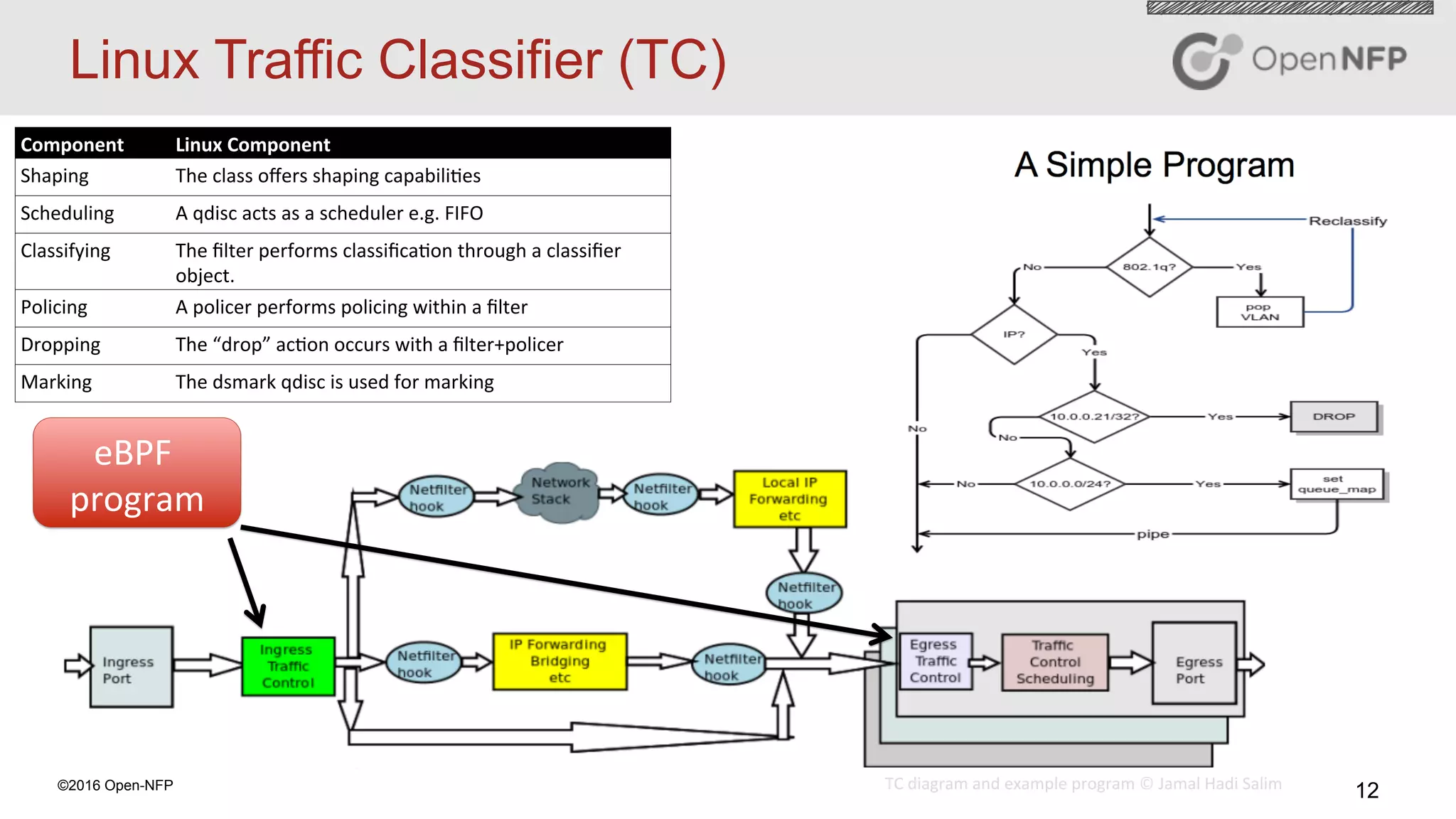

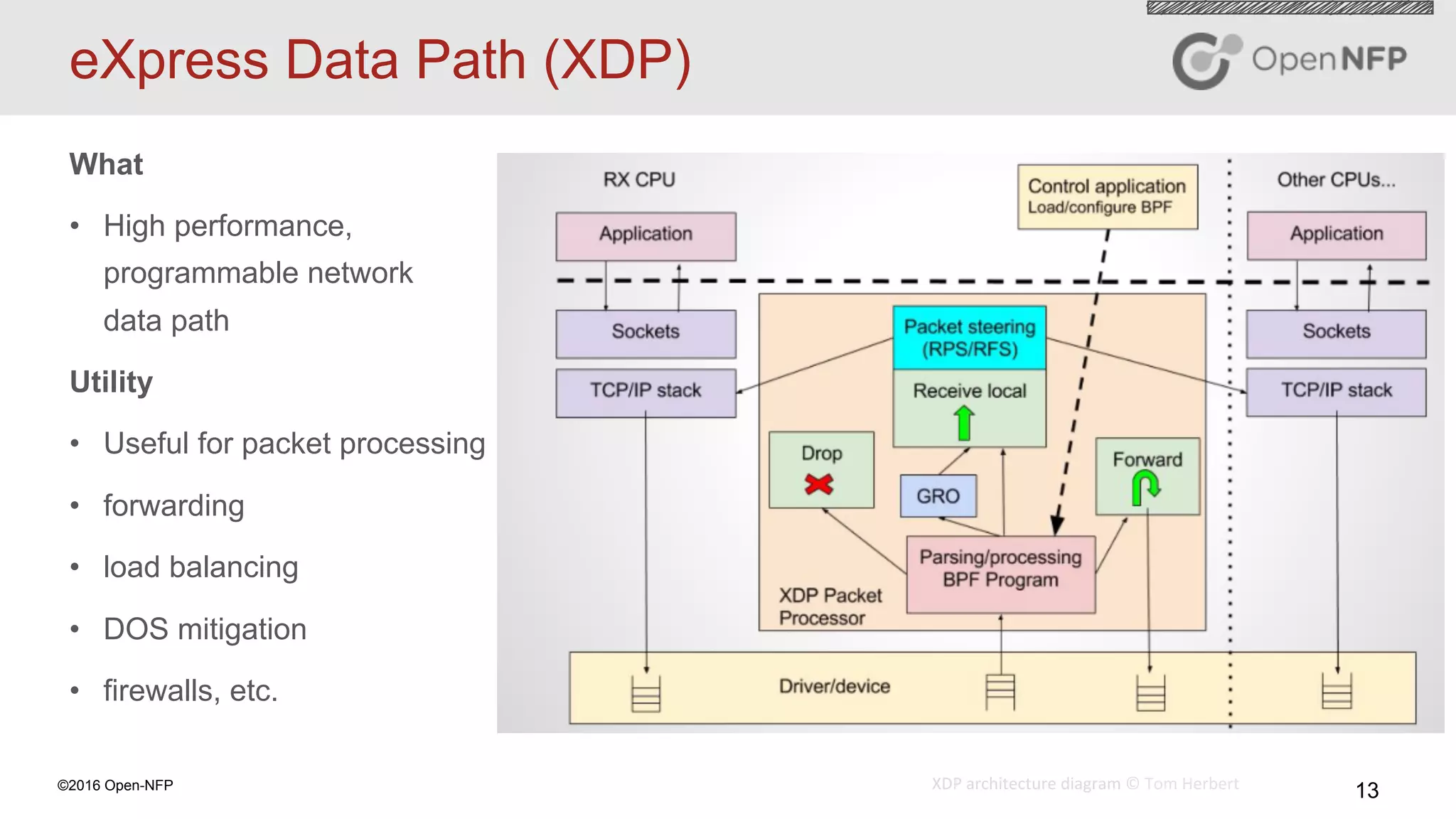

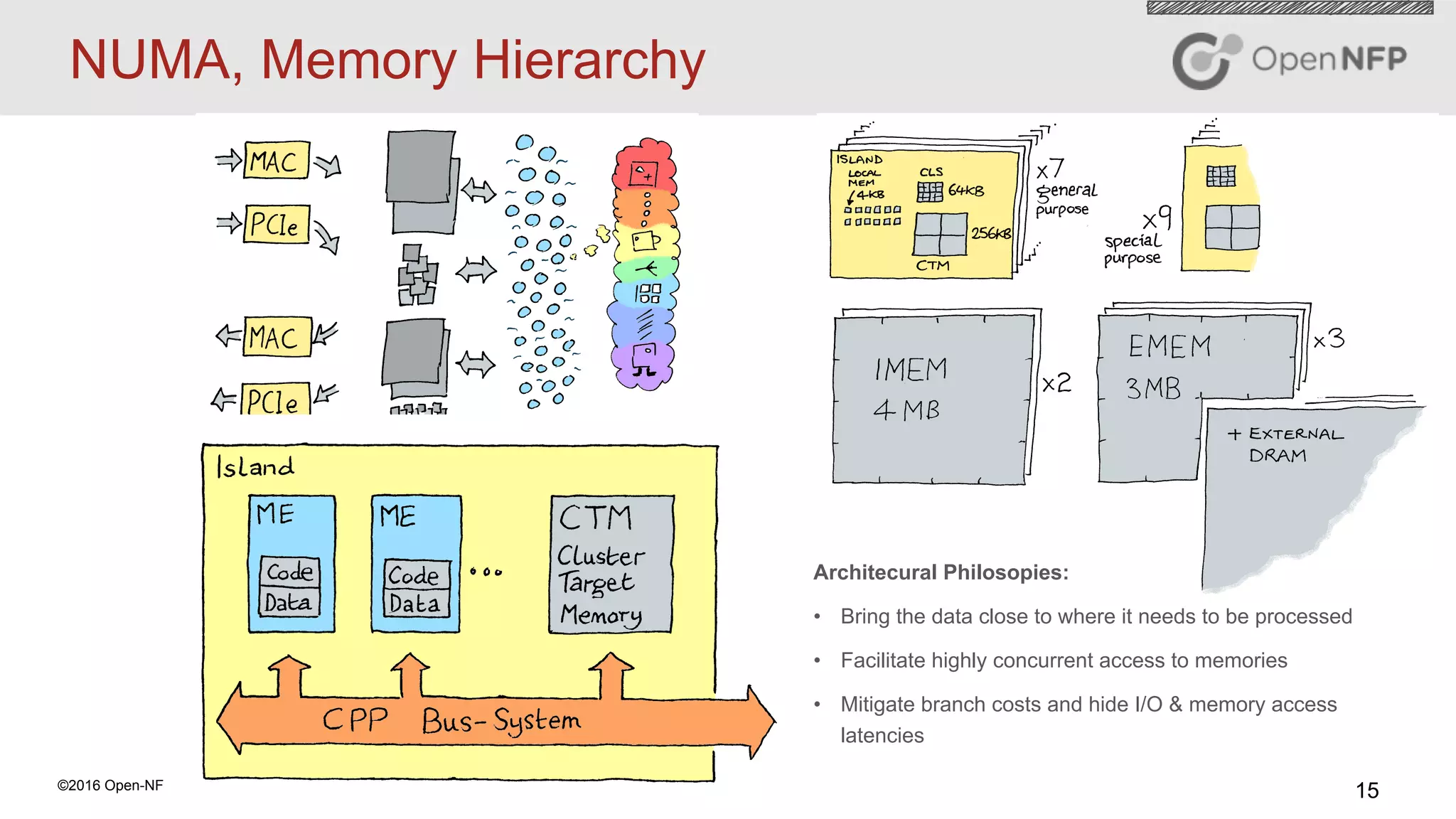

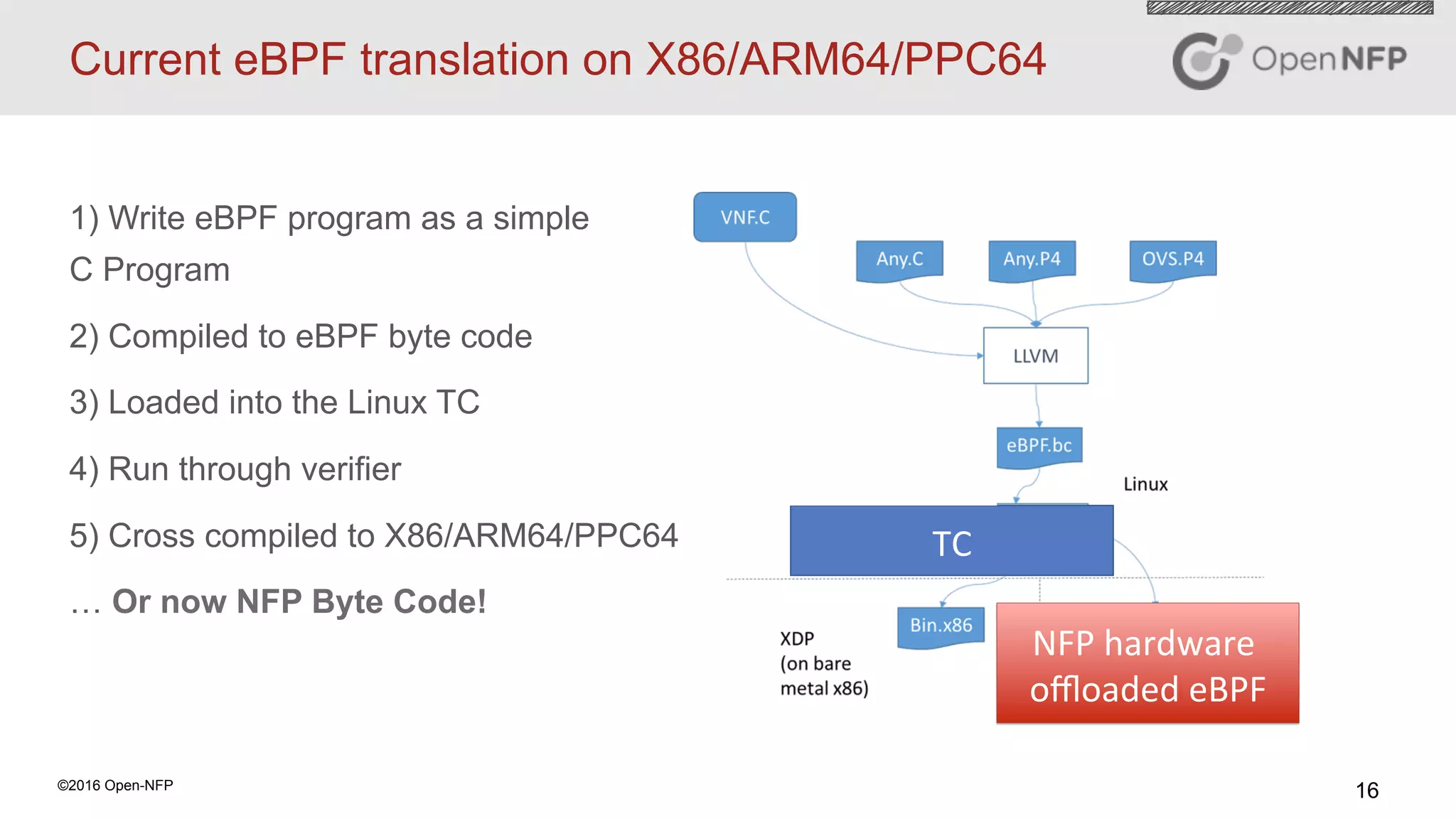

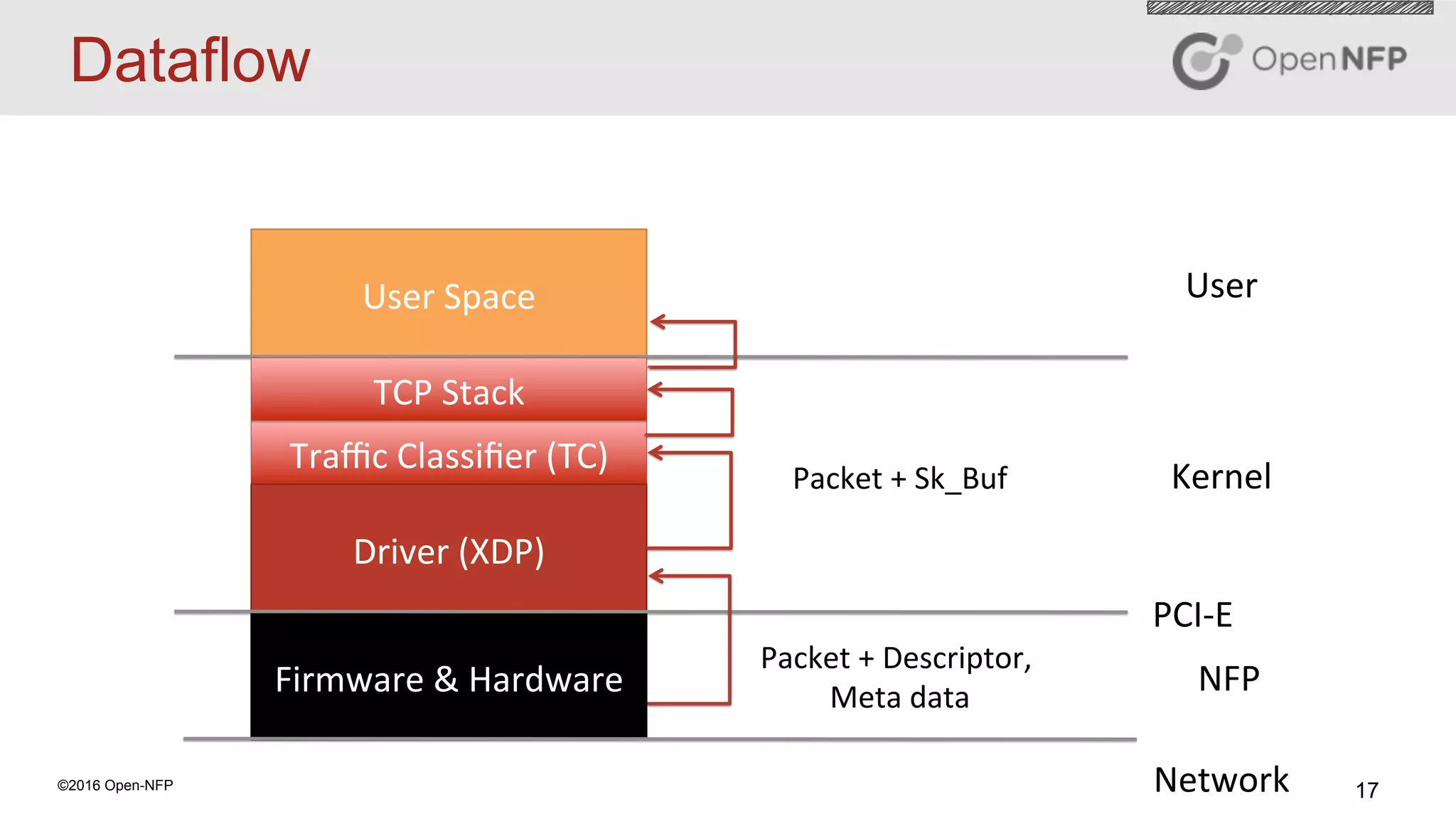

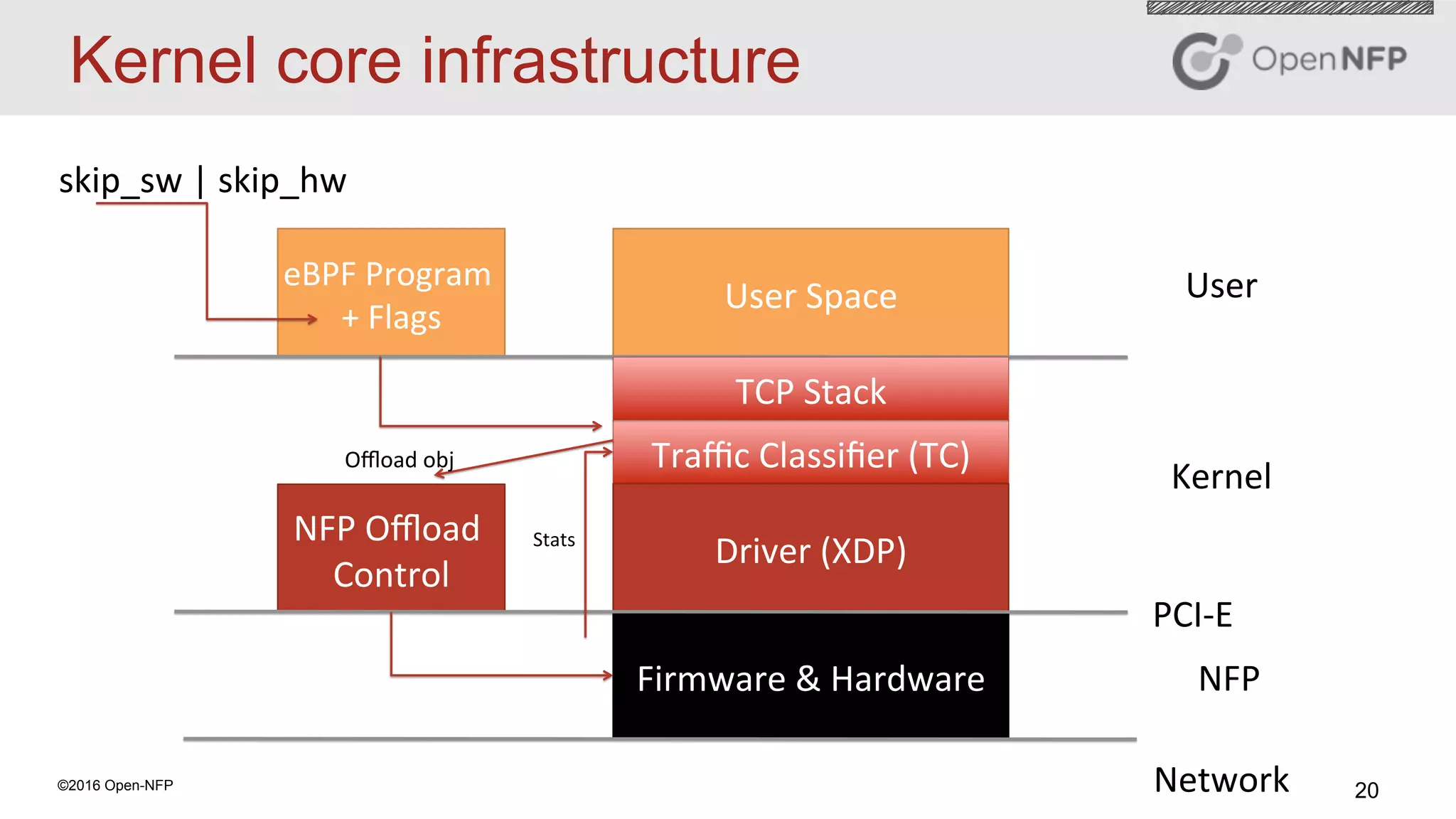

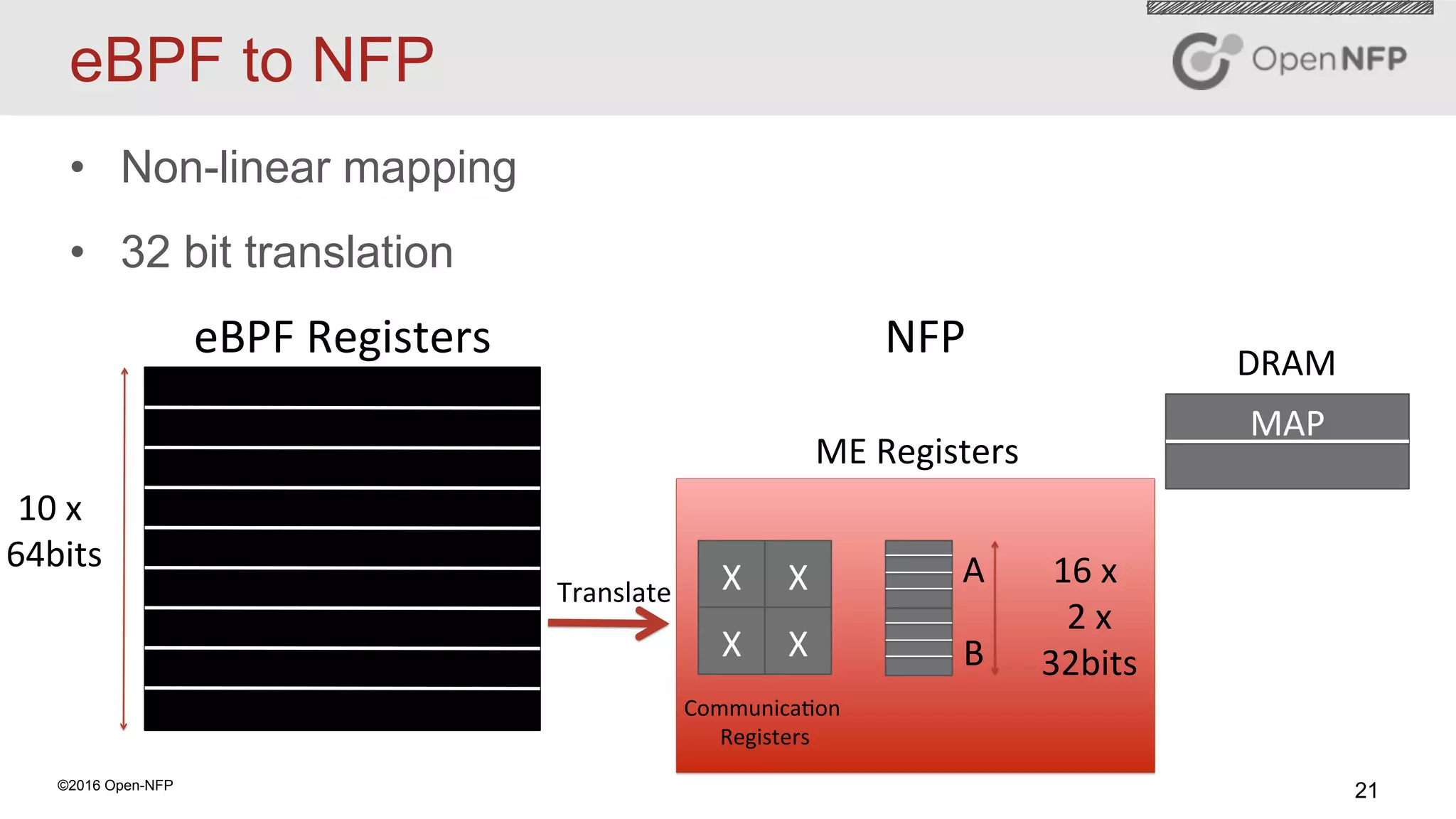

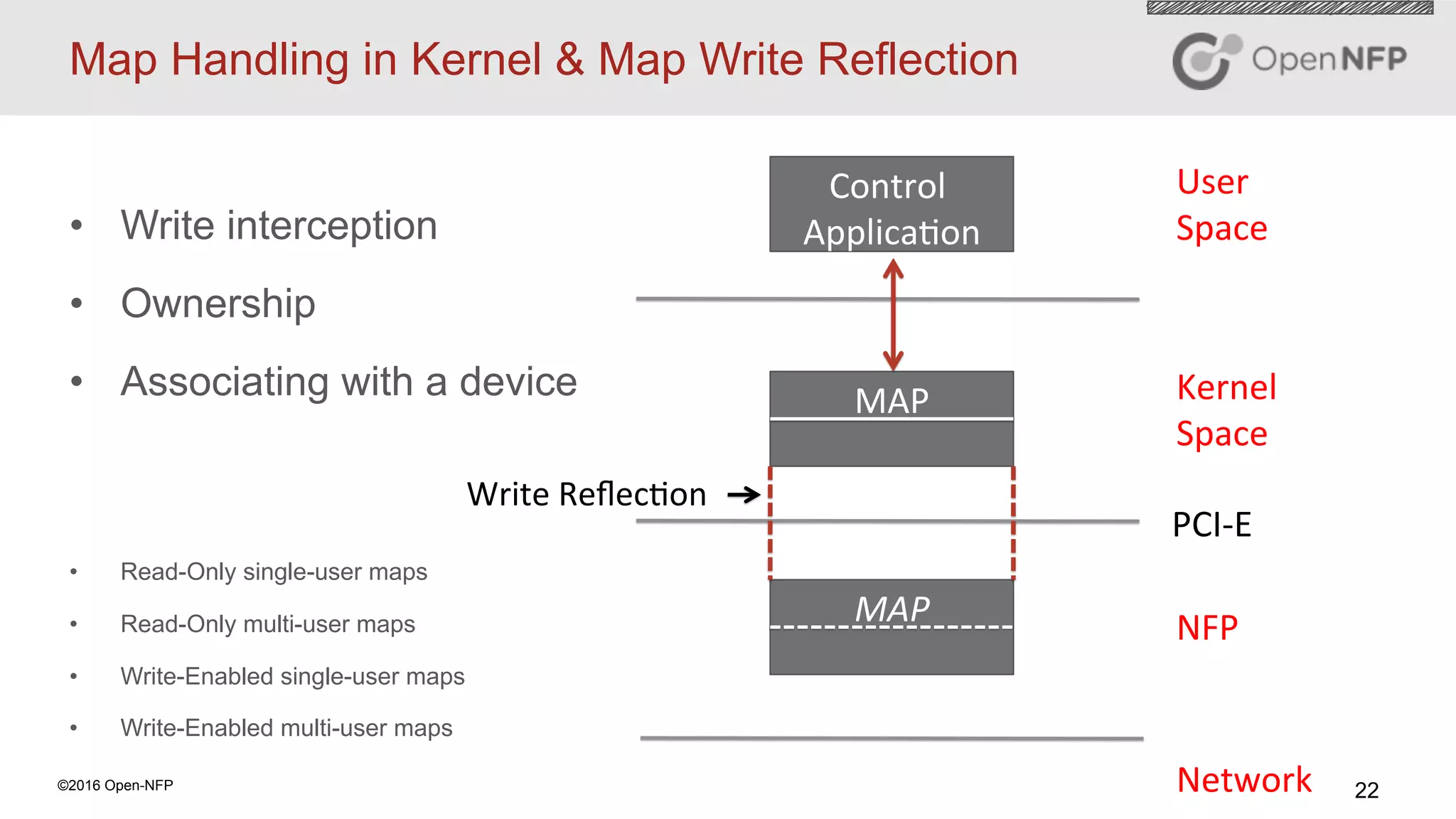

The webinar covered accelerating P4 and eBPF programs on Netronome SmartNIC hardware. It described the Linux kernel infrastructure, such as the TC and XDP hooks, that supports offloading eBPF programs. It also explained how the NFP (Network Flow Processor) architecture is optimized for network flow processing through its multi-core design and memory hierarchy. Finally, it demonstrated how eBPF programs are translated to run efficiently on the NFP hardware, including how maps are handled and how optimizations are applied.