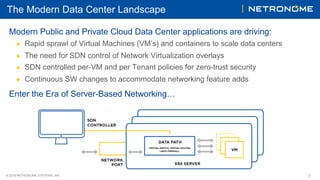

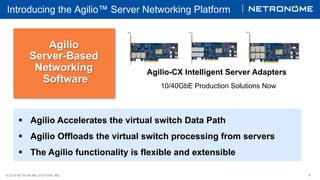

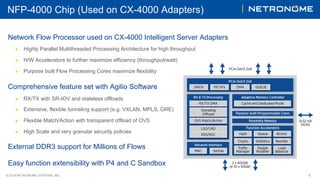

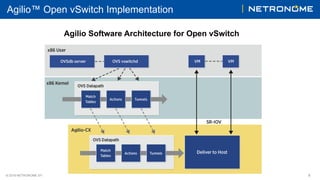

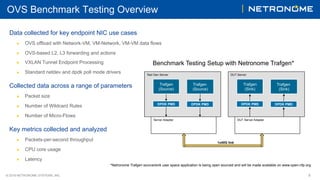

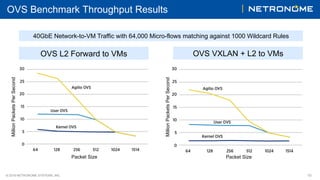

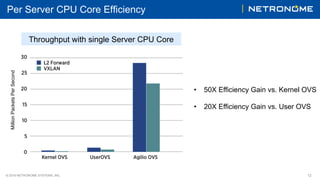

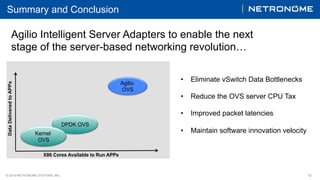

The document discusses the challenges modern data centers face with server-based networking and software-based virtual switches, which can create bottlenecks and reduce performance. It introduces the Agilio server networking platform, emphasizing its ability to offload virtual switch processing and improve efficiency. Finally, it presents benchmark results showing significant throughput and efficiency gains over traditional software-based options, resulting in lower total cost of ownership (TCO) and higher performance.