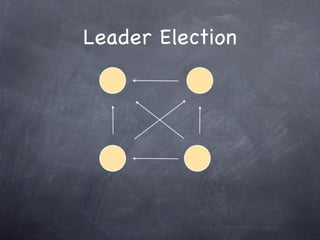

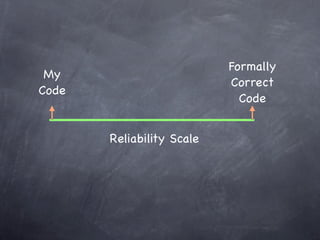

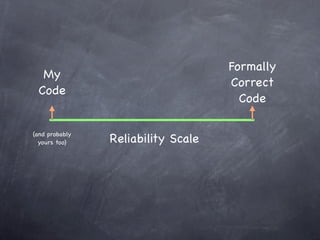

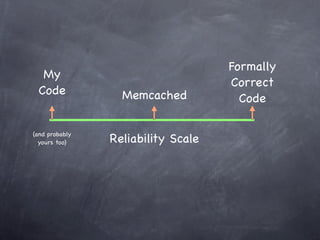

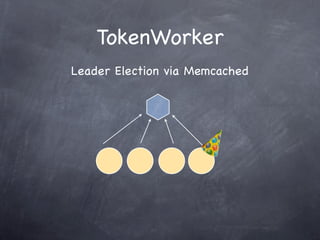

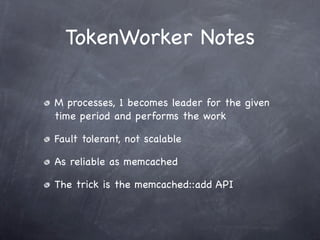

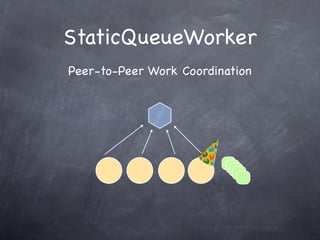

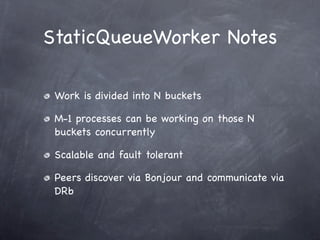

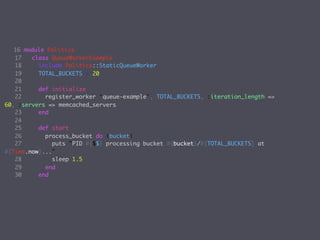

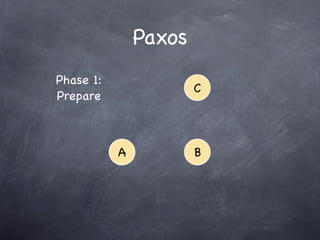

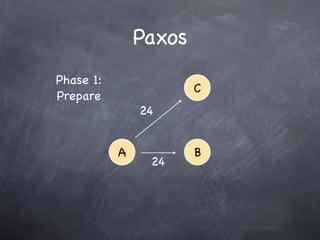

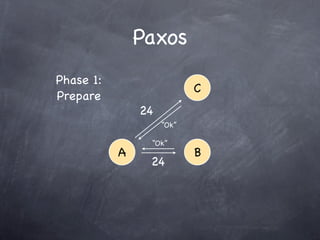

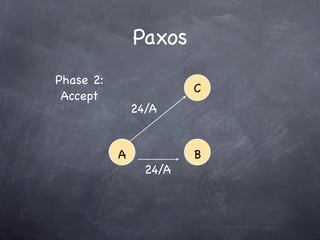

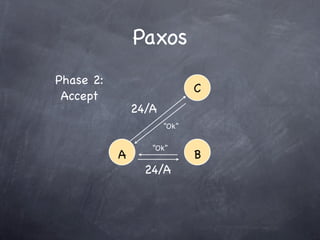

This document discusses patterns in distributed computing. It outlines key hurdles in distributed systems, such as asynchrony, locality, failure, and Byzantine faults, and then summarizes common distributed algorithms, including leader election, group membership, and consensus. It presents two Ruby libraries, TokenWorker and StaticQueueWorker, that implement leader election and work distribution patterns. It also briefly describes the Paxos consensus algorithm but notes that a full implementation is very challenging. The document advocates practical approaches over purely formal solutions and emphasizes starting with reasonable reliability requirements.