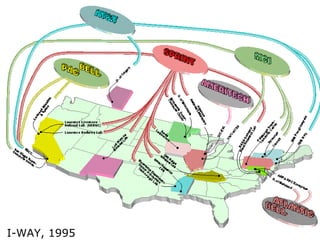

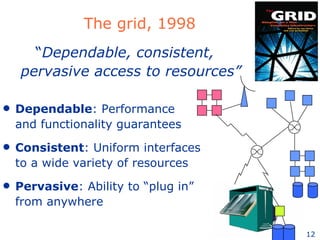

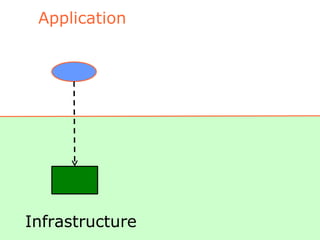

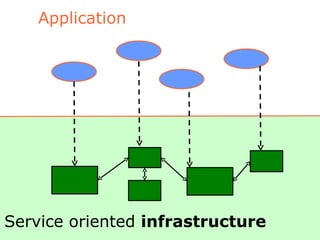

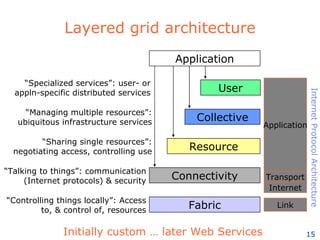

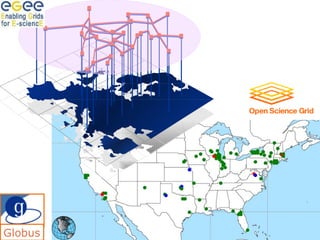

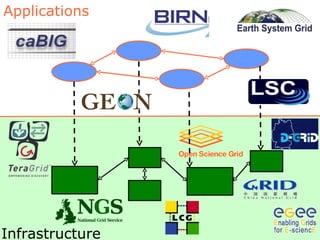

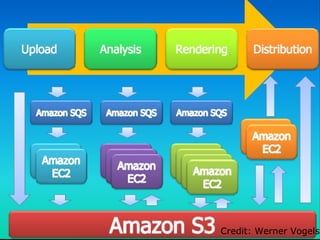

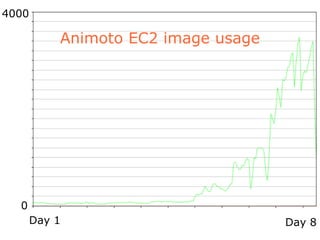

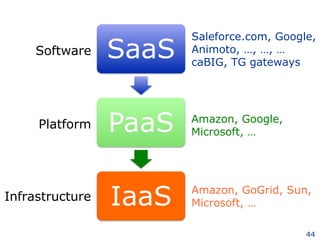

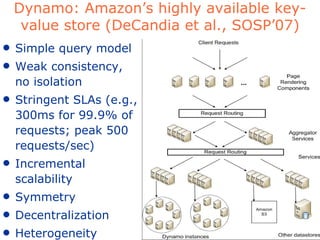

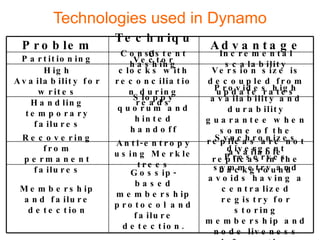

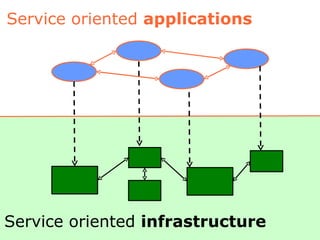

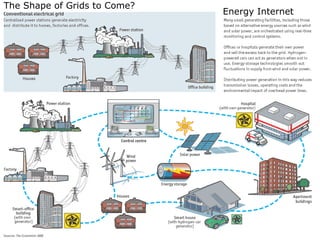

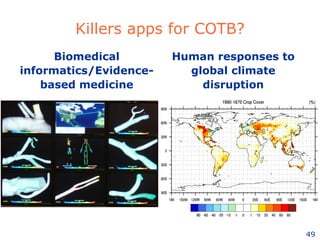

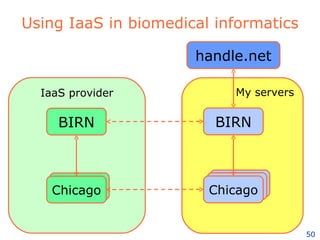

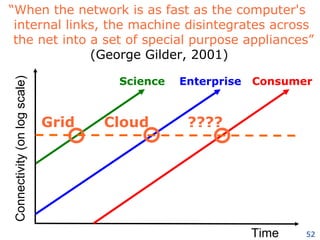

The document discusses the evolution and impact of outsourced computing over the past decade, highlighting approaches such as utility computing and cloud computing. It emphasizes the benefits of running applications outside the confines of traditional computing, including increased computing power, reduced costs, and greater potential for collaboration. The text also covers the architectural advances that enable these new applications, particularly in business and science.