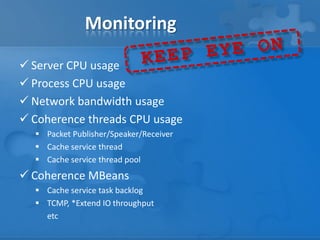

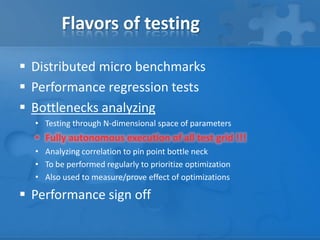

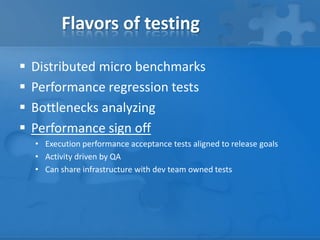

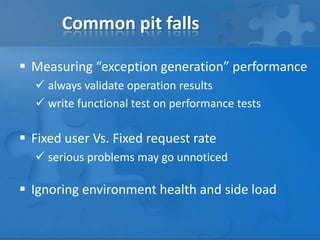

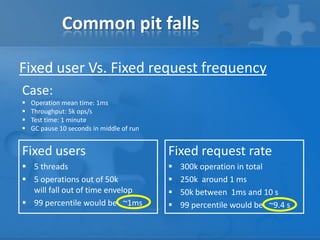

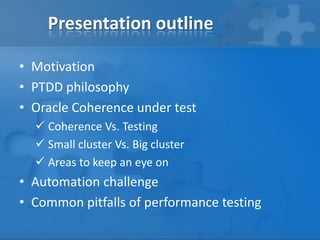

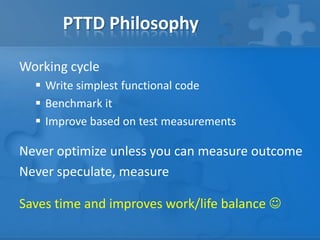

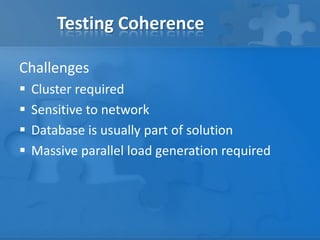

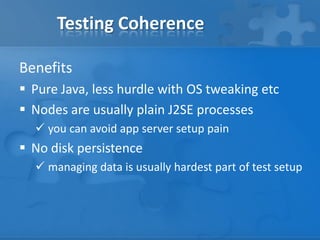

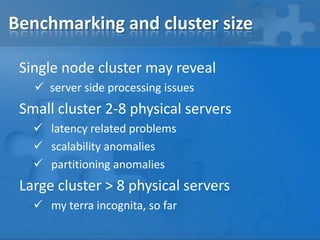

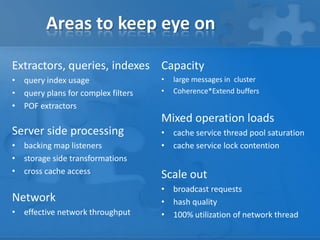

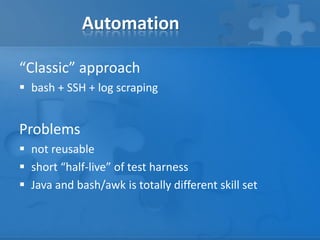

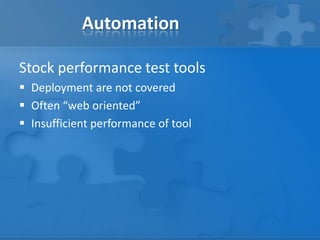

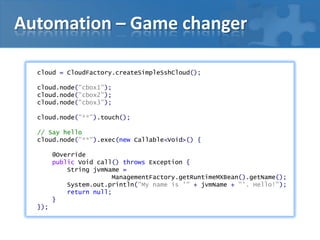

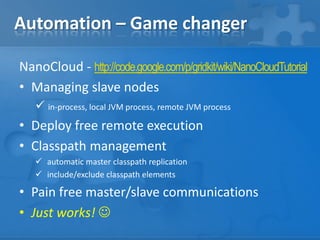

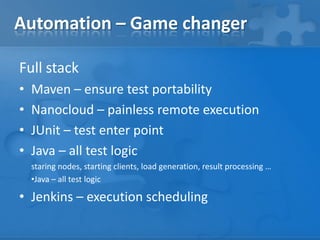

This presentation covers performance test driven development (PTDD) with Oracle Coherence. It outlines the philosophy of PTDD and the challenges of testing Coherence, including the need for a running cluster and sensitivity to network issues. It then discusses automating tests with tools such as NanoCloud, which manages nodes and executes test code remotely. Several types of tests are described: microbenchmarks, performance regression tests, and bottleneck analysis. Common pitfalls of performance testing, such as driving load with a fixed number of users versus a fixed request rate, are also covered.
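The "fixed users versus fixed request rate" pitfall can be illustrated with a little arithmetic. The sketch below is not taken from the slides: the class name, method names, and all numbers are hypothetical, and it assumes the usual reading of the pitfall, where a fixed pool of users waits for each response before sending the next request, so the load generator quietly sends less when the system slows down.

```java
final class LoadModelSketch {

    // Fixed users (closed model): every user waits for a response before
    // sending again, so fewer requests are issued when the system slows down.
    static long requestsIssuedByFixedUsers(int users, long serviceMillis, long windowMillis) {
        return users * (windowMillis / serviceMillis);
    }

    // Fixed request rate (open model): arrivals do not depend on response time.
    static long requestsIssuedAtFixedRate(long requestsPerSecond, long windowMillis) {
        return requestsPerSecond * windowMillis / 1000;
    }

    // Requests still waiting at the end of the window when the system can
    // process at most `parallelism` requests at a time.
    static long backlogAtFixedRate(long requestsPerSecond, int parallelism,
                                   long serviceMillis, long windowMillis) {
        long arrived = requestsIssuedAtFixedRate(requestsPerSecond, windowMillis);
        long capacity = parallelism * (windowMillis / serviceMillis);
        return Math.max(0, arrived - capacity);
    }

    public static void main(String[] args) {
        long window = 10_000; // 10 seconds, an arbitrary illustration value
        for (long service : new long[] {10, 20}) {
            System.out.println("service=" + service + " ms"
                    + " fixedUsers=" + requestsIssuedByFixedUsers(10, service, window)
                    + " fixedRate=" + requestsIssuedAtFixedRate(1000, window)
                    + " backlog=" + backlogAtFixedRate(1000, 10, service, window));
        }
    }
}
```

With these illustrative numbers, doubling the service time halves the requests sent by the fixed-user test, which can hide the slowdown, while the fixed-rate test keeps sending the same load and exposes a growing backlog.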

![This code works
Filter keyFilter = new InFilter(new KeyExtractor(), keySet);
EntryAggregator aggregator = new Count();
Object result = cache.aggregate(keyFilter, aggregator);
ValueExtractor[] extractors = {
    new PofExtractor(String.class, TRADE_ID),
    new PofExtractor(String.class, SIDE),
    new PofExtractor(String.class, SECURITY),
    new PofExtractor(String.class, CLIENT),
    new PofExtractor(String.class, TRADER),
    new PofExtractor(String.class, STATUS),
};
MultiExtractor projecter = new MultiExtractor(extractors);
ReducerAggregator reducer = new ReducerAggregator(projecter);
Object result = cache.aggregate(filter, reducer);](https://image.slidesharecdn.com/coherencesig-performancetestdrivendevelopment-130718114226-phpapp02/85/Performance-Test-Driven-Development-with-Oracle-Coherence-3-320.jpg)
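The second snippet on the slide above projects a few attributes out of each matching entry instead of returning whole objects. As a rough plain-Java analogy (no Coherence classes involved), the sketch below applies a list of extractor functions to every value and returns a map from entry key to the row of extracted attributes. The `Trade` class, the sample data, and the method names are hypothetical stand-ins, and the sketch makes no claim about how Coherence implements this internally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

final class ProjectionSketch {

    // Hypothetical stand-in for a cached trade object; not a type from the slides.
    static final class Trade {
        final String tradeId;
        final String side;
        final String security;

        Trade(String tradeId, String side, String security) {
            this.tradeId = tradeId;
            this.side = side;
            this.security = security;
        }
    }

    // Applies each extractor in order and collects the results into one row.
    static <V> List<Object> extractAll(V value, List<Function<V, Object>> extractors) {
        List<Object> row = new ArrayList<>();
        for (Function<V, Object> extractor : extractors) {
            row.add(extractor.apply(value));
        }
        return row;
    }

    // Builds a map from entry key to the projected row for that entry.
    static <K, V> Map<K, List<Object>> project(Map<K, V> entries,
                                               List<Function<V, Object>> extractors) {
        Map<K, List<Object>> result = new LinkedHashMap<>();
        for (Map.Entry<K, V> entry : entries.entrySet()) {
            result.put(entry.getKey(), extractAll(entry.getValue(), extractors));
        }
        return result;
    }

    // Small fixed data set used by main; the values are made up.
    static Map<String, List<Object>> demo() {
        Map<String, Trade> cache = new LinkedHashMap<>();
        cache.put("k1", new Trade("T-1", "BUY", "ORCL"));
        cache.put("k2", new Trade("T-2", "SELL", "IBM"));
        List<Function<Trade, Object>> extractors = Arrays.asList(
                t -> t.tradeId, t -> t.side, t -> t.security);
        return project(cache, extractors);
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

In a grid, the benefit of this shape is that only the projected rows travel back to the client, so the result stays small even when the cached objects are large.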

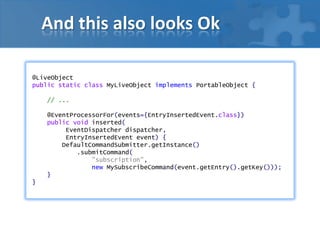

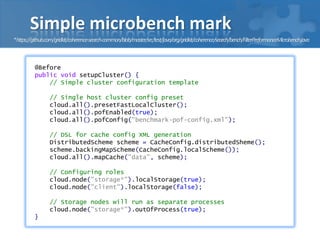

![Simple microbenchmark
@Test
public void verify_full_vs_index_scan() {
    // Tweak JVM arguments for storage nodes
    JvmProps.addJvmArg(cloud.node("storage-*"),
        "|-Xmx1024m|-Xms1024m|-XX:+UseConcMarkSweepGC");
    // Generating data for benchmark
    // ...
    cloud.node("client").exec(new Callable<Void>() {
        @Override
        public Void call() throws Exception {
            NamedCache cache = CacheFactory.getCache("data");
            System.out.println("Cache size: " + cache.size());
            calculate_query_time(tagFilter);
            long time =
                TimeUnit.NANOSECONDS.toMicros(calculate_query_time(tagFilter));
            System.out.println("Exec time for [tagFilter] no index - " + time);
            // ...
            return null;
        }
    });
}
*https://github.com/gridkit/coherence-search-common/blob/master/src/test/java/org/gridkit/coherence/search/bench/FilterPerformanceMicrobench.java](https://image.slidesharecdn.com/coherencesig-performancetestdrivendevelopment-130718114226-phpapp02/85/Performance-Test-Driven-Development-with-Oracle-Coherence-19-320.jpg)
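The microbenchmark on the slide calls `calculate_query_time(tagFilter)` once and discards the result before taking the reported measurement, which looks like a warm-up run. A minimal, cluster-free sketch of that pattern follows. The class and method names are hypothetical, and taking the best of several measured runs is an assumption added here; the slide takes a single measurement.

```java
import java.util.concurrent.TimeUnit;

final class WarmupTimingSketch {

    // Runs the task once and returns the elapsed wall-clock time in nanoseconds.
    static long timeNanos(Runnable task) {
        long start = System.nanoTime();
        task.run();
        return System.nanoTime() - start;
    }

    // Discards the timings of the warm-up runs, then returns the fastest
    // of the measured runs, converted to microseconds.
    static long measureMicros(Runnable task, int warmupRuns, int measuredRuns) {
        for (int i = 0; i < warmupRuns; i++) {
            timeNanos(task); // result intentionally ignored
        }
        long best = Long.MAX_VALUE;
        for (int i = 0; i < measuredRuns; i++) {
            best = Math.min(best, timeNanos(task));
        }
        return TimeUnit.NANOSECONDS.toMicros(best);
    }

    public static void main(String[] args) {
        // Stand-in workload; a real test would run a cache query here.
        Runnable work = () -> {
            long sum = 0;
            for (int i = 0; i < 1_000_000; i++) {
                sum += i;
            }
            if (sum < 0) {
                throw new IllegalStateException("unreachable");
            }
        };
        System.out.println("Exec time (us): " + measureMicros(work, 3, 5));
    }
}
```

Warm-up matters on the JVM because early invocations include class loading and interpreted execution, so a first measurement tends to overstate steady-state cost.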