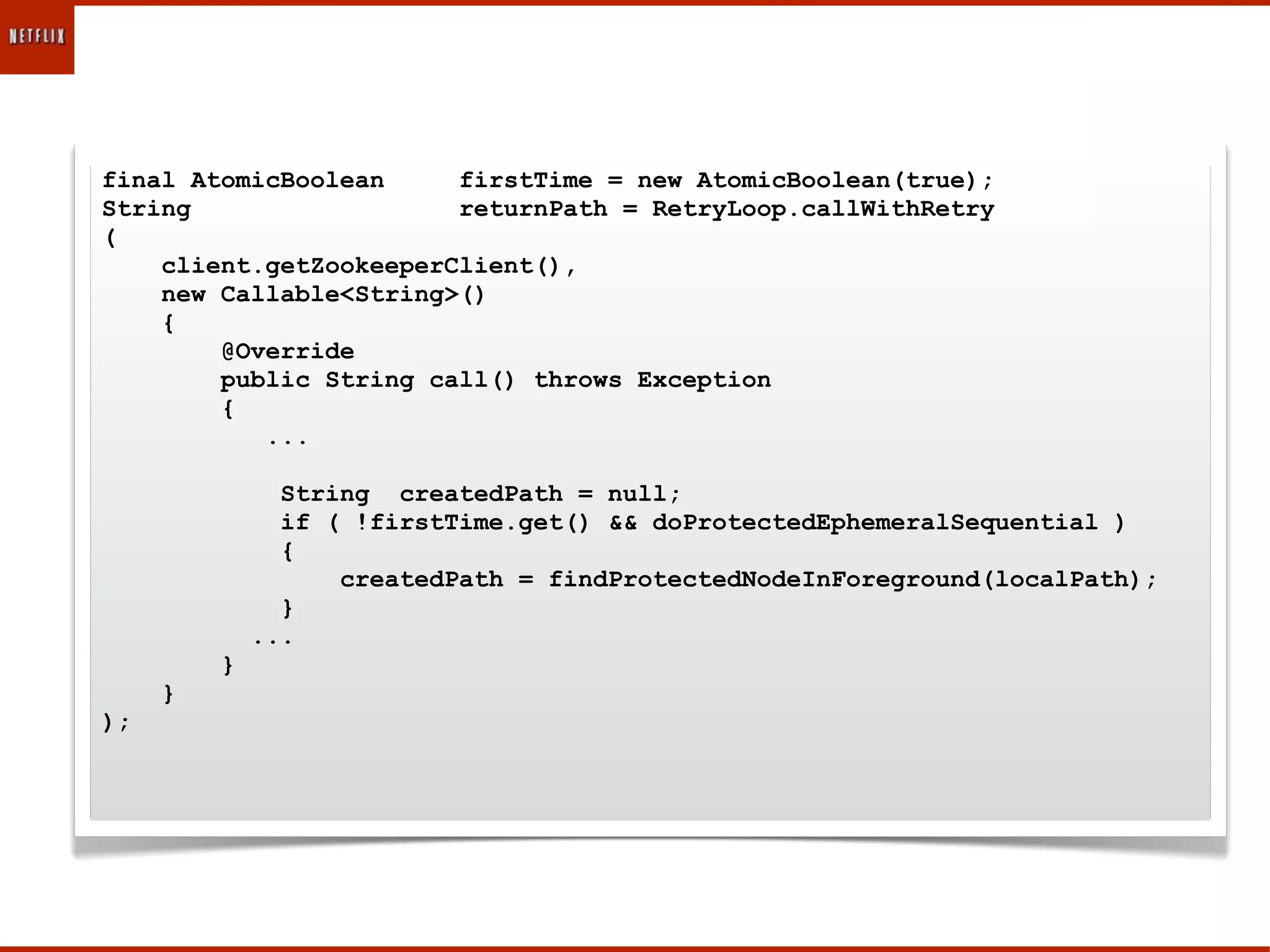

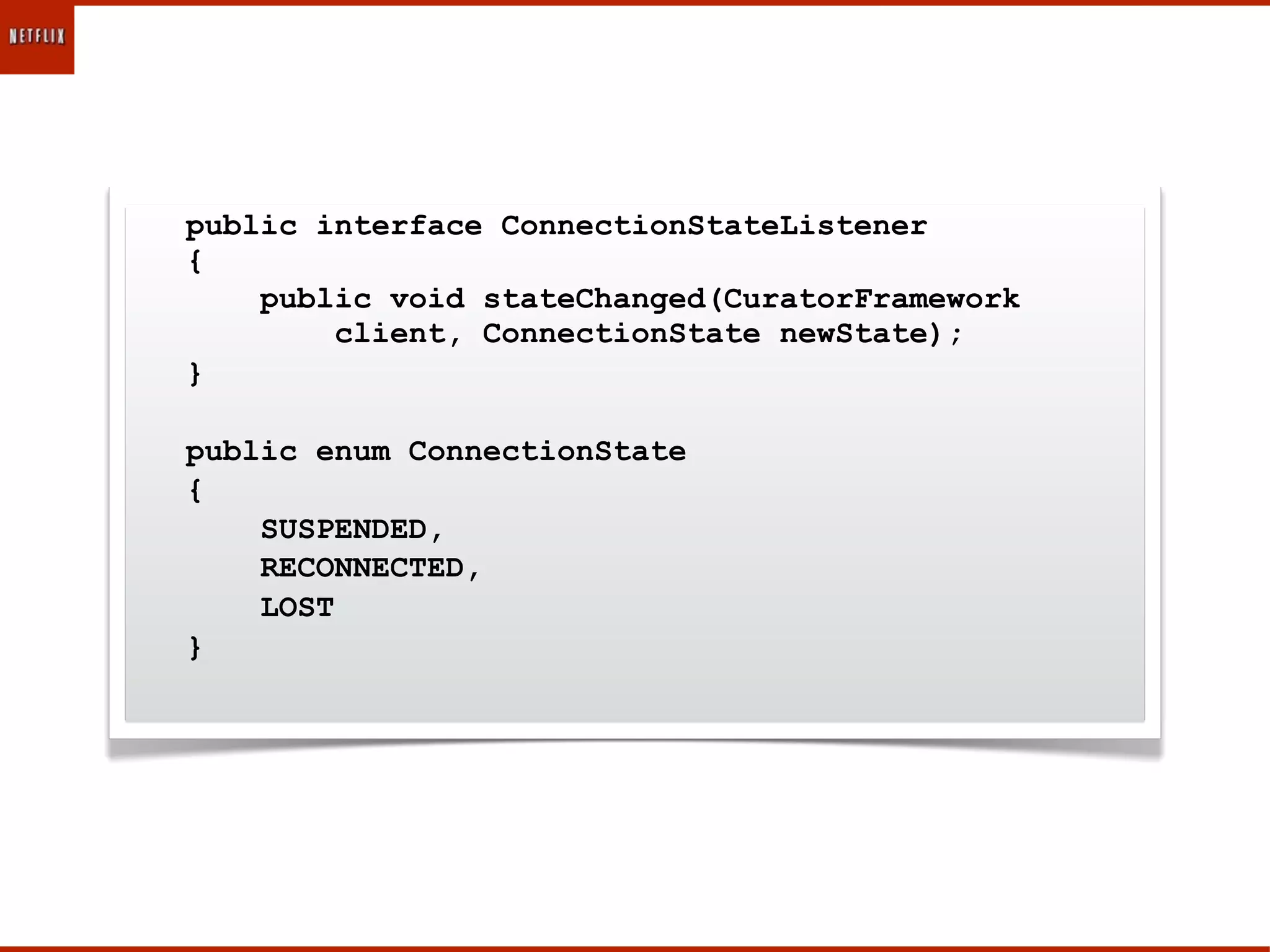

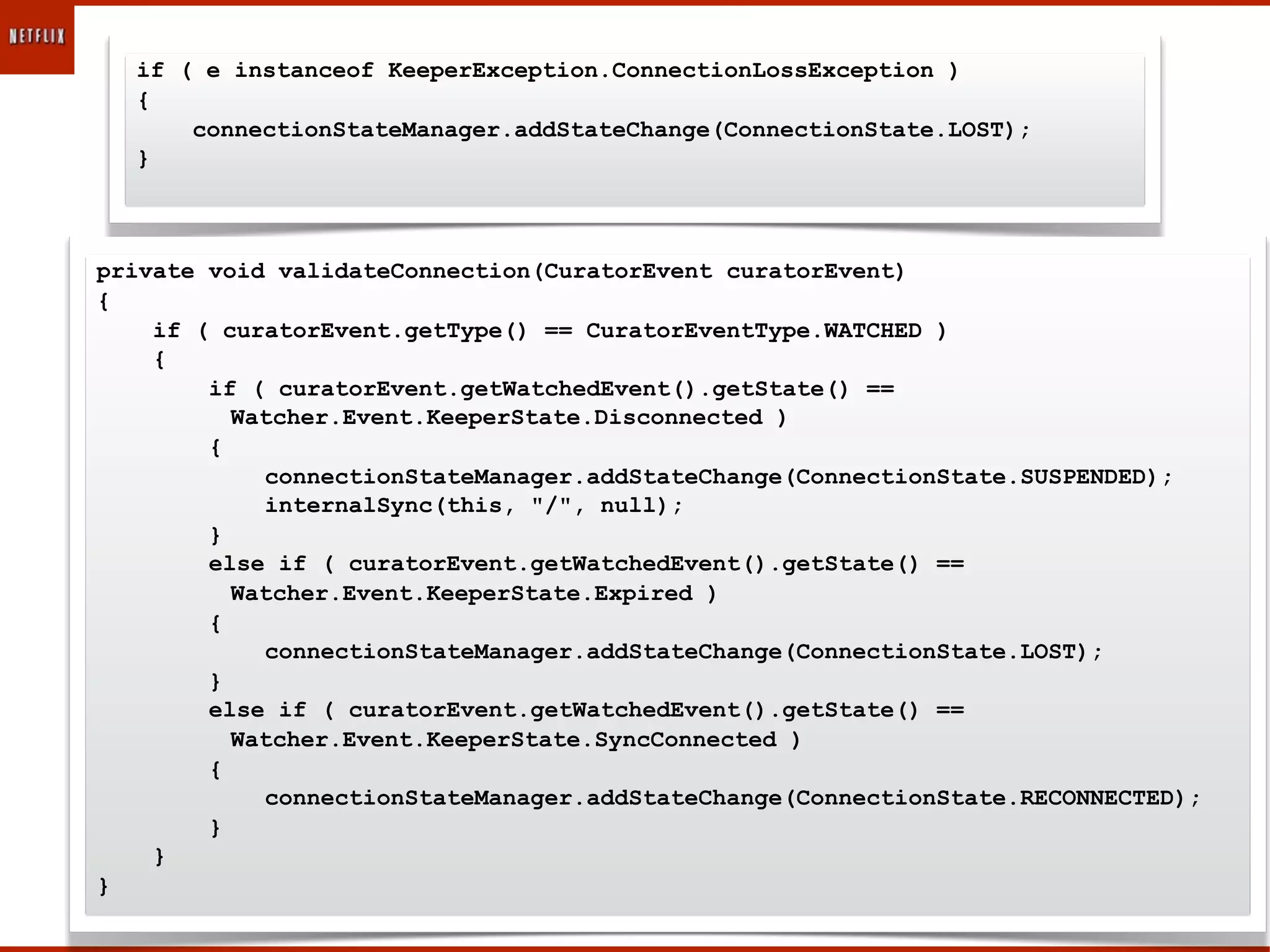

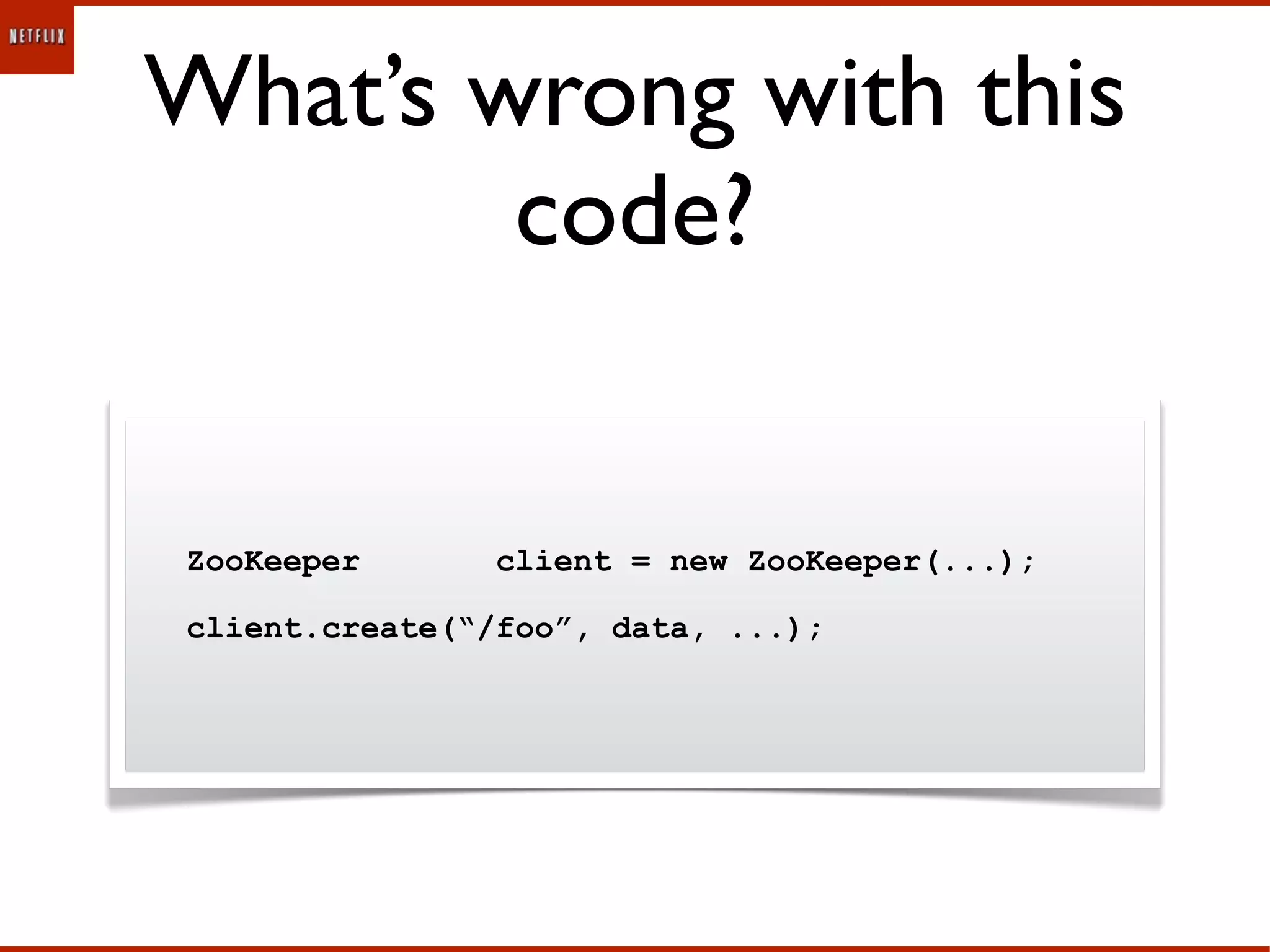

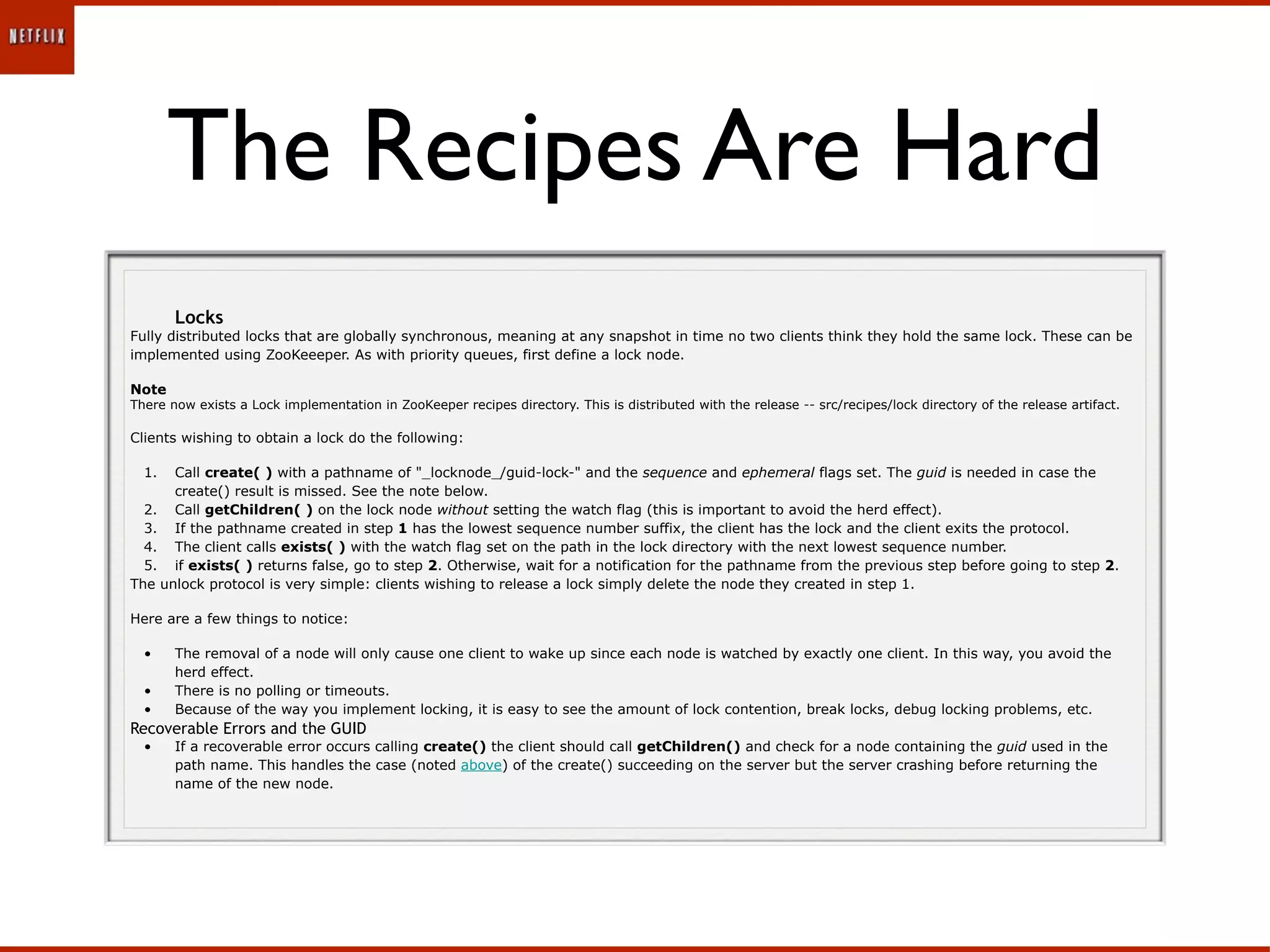

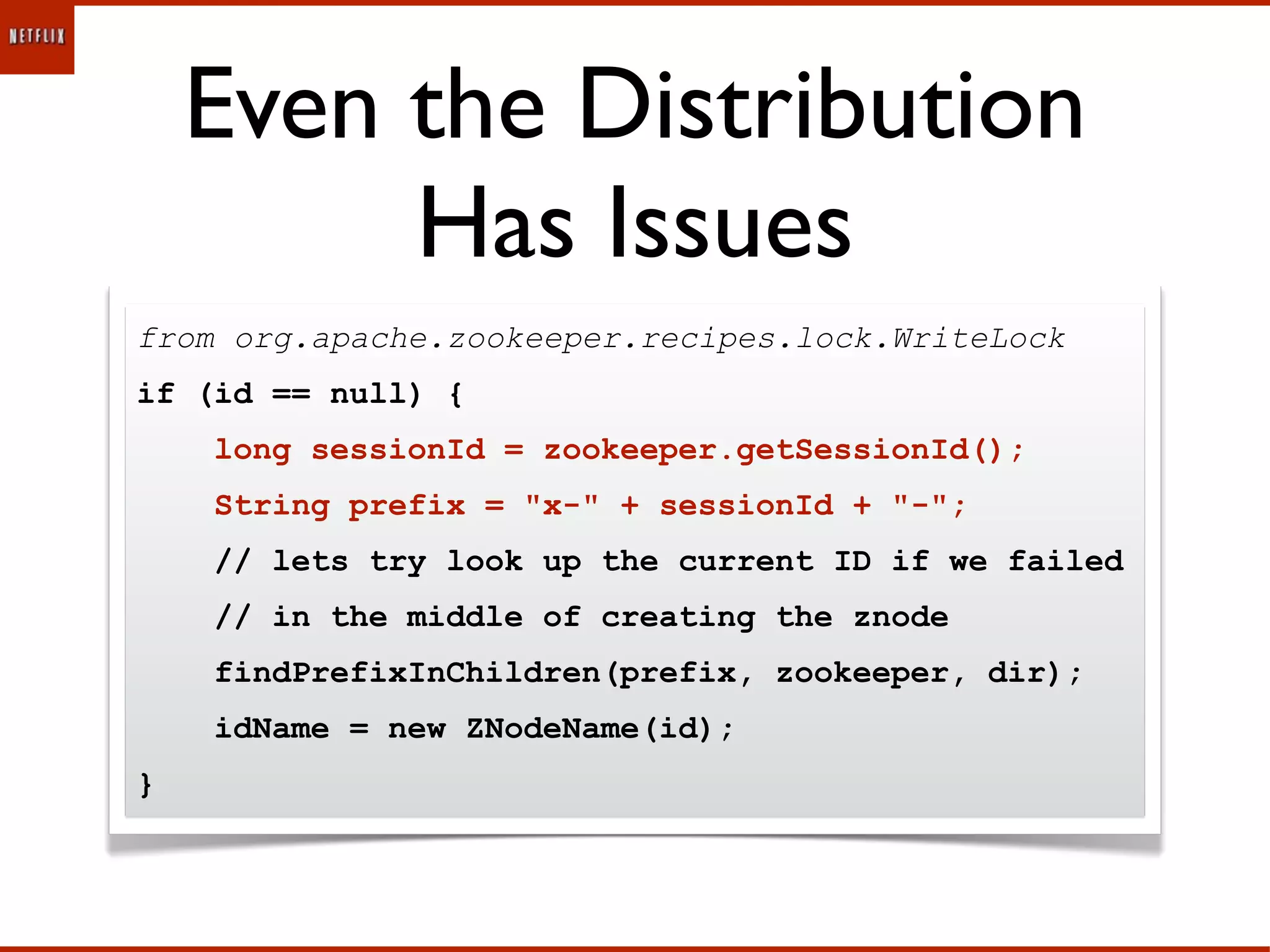

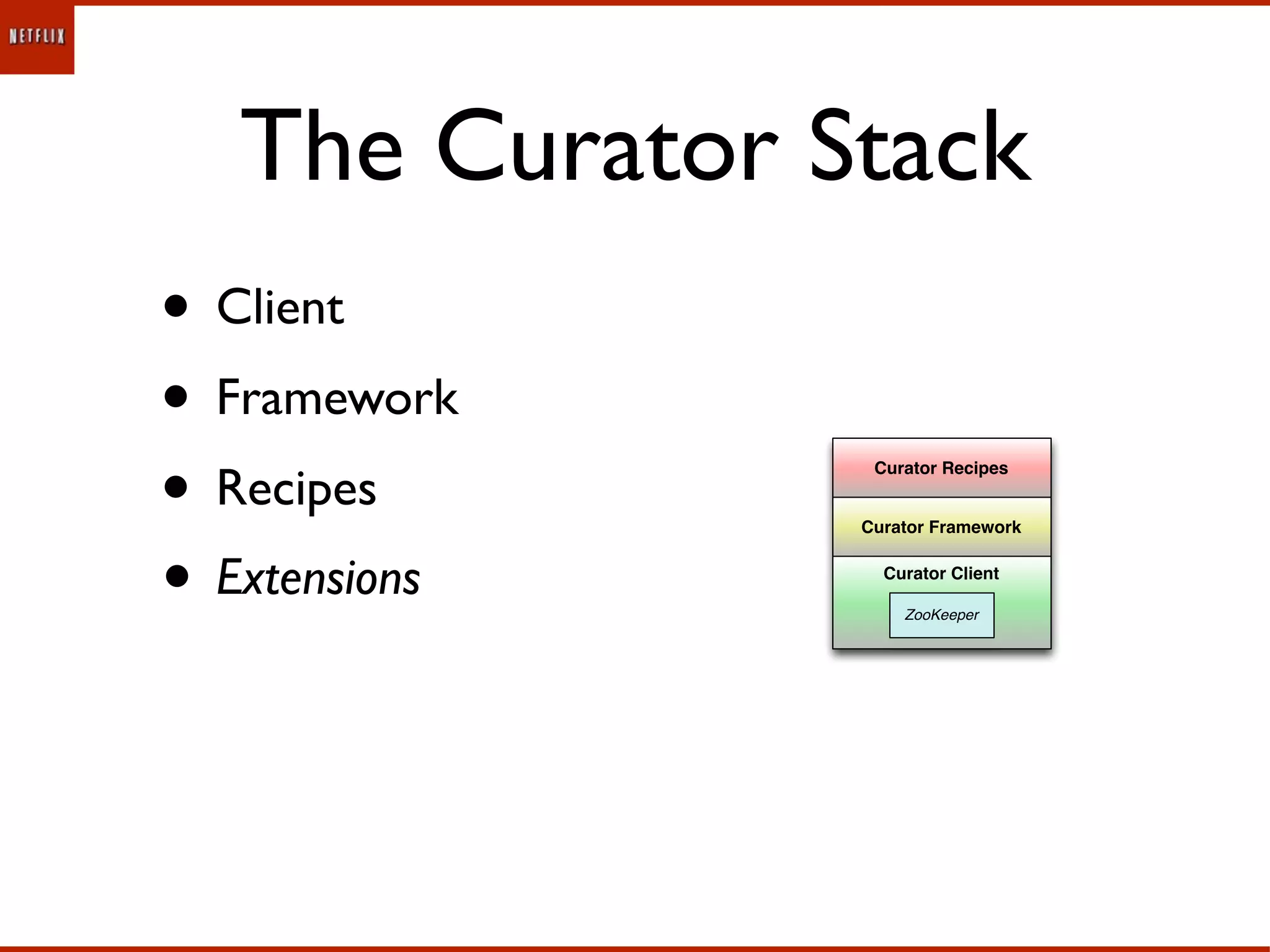

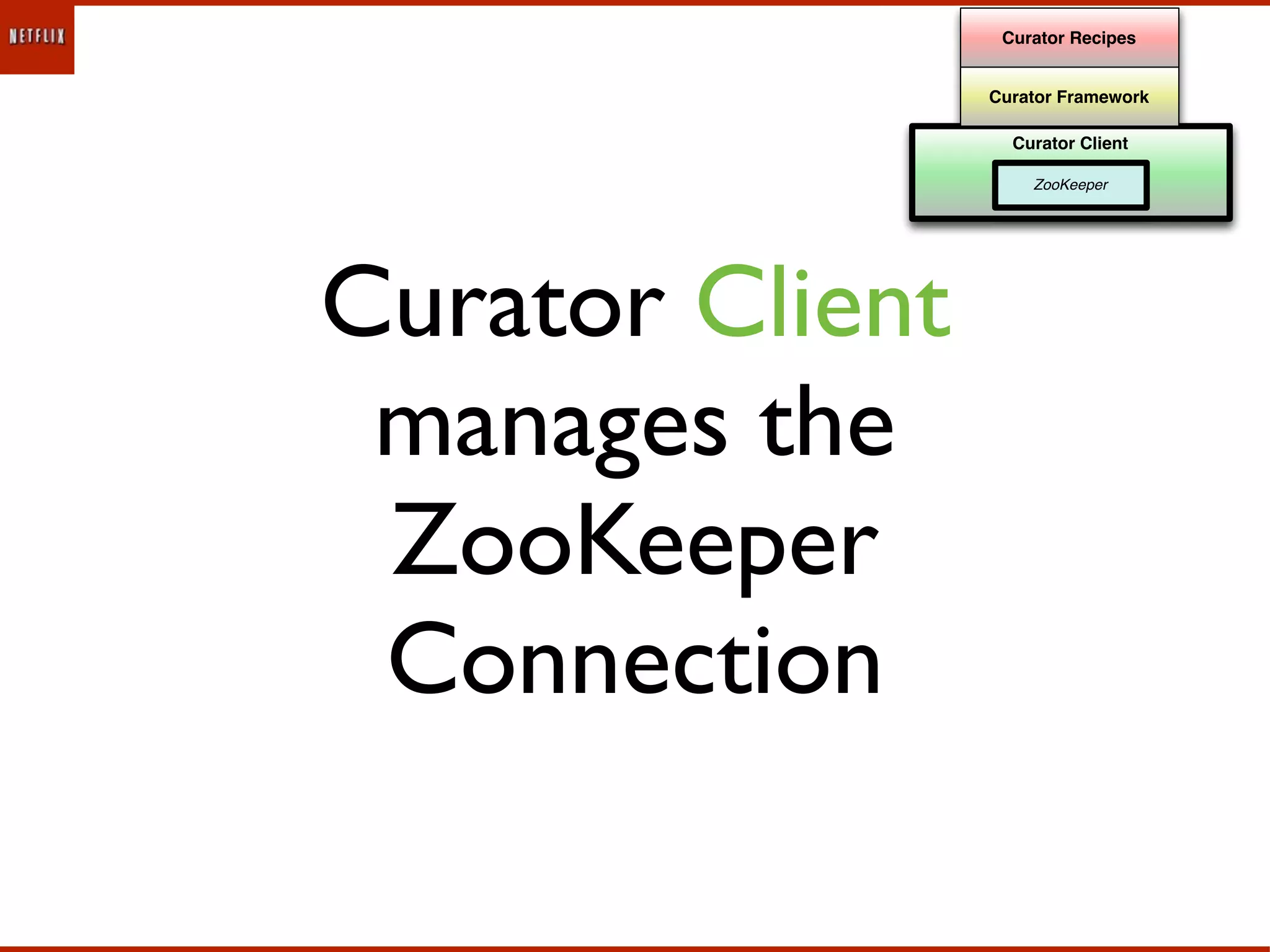

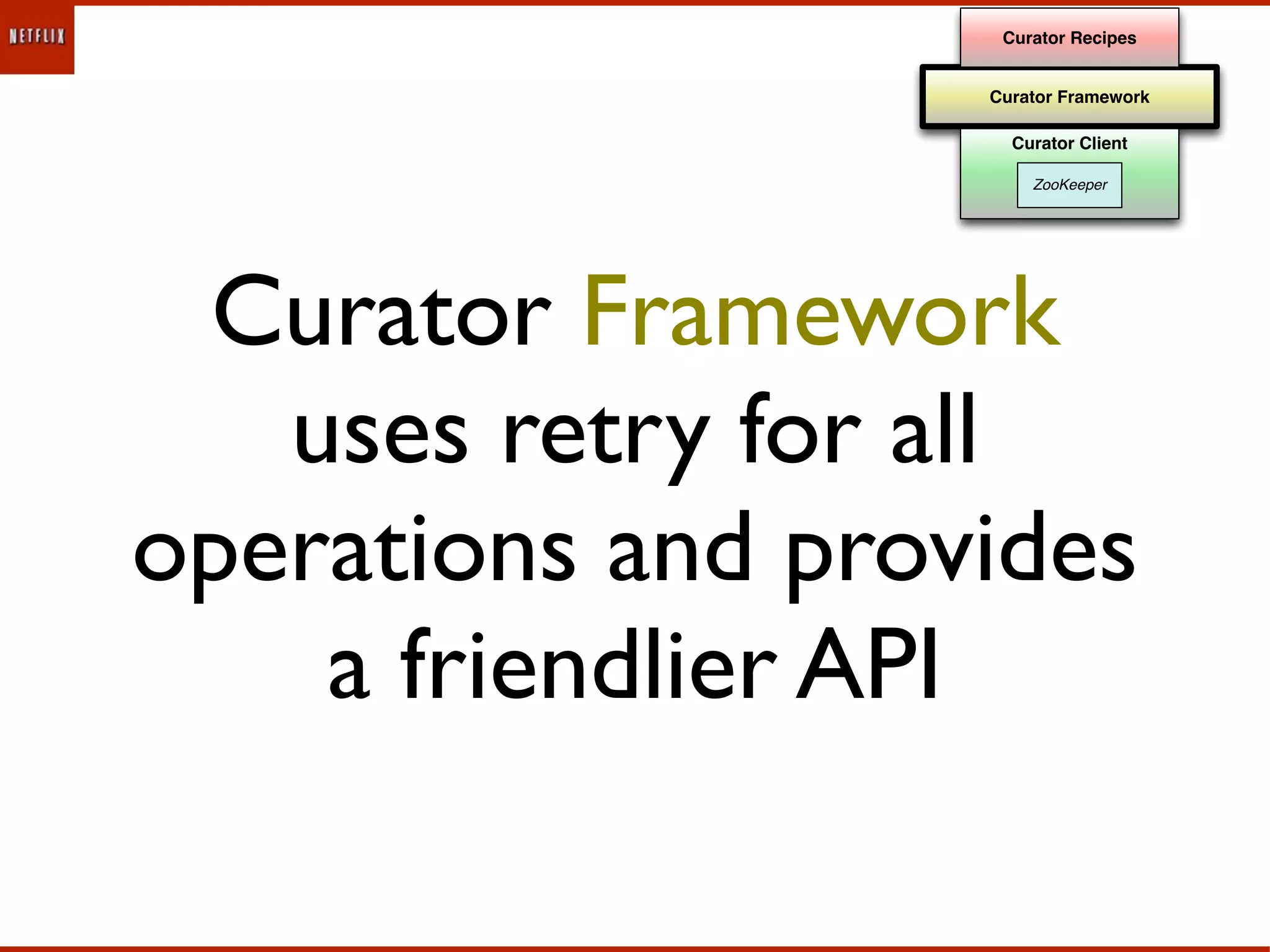

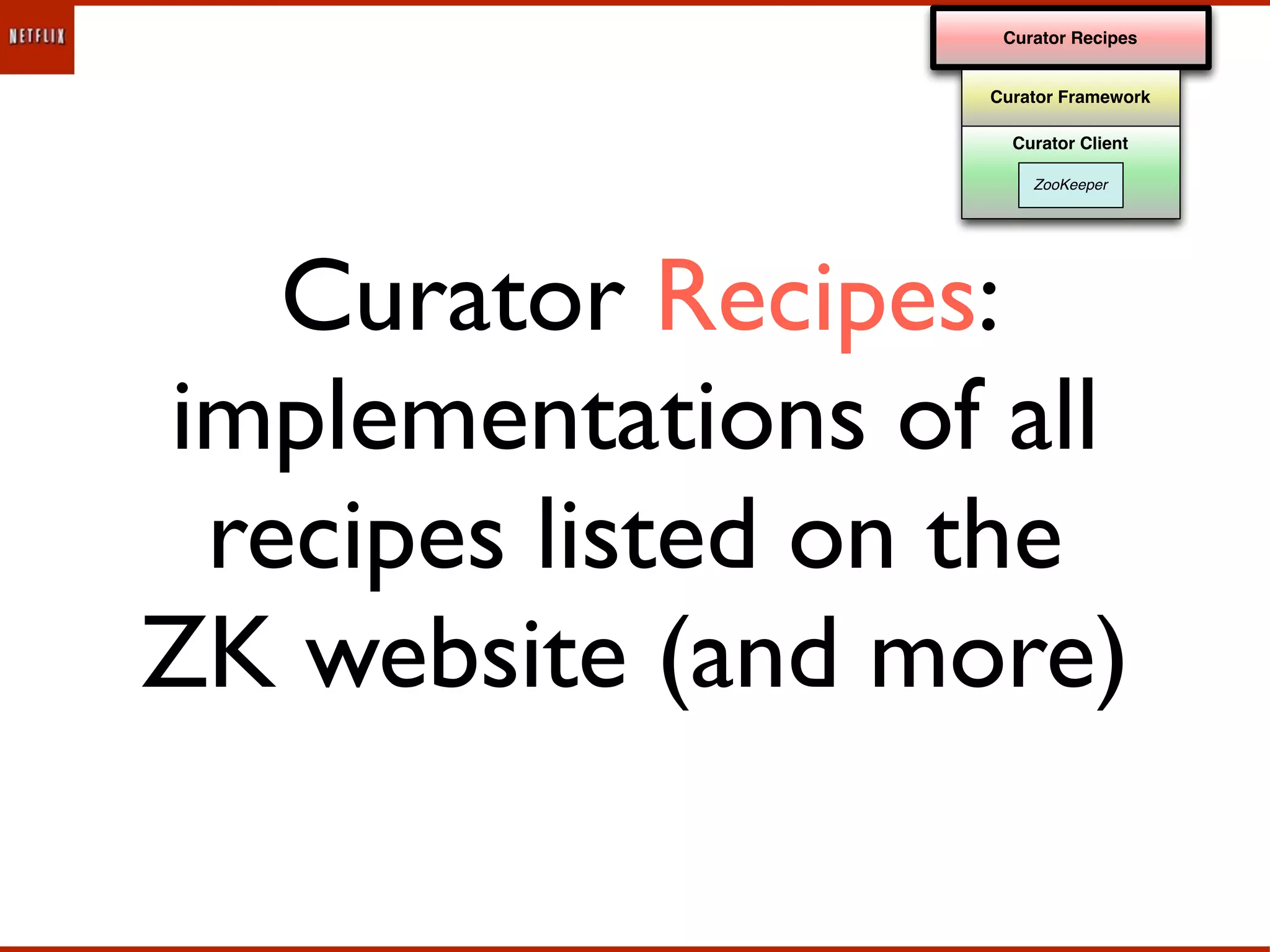

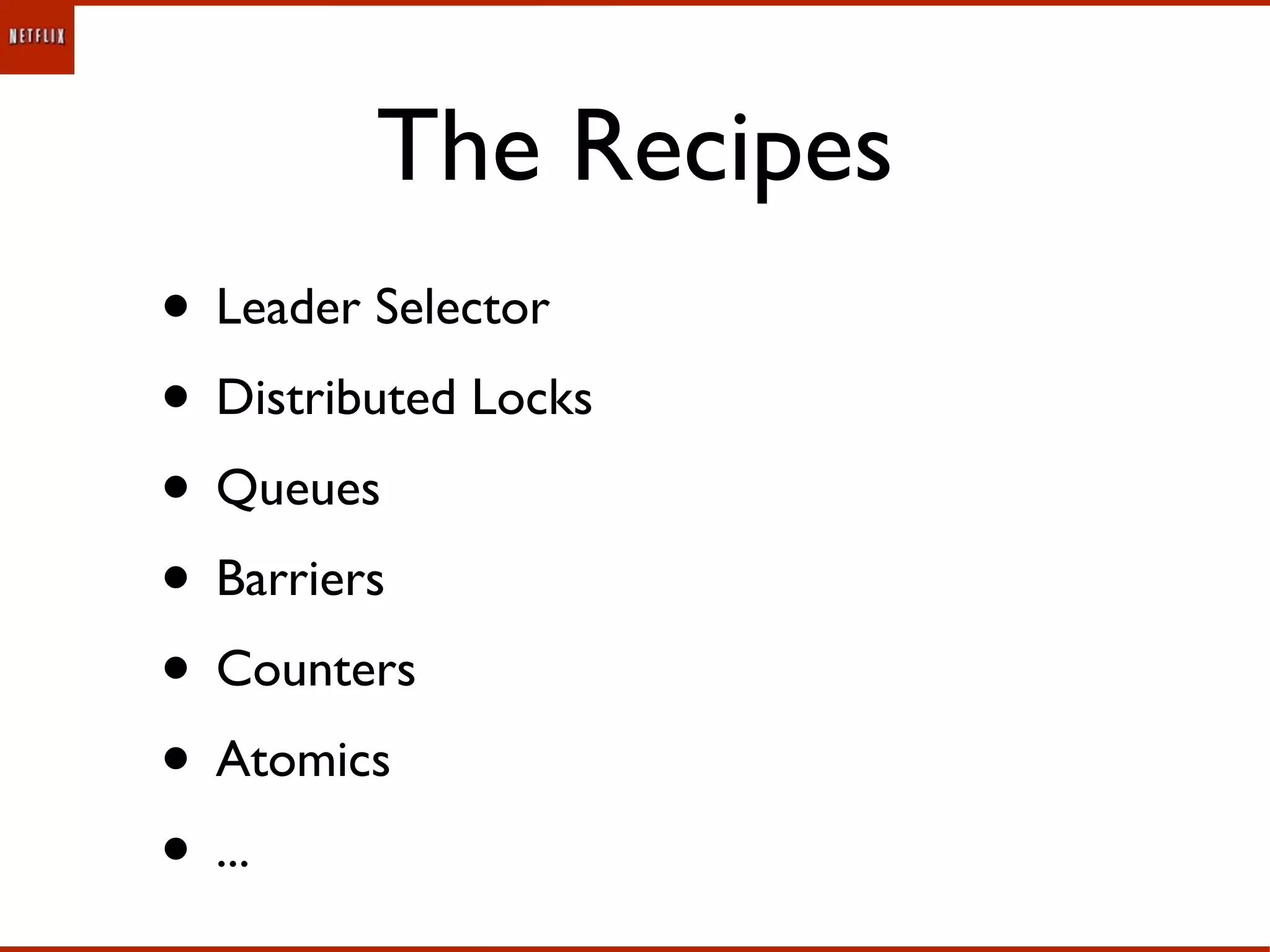

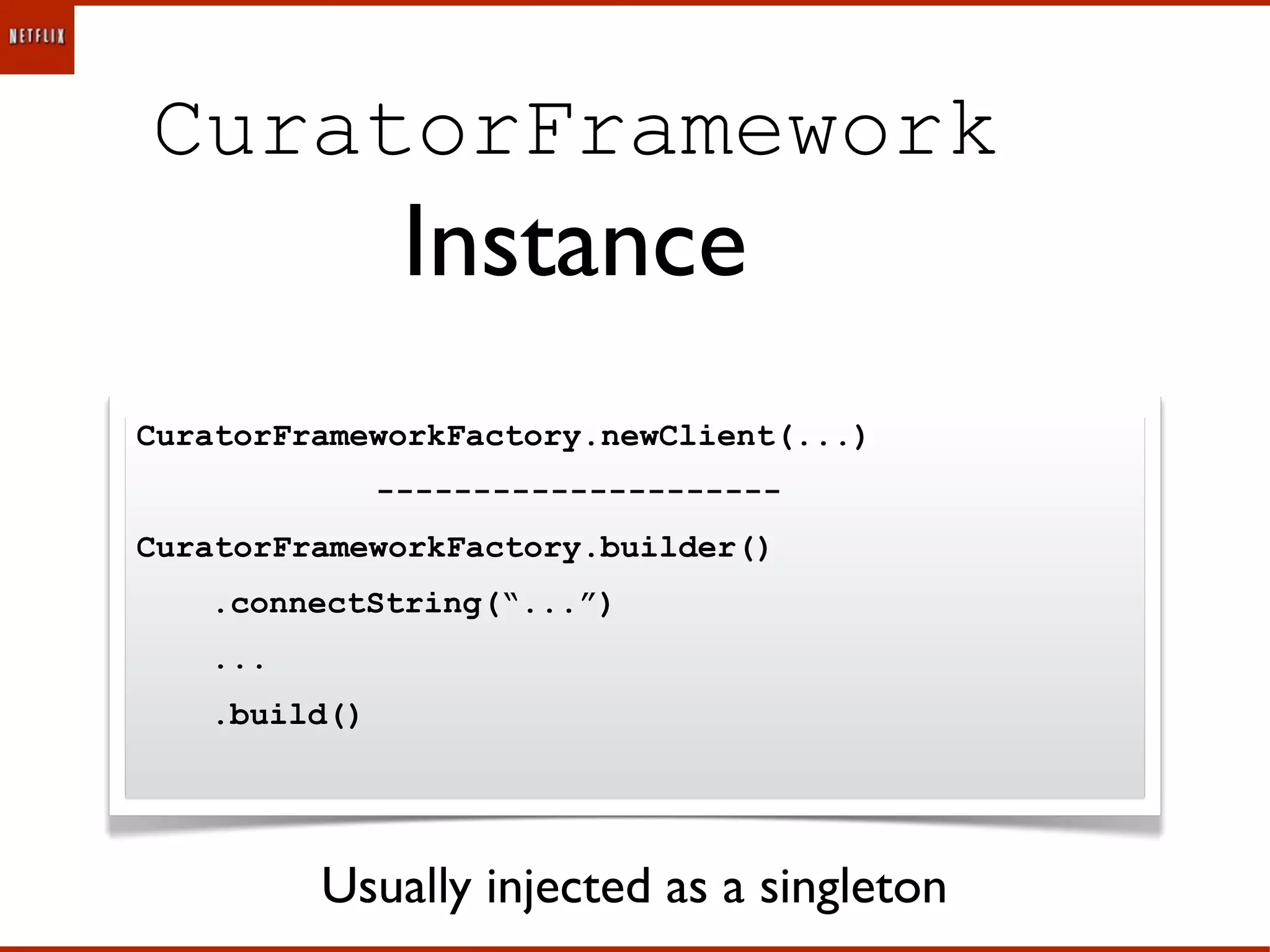

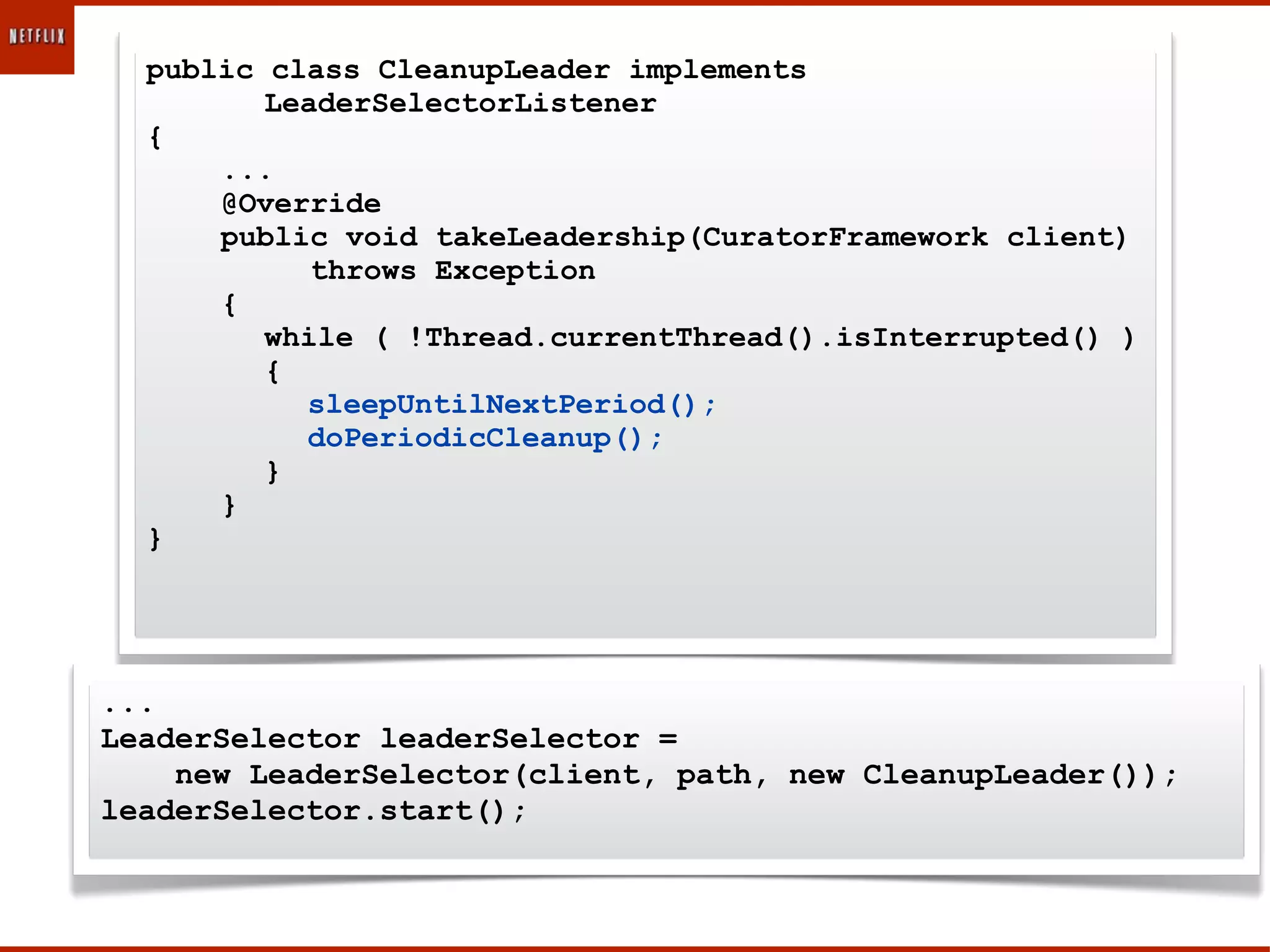

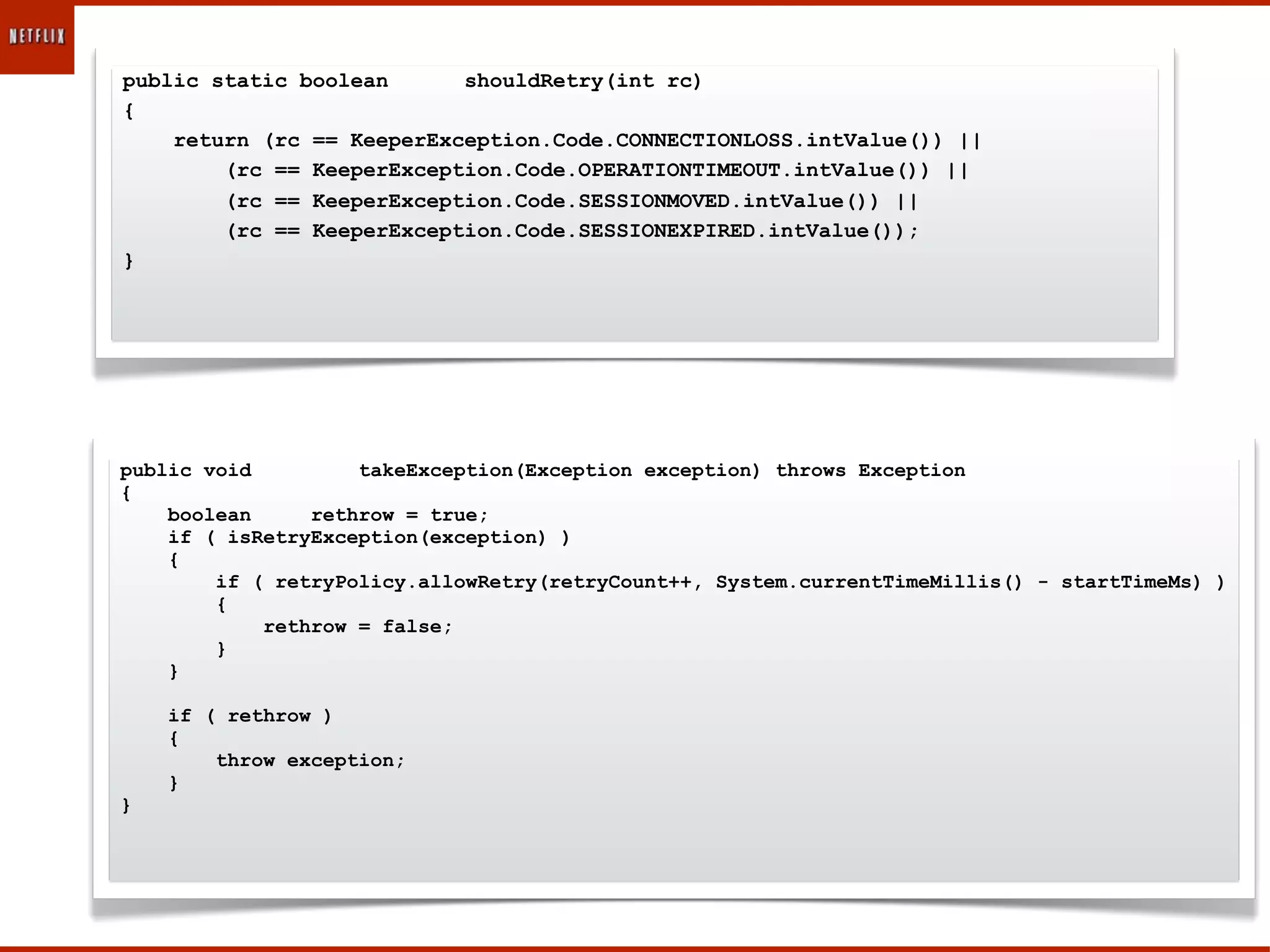

This presentation gives an overview of Curator, a client library for Apache ZooKeeper. Curator aims to simplify ZooKeeper development by providing a friendlier API, handling retries, and implementing common patterns ("recipes") such as leader election and locks. It consists of three components: a client, a framework, and recipes. The framework handles connection management and retries, while the recipes implement distributed primitives. The presentation also covers common recipes, such as locks and leader election, in more detail.

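![Curator intro, slide 41: RetryLoop.callWithRetry example](https://image.slidesharecdn.com/curatorintro-120227140117-phpapp01/75/Curator-intro-41-2048.jpg)

```java
// Run the ZooKeeper read inside Curator's retry loop.
byte[] responseData = RetryLoop.callWithRetry
(
    client.getZookeeperClient(),
    new Callable<byte[]>()
    {
        @Override
        public byte[] call() throws Exception
        {
            // The raw ZooKeeper call; the remaining arguments are elided on the slide.
            byte[] responseData;
            responseData = client.getZooKeeper().getData(path,
                ...);
            return responseData;
        }
    }
);
return responseData;
```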
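
To make the idea behind the `RetryLoop.callWithRetry` example above concrete, here is a minimal, self-contained sketch of a retry loop with exponential backoff in plain Java. It is an illustration only, not Curator's implementation: the class `RetrySketch`, its `callWithRetry` signature, and the `maxRetries` and `baseSleepMs` parameters are hypothetical names chosen for this example. It also retries on every exception, whereas a real client would normally retry only errors it considers transient, such as a lost connection.

```java
import java.util.concurrent.Callable;

// Hypothetical illustration of the retry-loop pattern; not part of Curator.
class RetrySketch {

    // Calls work until it succeeds or maxRetries retries have been used,
    // sleeping baseSleepMs, 2*baseSleepMs, 4*baseSleepMs, ... between attempts.
    public static <T> T callWithRetry(Callable<T> work, int maxRetries, long baseSleepMs) {
        Exception last = null;
        for (int attempt = 0; attempt <= maxRetries; attempt++) {
            try {
                return work.call();
            } catch (Exception e) {
                // Assumption for this sketch: every failure is treated as retryable.
                last = e;
                if (attempt == maxRetries) {
                    break;
                }
                try {
                    Thread.sleep(baseSleepMs << attempt);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("interrupted while waiting to retry", ie);
                }
            }
        }
        throw new IllegalStateException("gave up after " + (maxRetries + 1) + " attempts", last);
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated operation that fails twice before succeeding.
        String result = callWithRetry(() -> {
            if (++calls[0] < 3) {
                throw new java.io.IOException("simulated connection loss");
            }
            return "data";
        }, 5, 10);
        System.out.println(result + " after " + calls[0] + " calls");
    }
}
```

Passing the operation as a `Callable` keeps the retry policy in one place, so the calling code only has to describe the single ZooKeeper operation it wants to perform.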