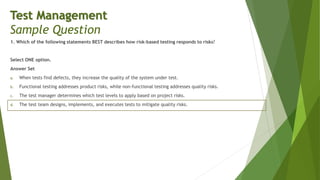

The document discusses advanced test management, focusing on the roles and responsibilities of test managers in securing and utilizing resources for effective testing processes within software development. It emphasizes the importance of understanding testing stakeholders, integrating test activities with the software development lifecycle, and managing non-functional and experience-based testing. The document also highlights risk-based testing techniques for identifying and mitigating quality risks to ensure project success.

![Managing the Application of Industry Standards](https://image.slidesharecdn.com/chapter2-210408044826/85/Chapter-2-Test-Management-85-320.jpg)

Test Managers should be aware of standards, their organization’s policy on the use of standards, and whether standards are required, necessary or useful for them to use.

Standards can come from different sources, such as:

- International, or with international objectives
- National, such as national applications of international standards
- Domain specific, such as when international or national standards are adapted to particular domains or developed for specific domains

International standards bodies include ISO and IEEE. ISO is the International Organization for Standardization, sometimes informally called the International Standards Organization. It is made up of members that each represent, for their country, the national body most relevant to the area being standardized. This international body has promoted a number of standards useful for software testers, such as ISO 9126 (being replaced by ISO 25000), ISO 12207 [ISO12207], and ISO 15504 [ISO15504].

IEEE is the Institute of Electrical and Electronics Engineers, a professional organization based in the U.S. with national representatives in more than one hundred countries. This organization has published a number of standards that are useful for software testers, such as IEEE 829 [IEEE829] and IEEE 1028 [IEEE1028].

Neeraj Kumar Singh

Test Management

![Managing the Application of Industry Standards](https://image.slidesharecdn.com/chapter2-210408044826/85/Chapter-2-Test-Management-86-320.jpg)

Many countries have their own national standards, some of which are useful for software testing. One example is the UK standard BS 7925-2 [BS7925-2], which provides information on many of the test design techniques described in the Advanced Test Analyst and Advanced Technical Test Analyst syllabi.

Some standards are specific to particular domains, and some of these have implications for software testing, software quality and software development. For example, in the avionics domain, the U.S. Federal Aviation Administration’s standard DO-178B (and its EU equivalent ED-12B) applies to software used in civilian aircraft. This standard prescribes certain levels of structural coverage based on the criticality of the software being tested.

Another example of a domain-specific standard is found in medical systems with the U.S. Food and Drug Administration’s Title 21 CFR Part 820 [FDA21]. This standard recommends certain structural and functional test techniques.

Three other important examples are PMI’s PMBOK, PRINCE2®, and ITIL®. PMBOK and PRINCE2 are commonly used project management frameworks in North America and Europe, respectively. ITIL is a framework for ensuring that an IT group delivers valuable services to the organization in which it exists.

Whatever standards or methodologies are being adopted, it is important to remember that they are created by groups of professionals. Standards reflect the source group’s collective experience and wisdom, but also its weaknesses. Test Managers should be aware of the standards that apply to their environment and context, whether formal standards (international, national or domain specific) or in-house standards and recommended practices.