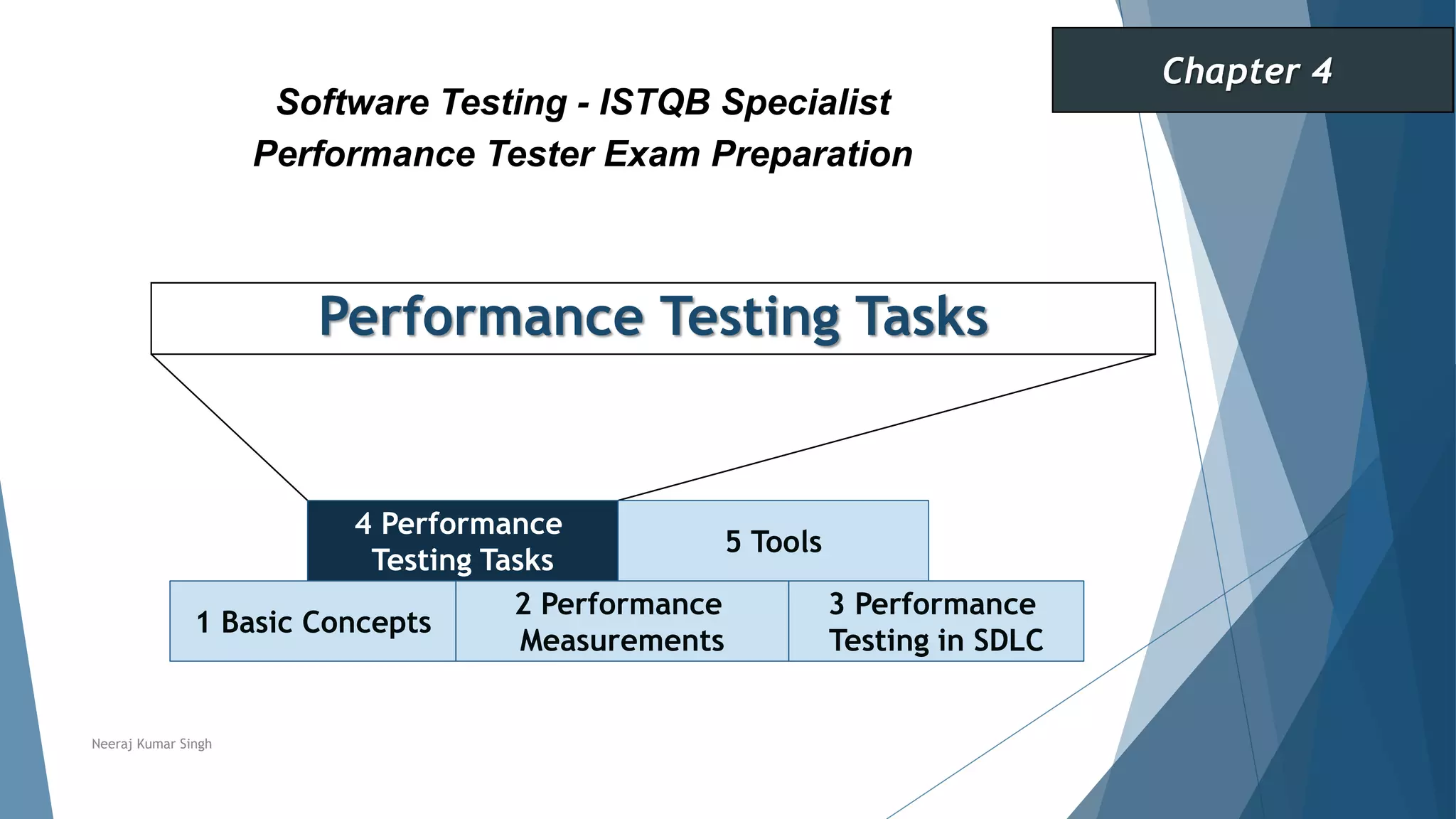

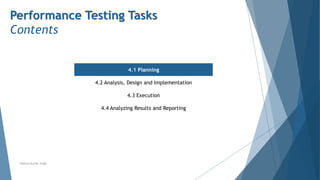

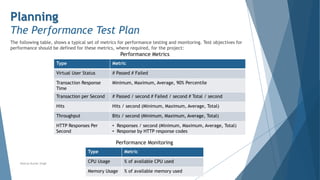

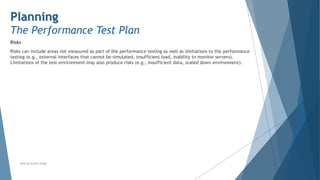

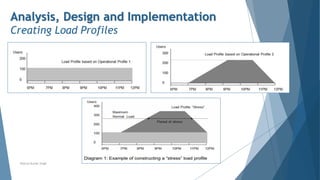

This document covers the tasks involved in performance testing, including stakeholder objectives, test planning, execution, and result analysis. It emphasizes the need for a structured performance test plan covering objectives, acceptance criteria, test data, and system configuration, and it addresses communication strategies for engaging different stakeholder groups. It also discusses creating operational profiles and load profiles that reflect user interactions and simulate real-world performance scenarios.

![Analysis, Design and Implementation

Analyzing Throughput and Concurrency

It is important to understand different aspects of workload: throughput and concurrency. To model operational and load profiles properly, both aspects should be taken into consideration.

System Throughput

System throughput is a measure of the number of transactions of a given type that the system processes in a unit of time, for example, the number of orders per hour or the number of HTTP requests per second. System throughput should be distinguished from network throughput, which is the amount of data moved over the network.

Workload modeling is usually done by considering the number of virtual users (execution threads) and the think time (delays between user actions). However, system throughput is also defined by processing time, and that time may increase as load increases.

System throughput = [number of virtual users] / ([processing time] + [think time])

So when the processing time increases, throughput may significantly decrease even if everything else stays the same.

System throughput is an important aspect when testing batch processing systems. In this case, the throughput is typically measured according to the number of transactions that can be accomplished within a given time frame (e.g., a nightly batch processing window).

Neeraj Kumar Singh](https://image.slidesharecdn.com/chapter4-performancetestingtasks-210803121844/85/Chapter-4-Performance-Testing-Tasks-27-320.jpg)
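
The throughput relation on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the choice of seconds as the time unit, and all numeric values are hypothetical and do not come from the slides.

```python
def system_throughput(virtual_users: int,
                      processing_time_s: float,
                      think_time_s: float) -> float:
    """Transactions per second, using the relation from the slide:
    throughput = virtual users / (processing time + think time)."""
    cycle_time_s = processing_time_s + think_time_s
    if cycle_time_s <= 0:
        raise ValueError("processing time plus think time must be positive")
    return virtual_users / cycle_time_s


def fits_batch_window(transactions: int,
                      throughput_per_s: float,
                      window_s: float) -> bool:
    """Hypothetical helper: can the batch finish inside the time window
    if the given throughput is sustained for the whole window?"""
    return transactions <= throughput_per_s * window_s


if __name__ == "__main__":
    # Assumed example values: 100 virtual users, 9 s think time.
    baseline = system_throughput(100, processing_time_s=1.0, think_time_s=9.0)
    degraded = system_throughput(100, processing_time_s=3.0, think_time_s=9.0)
    print(f"baseline: {baseline:.2f} tx/s, under load: {degraded:.2f} tx/s")

    # Assumed batch of 36,000 transactions in a one-hour window.
    print(fits_batch_window(36_000, baseline, window_s=3600))
    print(fits_batch_window(36_000, degraded, window_s=3600))
```

With the same number of virtual users and the same think time, tripling the assumed processing time lowers the computed throughput, which is the effect the slide describes. The batch helper shows how that drop could decide whether a fixed volume of work still fits its processing window.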