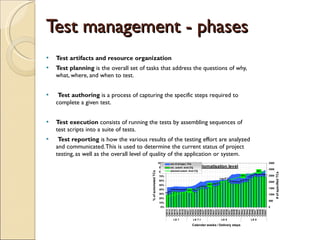

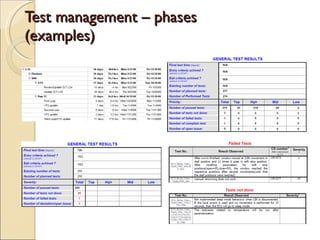

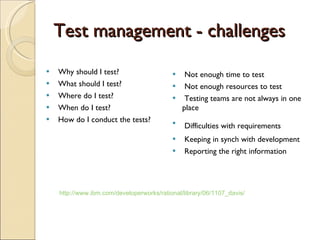

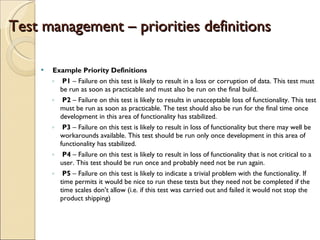

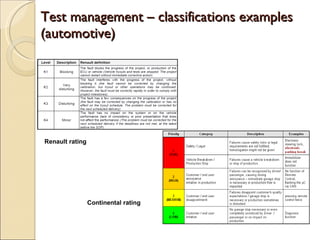

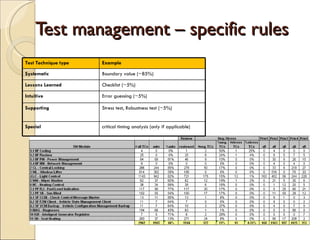

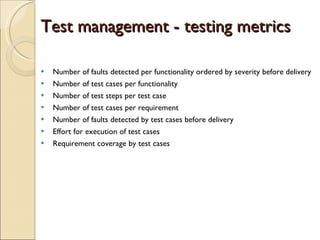

The document discusses test management in software quality assurance. It defines test management as organizing and controlling the testing process and its artifacts. The goals of test management are to plan, develop, execute, and assess testing activities within software development. The document describes the phases of test management: test planning, authoring, execution, and reporting. It also highlights challenges of test management, such as limited time and resources, and covers priorities and classifications for testing.