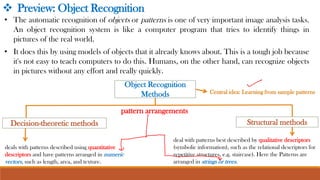

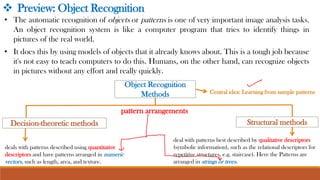

This document discusses various methods for object recognition in digital image processing. It begins by explaining the main steps of image processing, including low-, mid-, and high-level processing. It then defines object recognition as the task, performed by a computer program, of identifying objects in real-world images using models of known objects. Two common methods are described: decision-theoretic methods, which use quantitative descriptors arranged as numeric pattern vectors, and structural methods, which use qualitative descriptors such as strings and trees. Patterns, pattern classes, and the common pattern arrangements (numeric vectors, strings, and trees) are also defined. The document focuses on decision-theoretic methods and minimum distance classifiers, explaining decision functions, decision boundaries, and how an unknown pattern is assigned to the closest class.

❖ Patterns and Pattern Classes:

• A pattern is an arrangement of descriptors (or features).

• A pattern class is a family of patterns that share some common properties. Pattern classes are denoted $\omega_1, \omega_2, \dots, \omega_W$, where $W$ is the number of classes.

• Pattern recognition by machine involves techniques for assigning patterns to their respective classes automatically and with as little human intervention as possible.

• The object or pattern recognition task consists of two steps:

➢ feature selection (extraction)

➢ matching (classification)

There are three common pattern arrangements used in practice:

• Numeric pattern vectors (for quantitative descriptions):

$$
\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix}
$$

• Strings and trees (for qualitative, or structural, descriptions), for example the string

x = abababa…

![Slide: Patterns and Pattern Classes](https://image.slidesharecdn.com/chap10objectrecognition-231012112856-883d4e2c/85/Chap_10_Object_Recognition-pdf-5-320.jpg)
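The first step, feature extraction, turns an object into a numeric pattern vector. The sketch below is minimal and assumes the object has already been segmented into a binary mask. The function name `pattern_vector` and the three descriptors it uses (area, bounding-box height, and bounding-box width) are hypothetical choices for illustration. The slides do not prescribe them.

```python
from typing import List, Sequence


def pattern_vector(mask: Sequence[Sequence[int]]) -> List[float]:
    """Turn a binary mask (1 = object pixel, 0 = background) into a
    pattern vector x = [x1, x2, x3]^T of three hypothetical descriptors:
      x1 = area (number of object pixels)
      x2 = bounding-box height in pixels
      x3 = bounding-box width in pixels
    """
    # Indices of rows that contain at least one object pixel.
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return [0.0, 0.0, 0.0]  # empty mask: no object found
    # Indices of columns that contain at least one object pixel.
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]

    area = sum(sum(row) for row in mask)
    height = rows[-1] - rows[0] + 1
    width = cols[-1] - cols[0] + 1
    return [float(area), float(height), float(width)]


if __name__ == "__main__":
    mask = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 1],
        [0, 0, 0, 0],
    ]
    print(pattern_vector(mask))  # [5.0, 2.0, 3.0]
```

Other quantitative descriptors, such as perimeter or moments, could be appended in the same way. The matching (classification) step only sees the resulting vector.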

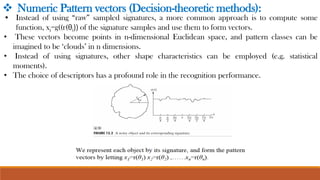

❖ Numeric Pattern Vectors (Decision-Theoretic Methods):

• In 1936, Fisher recognized three types of iris flowers (Iris setosa, versicolor, and virginica) by the lengths ($x_1$) and widths ($x_2$) of their petals.

• The three pattern classes $\omega_1$, $\omega_2$, and $\omega_3$ correspond to Iris setosa, versicolor, and virginica, respectively. The measurements vary both between classes and within each class.

• Class separability depends strongly on the choice of descriptors.

![Slide: Numeric Pattern Vectors (Decision-Theoretic Methods)](https://image.slidesharecdn.com/chap10objectrecognition-231012112856-883d4e2c/85/Chap_10_Object_Recognition-pdf-6-320.jpg)
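The last bullet can be made concrete with a simple separability score for a single descriptor. The score is the variance of the class means divided by the average within-class variance, a ratio in the spirit of Fisher's criterion. This measure is an assumption chosen for illustration. The numbers below are made up and are not taken from the Iris data.

```python
from statistics import mean, pvariance
from typing import Dict, List


def separability(samples: Dict[str, List[float]]) -> float:
    """Between-class variance of the class means divided by the average
    within-class variance, for one scalar descriptor. Larger values mean
    the classes overlap less along this descriptor."""
    class_means = [mean(values) for values in samples.values()]
    between = pvariance(class_means)
    within = mean(pvariance(values) for values in samples.values())
    if within == 0:
        return float("inf")  # every class collapses to a single value
    return between / within


if __name__ == "__main__":
    # Made-up descriptor values for classes w1, w2, w3 (NOT Fisher's data).
    descriptor_a = {"w1": [1.0, 1.2, 1.4], "w2": [4.0, 4.2, 4.4], "w3": [5.6, 5.8, 6.0]}
    descriptor_b = {"w1": [3.0, 3.4, 3.8], "w2": [2.6, 3.0, 3.4], "w3": [2.8, 3.2, 3.6]}
    print(f"descriptor A: {separability(descriptor_a):.2f}")
    print(f"descriptor B: {separability(descriptor_b):.2f}")
```

With these made-up values, descriptor A has widely spaced class means and tight classes. It therefore scores far higher than descriptor B, whose classes overlap. This is the sense in which separability depends on the choice of descriptors.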

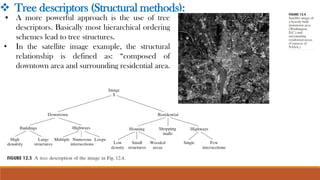

❖ Decision-Theoretic Methods:

• The basic concept in decision-theoretic methods is pattern matching based on measures of distance between pattern vectors. The approach relies on decision (discriminant) functions and decision boundaries.

• Let $\mathbf{x} = [x_1, x_2, \dots, x_n]^T$ represent a pattern vector.

• For $W$ pattern classes $\omega_1, \omega_2, \dots, \omega_W$, the basic problem is to find $W$ decision functions $d_1(\mathbf{x}), d_2(\mathbf{x}), \dots, d_W(\mathbf{x})$ with the property that, if $\mathbf{x}$ belongs to class $\omega_i$,

$$
d_i(\mathbf{x}) > d_j(\mathbf{x}) \quad \text{for } j = 1, 2, \dots, W;\ j \neq i
$$

• In other words, an unknown pattern $\mathbf{x}$ is assigned to the $i$th pattern class if, upon substituting $\mathbf{x}$ into all decision functions, $d_i(\mathbf{x})$ yields the largest numerical value. Given a finite set of classes, we classify $\mathbf{x}$ by evaluating every decision function at $\mathbf{x}$ and assigning $\mathbf{x}$ to the class of best fit.

![Slide: Decision-Theoretic Methods](https://image.slidesharecdn.com/chap10objectrecognition-231012112856-883d4e2c/85/Chap_10_Object_Recognition-pdf-13-320.jpg)
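The decision rule can be sketched minimally in Python. The slides do not fix the form of the $d_i$, so this example assumes the minimum-distance form $d_i(\mathbf{x}) = \mathbf{x}^T\mathbf{m}_i - \tfrac{1}{2}\mathbf{m}_i^T\mathbf{m}_i$, where $\mathbf{m}_i$ is the mean vector of class $\omega_i$. Because $-\tfrac{1}{2}\lVert\mathbf{x}-\mathbf{m}_i\rVert^2 = d_i(\mathbf{x}) - \tfrac{1}{2}\mathbf{x}^T\mathbf{x}$, picking the largest $d_i(\mathbf{x})$ is the same as picking the nearest class mean. The function names and the mean vectors are hypothetical.

```python
from typing import Callable, Dict, Sequence

Vector = Sequence[float]
DecisionFunction = Callable[[Vector], float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def minimum_distance_function(m: Vector) -> DecisionFunction:
    """Decision function d(x) = x^T m - (1/2) m^T m for a class with mean
    vector m. Maximizing it is equivalent to minimizing ||x - m||."""
    bias = 0.5 * dot(m, m)
    return lambda x: dot(x, m) - bias


def classify(x: Vector, functions: Dict[str, DecisionFunction]) -> str:
    """Substitute x into every d_i and return the class with the largest value."""
    return max(functions, key=lambda name: functions[name](x))


if __name__ == "__main__":
    # Hypothetical class mean vectors [petal length, petal width]; illustrative only.
    means = {"w1": [1.5, 0.3], "w2": [4.0, 1.3], "w3": [5.5, 2.0]}
    d = {name: minimum_distance_function(m) for name, m in means.items()}

    for x in ([1.4, 0.2], [4.2, 1.4], [6.0, 2.2]):
        print(x, "->", classify(x, d))
    # [1.4, 0.2] -> w1
    # [4.2, 1.4] -> w2
    # [6.0, 2.2] -> w3
```

The boundary between two classes lies where their decision functions tie, $d_i(\mathbf{x}) - d_j(\mathbf{x}) = 0$. For this form, the boundary is the perpendicular bisector of the line segment joining $\mathbf{m}_i$ and $\mathbf{m}_j$.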