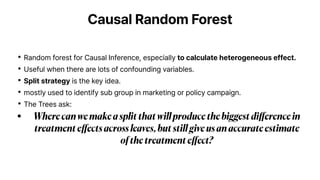

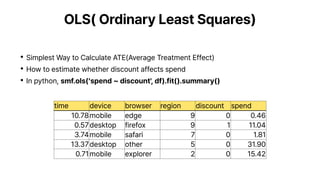

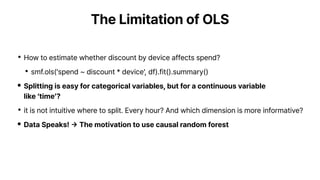

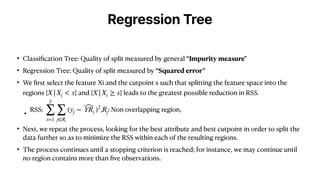

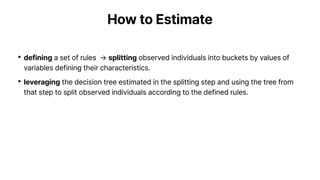

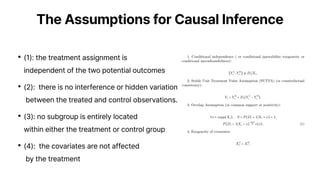

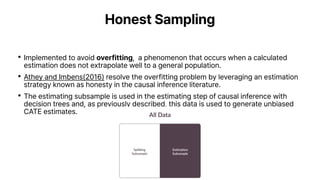

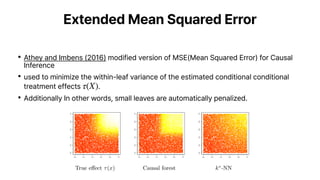

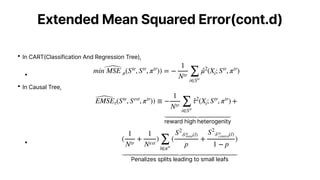

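Causal random forests estimate heterogeneous treatment effects by partitioning individuals into subgroups (leaves) defined by their covariate values and estimating the treatment effect within each leaf. Each tree chooses its splits to minimize an expected mean squared error (EMSE) of the treatment-effect estimates. This criterion rewards splits that produce large differences in effects between leaves, while penalizing the higher variance of estimates in small leaves. The trees are also *honest*: one subsample is used to choose the splits and a separate subsample is used to estimate the effect in each leaf, so the same outcomes never both define a subgroup and measure its effect. Honesty is what allows the forest to produce approximately unbiased estimates of conditional average treatment effects (CATEs) within subgroups. Compared with traditional methods such as propensity score matching, causal random forests do not require the analyst to specify in advance which covariates drive effect heterogeneity (they still rely on unconfoundedness and overlap), and they can help identify which subgroups experience the largest effects from a treatment or policy.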
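A minimal, dependency-free sketch of honest splitting and of averaging trees into a forest follows. It rests on several simplifying assumptions: a single covariate `x`, a randomized binary treatment `w`, depth-one trees (stumps), and a simplified split score (leaf share times the squared effect estimate minus its estimated variance). It does not use the exact EMSE criterion from published implementations such as the R package grf. All function names are hypothetical, and the simulated data has a true effect of 2 for `x > 0.5` and 0 otherwise.

```python
import random
from statistics import mean, variance

# Each unit is a tuple (x, w, y): one covariate, a 0/1 treatment flag, an outcome.

def leaf_stats(rows):
    """Difference-in-means effect estimate and its sampling variance for one leaf.
    Returns None if either arm has fewer than two units."""
    treated = [y for _, w, y in rows if w == 1]
    control = [y for _, w, y in rows if w == 0]
    if len(treated) < 2 or len(control) < 2:
        return None
    tau = mean(treated) - mean(control)
    var = variance(treated) / len(treated) + variance(control) / len(control)
    return tau, var

def choose_threshold(rows, n_candidates=20, min_leaf=50):
    """Pick a split on x using a SIMPLIFIED score (an assumption, not grf's rule):
    sum over leaves of share * (tau_hat^2 - var_hat). Squared effects reward
    heterogeneity between leaves; the variance term penalizes noisy small leaves."""
    xs = sorted(x for x, _, _ in rows)
    n = len(rows)
    best_t, best_score = None, float("-inf")
    for k in range(1, n_candidates):
        t = xs[k * n // n_candidates]  # candidate thresholds at quantiles of x
        left = [r for r in rows if r[0] <= t]
        right = [r for r in rows if r[0] > t]
        if len(left) < min_leaf or len(right) < min_leaf:
            continue
        stats = [leaf_stats(left), leaf_stats(right)]
        if None in stats:
            continue
        score = sum(len(leaf) / n * (tau ** 2 - var)
                    for leaf, (tau, var) in zip((left, right), stats))
        if score > best_score:
            best_t, best_score = t, score
    return best_t

def honest_stump(rows, rng):
    """Depth-one honest causal tree: one half chooses the split,
    the other half (never used for splitting) estimates the leaf effects."""
    shuffled = rows[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    split_half, est_half = shuffled[:half], shuffled[half:]
    t = choose_threshold(split_half)
    if t is None:
        return None
    left = leaf_stats([r for r in est_half if r[0] <= t])
    right = leaf_stats([r for r in est_half if r[0] > t])
    if left is None or right is None:
        return None
    return t, left[0], right[0]

def causal_forest(rows, n_trees=50, sample_frac=0.5, seed=0):
    """Fit honest stumps on random subsamples (drawn without replacement)."""
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        sub = rng.sample(rows, int(sample_frac * len(rows)))
        tree = honest_stump(sub, rng)
        if tree is not None:
            trees.append(tree)
    return trees

def predict_cate(trees, x):
    """Average the leaf effect that each tree assigns to covariate value x."""
    return mean(tau_l if x <= t else tau_r for t, tau_l, tau_r in trees)

def simulate(n, rng):
    """Randomized experiment: the true effect is 2 for x > 0.5 and 0 otherwise."""
    rows = []
    for _ in range(n):
        x = rng.random()
        w = 1 if rng.random() < 0.5 else 0
        tau = 2.0 if x > 0.5 else 0.0
        rows.append((x, w, x + tau * w + rng.gauss(0, 1)))
    return rows

data = simulate(4000, random.Random(1))
forest = causal_forest(data)
print(round(predict_cate(forest, 0.2), 2), round(predict_cate(forest, 0.8), 2))
```

With this setup the estimate at `x = 0.2` should land near 0 and the estimate at `x = 0.8` near 2, with the exact values depending on the seeds. The shuffle-and-halve step in `honest_stump` is what makes each tree honest: the leaf effects come from units that played no part in choosing the threshold `t`.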