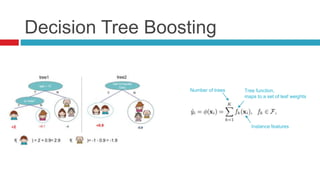

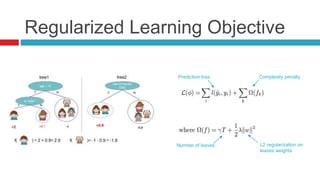

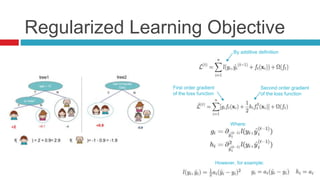

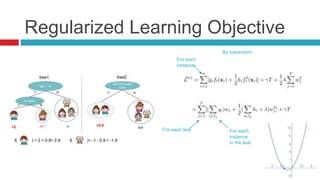

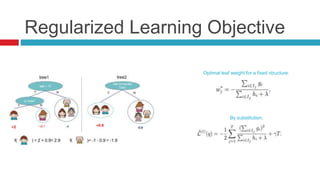

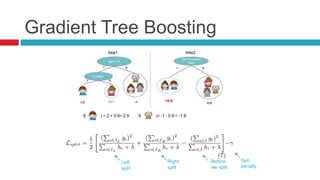

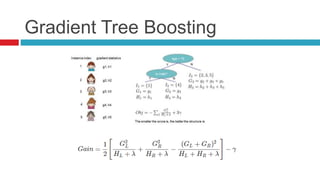

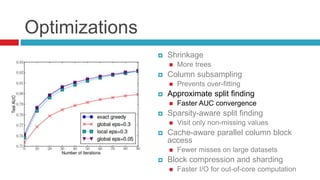

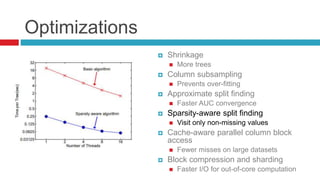

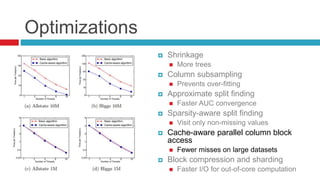

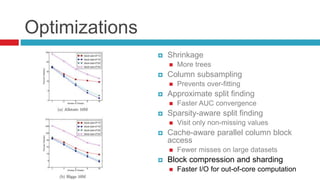

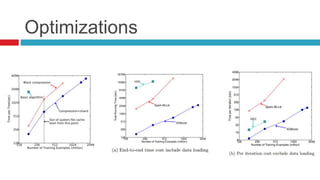

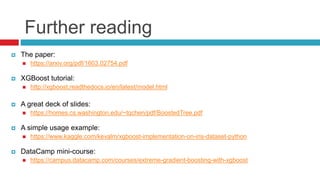

XGBoost is a widely used, scalable tree boosting system that performs well on classification, regression, and learning-to-rank tasks, often outperforming competing methods. It builds an ensemble of decision trees by gradient boosting and adds optimizations such as column subsampling, which helps reduce overfitting, and approximate split finding, which keeps training fast on large datasets. Further resources and tutorials are available to aid in understanding and implementation.
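
To make the two optimizations named above more concrete, here is a minimal pure-Python sketch of one split search. It is an illustration under stated assumptions, not XGBoost's actual implementation: the function names (`split_gain`, `quantile_candidates`, `best_approx_split`), the toy data, and the use of plain rank-based quantiles instead of a weighted quantile sketch are all hypothetical choices made for this example. It assumes squared-error loss with an initial prediction of 0, so each row's gradient is `-y` and its hessian is `1`.

```python
import random


def split_gain(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Gain of a split from summed gradients (g) and hessians (h)."""
    def score(g, h):
        return g * g / (h + lam)

    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - gamma


def quantile_candidates(values, n_bins):
    """Approximate split finding: propose thresholds at evenly spaced ranks
    instead of trying every distinct value (simplified, unweighted)."""
    ordered = sorted(values)
    n = len(ordered)
    return sorted({ordered[(k * n) // n_bins] for k in range(1, n_bins)})


def best_approx_split(rows, grads, hess, n_bins=4, colsample=1.0, lam=1.0, seed=0):
    """Return (gain, feature_index, threshold) of the best candidate split."""
    rng = random.Random(seed)
    n_features = len(rows[0])
    # Column subsampling: only a random subset of features is considered.
    k = max(1, round(colsample * n_features))
    features = sorted(rng.sample(range(n_features), k))

    best = (0.0, None, None)
    for j in features:
        column = [row[j] for row in rows]
        for t in quantile_candidates(column, n_bins):
            g_left = h_left = g_right = h_right = 0.0
            for x, g, h in zip(column, grads, hess):
                if x < t:
                    g_left += g
                    h_left += h
                else:
                    g_right += g
                    h_right += h
            if h_left == 0 or h_right == 0:
                continue  # skip splits that leave one side empty
            gain = split_gain(g_left, h_left, g_right, h_right, lam)
            if gain > best[0]:
                best = (gain, j, t)
    return best


# Toy data: feature 0 is uninformative, feature 1 determines the label.
rows = [((i % 7) / 7.0, i / 20.0) for i in range(20)]
labels = [1.0 if row[1] >= 0.5 else 0.0 for row in rows]
grads = [-y for y in labels]   # squared error, initial prediction 0
hess = [1.0] * len(rows)

gain, feature, threshold = best_approx_split(rows, grads, hess, n_bins=4)
print(feature, threshold)  # picks feature 1 at threshold 0.5 on this toy data
```

With `n_bins=4`, only three thresholds per feature are evaluated rather than every distinct value, which is the trade-off approximate split finding makes. Lowering `colsample` below `1.0` restricts the search to a random subset of features. In the XGBoost library itself, the corresponding settings are the `colsample_bytree` and `tree_method` parameters.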