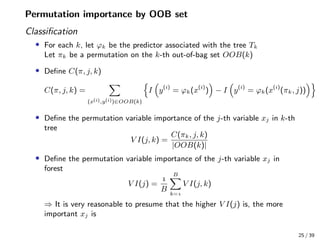

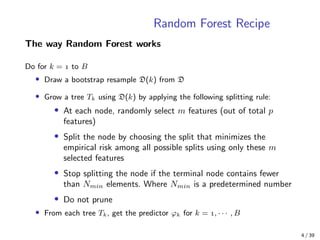

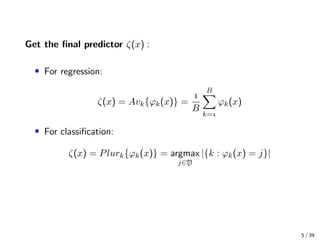

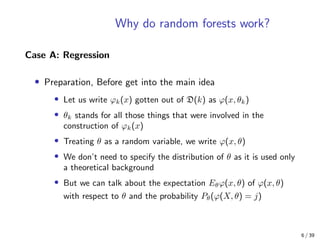

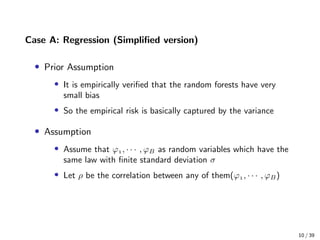

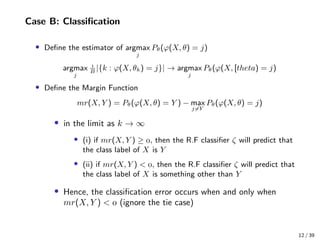

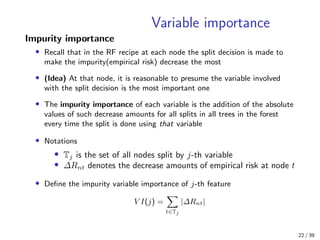

The document provides an overview of random forests, including the random forest recipe, why random forests work, and ramifications of random forests. The random forest recipe involves drawing bootstrap samples to grow trees, randomly selecting features at each split, and aggregating predictions. Random forests work by decorrelating trees, which reduces variance and leads to lower prediction error compared to individual trees. Ramifications discussed include using out-of-bag samples to estimate generalization error and calculating variable importance.

![• Define an estimator of Eθϕ(x, θ)

AVkϕk(x) → Eθϕ(x, θ), (AVkϕk(x) =

B

B

k= ϕ(x, θk))

• Define the Prediction Error(risk) of the forest

PE∗

(forest) = E|Y − Eθϕ(Y, θ)|

, (E stands for EX,Y )

• Define the average(expected) Prediction Error of individual tree

PE∗

(tree) = EθE|Y − ϕ(X, θ)|

• Assume the Unbiasedness of forest and tree(strong assumption)

E[Y − Eθϕ(x, θ)] = , E[Y − ϕ(X, θ)] = , ∀θ

7 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-7-320.jpg)

![• Express PE∗

(forest) using Covariance

PE∗

(forest)= E|Y − Eθϕ(X, θ)|

= E|Eθ[Y − ϕ(X, θ)]|

, ( Y is independent from θ)

= E{Eθ[Y − ϕ(X, θ)] · Eθ [Y − ϕ(X, θ )]}

= EEθ,θ (Y − ϕ(X, θ)) · (Y − ϕ(X, θ ))

= Eθ,θ E(Y − ϕ(X, θ)) · (Y − ϕ(X, θ ))

= Eθ,θ Cov Y − ϕ(X, θ), Y − ϕ(X, θ )

Where θ, θ are independent with the same distribution

• Using the covariance-correlation formula

Cov Y − ϕ(x, θ), Y − ϕ(x, θ )

= ρ Y − ϕ(x, θ), Y − ϕ(x, θ ) · std (Y − ϕ(x, θ)) · std Y − ϕ(x, θ )

= ρ(θ, θ )sd(θ)sd(θ )

Where ρ(θ, θ ) is the correlation between Y − ϕ(x, θ) and Y − ϕ(x, θ ),

sd(θ) denotes the standard deviation of Y − ϕ(x, θ)

8 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-8-320.jpg)

![• Express PE∗

(forest) using correlation

PE∗

(forest) = Eθ,θ {ρ(θ, θ )sd(θ)sd(θ )}

• Define the weighted average correlation ¯ρ

¯ρ =

Eθ,θ {ρ(θ, θ )sd(θ)sd(θ )}

Eθsd(θ)Eθ sd(θ )

• Show the inequality between PE∗

(forest) and PE∗

(tree) by the

property of Variance

PE∗

(forest) = ¯ρ(Eθsd(θ))

≤ ¯ρEθ(sd(θ))

= ¯ρEθE|Y − ϕ(X, θ)|

, ( E[Y − ϕ(X, θ)] = , ∀θ)

= ¯ρPE∗

(tree)

• What this inequality means

”the smaller the correlation, the smaller the prediction error.”

⇒ This shows why Random forest limits the number of variables m d

at each node

because it’s reasonable to assume that two sets of features that have not

much overlap between them should in general have a small correlation

9 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-9-320.jpg)

![• Define the Prediction Error of forest classifier using mr(X, Y )

PE∗

= P(mr(X, Y ) < )

• Define the mean(expectation) s of mr(X, Y )

s = E[mr(X, Y )]

s represents the strength of the individual classifiers in the forest

• (Lemma 1) Let U be a random variable and let s be any positive

number. Then

Prob[U < ] ≤

E|U − s|

s

proof: by the Chebyshev inequality

• Express the inequality of Prediction Error by Lemma 1

PE∗

≤

V ar(mr)

s

, (assume s > )

13 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-13-320.jpg)

![• Define ˆj(X, Y ) for simple notation

ˆj(X, Y ) = argmax

j=Y

Pθ(ϕ(x, θ) = j)

• Express mr(X, Y ) using ˆj(X, Y )

mr(X, Y )= Pθ[ϕ(X, θ) = Y ] − Pθ[ϕ(X, θ) = ˆj(X, Y )]

= Eθ[I(ϕ(X, θ) = Y ) − I(ϕ(X, θ) = ˆj(X, Y ))]

• Define rmg(θ, X, Y ) for simple notation

rmg(θ, X, Y ) = I(ϕ(X, θ) = Y ) − I(ϕ(X, θ) = ˆj(X, Y ))

• Hence we can express mr(X, Y ) using rmg(θ, X, Y )

mr(X, Y ) = Eθ[rmg(θ, X, Y )]

14 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-14-320.jpg)

![• Express V ar(mr) by Covariance

V ar(mr)

= E(Eθrmg(θ, X, Y ))

− (EEθrmg(θ, X, Y ))

= EEθrmg(θ, X, Y )Eθ rmg(θ , X, Y ) − EEθrmg(θ, X, Y )EEθ rmg(θ , X, Y )

= EEθ,θ {rmg(θ, X, Y )rmg(θ , X, Y )} − EθErmg(θ, X, Y )Eθ Ermg(θ , X, Y )

= Eθ,θ {E[rmg(θ, X, Y )rmg(θ , X, Y )] − Ermg(θ, X, Y )Ermg(θ , X, Y )}

= Eθ,θ Cov rmg(θ, X, Y ), rmg(θ , X, Y )

• Using the Covariance - Correlation formula

Cov rmg(θ, X, Y ), rmg(θ , X, Y )

= ρ rmg(θ, X, Y ), rmg(θ , X, Y ) · std(rmg(θ, X, Y ) · std(rmg(θ , X, Y ))

= ρ(θ, θ )sd(θ)sd(θ )

Where ρ(θ, θ ) is the correlation between rmg(θ, X, Y ) and rmg(θ , X, Y ),

sd(θ) is the standard deviation of rmg(θ, X, Y )

15 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-15-320.jpg)

![• Express V ar(mr) using correlation

V ar(mr) = Eθ,θ [ρ(θ, θ )sd(θ)sd(θ )]

• Define the weighted average correlation ¯ρ of rmg(θ, X, Y ) and

rmg(θ , X, Y )

¯ρ =

Eθ,θ [ρ(θ, θ )sd(θ)sd(θ )]

Eθ,θ [sd(θ)sd(θ )]

16 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-16-320.jpg)

![• Show the inequality of V ar(mr) using the fact that |¯ρ| ≤ 1 and

sd(θ) = sd(θ )

V ar(mr) = ¯ρ{Eθsd(θ)}

≤ ¯ρEθ[sd(θ)]

• Unfold Eθ[sd(θ)]

Eθ[sd(θ)]

= EθE[rmg(θ, X, Y )]

− Eθ[Ermg(θ, X, Y )]

• Show the inequality of s by ...

s = E(mr(X, Y )) = EEθrmg(θ, X, Y ) = EθErmg(θ, X, Y )

s

= {EθErmg(θ, X, Y )}

≤ Eθ[Ermg(θ, X, Y )]

• Therefore

Eθ[sd(θ)]

≤ EθE[rmg(θ, X, Y )]

− s

• Show the inequality of EθE[rmg(θ, X, Y )]

EθE[rmg(θ, X, Y )]

≤

( rmg(θ, X, Y ) = I(something) − I(somethingelse),

Which can only take 0 or ±1 for its value)

17 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-17-320.jpg)

![• Show the inequality of Prediction Error

PE∗

≤ ¯ρ

− s

s

• Above inequality can be proven by the inequalities we’ve shown before

1

PE∗

≤

V ar(mr)

s

2

V ar(mr) = ¯ρ{Eθsd(θ)}

≤ ¯ρEθ[sd(θ)]

3

Eθ[sd(θ)]

≤ EθE[rmg(θ, X, Y )]

− s

4

EθE[rmg(θ, X, Y )]

≤

• What this inequality means

”the smaller the correlatoin, the smaller the prediction error”

18 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-18-320.jpg)

![Permutation importance

• The permutation importance assumes that the data value associated with

an important variable must have a certain structural relevance to the

problem

• (Idea) So that the effect of a random shuffling (permutation) of its data

value must be reflected in the decrease in accuracy of the resulting

predictor

• Notations

• D = {x()

, · · · , x(n)

}

• x(i)

= [x

(i)

, · · · , x

(i)

p ]T

• Write D in matrix form

D =

x · · · xj · · · xp

...

...

...

xi · · · xij · · · xip

...

...

...

xn · · · xnj · · · xnp

in which xij = x

(i)

j for all i = , · · · , n and j = , · · · , p

23 / 39](https://image.slidesharecdn.com/randomforests3-200926060727/85/Random-Forest-23-320.jpg)