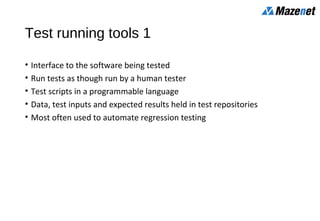

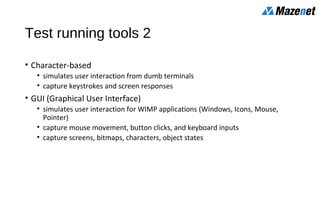

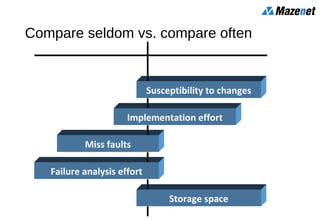

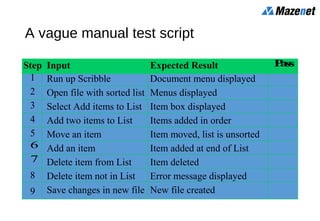

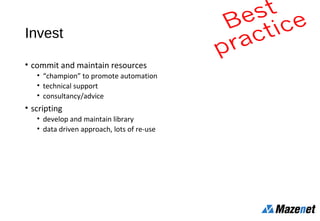

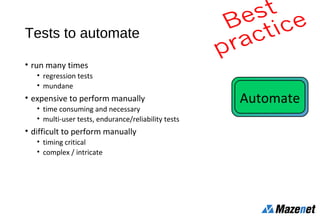

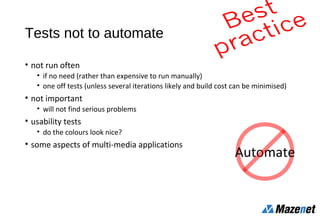

The document outlines the types of tool support available for software testing, grouped into requirements testing, static analysis, test design, test data preparation, test running, and test management tools. It stresses the value of automation in testing while noting the challenges and maintenance effort it brings, cautions against over-automation, and recommends best practices for tool adoption. Key points include the need for planning, careful selection of which tests to automate, and ongoing management to keep maintenance costs from becoming excessive.