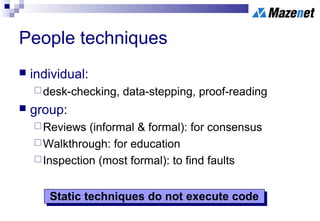

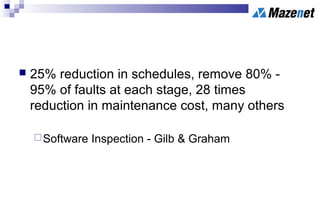

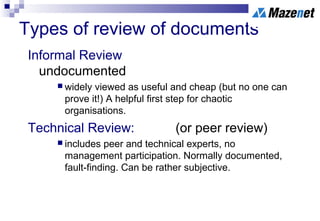

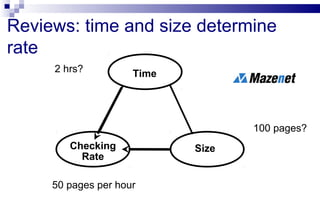

The document discusses static testing and review techniques in software development, emphasizing how review types such as informal reviews, technical reviews, and inspections improve quality and reduce faults. It outlines the roles, costs, and processes involved in conducting reviews and highlights the value of static analysis for identifying code faults without executing the code. Key points include the importance of structured reviews and the effect of static techniques on development productivity and cost-effectiveness.
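
To make the idea of finding faults without execution concrete, here is a minimal sketch of one static check, written in Python with the standard-library `ast` module. It is not taken from the document: the sample `SOURCE` and the helper `find_mutable_defaults` are hypothetical, and the check (flagging mutable default arguments) is only one illustrative fault pattern a static analyzer might report.

```python
import ast

# Hypothetical sample code to analyze; it is parsed, never executed.
SOURCE = '''
def append_item(item, bucket=[]):
    bucket.append(item)
    return bucket

def safe_append(item, bucket=None):
    return (bucket or []) + [item]
'''


def find_mutable_defaults(source):
    """Return (function name, line number) for each list/dict/set default argument."""
    findings = []
    tree = ast.parse(source)  # builds a syntax tree without running the code
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Positional defaults plus keyword-only defaults (None means "no default").
            defaults = list(node.args.defaults)
            defaults += [d for d in node.args.kw_defaults if d is not None]
            for default in defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append((node.name, default.lineno))
    return findings


print(find_mutable_defaults(SOURCE))  # → [('append_item', 2)]
```

The check reports `append_item` because its default list is shared across calls, a fault that testing may only reveal on a second call, whereas the static check sees it directly in the source text.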