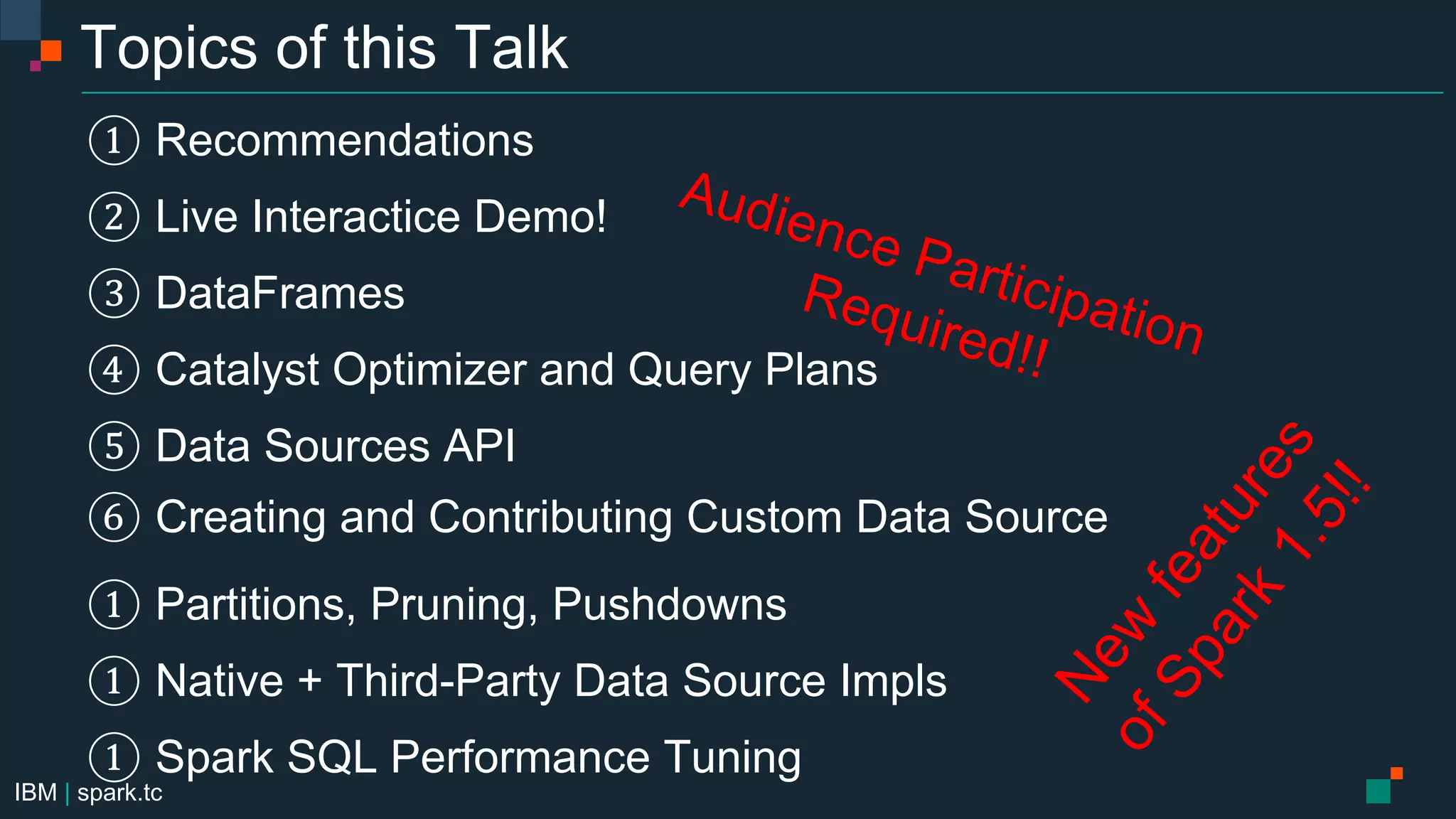

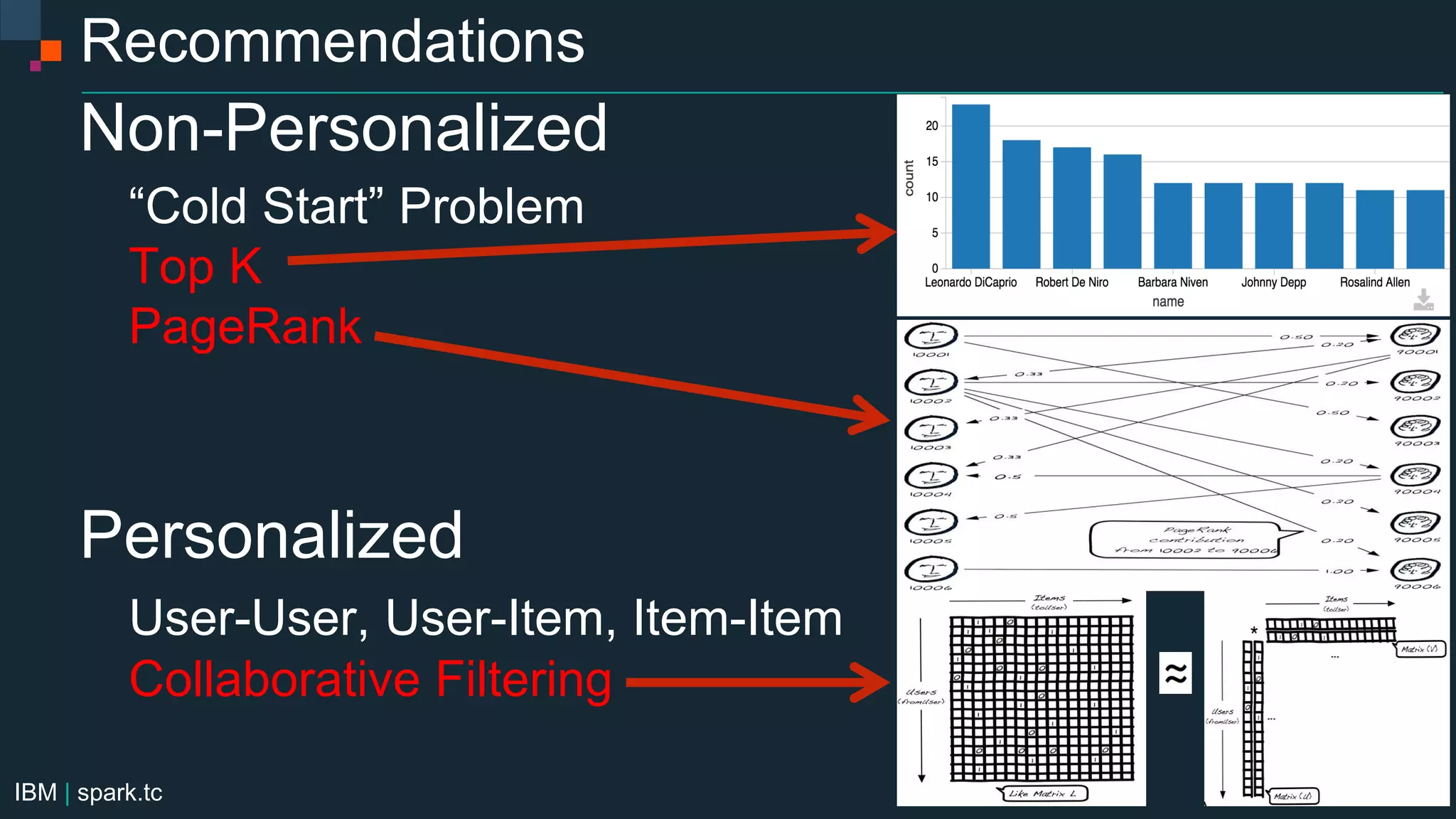

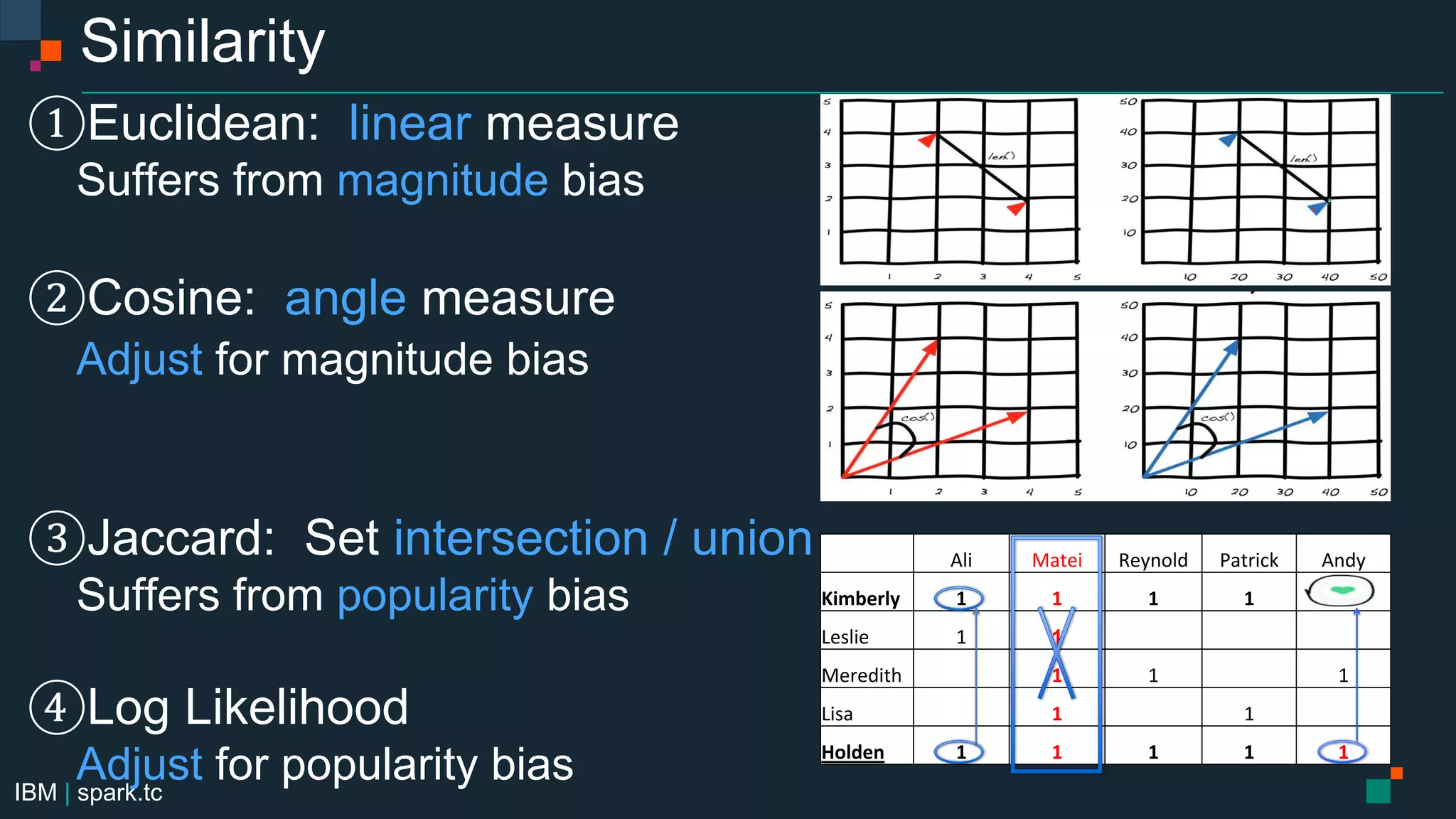

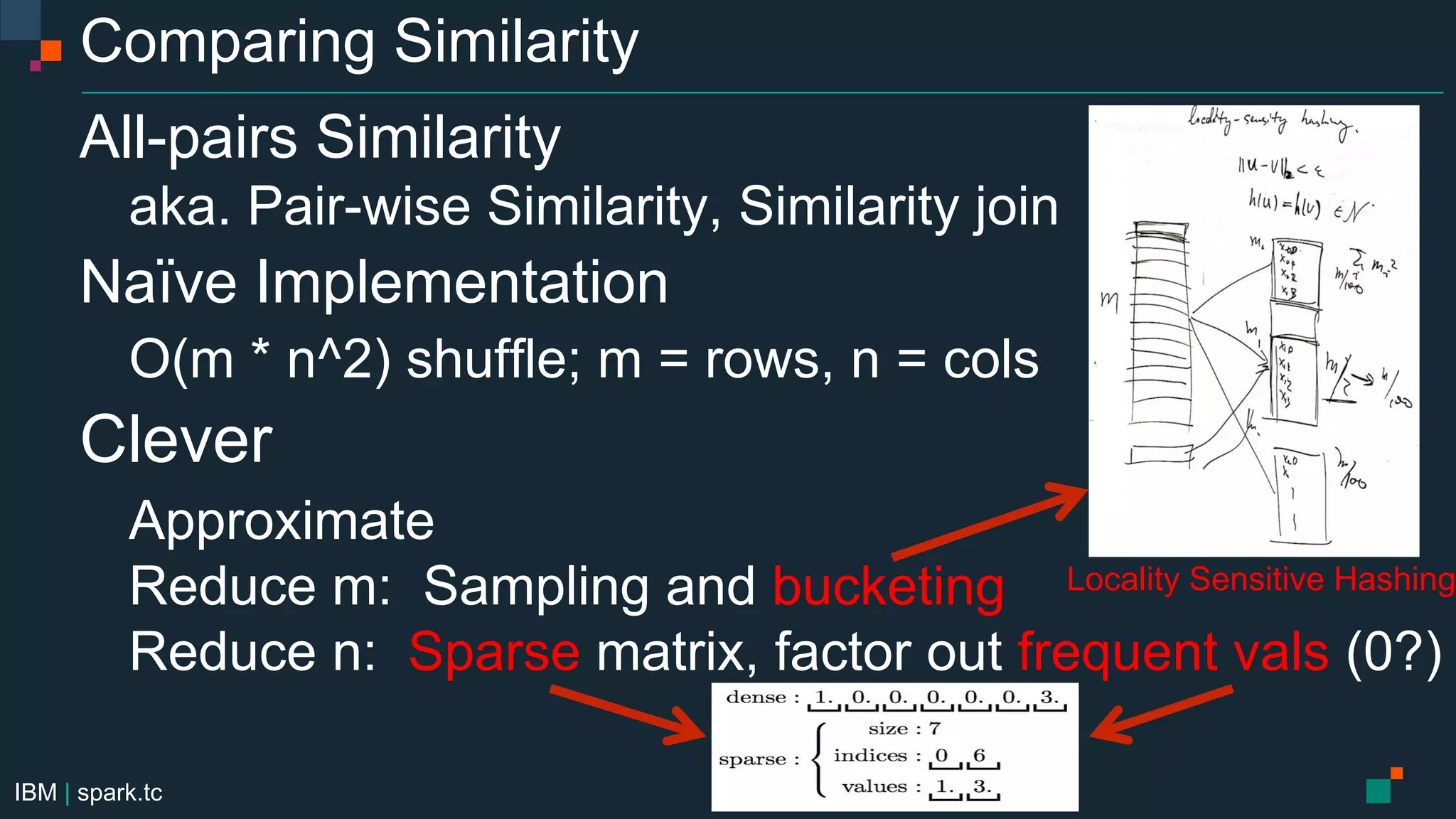

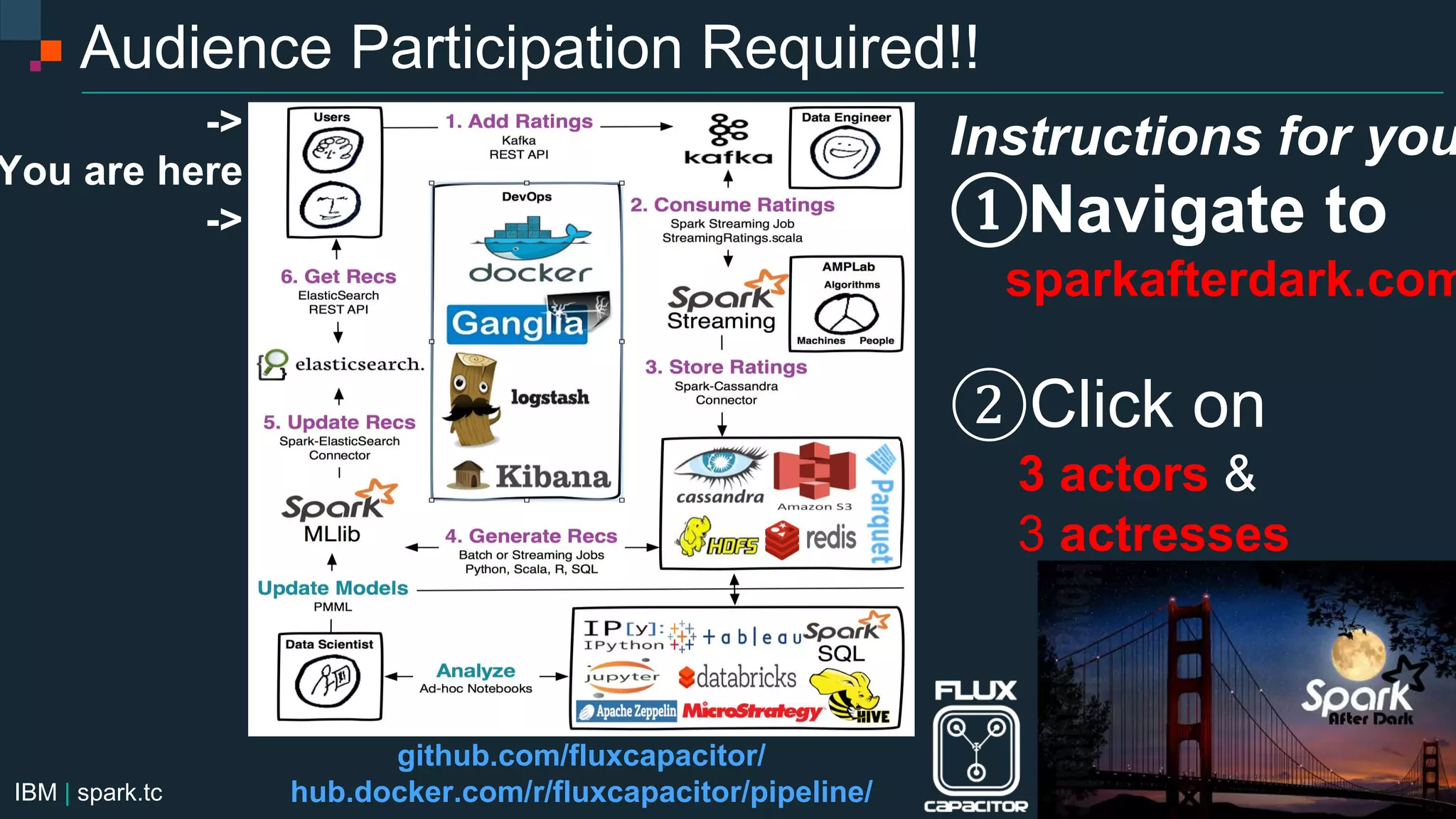

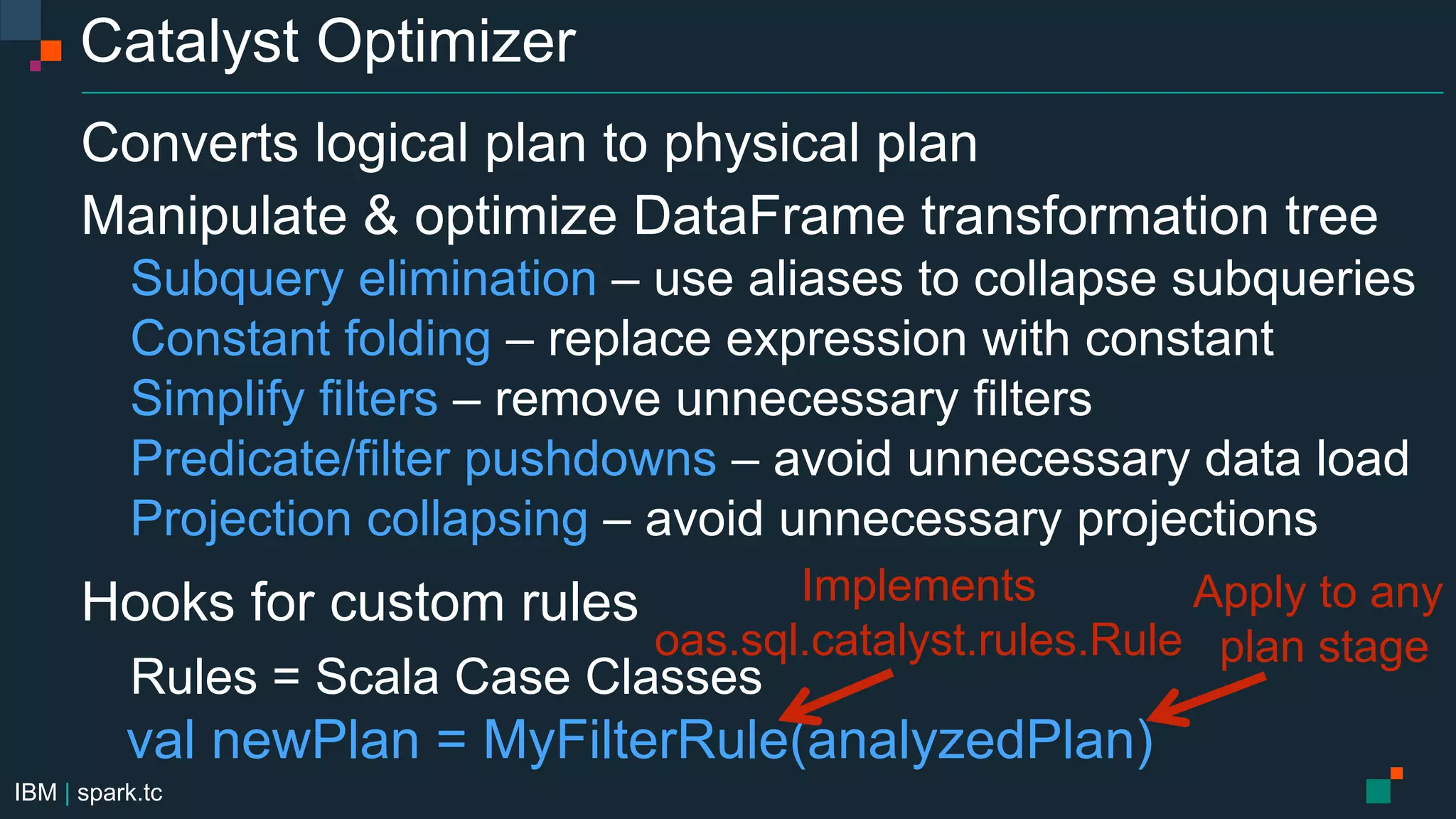

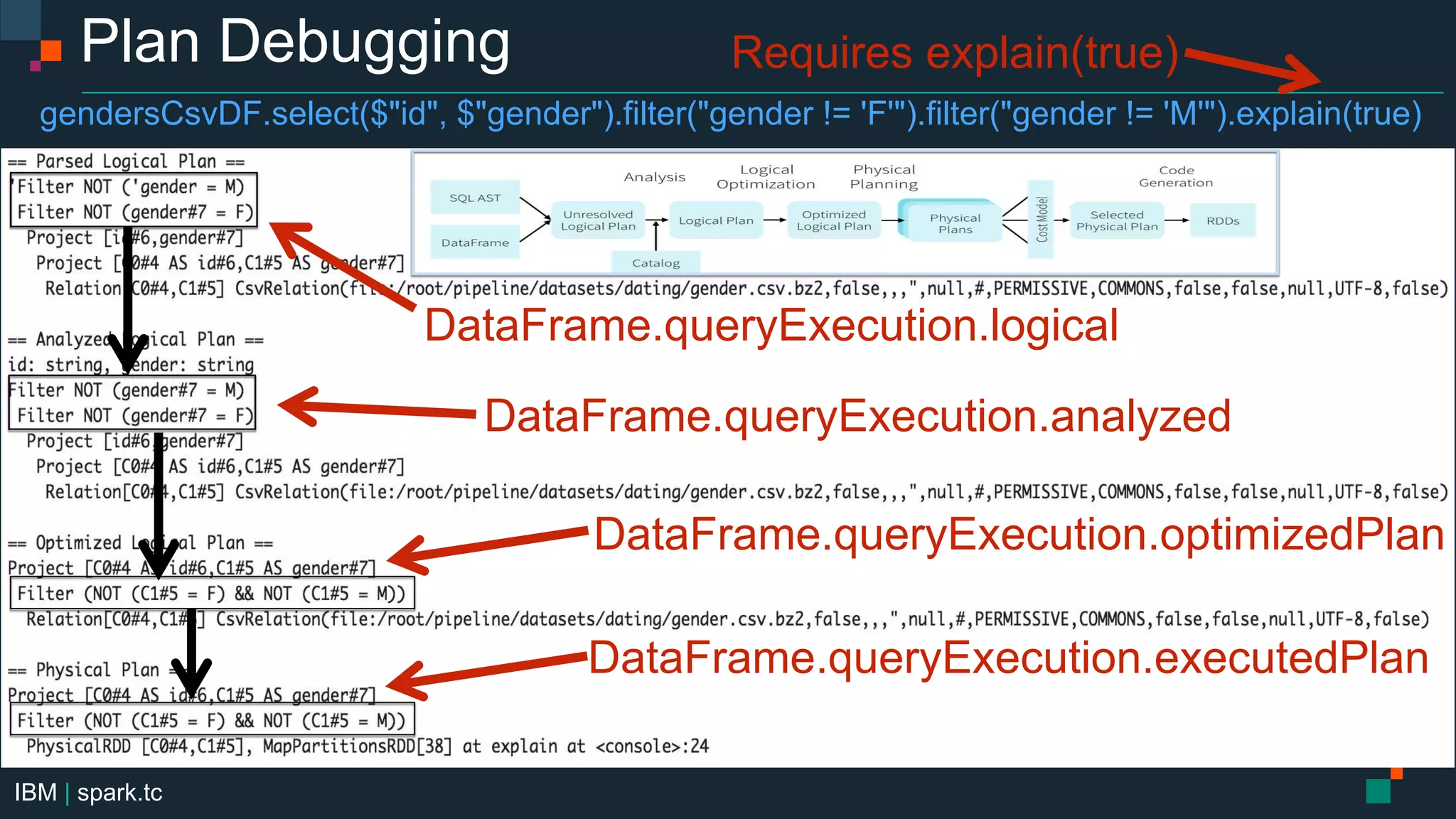

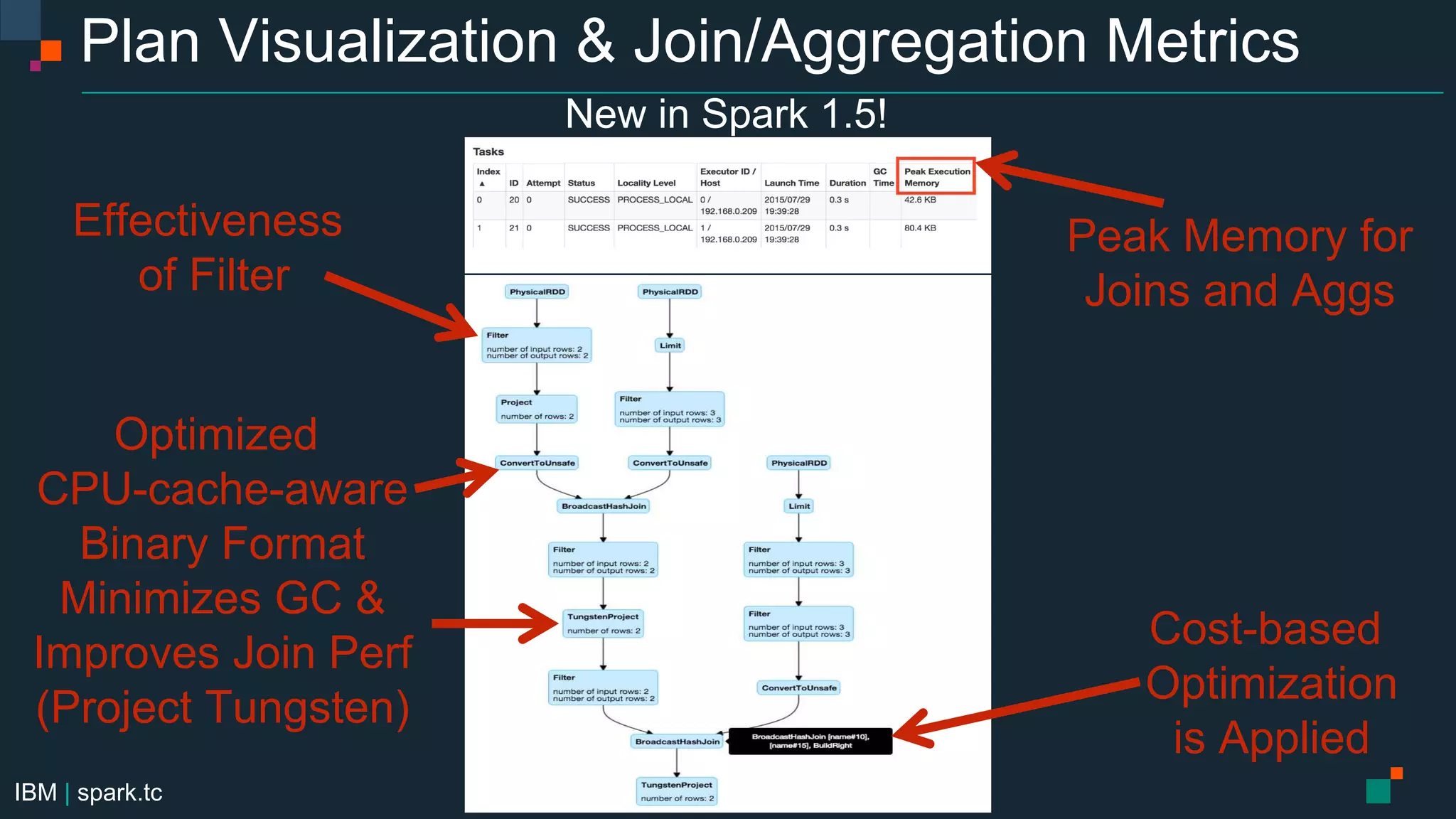

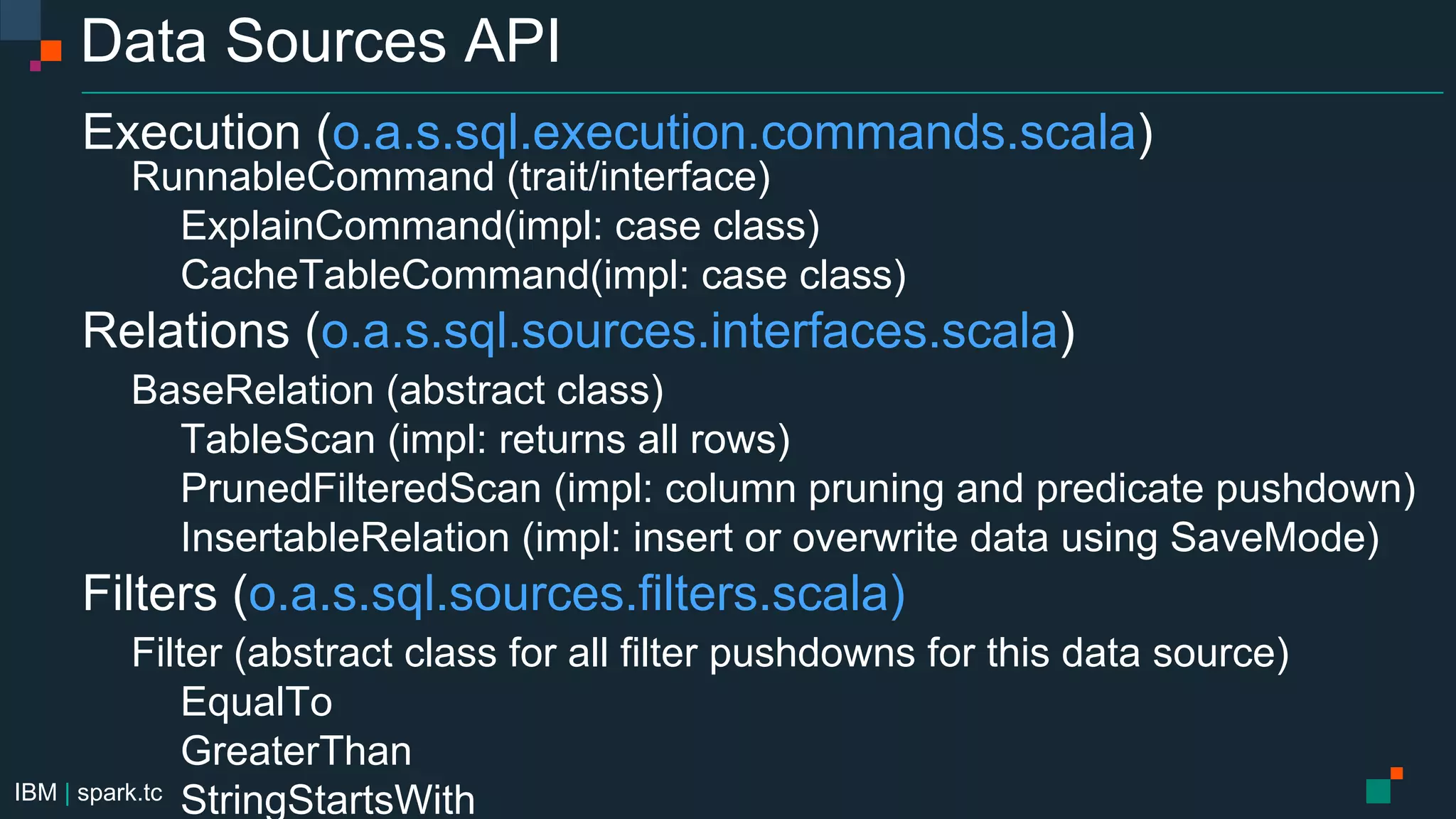

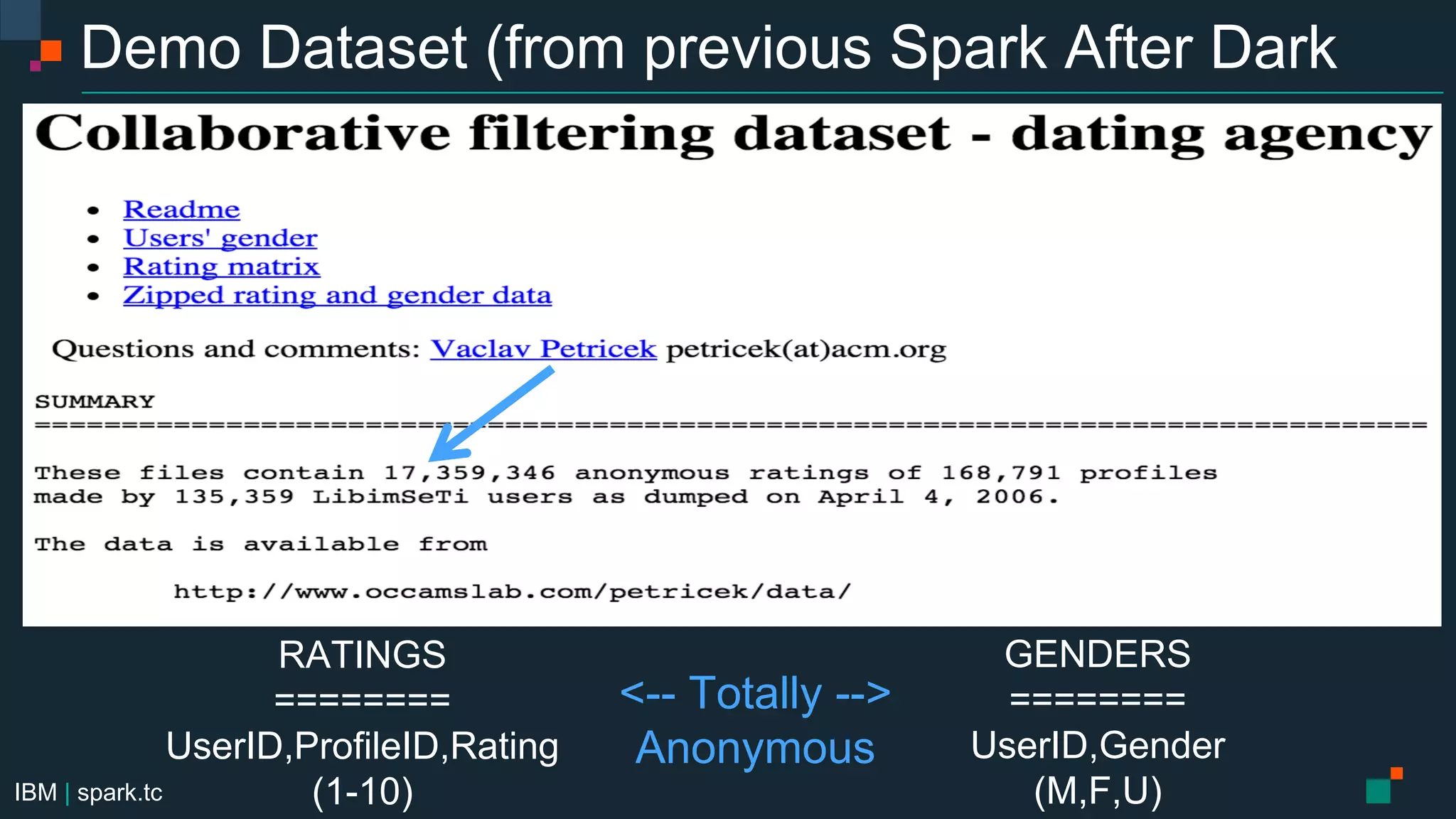

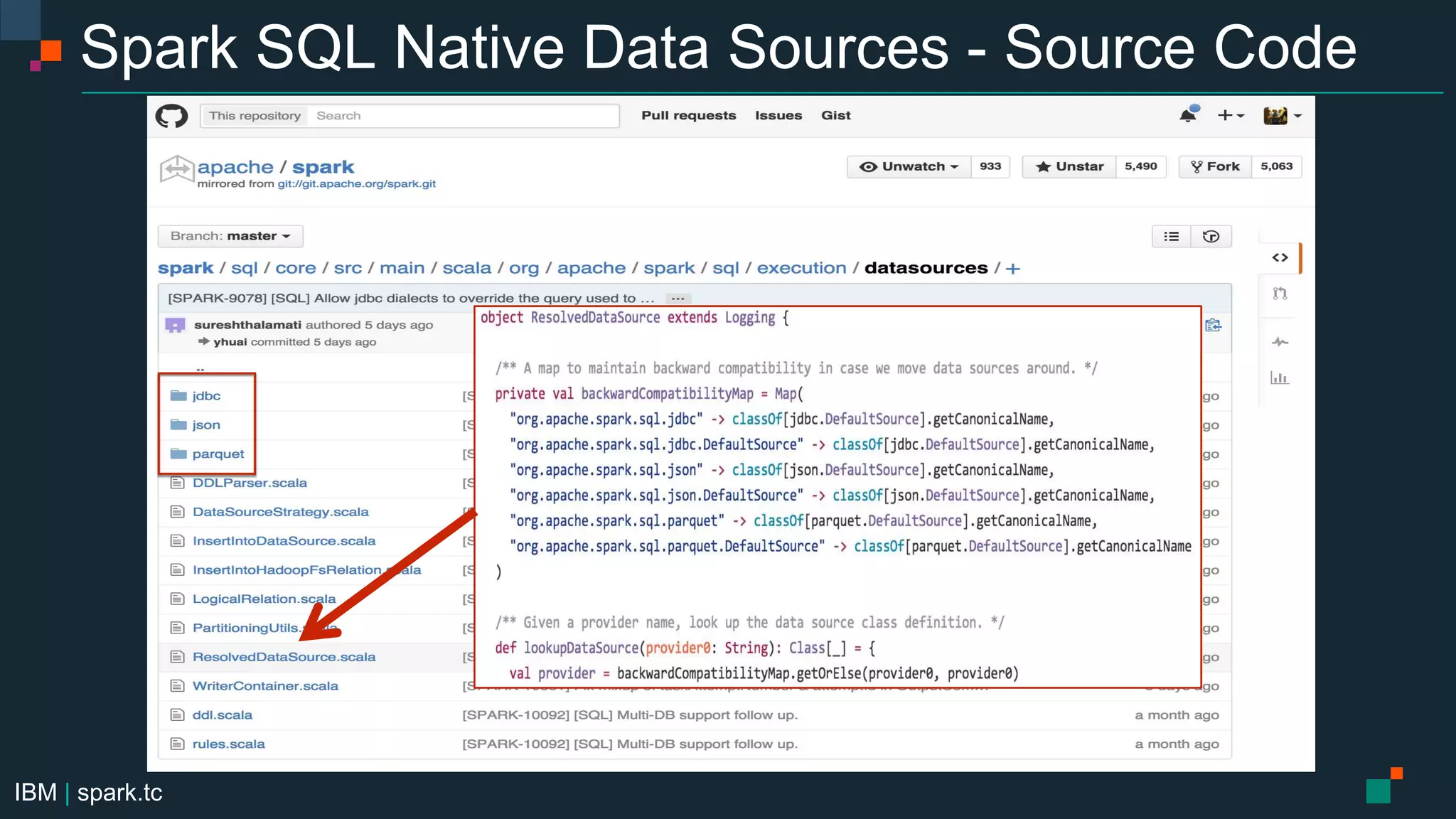

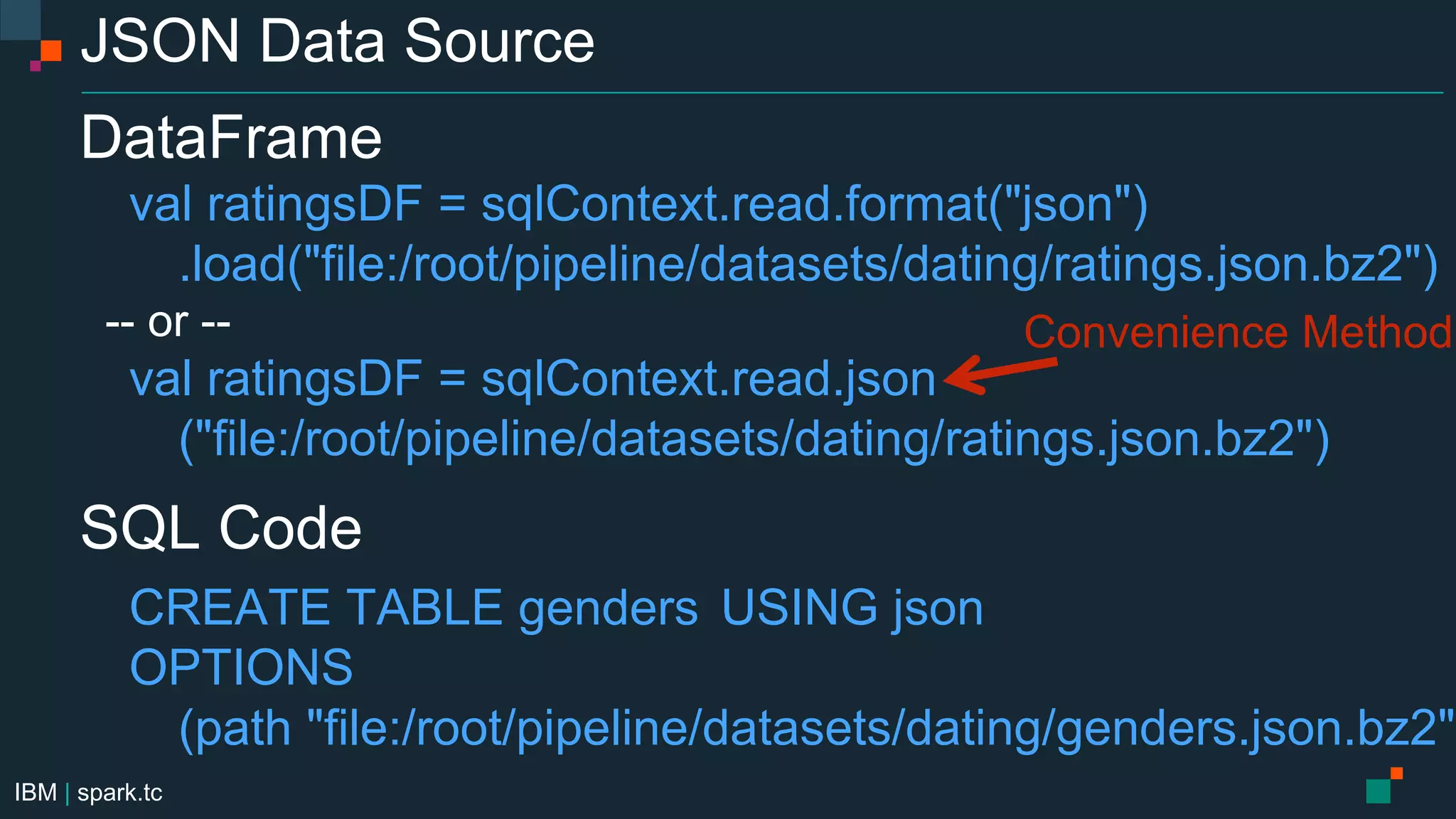

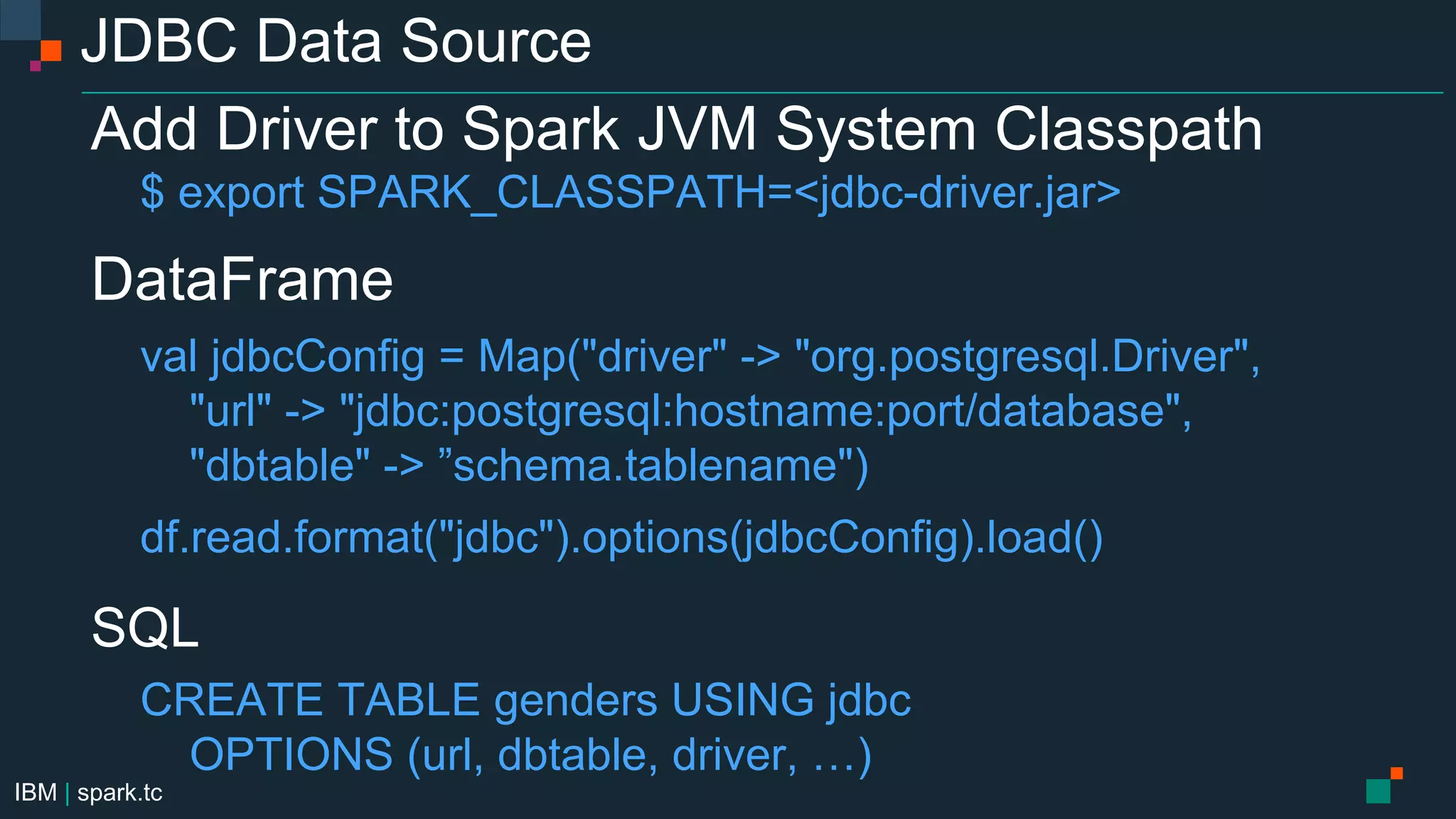

The document presents a talk by Chris Fregly from IBM about real-time advanced analytics with Apache Spark and Cassandra, covering various topics such as recommendations, dataframes, and the Catalyst optimizer. It includes details about meetups, audience interaction, and a live demo for generating dating recommendations using machine learning techniques. Additionally, it discusses performance tuning techniques and provides information on creating custom data sources and integrating with various databases.

![IBM | spark.tc

Parquet Data Source

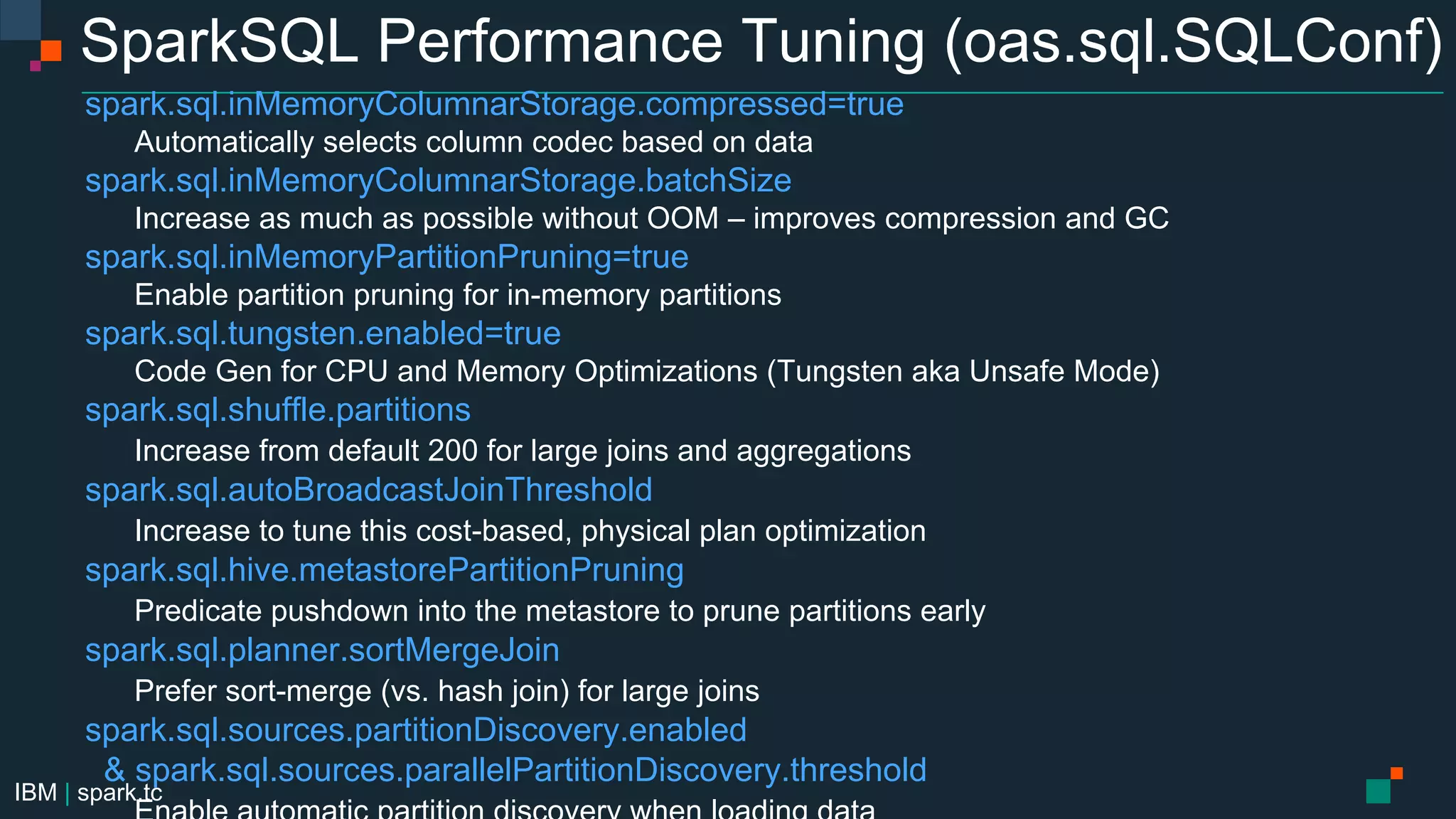

Configuration

spark.sql.parquet.filterPushdown=true

spark.sql.parquet.mergeSchema=true

spark.sql.parquet.cacheMetadata=true

spark.sql.parquet.compression.codec=[uncompressed,snappy,gzip,lzo]

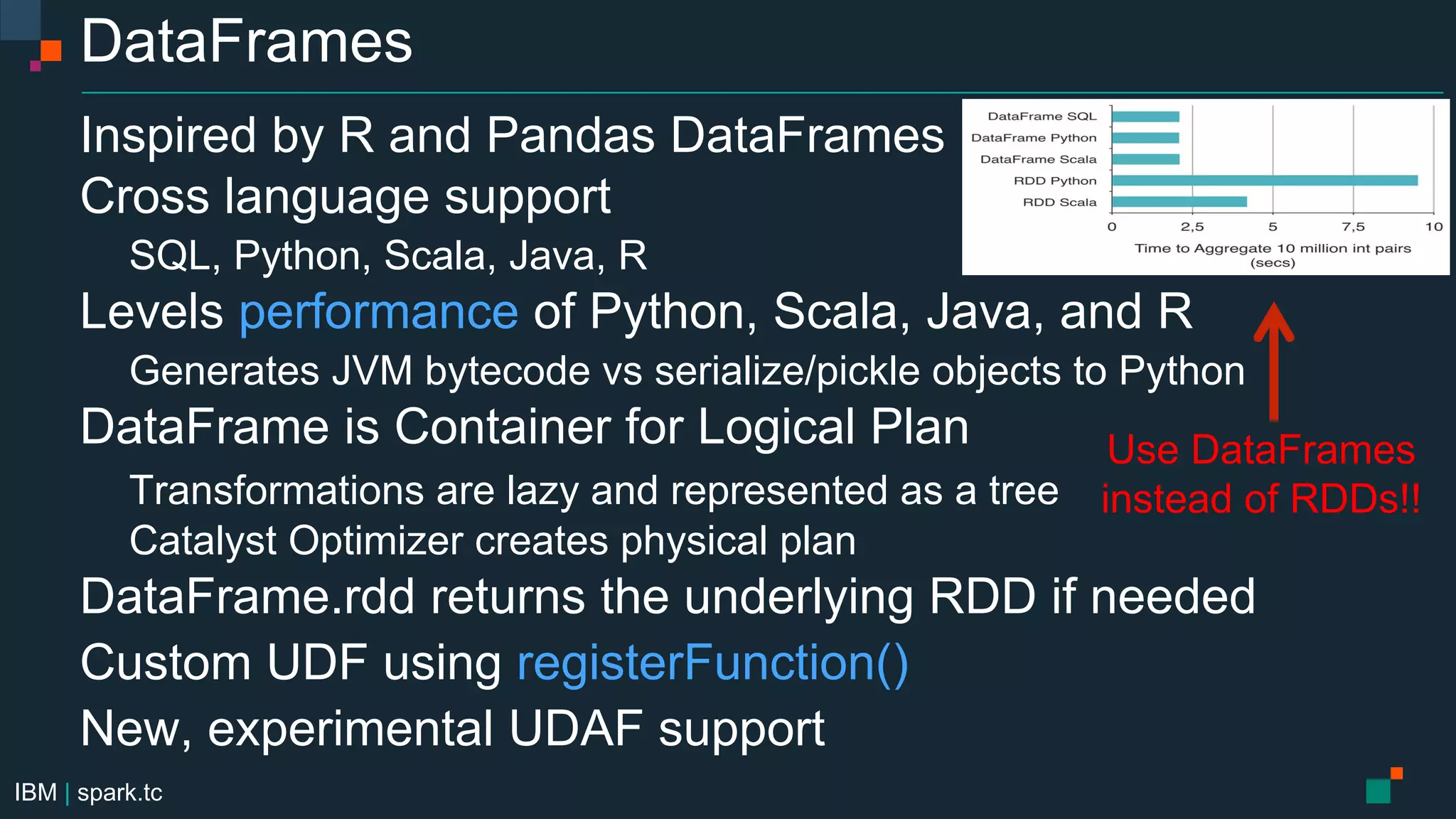

DataFrames

val gendersDF = sqlContext.read.format("parquet")

.load("file:/root/pipeline/datasets/dating/genders.parquet")

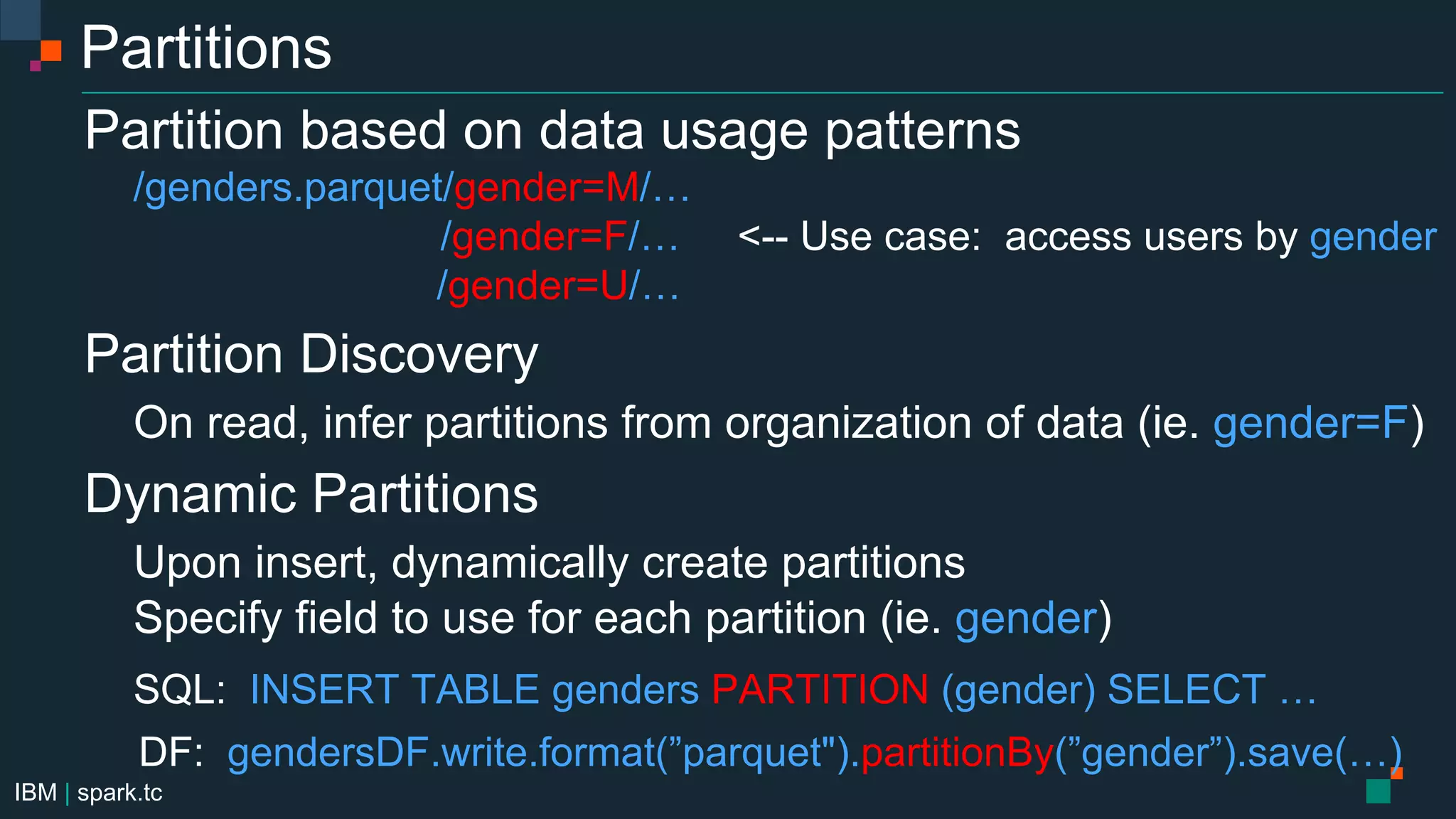

gendersDF.write.format("parquet").partitionBy("gender")

.save("file:/root/pipeline/datasets/dating/genders.parquet")

SQL

CREATE TABLE genders USING parquet

OPTIONS

(path "file:/root/pipeline/datasets/dating/genders.parquet")](https://image.slidesharecdn.com/cassandrasummitsept2015sparkafterdark-150923230637-lva1-app6891/75/Cassandra-Summit-Sept-2015-Real-Time-Advanced-Analytics-with-Spark-and-Cassandra-Recommendations-Machine-Learning-Graph-Processing-29-2048.jpg)