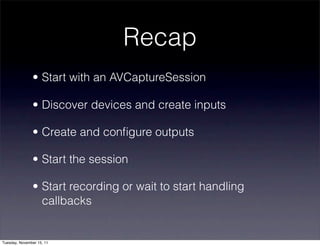

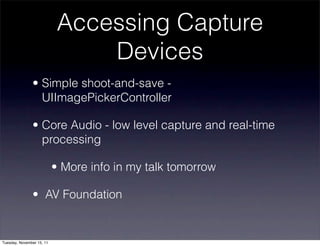

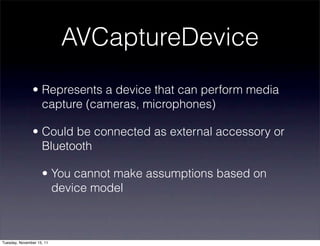

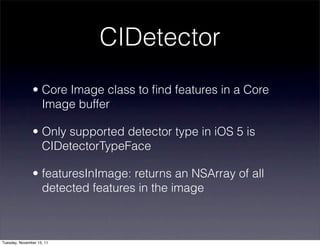

This presentation covers media capture on iOS with the AV Foundation framework: accessing capture devices, configuring a capture session, connecting inputs and outputs, and processing the captured media, with code examples for each step. Key topics include managing audio and video capture sessions, accessing device properties, and using Core Image for tasks such as face detection.

![AVCaptureSession](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-22-320.jpg)

- Coordinates the flow of capture from inputs to outputs
- Create the session, add inputs and outputs, then start it running

```objc
captureSession = [[AVCaptureSession alloc] init];
```

Tuesday, November 15, 11

![Adding a video device input](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-31-320.jpg)

```objc
NSError *setUpError = nil;
// Default camera; nil if the device has no video capture hardware
AVCaptureDevice *videoDevice =
    [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
if (videoDevice) {
    // Returns nil and fills in setUpError if the device can't be opened
    AVCaptureDeviceInput *videoInput =
        [AVCaptureDeviceInput deviceInputWithDevice:videoDevice
                                              error:&setUpError];
    if (videoInput && [captureSession canAddInput:videoInput]) {
        [captureSession addInput:videoInput];
    } else {
        NSLog(@"could not add video input: %@", setUpError);
    }
}
```

![Adding a video preview layer](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-33-320.jpg)

```objc
AVCaptureVideoPreviewLayer *previewLayer =
    [AVCaptureVideoPreviewLayer layerWithSession:captureSession];
previewLayer.frame = captureView.layer.bounds;
// Scale the video to fit inside the layer, preserving its aspect ratio
previewLayer.videoGravity = AVLayerVideoGravityResizeAspect;
[captureView.layer addSublayer:previewLayer];
```
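`AVLayerVideoGravityResizeAspect` scales the video uniformly until the whole frame fits inside the layer and centers it, so a 16:9 feed in a portrait view gets empty bands above and below. Knowing where the picture actually lands matters when you later draw overlays such as face boxes. A minimal sketch of that geometry in plain C (which also compiles as Objective-C); the `Size` and `Rect` types and `aspect_fit_rect` are hypothetical stand-ins for `CGSize`/`CGRect` code, not an AV Foundation API:

```c
#include <assert.h>

/* Hypothetical stand-ins for CGSize / CGRect. */
typedef struct { double width, height; } Size;
typedef struct { double x, y, width, height; } Rect;

/* Rectangle occupied by a video frame of size `video` inside a layer of
   size `layer` under aspect-fit scaling: one uniform scale factor, chosen
   so the whole frame fits, then centered on both axes. */
Rect aspect_fit_rect(Size video, Size layer) {
    assert(video.width > 0 && video.height > 0);
    double sx = layer.width / video.width;
    double sy = layer.height / video.height;
    double s = sx < sy ? sx : sy; /* smaller factor => nothing is cropped */
    Rect r;
    r.width = video.width * s;
    r.height = video.height * s;
    r.x = (layer.width - r.width) / 2.0;   /* leftover space split evenly */
    r.y = (layer.height - r.height) / 2.0;
    return r;
}
```

For a 1280×720 frame in a 320×480 layer the scale is min(0.25, 0.667) = 0.25, giving a 320×180 picture whose top edge sits at y = 150. Taking the larger factor instead gives the cropping behavior of `AVLayerVideoGravityResizeAspectFill`.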

![Adding a movie file output](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-36-320.jpg)

```objc
captureMovieOutput = [[AVCaptureMovieFileOutput alloc] init];
if (!captureMovieURL) {
    // getCaptureMoviePath() is a helper not shown on the slide;
    // -retain means this code uses manual reference counting, not ARC
    captureMoviePath = [getCaptureMoviePath() retain];
    captureMovieURL = [[NSURL alloc] initFileURLWithPath:captureMoviePath];
}
NSLog(@"recording to %@", captureMovieURL);
if ([captureSession canAddOutput:captureMovieOutput]) {
    [captureSession addOutput:captureMovieOutput];
}
```
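The slide leaves `getCaptureMoviePath()` undefined. One detail such a helper has to handle: according to Apple's documentation for `startRecordingToOutputFileURL:recordingDelegate:`, recording fails if a file already exists at the destination URL, so a stale movie from an earlier run should be removed first. A minimal sketch in plain C using POSIX `snprintf` and `unlink`; `prepare_capture_movie_path` and its parameters are hypothetical, and in an app `dir` would typically be the app's temporary directory (for example from `NSTemporaryDirectory()`):

```c
#include <assert.h>
#include <errno.h>
#include <stdio.h>
#include <unistd.h>

/* Hypothetical helper: writes "<dir>/<name>" into `out` and deletes any
   file already at that path, since a movie file output will not record
   over an existing file. Returns 0 on success, -1 if the path does not
   fit in `out` or an existing file could not be removed. */
int prepare_capture_movie_path(const char *dir, const char *name,
                               char *out, size_t out_len) {
    assert(dir != NULL && name != NULL && out != NULL);
    int n = snprintf(out, out_len, "%s/%s", dir, name);
    if (n < 0 || (size_t)n >= out_len)
        return -1; /* path was truncated */
    if (unlink(out) != 0 && errno != ENOENT)
        return -1; /* something is there but could not be deleted */
    return 0;      /* path is free for the new recording */
}
```

On success, `out` can be turned into a file URL the same way the slide does with `initFileURLWithPath:`.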

![Cranking it up](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-37-320.jpg)

- `-[AVCaptureSession startRunning]` starts capturing from all connected inputs
- If you have a preview layer, it starts updating
- File outputs do not start writing to the filesystem until you call `startRecordingToOutputFileURL:recordingDelegate:` on them

![Creating the data output](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-42-320.jpg)

```objc
AVCaptureVideoDataOutput *captureOutput =
    [[AVCaptureVideoDataOutput alloc] init];
// Drop frames that arrive while the delegate is still busy with an earlier one
captureOutput.alwaysDiscardsLateVideoFrames = YES;
// self adopts AVCaptureVideoDataOutputSampleBufferDelegate; the queue must be serial
[captureOutput setSampleBufferDelegate:self
                                 queue:dispatch_get_main_queue()];
```
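Why `alwaysDiscardsLateVideoFrames = YES` matters, especially with the delegate on the main queue: when per-frame work takes longer than the frame interval, keeping every frame makes the delay between capture and processing keep growing. The toy model below is a simplification, not AV Foundation's implementation (the real output buffers only a limited number of frames and still discards some when the property is `NO`); `simulate_delivery` is hypothetical. Sketched in plain C:

```c
#include <assert.h>
#include <stddef.h>

/* Toy model, not AV Foundation's implementation: a delegate on a serial
   queue receives frames every `interval` time units and spends `cost`
   units on each. If discard_late is nonzero, a frame that arrives while
   the delegate is still busy is dropped (like alwaysDiscardsLateVideoFrames
   = YES). Otherwise every frame waits its turn (an unbounded queue, which
   the real output does not have). Returns how many frames are processed;
   *max_wait, if non-NULL, gets the longest arrival-to-start delay. */
int simulate_delivery(int frames, int interval, int cost, int discard_late,
                      int *max_wait) {
    assert(frames >= 0 && interval > 0 && cost >= 0);
    int busy_until = 0, processed = 0, worst = 0;
    for (int i = 0; i < frames; i++) {
        int arrival = i * interval;
        if (discard_late && arrival < busy_until)
            continue;                 /* late frame: dropped */
        int start = arrival > busy_until ? arrival : busy_until;
        if (start - arrival > worst)
            worst = start - arrival;  /* time spent waiting in the queue */
        busy_until = start + cost;
        processed++;
    }
    if (max_wait != NULL)
        *max_wait = worst;
    return processed;
}
```

With a frame every 10 time units and 15 units of work per frame, discarding processes 15 of 30 frames with no waiting, while queuing processes all 30 but the last one waits 145 units before its processing starts.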

![Configuring the data output](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-43-320.jpg)

```objc
// Ask the output to deliver frames as 32-bit BGRA pixel buffers
NSString *key = (NSString *)kCVPixelBufferPixelFormatTypeKey;
NSNumber *value =
    [NSNumber numberWithUnsignedInt:kCVPixelFormatType_32BGRA];
NSDictionary *videoSettings =
    [NSDictionary dictionaryWithObject:value forKey:key];
[captureOutput setVideoSettings:videoSettings];
```
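Requesting `kCVPixelFormatType_32BGRA` fixes the memory layout of each frame: four bytes per pixel in the order blue, green, red, alpha. Rows may carry padding, so pixel addresses should use the buffer's bytes-per-row value (`CVPixelBufferGetBytesPerRow`) rather than `width * 4`, and the base address is only valid between `CVPixelBufferLockBaseAddress` and `CVPixelBufferUnlockBaseAddress`. A minimal sketch in plain C of indexing such a buffer; `RGBA8`, `bgra_pixel_at`, and `bgra_luma_at` are hypothetical, and `base` stands in for the address returned by `CVPixelBufferGetBaseAddress`:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical channel-order-neutral pixel value. */
typedef struct { uint8_t r, g, b, a; } RGBA8;

/* Reads pixel (x, y) from a 32BGRA buffer. bytes_per_row may exceed
   width * 4 because of row padding, so it is passed in explicitly. */
RGBA8 bgra_pixel_at(const uint8_t *base, size_t bytes_per_row,
                    size_t x, size_t y) {
    assert(base != NULL && bytes_per_row >= (x + 1) * 4);
    const uint8_t *p = base + y * bytes_per_row + x * 4;
    RGBA8 px = { p[2], p[1], p[0], p[3] }; /* memory order is B, G, R, A */
    return px;
}

/* Integer approximation of BT.601 luma: weights 0.299, 0.587, 0.114
   scaled by 256 (77 + 150 + 29 = 256), so white maps to 255. */
uint8_t bgra_luma_at(const uint8_t *base, size_t bytes_per_row,
                     size_t x, size_t y) {
    RGBA8 px = bgra_pixel_at(base, bytes_per_row, x, y);
    return (uint8_t)((77u * px.r + 150u * px.g + 29u * px.b) >> 8);
}
```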

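![Convert CM to CV to CI](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-50-320.jpg)

That is, from a Core Media sample buffer to a Core Video pixel buffer to a Core Image image. This runs in the sample-buffer delegate callback, which supplies `sampleBuffer`.

```objc
// Get rule: the pixel buffer is owned by sampleBuffer, so don't release it
CVPixelBufferRef cvPixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
// Copy the frame's attachments (metadata) so Core Image can use them
CFDictionaryRef attachmentsDict =
    CMCopyDictionaryOfAttachments(kCFAllocatorSystemDefault,
                                  sampleBuffer,
                                  kCMAttachmentMode_ShouldPropagate);
CIImage *ciImage = [[CIImage alloc]
    initWithCVPixelBuffer:cvPixelBuffer
                  options:(__bridge NSDictionary *)attachmentsDict];
// Copy rule: we own attachmentsDict, and __bridge does not hand it to ARC
if (attachmentsDict) {
    CFRelease(attachmentsDict);
}
```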

![Creating the CIDetector](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-51-320.jpg)

```objc
NSDictionary *faceDetectorDict =
    [NSDictionary dictionaryWithObjectsAndKeys:
        CIDetectorAccuracyHigh, CIDetectorAccuracy,
        nil];
// Detectors can hold significant resources; create once and reuse across frames
CIDetector *faceDetector =
    [CIDetector detectorOfType:CIDetectorTypeFace
                       context:nil
                       options:faceDetectorDict];
// Each element of faces is a CIFaceFeature
NSArray *faces = [faceDetector featuresInImage:ciImage];
```

![Boxing the faces](https://image.slidesharecdn.com/vtmiosbostonavfcapture-111115211631-phpapp02/85/Capturing-Stills-Sounds-and-Scenes-with-AV-Foundation-53-320.jpg)

```objc
// The stroke color is the same for every box, so set it once
CGContextSetStrokeColorWithColor(cgContext, [UIColor yellowColor].CGColor);
for (CIFaceFeature *faceFeature in self.facesArray) {
    // Scale each face's bounds from image size to drawing size.
    // Core Image bounds use a bottom-left origin; if cgContext has a
    // top-left origin (as UIKit contexts do), y must be flipped as well.
    CGRect boxRect = CGRectMake(
        faceFeature.bounds.origin.x * self.scaleToApply,
        faceFeature.bounds.origin.y * self.scaleToApply,
        faceFeature.bounds.size.width * self.scaleToApply,
        faceFeature.bounds.size.height * self.scaleToApply);
    CGContextStrokeRect(cgContext, boxRect);
}
```
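`CIFaceFeature.bounds` is reported in Core Image's coordinate space, whose origin is the bottom-left corner of the image. Scaling alone, as in the loop on the slide, is enough when the drawing context also has a bottom-left origin; when drawing in UIKit's top-left space (for example in a view's `drawRect:`), the y coordinate also has to be flipped against the image height. A minimal sketch of that conversion in plain C; `Rect` and `face_rect_to_top_left` are hypothetical, and `scale` plays the role of the slide's `self.scaleToApply`:

```c
#include <assert.h>

/* Hypothetical stand-in for CGRect. */
typedef struct { double x, y, width, height; } Rect;

/* Converts a face rectangle from Core Image's bottom-left-origin space
   to a top-left-origin space, then applies the display scale. */
Rect face_rect_to_top_left(Rect ci_bounds, double image_height, double scale) {
    assert(image_height > 0 && scale > 0);
    Rect r;
    r.x = ci_bounds.x * scale;
    /* the face's top edge sits at y + height, measured from the bottom */
    r.y = (image_height - (ci_bounds.y + ci_bounds.height)) * scale;
    r.width = ci_bounds.width * scale;
    r.height = ci_bounds.height * scale;
    return r;
}
```

A face with Core Image bounds (100, 500, 200, 200) in a 720-pixel-tall image has its top edge at y = 700, which is 20 pixels from the top; at a scale of 0.5 the box becomes (50, 10, 100, 100).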