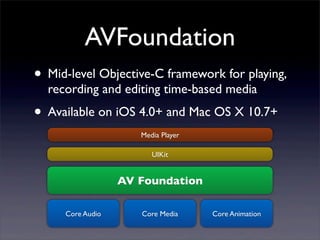

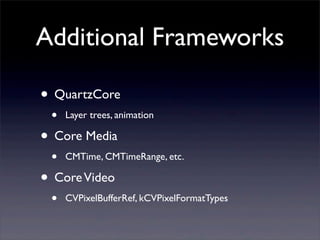

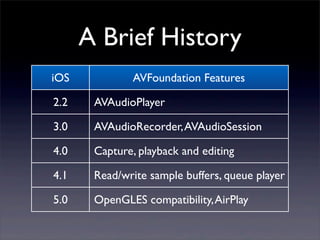

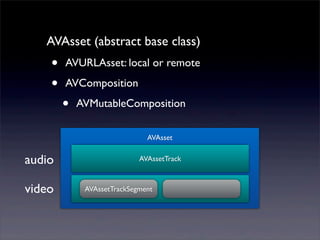

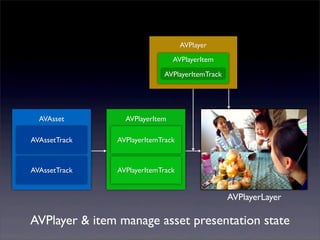

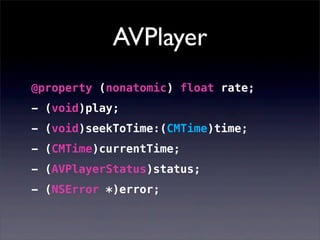

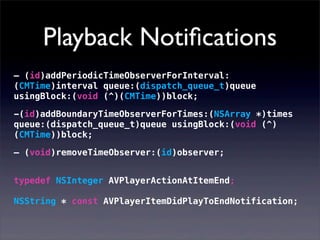

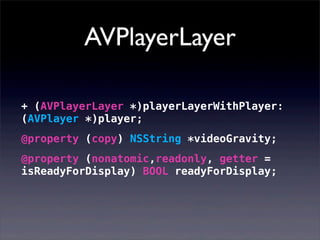

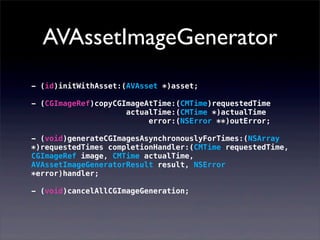

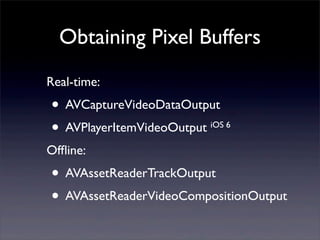

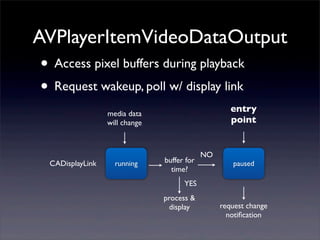

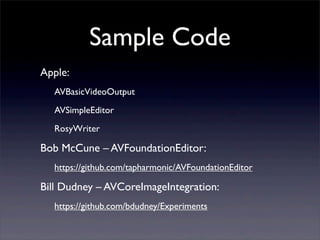

The document provides an overview of AVFoundation, a mid-level Objective-C framework for managing time-based media on iOS and macOS. It details various features, components, and classes such as AVAudioPlayer, AVPlayer, and AVAsset for playback, recording, and editing media. Additionally, it highlights real-time video processing capabilities and integration with OpenGL and Core Image for enhanced media handling.

![Now Playing in 3D

• iOS 5:

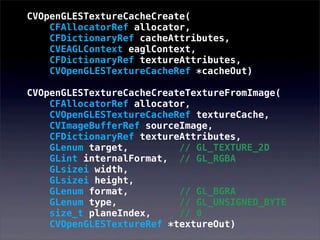

• CVOpenGLESTextureCacheRef

• CVOpenGLESTextureRef

• +[CIImage imageWithCVPixelBuffer:options:]

• Binding between CVPixelBufferRef and GL textures

• Bypasses copying to/from CPU-controlled memory

• iOS 6:

• +[CIImage imageWithTexture:size:flipped:colorSpace:]](https://image.slidesharecdn.com/20130514avfoundationtacow-130516195109-phpapp01/85/AVFoundation-TACOW-2013-05-14-27-320.jpg)
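The iOS 5 items on this slide can be illustrated with a rough sketch. It assumes an existing `EAGLContext` and a 32BGRA `CVPixelBufferRef` (for example, one delivered by an AVFoundation video data output). The helper names `RMMakeTextureCache`, `RMTextureFromPixelBuffer`, and `RMImageFromPixelBuffer` are hypothetical and do not come from the talk; only the Core Video, Core Image, and OpenGL ES calls are real API. Treat it as one possible arrangement, not the speaker's implementation.

```objc
#import <CoreImage/CoreImage.h>
#import <CoreVideo/CoreVideo.h>
#import <OpenGLES/EAGL.h>
#import <OpenGLES/ES2/gl.h>
#import <OpenGLES/ES2/glext.h>

// Hypothetical helper: create one texture cache per GL context and reuse it.
// Returns NULL on failure; the caller owns the cache and must CFRelease it.
static CVOpenGLESTextureCacheRef RMMakeTextureCache(EAGLContext *context) {
    CVOpenGLESTextureCacheRef cache = NULL;
    CVReturn err = CVOpenGLESTextureCacheCreate(kCFAllocatorDefault, NULL,
                                                context, NULL, &cache);
    return (err == kCVReturnSuccess) ? cache : NULL;
}

// Hypothetical helper: bind a BGRA pixel buffer to a GL texture through the
// cache, so the frame is not copied through CPU-controlled memory.
// Assumes the context that owns the cache is current.
// The caller must CFRelease the returned texture after drawing.
static CVOpenGLESTextureRef RMTextureFromPixelBuffer(CVOpenGLESTextureCacheRef cache,
                                                     CVPixelBufferRef pixelBuffer) {
    CVOpenGLESTextureRef texture = NULL;
    CVReturn err = CVOpenGLESTextureCacheCreateTextureFromImage(
        kCFAllocatorDefault, cache, pixelBuffer, NULL,
        GL_TEXTURE_2D, GL_RGBA,
        (GLsizei)CVPixelBufferGetWidth(pixelBuffer),
        (GLsizei)CVPixelBufferGetHeight(pixelBuffer),
        GL_BGRA, GL_UNSIGNED_BYTE, 0, &texture);
    if (err != kCVReturnSuccess) {
        return NULL;
    }

    // Video frames are usually not power-of-two sized, so clamp to the edge
    // and avoid mipmapped filtering.
    glBindTexture(CVOpenGLESTextureGetTarget(texture),
                  CVOpenGLESTextureGetName(texture));
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
    return texture;
}

// Hypothetical helper: the Core Image route from the same slide, wrapping
// the pixel buffer in a CIImage for filtering.
static CIImage *RMImageFromPixelBuffer(CVPixelBufferRef pixelBuffer) {
    return [CIImage imageWithCVPixelBuffer:pixelBuffer options:nil];
}
```

In a per-frame callback, a caller would typically create the texture, draw with it, `CFRelease` it, and then call `CVOpenGLESTextureCacheFlush(cache, 0)` so the cache can recycle its resources.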

![Slide 33](https://image.slidesharecdn.com/20130514avfoundationtacow-130516195109-phpapp01/85/AVFoundation-TACOW-2013-05-14-33-320.jpg)

Twitter: @rydermackay

ADN: @ryder

github.com/rydermackay

    while (self.retainCount > 0) {
        [self release];
    }