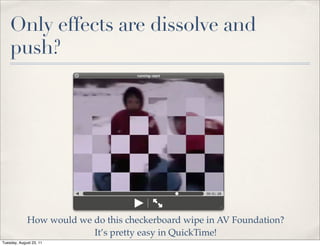

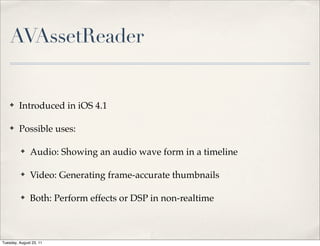

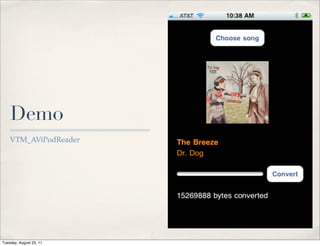

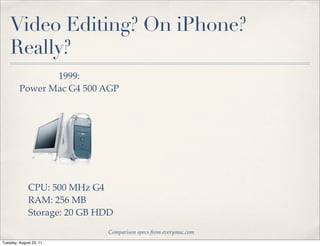

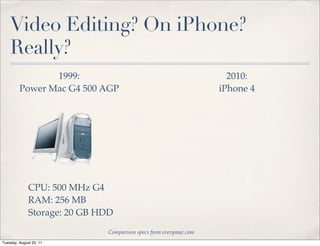

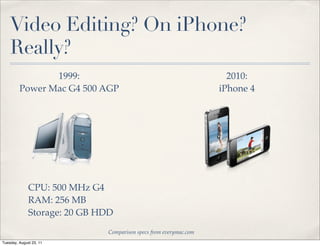

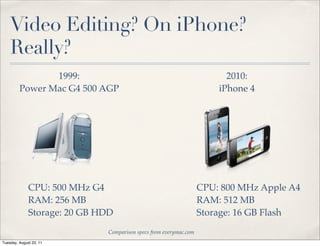

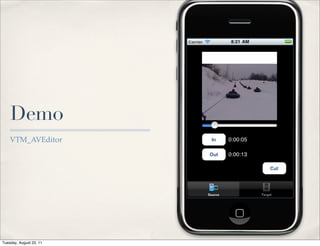

The document presents a detailed overview of the AV Foundation framework, specifically focusing on video and audio capture, processing, and editing techniques in iOS development. It provides code examples and discusses the use of AVAssetWriter and AVAssetReader for writing and reading media samples, along with AVMutableComposition for video editing. The presentation also highlights the evolution of video editing capabilities on mobile devices, using comparison specs to emphasize advancements since 1999.

![Really, really, seriously… don’t

AVMutableVideoCompositionLayerInstruction *aInstruction =

[AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack: trackA];

[aInstruction setOpacityRampFromStartOpacity:0.0

toEndOpacity:1.0

timeRange:CMTimeRangeMake(CMTimeMakeWithSeconds(2.9, VIDEO_TIME_SCALE),

CMTimeMakeWithSeconds(6.0, VIDEO_TIME_SCALE))];

Tuesday, August 23, 11](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-4-320.jpg)

![AVCaptureVideoDataOutput

AVCaptureDeviceInput *captureInput =

[AVCaptureDeviceInput deviceInputWithDevice:

[AVCaptureDevice

defaultDeviceWithMediaType:AVMediaTypeVideo]

error:nil];

AVCaptureVideoDataOutput *captureOutput =

[[AVCaptureVideoDataOutput alloc] init];

captureOutput.alwaysDiscardsLateVideoFrames = YES;

[captureOutput setSampleBufferDelegate:self

queue:dispatch_get_main_queue()];

// some configuration stuff omitted...

[self.captureSession addOutput:captureOutput];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-12-320.jpg)
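The slide omits the session configuration. Below is a minimal sketch of one way to fill it in. It assumes ARC, and `MakeVideoDataCaptureSession` is a hypothetical helper, not part of the original code. The sketch requests 32-bit BGRA frames, which matches the `kCGBitmapByteOrder32Little | kCGImageAlphaNoneSkipFirst` bitmap context used in the delegate. It also delivers frames on a caller-supplied serial queue instead of the main queue.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (assumes ARC). Returns nil if there is no camera or the
// input cannot be created. `sampleQueue` must be a serial dispatch queue.
static AVCaptureSession *MakeVideoDataCaptureSession(
    id<AVCaptureVideoDataOutputSampleBufferDelegate> delegate,
    dispatch_queue_t sampleQueue,
    NSError **outError)
{
    AVCaptureDevice *camera =
        [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
    if (camera == nil) {
        return nil; // e.g. running in the simulator
    }
    AVCaptureDeviceInput *input =
        [AVCaptureDeviceInput deviceInputWithDevice:camera error:outError];
    if (input == nil) {
        return nil;
    }

    AVCaptureVideoDataOutput *output = [[AVCaptureVideoDataOutput alloc] init];
    // 32-bit BGRA frames can be wrapped directly in a CGBitmapContext.
    output.videoSettings = @{
        (__bridge NSString *)kCVPixelBufferPixelFormatTypeKey :
            @(kCVPixelFormatType_32BGRA)
    };
    output.alwaysDiscardsLateVideoFrames = YES;
    [output setSampleBufferDelegate:delegate queue:sampleQueue];

    AVCaptureSession *session = [[AVCaptureSession alloc] init];
    [session beginConfiguration];
    if ([session canAddInput:input]) {
        [session addInput:input];
    }
    if ([session canAddOutput:output]) {
        [session addOutput:output];
    }
    [session commitConfiguration];
    return session; // the caller still has to call -startRunning
}
```

A caller would create the queue with `dispatch_queue_create("video frames", DISPATCH_QUEUE_SERIAL)`, keep a strong reference to the returned session and call `-startRunning` on it.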

![AVCaptureVideoDataOutputSampleBufferDelegate (yes, really)

- (void)captureOutput:(AVCaptureOutput *)captureOutput

didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer

fromConnection:(AVCaptureConnection *)connection

{

CVImageBufferRef imageBuffer =

CMSampleBufferGetImageBuffer(sampleBuffer);

CVPixelBufferLockBaseAddress(imageBuffer,0);

// Core Video pixel counting math omitted...

CGContextRef newContext =

CGBitmapContextCreate(baseAddress, width, height, 8,

bytesPerRow, colorSpace,

kCGBitmapByteOrder32Little |

kCGImageAlphaNoneSkipFirst);

CGImageRef capture = CGBitmapContextCreateImage(newContext);

CGContextRelease(newContext); // the image holds its own copy of the pixels

CVPixelBufferUnlockBaseAddress(imageBuffer,0);

// Zxing then does secondary crop, converts to UIImage, and

// calls its own -[Decoder decodeImage:cropRect:]

}

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-13-320.jpg)

![Using AVAssetWriter

✤ Create an AVAssetWriter

✤ Create and configure an AVAssetWriterInput and connect it to the

writer

✤ -[AVAssetWriter startWriting], then -[AVAssetWriter startSessionAtSourceTime:]

✤ Repeatedly call -[AVAssetWriterInput appendSampleBuffer:] with

CMSampleBufferRefs

✤ Set expectsMediaDataInRealTime appropriately, and honor the

readyForMoreMediaData property.

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-16-320.jpg)

![Create writer, writer input, and

pixel buffer adaptor

assetWriter = [[AVAssetWriter alloc] initWithURL:movieURL

fileType:AVFileTypeQuickTimeMovie

error:&movieError];

NSDictionary *assetWriterInputSettings = [NSDictionary dictionaryWithObjectsAndKeys:

AVVideoCodecH264, AVVideoCodecKey,

[NSNumber numberWithInt:FRAME_WIDTH], AVVideoWidthKey,

[NSNumber numberWithInt:FRAME_HEIGHT], AVVideoHeightKey,

nil];

assetWriterInput = [AVAssetWriterInput assetWriterInputWithMediaType: AVMediaTypeVideo

outputSettings:assetWriterInputSettings];

assetWriterInput.expectsMediaDataInRealTime = YES;

[assetWriter addInput:assetWriterInput];

assetWriterPixelBufferAdaptor = [[AVAssetWriterInputPixelBufferAdaptor alloc]

initWithAssetWriterInput:assetWriterInput

sourcePixelBufferAttributes:nil];

[assetWriter startWriting];

[assetWriter startSessionAtSourceTime:kCMTimeZero]; // frame times start at 0

Settings keys and values are defined in AVAudioSettings.h

and AVVideoSettings.h and documented in the AV Foundation Constants Reference

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-19-320.jpg)

![Create a pixel buffer

// get screenshot image!

CGImageRef image = (CGImageRef) [[self screenshot] CGImage];

NSLog (@"made screenshot");

// prepare the pixel buffer

CVPixelBufferRef pixelBuffer = NULL;

CFDataRef imageData = CGDataProviderCopyData(CGImageGetDataProvider(image));

NSLog (@"copied image data");

cvErr = CVPixelBufferCreateWithBytes(kCFAllocatorDefault,

FRAME_WIDTH,

FRAME_HEIGHT,

kCVPixelFormatType_32BGRA,

(void*)CFDataGetBytePtr(imageData),

CGImageGetBytesPerRow(image),

NULL,

NULL,

NULL,

&pixelBuffer);

NSLog (@"CVPixelBufferCreateWithBytes returned %d", cvErr);

// pixelBuffer points into imageData's bytes: CFRelease(imageData) only after

// the buffer has been appended and released

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-21-320.jpg)
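`CVPixelBufferCreateWithBytes` only wraps `imageData`, so that CFData must outlive the pixel buffer, and the slide never releases it. The sketch below swaps in a different technique that is easier to own: it copies the pixels into a buffer from `CVPixelBufferCreate`, which lets the CFData be released immediately. It assumes, as the slide does, that the image is 32 bits per pixel and already in BGRA byte order. `CreatePixelBufferByCopyingCGImage` is a hypothetical name.

```objc
#import <CoreGraphics/CoreGraphics.h>
#import <CoreVideo/CoreVideo.h>
#include <string.h>

// Hypothetical helper: copies a 32-bits-per-pixel CGImage, assumed to be in
// BGRA byte order like the slide's, into a new CVPixelBuffer.
// Returns NULL on failure; the caller releases the result with
// CVPixelBufferRelease().
static CVPixelBufferRef CreatePixelBufferByCopyingCGImage(CGImageRef image)
{
    if (CGImageGetBitsPerPixel(image) != 32) {
        return NULL; // this sketch only handles 4-byte pixels
    }
    size_t width = CGImageGetWidth(image);
    size_t height = CGImageGetHeight(image);
    size_t srcBytesPerRow = CGImageGetBytesPerRow(image);

    CFDataRef imageData = CGDataProviderCopyData(CGImageGetDataProvider(image));
    if (imageData == NULL) {
        return NULL;
    }

    CVPixelBufferRef pixelBuffer = NULL;
    CVReturn cvErr = CVPixelBufferCreate(kCFAllocatorDefault, width, height,
                                         kCVPixelFormatType_32BGRA,
                                         NULL, &pixelBuffer);
    if (cvErr == kCVReturnSuccess) {
        CVPixelBufferLockBaseAddress(pixelBuffer, 0);
        uint8_t *dst = (uint8_t *)CVPixelBufferGetBaseAddress(pixelBuffer);
        size_t dstBytesPerRow = CVPixelBufferGetBytesPerRow(pixelBuffer);
        const uint8_t *src = CFDataGetBytePtr(imageData);
        // Copy row by row: source and destination rows may be padded differently.
        for (size_t row = 0; row < height; row++) {
            memcpy(dst + row * dstBytesPerRow,
                   src + row * srcBytesPerRow,
                   width * 4);
        }
        CVPixelBufferUnlockBaseAddress(pixelBuffer, 0);
    }

    // The pixels were copied, so the CFData is no longer needed.
    CFRelease(imageData);
    return (cvErr == kCVReturnSuccess) ? pixelBuffer : NULL;
}
```

The trade-off is one `memcpy` per row in exchange for simple ownership. The returned buffer is released with `CVPixelBufferRelease` once it has been appended.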

![Calculate time and write sample

// calculate the time

CFAbsoluteTime thisFrameWallClockTime = CFAbsoluteTimeGetCurrent();

CFTimeInterval elapsedTime = thisFrameWallClockTime - firstFrameWallClockTime;

NSLog (@"elapsedTime: %f", elapsedTime);

CMTime presentationTime = CMTimeMake (elapsedTime * TIME_SCALE, TIME_SCALE);

// write the sample

BOOL appended = [assetWriterPixelBufferAdaptor appendPixelBuffer:pixelBuffer

withPresentationTime:presentationTime];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-22-320.jpg)
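The slide appends every frame unconditionally. With `expectsMediaDataInRealTime` set to YES, a sketch that honors `readyForMoreMediaData` and drops the frame otherwise could look like this. `AppendFrameIfReady` is hypothetical. It assumes the writer has already received `-startWriting` and `-startSessionAtSourceTime:kCMTimeZero`. It replaces the manual `elapsedTime * TIME_SCALE` arithmetic with `CMTimeMakeWithSeconds`, using a timescale of 600 chosen only for illustration.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper. Assumes -startWriting and
// -startSessionAtSourceTime:kCMTimeZero have already been called on the
// adaptor's writer, and that frame times are measured from the first frame.
// Returns NO if the frame was dropped or the append failed.
static BOOL AppendFrameIfReady(AVAssetWriterInputPixelBufferAdaptor *adaptor,
                               CVPixelBufferRef pixelBuffer,
                               CFAbsoluteTime firstFrameWallClockTime)
{
    if (!adaptor.assetWriterInput.readyForMoreMediaData) {
        // Real-time input that isn't ready: drop this frame instead of blocking.
        return NO;
    }
    CFTimeInterval elapsed = CFAbsoluteTimeGetCurrent() - firstFrameWallClockTime;
    // 600 is an illustrative timescale, not a requirement.
    CMTime presentationTime = CMTimeMakeWithSeconds(elapsed, 600);
    return [adaptor appendPixelBuffer:pixelBuffer
                 withPresentationTime:presentationTime];
}
```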

![Using AVAssetReader

✤ Create an AVAssetReader

✤ Create and configure an AVAssetReaderOutput

✤ Three concrete subclasses: AVAssetReaderTrackOutput,

AVAssetReaderAudioMixOutput, and

AVAssetReaderVideoCompositionOutput.

✤ Call -[AVAssetReader startReading], then get data with -[AVAssetReaderOutput copyNextSampleBuffer]

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-25-320.jpg)
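The steps above can be sketched for a single video track using `AVAssetReaderTrackOutput`, the first of the three output subclasses listed. This is an illustration only. It assumes ARC and an asset whose tracks can be loaded synchronously. `CountVideoFrames` is a hypothetical name, and counting frames stands in for whatever per-sample work an app would actually do.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (assumes ARC). Returns the number of decoded video
// frames, 0 if the asset has no video track, or -1 on error.
static NSInteger CountVideoFrames(AVAsset *asset, NSError **outError)
{
    NSArray *videoTracks = [asset tracksWithMediaType:AVMediaTypeVideo];
    if (videoTracks.count == 0) {
        return 0;
    }

    AVAssetReader *reader = [AVAssetReader assetReaderWithAsset:asset error:outError];
    if (reader == nil) {
        return -1;
    }

    // Ask for decoded BGRA frames; nil settings would vend samples in their
    // stored (compressed) format.
    NSDictionary *settings = @{
        (__bridge NSString *)kCVPixelBufferPixelFormatTypeKey :
            @(kCVPixelFormatType_32BGRA)
    };
    AVAssetReaderTrackOutput *output =
        [AVAssetReaderTrackOutput assetReaderTrackOutputWithTrack:videoTracks[0]
                                                   outputSettings:settings];
    if (![reader canAddOutput:output]) {
        return -1;
    }
    [reader addOutput:output];

    if (![reader startReading]) {
        if (outError) *outError = reader.error;
        return -1;
    }

    NSInteger frames = 0;
    CMSampleBufferRef sample = NULL;
    // copyNextSampleBuffer follows the Create rule: release every buffer.
    while ((sample = [output copyNextSampleBuffer]) != NULL) {
        frames += CMSampleBufferGetNumSamples(sample);
        CFRelease(sample);
    }

    // NULL means either end of data or failure; the status says which.
    if (reader.status == AVAssetReaderStatusFailed) {
        if (outError) *outError = reader.error;
        return -1;
    }
    return frames;
}
```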

![Coordinated reading/writing

✤ You can provide a block to -[AVAssetWriterInput

requestMediaDataWhenReadyOnQueue:usingBlock:]

✤ Only perform your asset reads / writes when the writer input is ready.

✤ In this example, AVAssetWriterInput.expectsMediaDataInRealTime is NO

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-28-320.jpg)

![Set up reader, reader output, and writer

NSURL *assetURL = [song valueForProperty:MPMediaItemPropertyAssetURL];

AVURLAsset *songAsset =

[AVURLAsset URLAssetWithURL:assetURL options:nil];

NSError *assetError = nil;

AVAssetReader *assetReader =

[[AVAssetReader assetReaderWithAsset:songAsset

error:&assetError]

retain];

AVAssetReaderOutput *assetReaderOutput =

[[AVAssetReaderAudioMixOutput

assetReaderAudioMixOutputWithAudioTracks:songAsset.tracks

audioSettings: nil]

retain];

[assetReader addOutput: assetReaderOutput];

AVAssetWriter *assetWriter =

[[AVAssetWriter assetWriterWithURL:exportURL

fileType:AVFileTypeCoreAudioFormat

error:&assetError]

retain];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-29-320.jpg)

![Set up writer input

AudioChannelLayout channelLayout;

memset(&channelLayout, 0, sizeof(AudioChannelLayout));

channelLayout.mChannelLayoutTag = kAudioChannelLayoutTag_Stereo;

NSDictionary *outputSettings =

[NSDictionary dictionaryWithObjectsAndKeys:

[NSNumber numberWithInt:kAudioFormatLinearPCM], AVFormatIDKey,

[NSNumber numberWithFloat:44100.0], AVSampleRateKey,

[NSNumber numberWithInt:2], AVNumberOfChannelsKey,

[NSData dataWithBytes:&channelLayout length:sizeof(AudioChannelLayout)],

AVChannelLayoutKey,

[NSNumber numberWithInt:16], AVLinearPCMBitDepthKey,

[NSNumber numberWithBool:NO], AVLinearPCMIsNonInterleaved,

[NSNumber numberWithBool:NO],AVLinearPCMIsFloatKey,

[NSNumber numberWithBool:NO], AVLinearPCMIsBigEndianKey,

nil];

AVAssetWriterInput *assetWriterInput =

[[AVAssetWriterInput assetWriterInputWithMediaType:AVMediaTypeAudio

outputSettings:outputSettings]

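retain];

// attach the input to the writer before -startWriting

[assetWriter addInput:assetWriterInput];

assetWriterInput.expectsMediaDataInRealTime = NO; // file-to-file, not live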

Note 1: Many of these settings are required, but you won’t know which until you get a runtime error.

Note 2: AudioChannelLayout is from Core Audio

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-30-320.jpg)

![Start reading and writing

[assetWriter startWriting];

[assetReader startReading];

AVAssetTrack *soundTrack = [songAsset.tracks objectAtIndex:0];

CMTime startTime = CMTimeMake (0, soundTrack.naturalTimeScale);

[assetWriter startSessionAtSourceTime: startTime];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-31-320.jpg)
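`-startWriting` and `-startReading` both return a BOOL, and the slide ignores both results. Below is a sketch of the same start-up sequence that checks them. It assumes ARC, and `StartReaderAndWriter` is a hypothetical name.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (assumes ARC). On failure, fills *outError from the
// reader or writer that failed and returns NO.
static BOOL StartReaderAndWriter(AVAssetReader *reader,
                                 AVAssetWriter *writer,
                                 CMTime startTime,
                                 NSError **outError)
{
    if (![writer startWriting]) {
        if (outError) *outError = writer.error;
        return NO;
    }
    if (![reader startReading]) {
        if (outError) *outError = reader.error;
        [writer cancelWriting]; // don't leave a half-written output file
        return NO;
    }
    // Begin the writing session at the source time of the first sample.
    [writer startSessionAtSourceTime:startTime];
    return YES;
}
```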

![Read only when writer is ready

__block UInt64 convertedByteCount = 0;

dispatch_queue_t mediaInputQueue = dispatch_queue_create("mediaInputQueue", NULL);

[assetWriterInput requestMediaDataWhenReadyOnQueue:mediaInputQueue

usingBlock: ^

{

while (assetWriterInput.readyForMoreMediaData) {

CMSampleBufferRef nextBuffer = [assetReaderOutput copyNextSampleBuffer];

if (nextBuffer) {

// append buffer

[assetWriterInput appendSampleBuffer: nextBuffer];

convertedByteCount += CMSampleBufferGetTotalSampleSize (nextBuffer);

// "copy" in the method name means we own the buffer: release it

CFRelease(nextBuffer);

// update UI on main thread only

NSNumber *convertedByteCountNumber = [NSNumber

numberWithUnsignedLongLong:convertedByteCount];

[self performSelectorOnMainThread:@selector(updateSizeLabel:)

withObject:convertedByteCountNumber

waitUntilDone:NO];

}

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-32-320.jpg)

![Close file when done

else {

// done!

[assetWriterInput markAsFinished];

[assetWriter finishWriting]; // blocking; deprecated in iOS 6 for -finishWritingWithCompletionHandler:

[assetReader cancelReading];

NSDictionary *outputFileAttributes = [[NSFileManager defaultManager]

attributesOfItemAtPath:exportPath

error:nil];

NSNumber *doneFileSize = [NSNumber numberWithUnsignedLongLong:[outputFileAttributes

fileSize]];

[self performSelectorOnMainThread:@selector(updateCompletedSizeLabel:)

withObject:doneFileSize

waitUntilDone:NO];

// release a lot of stuff

[assetReader release];

[assetReaderOutput release];

[assetWriter release];

[assetWriterInput release];

[exportPath release];

break;

}

}

}];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-33-320.jpg)
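`-finishWriting` blocks the calling thread, and iOS 6 later deprecated it in favor of `-finishWritingWithCompletionHandler:`. The done branch could be restructured around the asynchronous call roughly as follows. This sketch assumes ARC, so the explicit releases disappear. `FinishExport` and its completion block are hypothetical.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (assumes ARC). Calls `completion` on the main queue
// once the file is closed, reporting whether the writer completed.
static void FinishExport(AVAssetReader *reader,
                         AVAssetWriter *writer,
                         AVAssetWriterInput *writerInput,
                         void (^completion)(BOOL succeeded, NSError *error))
{
    [writerInput markAsFinished];
    [reader cancelReading]; // same cleanup as the slide
    [writer finishWritingWithCompletionHandler:^{
        BOOL succeeded = (writer.status == AVAssetWriterStatusCompleted);
        NSError *error = writer.error;
        // The handler may run on any queue; hop to main before touching UI.
        dispatch_async(dispatch_get_main_queue(), ^{
            completion(succeeded, error);
        });
    }];
}
```

The completion block is the natural place to read the file size and call `updateCompletedSizeLabel:`, which the slide does immediately after the synchronous call.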

![AVComposition

✤ An AVAsset that gets its tracks from multiple file-based sources

✤ To create a movie, you typically use an AVMutableComposition

composition = [[AVMutableComposition alloc] init];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-42-320.jpg)

![Copying from another asset

✤ -[AVMutableComposition insertTimeRange:ofAsset:atTime:error:]

CMTime inTime = CMTimeMakeWithSeconds(inSeconds, 600);

CMTime outTime = CMTimeMakeWithSeconds(outSeconds, 600);

CMTime duration = CMTimeSubtract(outTime, inTime);

CMTimeRange editRange = CMTimeRangeMake(inTime, duration);

NSError *editError = nil;

[targetController.composition insertTimeRange:editRange

ofAsset:sourceAsset

atTime:targetController.composition.duration

error:&editError];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-43-320.jpg)

![Multiple video tracks

✤ To combine multiple video sources into one movie, create an

AVMutableComposition, then create AVMutableCompositionTracks

// create composition

self.composition = [[AVMutableComposition alloc] init];

// create video tracks a and b

// note: mediatypes are defined in AVMediaFormat.h

// trackA and trackB are released above, so retain the new tracks

[trackA release];

trackA = [[self.composition addMutableTrackWithMediaType:AVMediaTypeVideo

preferredTrackID:kCMPersistentTrackID_Invalid] retain];

[trackB release];

trackB = [[self.composition addMutableTrackWithMediaType:AVMediaTypeVideo

preferredTrackID:kCMPersistentTrackID_Invalid] retain];

// locate source video track

AVAssetTrack *sourceVideoTrack = [[sourceVideoAsset tracksWithMediaType:

AVMediaTypeVideo]

objectAtIndex: 0];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-47-320.jpg)

![Sound tracks

✤ Treat your audio as separate tracks too.

// create music track

trackMusic = [self.composition addMutableTrackWithMediaType:AVMediaTypeAudio

preferredTrackID:kCMPersistentTrackID_Invalid];

CMTimeRange musicTrackTimeRange = CMTimeRangeMake(kCMTimeZero,

musicTrackAudioAsset.duration);

NSError *trackMusicError = nil;

[trackMusic insertTimeRange:musicTrackTimeRange

ofTrack:[musicTrackAudioAsset.tracks objectAtIndex:0]

atTime:kCMTimeZero

error:&trackMusicError];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-49-320.jpg)

![Empty ranges

✤ Use -[AVMutableCompositionTrack insertEmptyTimeRange:] to

account for any part of any track where you won’t be inserting media

segments.

CMTime videoTracksTime = CMTimeMake(0, VIDEO_TIME_SCALE);

CMTime postEditTime = CMTimeAdd (videoTracksTime,

CMTimeMakeWithSeconds(FIRST_CUT_TRACK_A_IN_TIME,

VIDEO_TIME_SCALE));

[trackA insertEmptyTimeRange:CMTimeRangeMake(kCMTimeZero, postEditTime)];

videoTracksTime = postEditTime;

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-51-320.jpg)

![Track-level inserts

✤ Insert media segments with -[AVMutableCompositionTrack

insertTimeRange:ofTrack:atTime:error:]

postEditTime = CMTimeAdd (videoTracksTime, CMTimeMakeWithSeconds(FIRST_CUT_DURATION,

VIDEO_TIME_SCALE));

CMTimeRange firstShotRange = CMTimeRangeMake(kCMTimeZero,

CMTimeMakeWithSeconds(FIRST_CUT_DURATION,

VIDEO_TIME_SCALE));

[trackA insertTimeRange:firstShotRange

ofTrack:sourceVideoTrack

atTime:videoTracksTime

error:&performError];

videoTracksTime = postEditTime;

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-52-320.jpg)
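The empty-range and track-level insert slides repeat the same bookkeeping: insert an empty range or a segment at `videoTracksTime`, then advance it. A hypothetical helper that folds both steps together might look like this. It assumes ARC, and `AppendGapThenSegment` is an illustrative name, not from the original code.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (assumes ARC). Appends `gap` of empty time at *cursor
// (skipped when the gap is zero), then the source range
// [sourceStart, sourceStart + duration) of sourceTrack, and advances *cursor
// to the end of what was inserted.
static BOOL AppendGapThenSegment(AVMutableCompositionTrack *track,
                                 AVAssetTrack *sourceTrack,
                                 CMTime gap,
                                 CMTime sourceStart,
                                 CMTime duration,
                                 CMTime *cursor,
                                 NSError **outError)
{
    if (CMTimeCompare(gap, kCMTimeZero) > 0) {
        [track insertEmptyTimeRange:CMTimeRangeMake(*cursor, gap)];
        *cursor = CMTimeAdd(*cursor, gap);
    }
    BOOL inserted = [track insertTimeRange:CMTimeRangeMake(sourceStart, duration)
                                   ofTrack:sourceTrack
                                    atTime:*cursor
                                     error:outError];
    if (inserted) {
        *cursor = CMTimeAdd(*cursor, duration);
    }
    return inserted;
}
```

For the first cut on track A, the gap would be `CMTimeMakeWithSeconds(FIRST_CUT_TRACK_A_IN_TIME, VIDEO_TIME_SCALE)`, and the segment would use `firstShotRange`'s start and duration.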

![AVVideoCompositionLayerInstruction (yes, really)

✤ Identifies the instructions for one track within an

AVVideoCompositionInstruction.

✤ AVMutableVideoCompositionLayerInstruction. I warned you about

this back on slide 3.

✤ Currently supports two properties: opacity and affine transform.

Animating (“ramping”) these creates fades/cross-dissolves and

pushes.

✤ e.g., -[AVMutableVideoCompositionLayerInstruction

setOpacityRampFromStartOpacity:toEndOpacity:timeRange:]

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-55-320.jpg)
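The code that follows ramps opacity. The other supported property, the affine transform, is what produces a push. Below is a sketch of a left push between two tracks. It assumes ARC, and `PushLayerInstructions` is a hypothetical name. The translation-only transforms assume both tracks are unrotated and already fill `renderSize`.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (assumes ARC). Over `range`, track A slides out to the
// left while track B slides in from the right. Both tracks are assumed to be
// unrotated and already sized to renderSize.
static NSArray *PushLayerInstructions(AVAssetTrack *trackA,
                                      AVAssetTrack *trackB,
                                      CGSize renderSize,
                                      CMTimeRange range)
{
    CGAffineTransform offLeft =
        CGAffineTransformMakeTranslation(-renderSize.width, 0);
    CGAffineTransform offRight =
        CGAffineTransformMakeTranslation(renderSize.width, 0);

    AVMutableVideoCompositionLayerInstruction *outgoing =
        [AVMutableVideoCompositionLayerInstruction
            videoCompositionLayerInstructionWithAssetTrack:trackA];
    [outgoing setTransformRampFromStartTransform:CGAffineTransformIdentity
                                  toEndTransform:offLeft
                                       timeRange:range];

    AVMutableVideoCompositionLayerInstruction *incoming =
        [AVMutableVideoCompositionLayerInstruction
            videoCompositionLayerInstructionWithAssetTrack:trackB];
    [incoming setTransformRampFromStartTransform:offRight
                                  toEndTransform:CGAffineTransformIdentity
                                       timeRange:range];

    // After a ramp ends, each layer holds its final transform.
    return @[outgoing, incoming];
}
```

The first entry in `layerInstructions` is the top-most layer. Before the push starts, track B can therefore sit untransformed underneath track A.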

![An

AVVideoCompositionInstruction

AVMutableVideoCompositionInstruction *transitionInstruction =

[AVMutableVideoCompositionInstruction videoCompositionInstruction];

transitionInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, composition.duration);

AVMutableVideoCompositionLayerInstruction *aInstruction =

[AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:

trackA];

[aInstruction setOpacityRampFromStartOpacity:0.0 toEndOpacity:1.0

timeRange:CMTimeRangeMake(CMTimeMakeWithSeconds(2.9, VIDEO_TIME_SCALE),

CMTimeMakeWithSeconds(6.0, VIDEO_TIME_SCALE))];

AVMutableVideoCompositionLayerInstruction *bInstruction =

[AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:

trackB];

[bInstruction setOpacity:0 atTime:kCMTimeZero];

transitionInstruction.layerInstructions = [NSArray arrayWithObjects:aInstruction, bInstruction, nil];

[videoInstructions addObject: transitionInstruction];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-56-320.jpg)

![Attaching the instructions

AVMutableVideoComposition *videoComposition =

[AVMutableVideoComposition videoComposition];

videoComposition.instructions = videoInstructions;

videoComposition.renderSize = videoSize;

videoComposition.frameDuration = CMTimeMake(1, 30); // 30 fps

compositionPlayer.currentItem.videoComposition =

videoComposition;

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-57-320.jpg)
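The slide assigns the video composition to the current item of an existing `compositionPlayer`. If that player still has to be created, a minimal sketch looks like this. It assumes ARC, and `MakeCompositionPlayer` is a hypothetical name.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (assumes ARC). Builds a player whose item renders
// `composition` through `videoComposition` (nil for plain playback).
static AVPlayer *MakeCompositionPlayer(AVAsset *composition,
                                       AVVideoComposition *videoComposition)
{
    AVPlayerItem *item = [AVPlayerItem playerItemWithAsset:composition];
    item.videoComposition = videoComposition; // attach the layer instructions
    return [AVPlayer playerWithPlayerItem:item];
}
```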

![Creating a main title layer

// synchronized layer to own all the title layers

AVSynchronizedLayer *synchronizedLayer =

[AVSynchronizedLayer

synchronizedLayerWithPlayerItem:compositionPlayer.currentItem];

synchronizedLayer.frame = [compositionView frame];

[self.view.layer addSublayer:synchronizedLayer];

// main titles

CATextLayer *mainTitleLayer = [CATextLayer layer];

mainTitleLayer.string = NSLocalizedString(@"Running Start", nil);

mainTitleLayer.font = @"Verdana-Bold";

mainTitleLayer.fontSize = videoSize.height / 8;

mainTitleLayer.foregroundColor = [[UIColor yellowColor] CGColor];

mainTitleLayer.alignmentMode = kCAAlignmentCenter;

mainTitleLayer.frame = CGRectMake(0.0, 0.0, videoSize.width,

videoSize.height);

mainTitleLayer.opacity = 0.0; // initially invisible

[synchronizedLayer addSublayer:mainTitleLayer];

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-59-320.jpg)

![Adding an animation

// main title opacity animation

[CATransaction begin];

[CATransaction setDisableActions:YES];

CABasicAnimation *mainTitleInAnimation =

[CABasicAnimation animationWithKeyPath:@"opacity"];

mainTitleInAnimation.fromValue = [NSNumber numberWithFloat: 0.0];

mainTitleInAnimation.toValue = [NSNumber numberWithFloat: 1.0];

mainTitleInAnimation.removedOnCompletion = NO;

mainTitleInAnimation.beginTime = AVCoreAnimationBeginTimeAtZero;

mainTitleInAnimation.duration = 5.0;

[mainTitleLayer addAnimation:mainTitleInAnimation forKey:@"in-animation"];

[CATransaction commit];

Nasty gotcha: AVCoreAnimationBeginTimeAtZero is a special value for AV Foundation animations that start at time 0,

since a beginTime of 0 would otherwise be interpreted as CACurrentMediaTime()

](https://image.slidesharecdn.com/2-advanced-av-foundation-110823071412-phpapp01/85/Advanced-AV-Foundation-CocoaConf-Aug-11-60-320.jpg)
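Only a begin time of exactly zero needs the special constant. Other begin times are read as seconds on the player item's timeline, as long as the layer sits inside the `AVSynchronizedLayer`. Below is a sketch of a matching fade-out. It assumes ARC, and `AddFadeOut` and the `out-animation` key are hypothetical.

```objc
#import <AVFoundation/AVFoundation.h>
#import <QuartzCore/QuartzCore.h>

// Hypothetical helper (assumes ARC). `startSeconds` is on the player item's
// timeline, which applies when the layer lives in an AVSynchronizedLayer.
static void AddFadeOut(CALayer *layer,
                       CFTimeInterval startSeconds,
                       CFTimeInterval durationSeconds)
{
    CABasicAnimation *fadeOut = [CABasicAnimation animationWithKeyPath:@"opacity"];
    fadeOut.fromValue = @1.0f;
    fadeOut.toValue = @0.0f;
    // Only a start of exactly 0 needs the special constant.
    fadeOut.beginTime = (startSeconds == 0.0) ? AVCoreAnimationBeginTimeAtZero
                                              : startSeconds;
    fadeOut.duration = durationSeconds;
    // Hold the final (invisible) value instead of snapping back to the
    // layer's model opacity when the animation ends.
    fadeOut.fillMode = kCAFillModeForwards;
    fadeOut.removedOnCompletion = NO;
    [layer addAnimation:fadeOut forKey:@"out-animation"];
}
```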