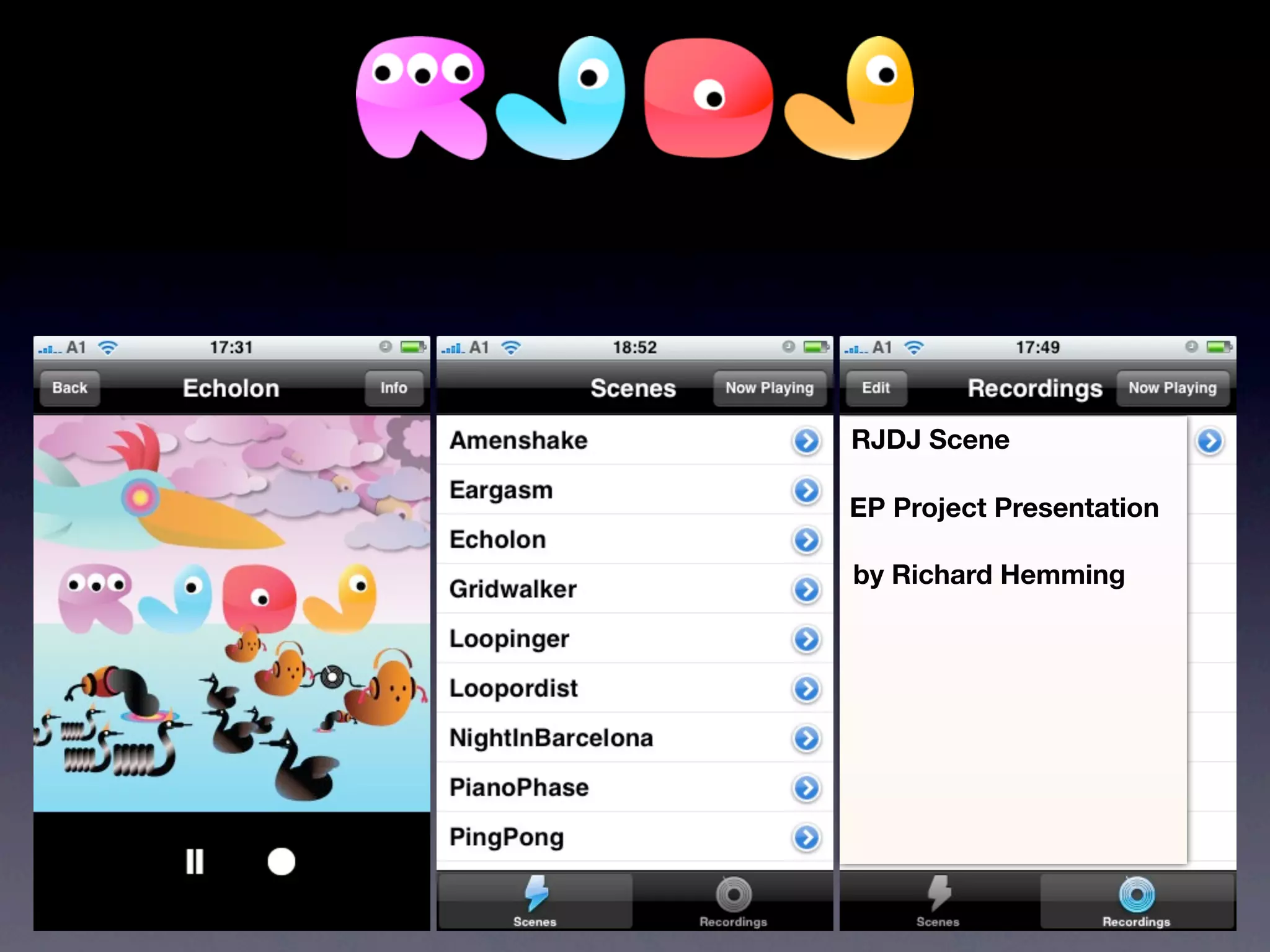

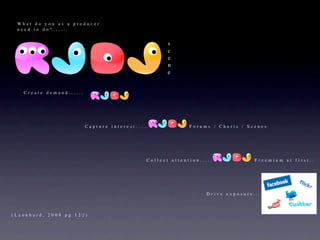

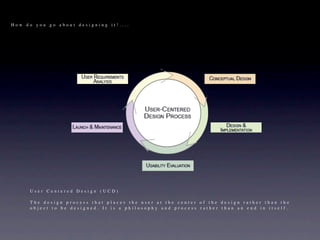

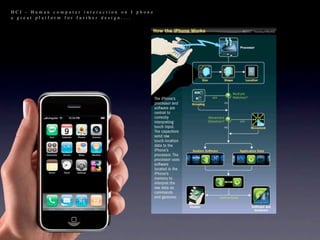

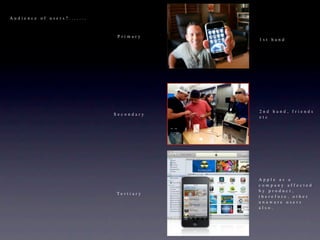

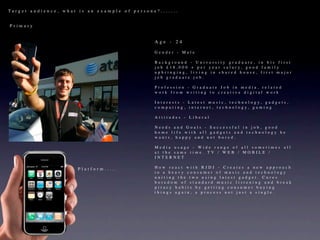

The document outlines plans to build interactive music scenes for RJDJ, an iPhone app. Each scene would analyze environmental sound captured by the microphone and generate responsive music using techniques such as spectral analysis and sample triggering, so that every user gets an immersive experience shaped by their own surroundings. The scenes will be programmed in Pure Data and will use techniques such as feedback loops and synthesis. The project aims to make users part of the creative process rather than passive listeners. It may be monetized through a freemium model with premium scenes. User testing and wireframing will inform the design process.
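The idea of spectral analysis driving sample triggering can be illustrated with a minimal sketch. It is written in Python rather than Pure Data, purely for readability, and is not the project's implementation: the sample rate, frame size, band edges, sample names, and threshold are all assumptions made for the example. It measures the energy of one audio frame in three frequency bands with a naive DFT and picks the sample associated with the loudest band.

```python
import math

SAMPLE_RATE = 8000  # Hz; assumed value for illustration
FRAME_SIZE = 256    # samples per analysis frame; assumed value

# Hypothetical mapping from sample names to frequency bands (low_hz, high_hz).
BANDS = {
    "bass_drone": (50, 300),
    "mid_chime": (300, 2000),
    "high_sparkle": (2000, 4000),
}


def band_energy(frame, sample_rate, low_hz, high_hz):
    """Sum the normalized squared DFT magnitudes of bins with low_hz <= f < high_hz."""
    n = len(frame)
    energy = 0.0
    for k in range(n // 2 + 1):
        freq = k * sample_rate / n
        if low_hz <= freq < high_hz:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(frame))
            im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(frame))
            energy += (re * re + im * im) / (n * n)
    return energy


def choose_sample(frame, sample_rate=SAMPLE_RATE, threshold=0.01):
    """Return the sample name for the loudest band, or None if the frame is too quiet."""
    energies = {
        name: band_energy(frame, sample_rate, low, high)
        for name, (low, high) in BANDS.items()
    }
    name, energy = max(energies.items(), key=lambda item: item[1])
    return name if energy >= threshold else None


def sine_frame(freq_hz, amplitude=0.5, n=FRAME_SIZE, sample_rate=SAMPLE_RATE):
    """Generate a test frame standing in for microphone input."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    print(choose_sample(sine_frame(125)))    # low-frequency hum
    print(choose_sample(sine_frame(1000)))   # mid-range tone
    print(choose_sample([0.0] * FRAME_SIZE)) # silence
```

In a real scene the frame would come from the microphone and the chosen name would start playback of an audio sample; a Pure Data patch would typically do the same analysis with its FFT objects instead of an explicit loop.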