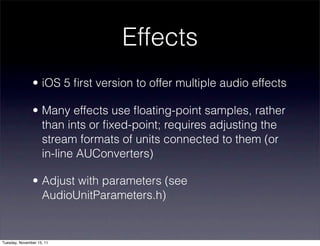

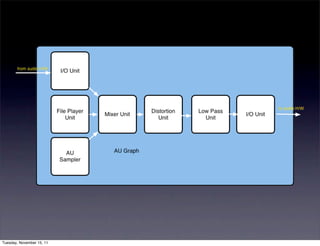

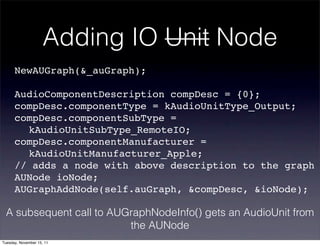

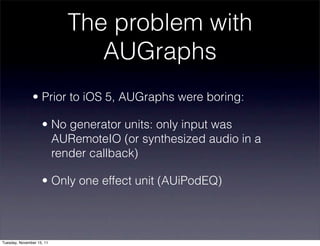

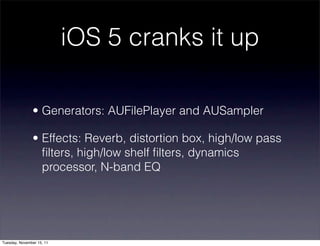

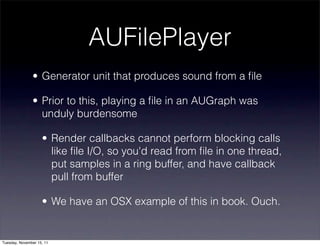

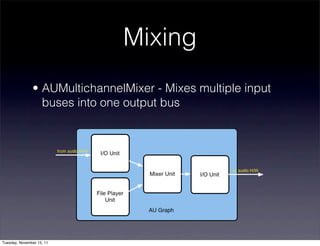

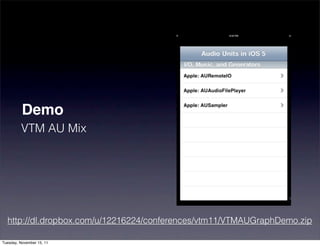

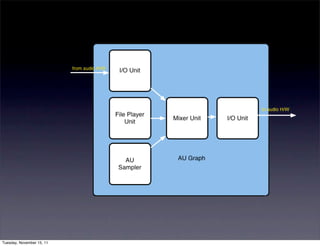

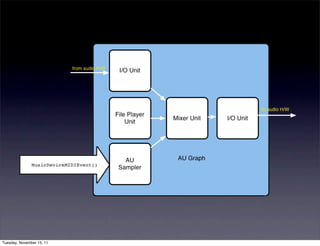

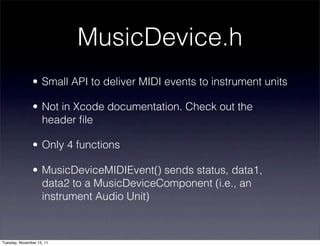

The document discusses Core Audio, a low-level audio processing API in iOS that provides capabilities for audio units, mixing, and effects. It covers the advanced features introduced in iOS 5, including generator units for playing audio files and multiple audio effects like reverb and filters. Additionally, it touches on MIDI integration for handling music events and the configuration of audio units for real-time audio manipulation.

![MIDI set-up

// create a MIDI client and an input port whose read proc receives packets
MIDIClientCreate(CFSTR("VTM iOS Demo"), MyMIDINotifyProc,
        callbackContext, &client);
MIDIInputPortCreate(client, CFSTR("Input port"),
        MyMIDIReadProc, callbackContext, &inPort);
// connect every available MIDI source to the input port
unsigned long sourceCount = MIDIGetNumberOfSources();
for (int i = 0; i < sourceCount; ++i) {
    MIDIEndpointRef src = MIDIGetSource(i);
    CFStringRef endpointName = NULL;
    MIDIObjectGetStringProperty(src, kMIDIPropertyName,
            &endpointName);
    char endpointNameC[255];
    CFStringGetCString(endpointName, endpointNameC,
            255, kCFStringEncodingUTF8);
    printf(" source %d: %s\n", i, endpointNameC);
    MIDIPortConnectSource(inPort, src, NULL);
}

Tuesday, November 15, 11](https://image.slidesharecdn.com/vtmiosbostoncoreaudio-111115211842-phpapp02/85/Core-Audio-Cranks-It-Up-28-320.jpg)
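The Core MIDI calls on the set-up slide each return an `OSStatus`, which the slide ignores for brevity. Many Core Audio status values are four-character codes packed into a 32-bit integer, so a small formatting helper makes failures easier to read. The following is a minimal sketch, not code from the talk: `StatusCode` and `format_status` are hypothetical names, and `int32_t` stands in for `OSStatus` so the block compiles without Apple headers.

```c
#include <ctype.h>
#include <stdint.h>
#include <stdio.h>

/* Stand-in for OSStatus (a signed 32-bit integer); an assumption made so
   this sketch builds without the Core Audio headers. */
typedef int32_t StatusCode;

/* Hypothetical helper: writes a readable form of `status` into `out`.
   If all four bytes are printable, the result is a quoted four-character
   code such as 'fmt?'; otherwise it is the signed decimal value.
   Returns `out` for convenience. */
const char *format_status(StatusCode status, char *out, size_t outSize)
{
    uint32_t bits = (uint32_t)status;
    unsigned char c[4] = {
        (unsigned char)((bits >> 24) & 0xFF),
        (unsigned char)((bits >> 16) & 0xFF),
        (unsigned char)((bits >> 8) & 0xFF),
        (unsigned char)(bits & 0xFF)
    };

    if (isprint(c[0]) && isprint(c[1]) && isprint(c[2]) && isprint(c[3])) {
        snprintf(out, outSize, "'%c%c%c%c'", c[0], c[1], c[2], c[3]);
    } else {
        snprintf(out, outSize, "%d", (int)status);
    }
    return out;
}
```

In an app, the return value of a call such as `MIDIClientCreate` could be passed to a helper like this before logging, so that a failure is reported in a recognizable form rather than silently dropped.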

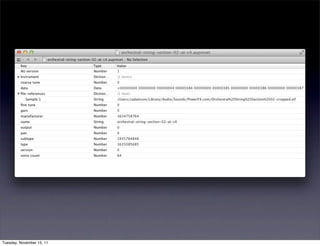

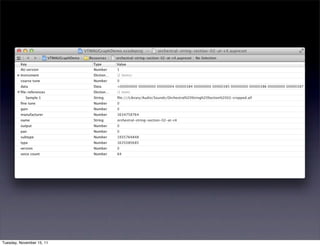

![Loading .aupreset (1/3)

NSString *filePath = [[NSBundle mainBundle]
        pathForResource: AU_SAMPLER_PRESET_FILE
        ofType:@"aupreset"];
CFURLRef presetURL =
        (__bridge CFURLRef) [NSURL fileURLWithPath:filePath];
// load preset file into a CFDataRef
CFDataRef presetData = NULL;
SInt32 errorCode = noErr;
Boolean gotPresetData =
        CFURLCreateDataAndPropertiesFromResource(
                kCFAllocatorSystemDefault, presetURL,
                &presetData, NULL, NULL, &errorCode);

](https://image.slidesharecdn.com/vtmiosbostoncoreaudio-111115211842-phpapp02/85/Core-Audio-Cranks-It-Up-41-320.jpg)

![Delivering MIDI event

MIDIPacket *packet = (MIDIPacket *)pktlist->packet;
for (int i=0; i < pktlist->numPackets; i++) {
    Byte midiStatus = packet->data[0];
    Byte midiCommand = midiStatus >> 4;
    // is it a note-on or note-off
    if ((midiCommand == 0x09) || (midiCommand == 0x08)) {
        Byte note = packet->data[1] & 0x7F;
        Byte velocity = packet->data[2] & 0x7F;
        // send to augraph
        MusicDeviceMIDIEvent (myVC.auSampler, midiStatus,
                note, velocity, 0);
    }
    packet = MIDIPacketNext(packet);
}

](https://image.slidesharecdn.com/vtmiosbostoncoreaudio-111115211842-phpapp02/85/Core-Audio-Cranks-It-Up-48-320.jpg)
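The read proc on the slide above splits the status byte into its command nibble and masks the two data bytes to 7 bits. The sketch below isolates that decoding step in plain C so it can be exercised without Core MIDI. It is an illustration rather than the talk's code: `NoteKind`, `NoteEvent`, and `decode_note_event` are hypothetical names. Unlike the slide, it also extracts the channel from the low nibble and treats a note-on with velocity 0 as a note-off, which is the usual MIDI convention.

```c
#include <stddef.h>
#include <stdint.h>

typedef enum { MIDI_OTHER, MIDI_NOTE_OFF, MIDI_NOTE_ON } NoteKind;

typedef struct {
    NoteKind kind;
    uint8_t  channel;   /* 0-15, low nibble of the status byte */
    uint8_t  note;      /* 0-127 */
    uint8_t  velocity;  /* 0-127 */
} NoteEvent;

/* Hypothetical helper: decodes the first message in `data`.
   Anything that is not a complete note-on/note-off message is
   reported as MIDI_OTHER with the remaining fields zeroed. */
NoteEvent decode_note_event(const uint8_t *data, size_t length)
{
    NoteEvent ev = { MIDI_OTHER, 0, 0, 0 };

    /* A note message is a status byte followed by two data bytes. */
    if (data == NULL || length < 3) {
        return ev;
    }

    uint8_t status  = data[0];
    uint8_t command = status >> 4;      /* high nibble: message type */

    if (command != 0x09 && command != 0x08) {
        return ev;
    }

    ev.channel  = status & 0x0F;
    ev.note     = data[1] & 0x7F;       /* data bytes carry 7 bits */
    ev.velocity = data[2] & 0x7F;

    /* Note-on with velocity 0 is conventionally a note-off. */
    if (command == 0x09 && ev.velocity > 0) {
        ev.kind = MIDI_NOTE_ON;
    } else {
        ev.kind = MIDI_NOTE_OFF;
    }
    return ev;
}
```

Inside a real read proc, `packet->data` and `packet->length` would be the natural arguments, with the original status byte still forwarded to `MusicDeviceMIDIEvent` as the slide does.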