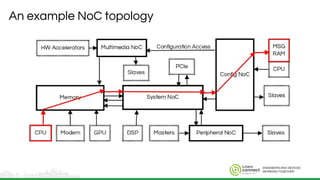

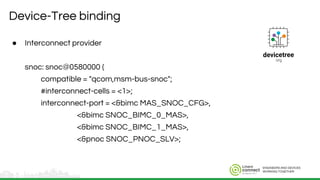

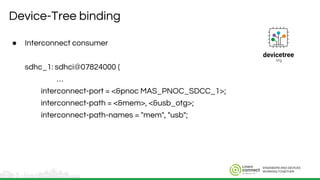

The document outlines the challenges posed by on-chip interconnect buses in System on Chip (SoC) architectures and the solutions proposed for them, emphasizing the need for a common framework in the upstream Linux kernel. It proposes a new interconnect framework with two parts. Vendor-specific provider drivers know how to configure the interconnect hardware. A consumer API lets device drivers state their bandwidth needs, so that data flow and resource allocation can be managed across the SoC. Future work includes incorporating review feedback, exploring additional Quality of Service (QoS) constraints, and extending support to more interconnect drivers.
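The consumer/provider split can be illustrated with a small userspace model. This is a minimal sketch, not the framework's code. It assumes that each consumer states an average and a peak bandwidth, and that a shared interconnect node combines these requests by summing the averages and taking the maximum peak. This is the policy of the `icc_std_aggregate()` helper in the mainline kernel; the framework proposed in this document may differ. All names below (`struct bw_req`, `struct node_demand`, `node_aggregate`, `node_fits`) are hypothetical and are not part of the kernel API. In mainline kernel code, a consumer would instead obtain a path with `of_icc_get()`, request bandwidth with `icc_set_bw()`, and release the path with `icc_put()`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of one consumer's bandwidth request on a path.
 * Units are arbitrary (e.g. kBps). This is NOT the kernel's struct icc_req. */
struct bw_req {
    uint32_t avg_bw;  /* sustained bandwidth the consumer needs */
    uint32_t peak_bw; /* burst bandwidth the consumer needs */
};

/* Aggregated demand placed on a single (hypothetical) interconnect node. */
struct node_demand {
    uint64_t avg_bw;  /* sum of all consumers' average requests */
    uint32_t peak_bw; /* largest single peak request */
};

/* Combine the requests of every consumer sharing a node:
 * averages add up, and peaks are combined with max(). This mirrors
 * the policy of the mainline icc_std_aggregate() helper. */
static struct node_demand node_aggregate(const struct bw_req *reqs, size_t n)
{
    struct node_demand d = { 0, 0 };

    assert(reqs != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        d.avg_bw += reqs[i].avg_bw;
        if (reqs[i].peak_bw > d.peak_bw)
            d.peak_bw = reqs[i].peak_bw;
    }
    return d;
}

/* A real provider driver would program the hardware so that the node can
 * sustain the aggregated demand. Here we only check the demand against a
 * hypothetical node capacity. Returns 1 if it fits, 0 otherwise. */
static int node_fits(struct node_demand d, uint64_t capacity)
{
    return d.avg_bw <= capacity && d.peak_bw <= capacity;
}
```

For example, three consumers requesting (avg, peak) values of (100, 400), (250, 300), and (50, 600) produce an aggregated demand of 400 average and 600 peak on the shared node. Summing averages reflects that sustained traffic from all consumers accumulates. Taking the maximum peak assumes that the bursts do not all coincide.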