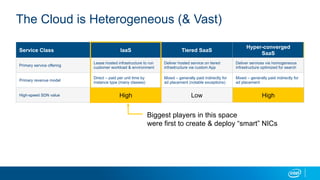

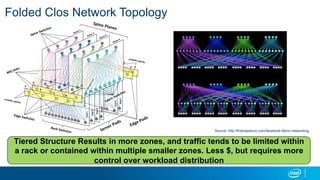

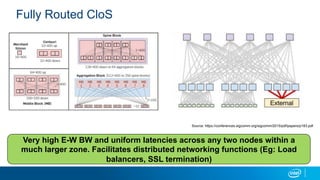

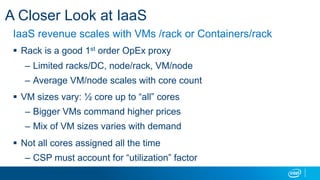

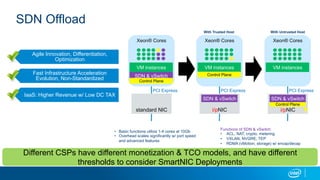

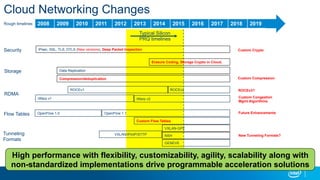

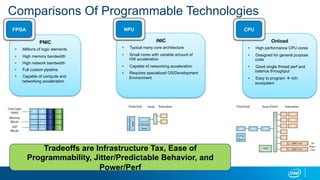

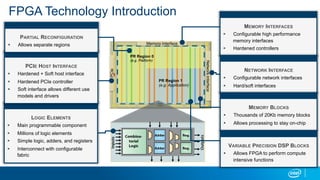

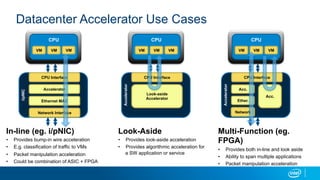

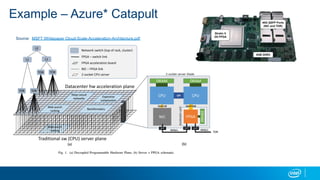

The document discusses trends in cloud networking: service classes such as IaaS and SaaS, the network topologies used to build them, and the future role of network interface cards (NICs) and FPGAs in data centers. Key insights include how revenue models differ across service classes, why SDN matters and how much of its processing can be offloaded, and the scalability challenges of IaaS infrastructure. It highlights innovation in networking and accelerators, and emphasizes that future advances in cloud services will likely take a non-standardized approach.