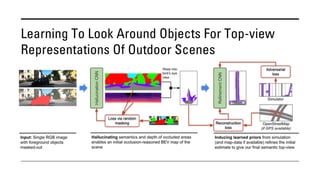

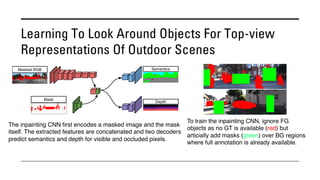

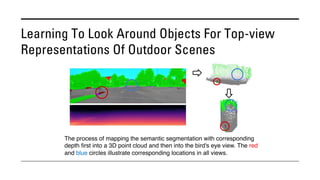

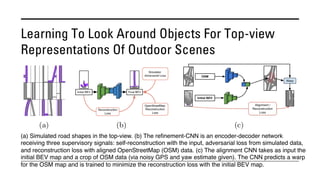

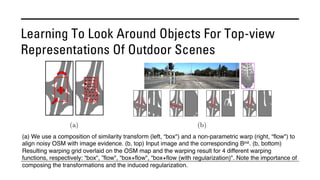

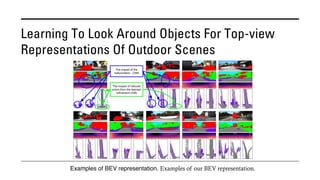

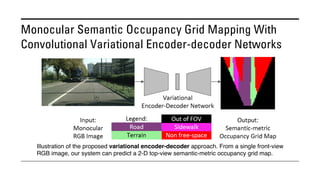

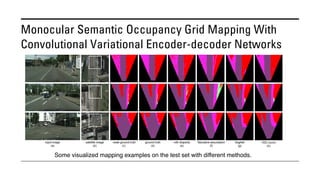

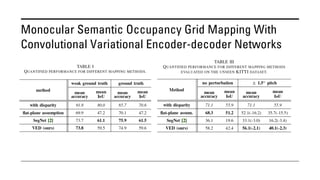

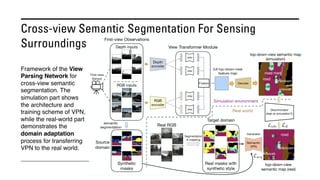

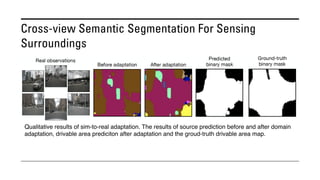

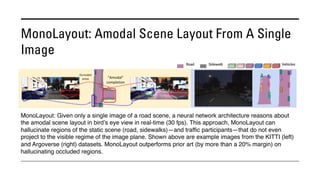

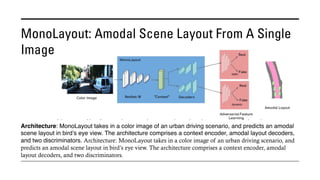

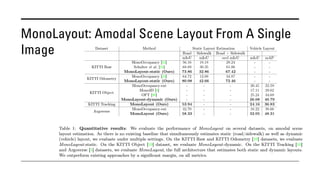

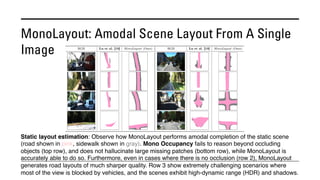

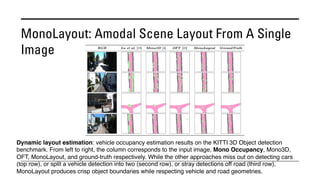

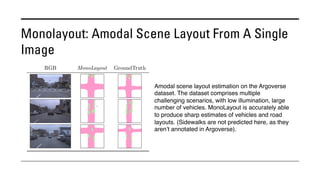

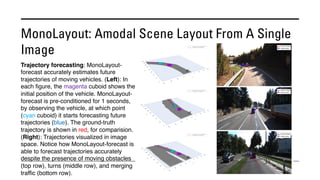

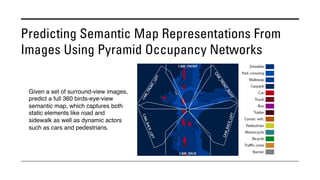

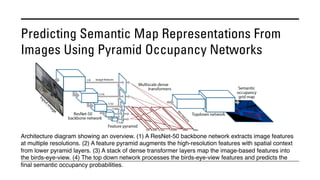

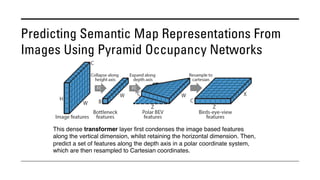

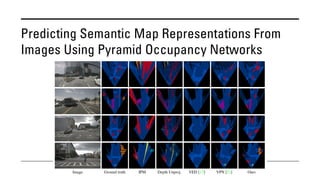

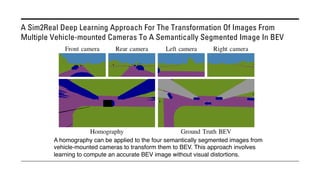

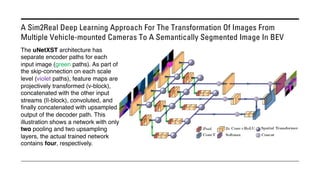

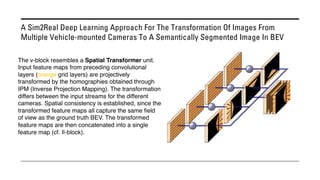

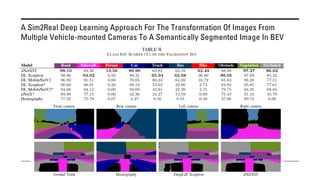

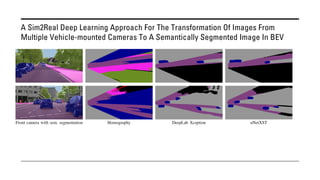

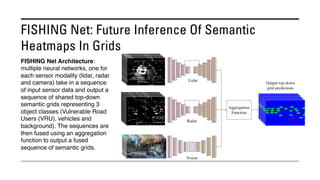

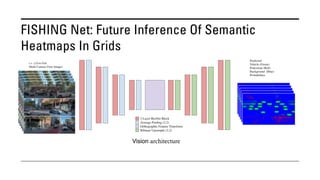

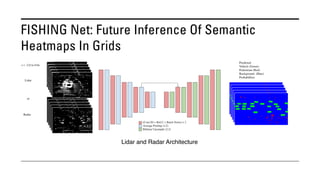

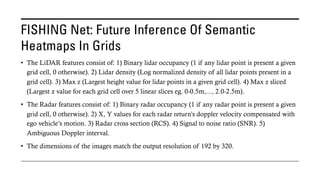

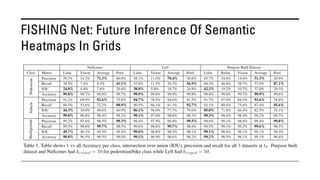

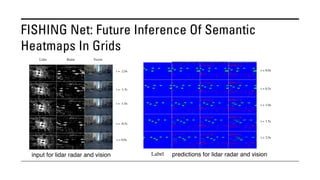

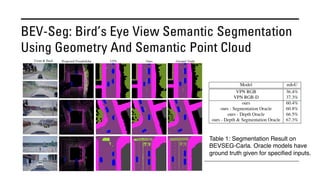

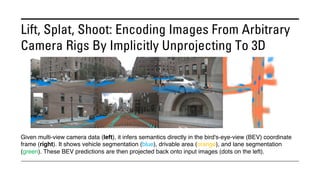

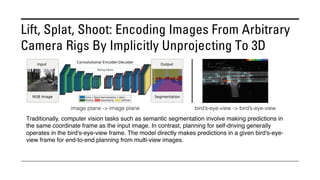

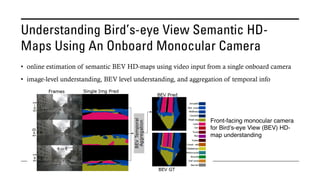

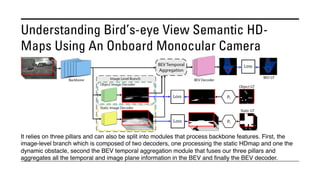

The document surveys neural-network methods for bird's-eye-view (BEV) semantic segmentation and scene representation. Key techniques include monocular semantic occupancy grid mapping, cross-view semantic segmentation, and MonoLayout, which predicts amodal scene layouts from a single image. It also covers a sim2real deep learning approach that transforms multi-camera images into a semantically segmented BEV representation, and frameworks that aggregate sensor data to infer future semantic heatmaps.