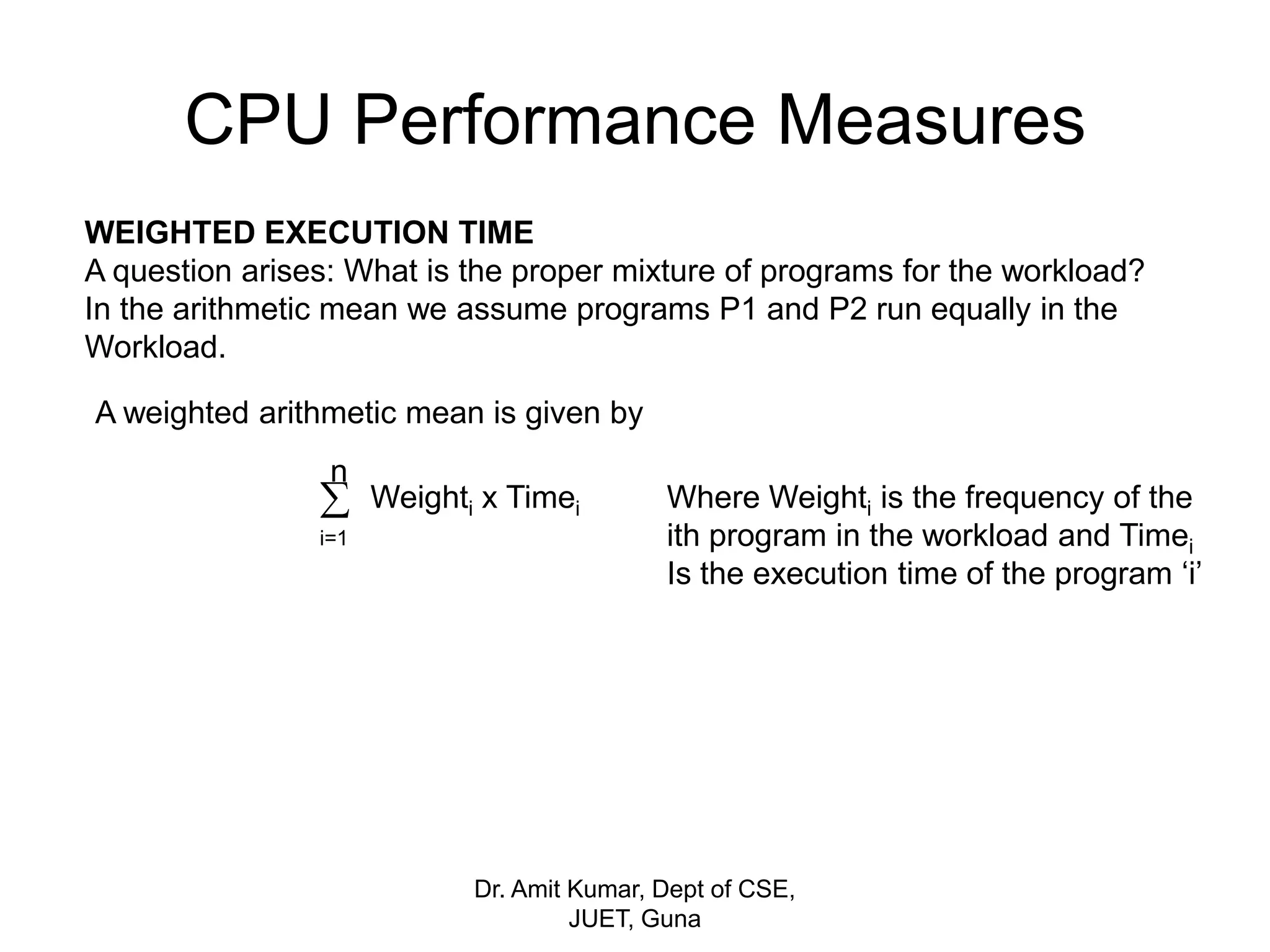

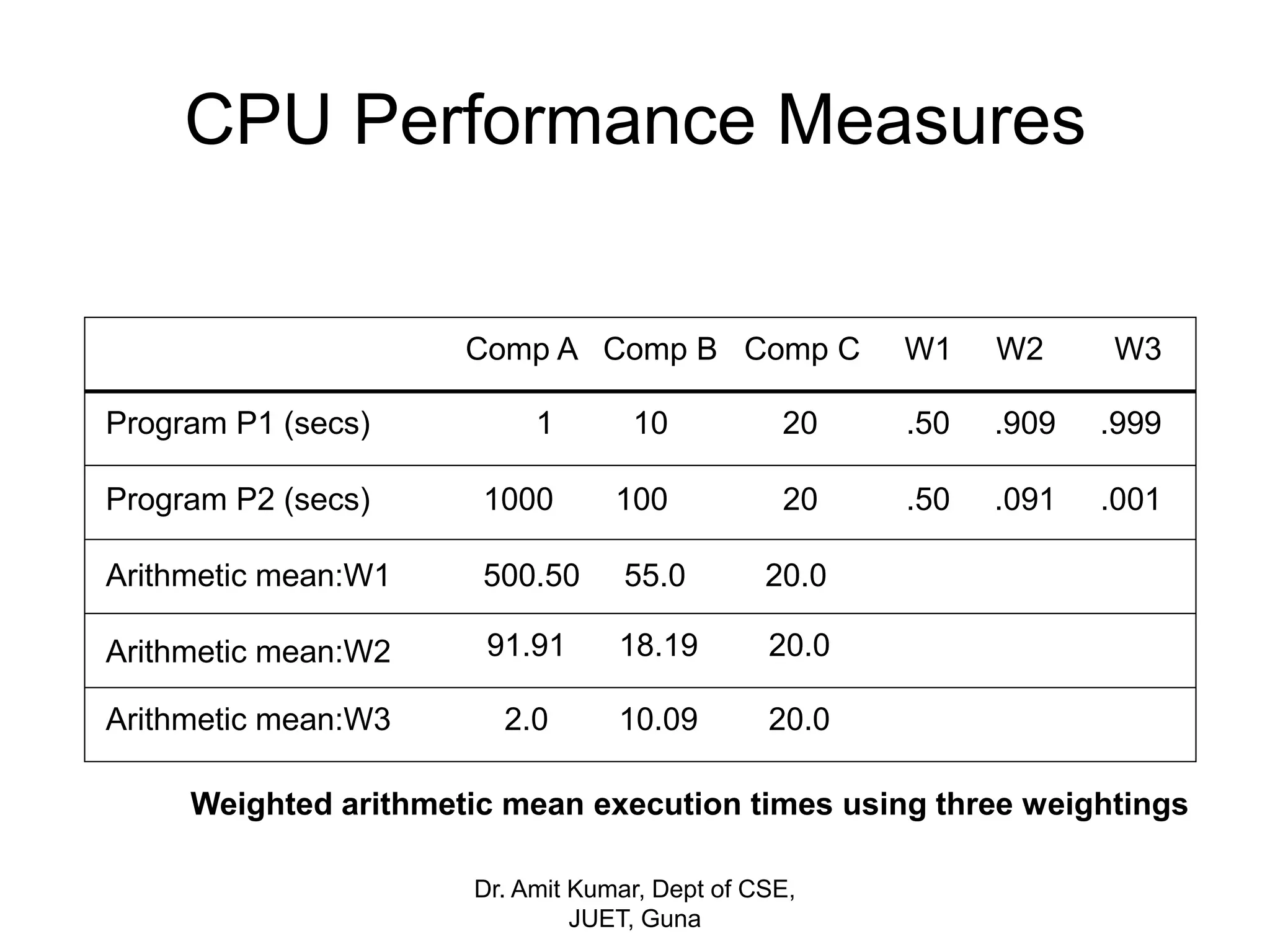

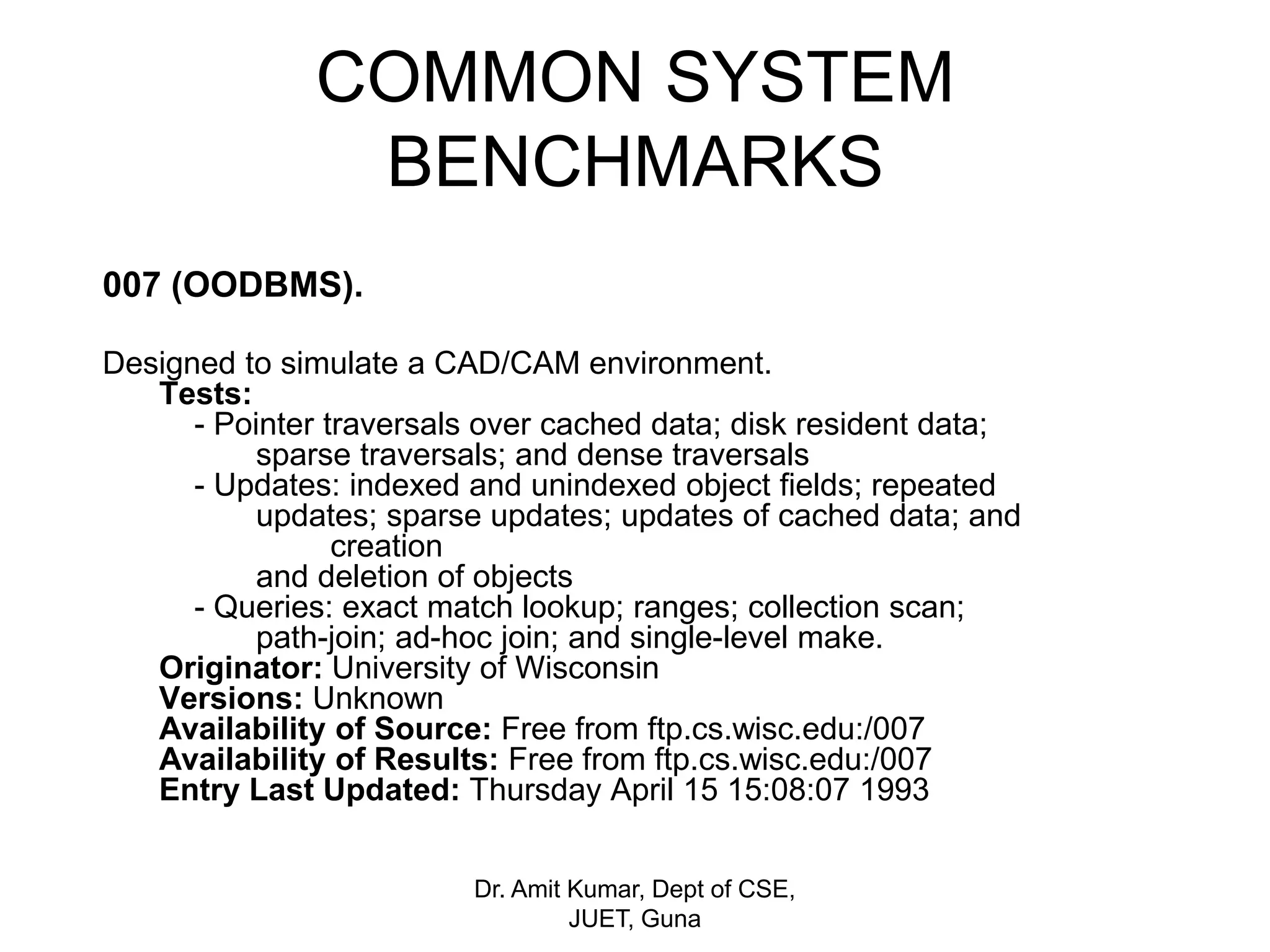

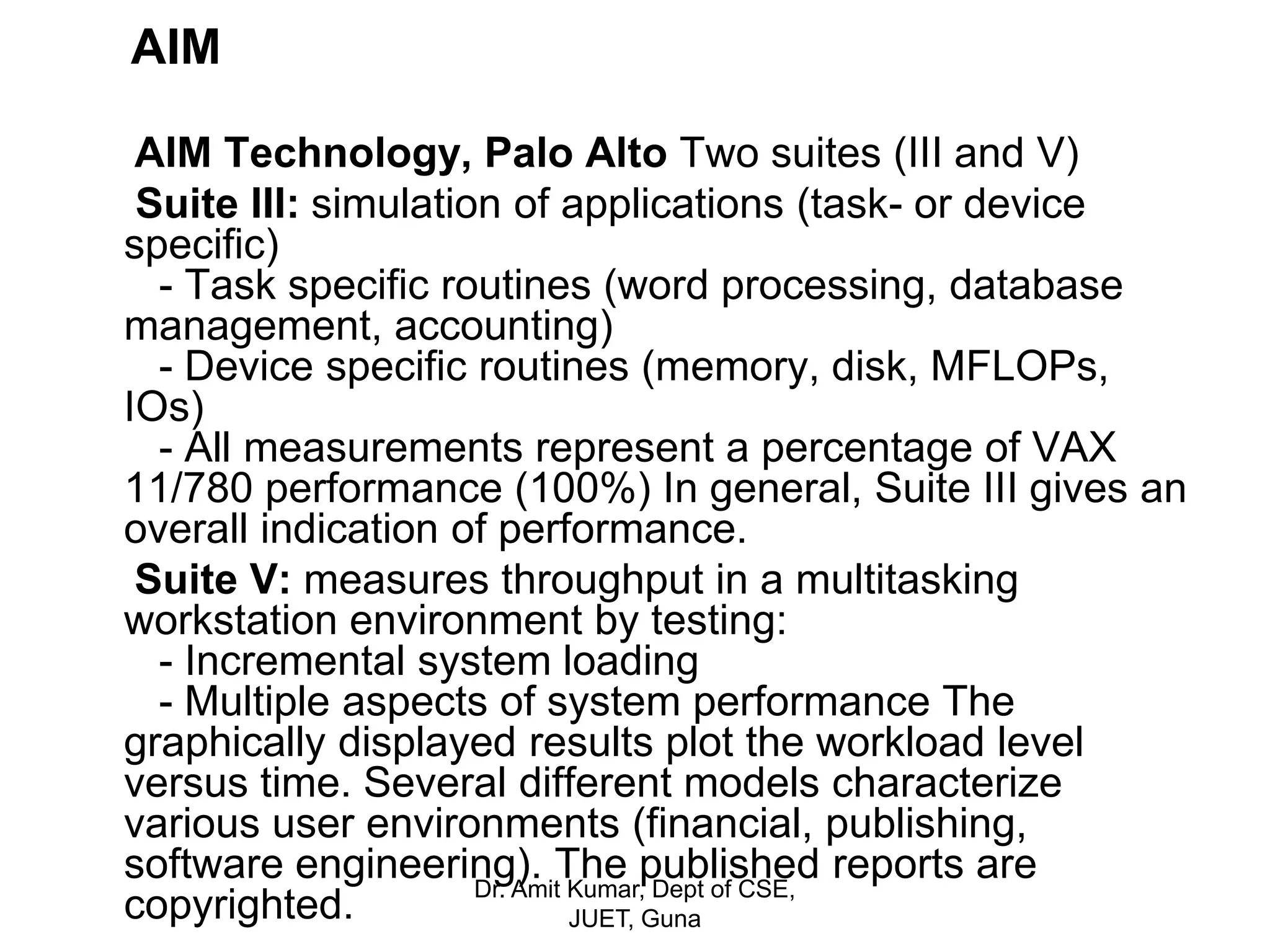

The document discusses benchmarks and benchmarking. It provides definitions and examples of benchmarks, benchmarking, and different types of benchmarks. Some key points:

- Benchmarking is comparing performance metrics to industry best practices to measure performance. Benchmarks are tests used to assess relative performance.

- In computing, benchmarks run programs or operations to assess performance of hardware or software. Common benchmarks include Dhrystone, LINPACK, and SPEC.

- There are different types of benchmarks, including synthetic benchmarks designed to test specific components, real programs, microbenchmarks that test small pieces of code, and benchmark suites that test with a variety of applications.

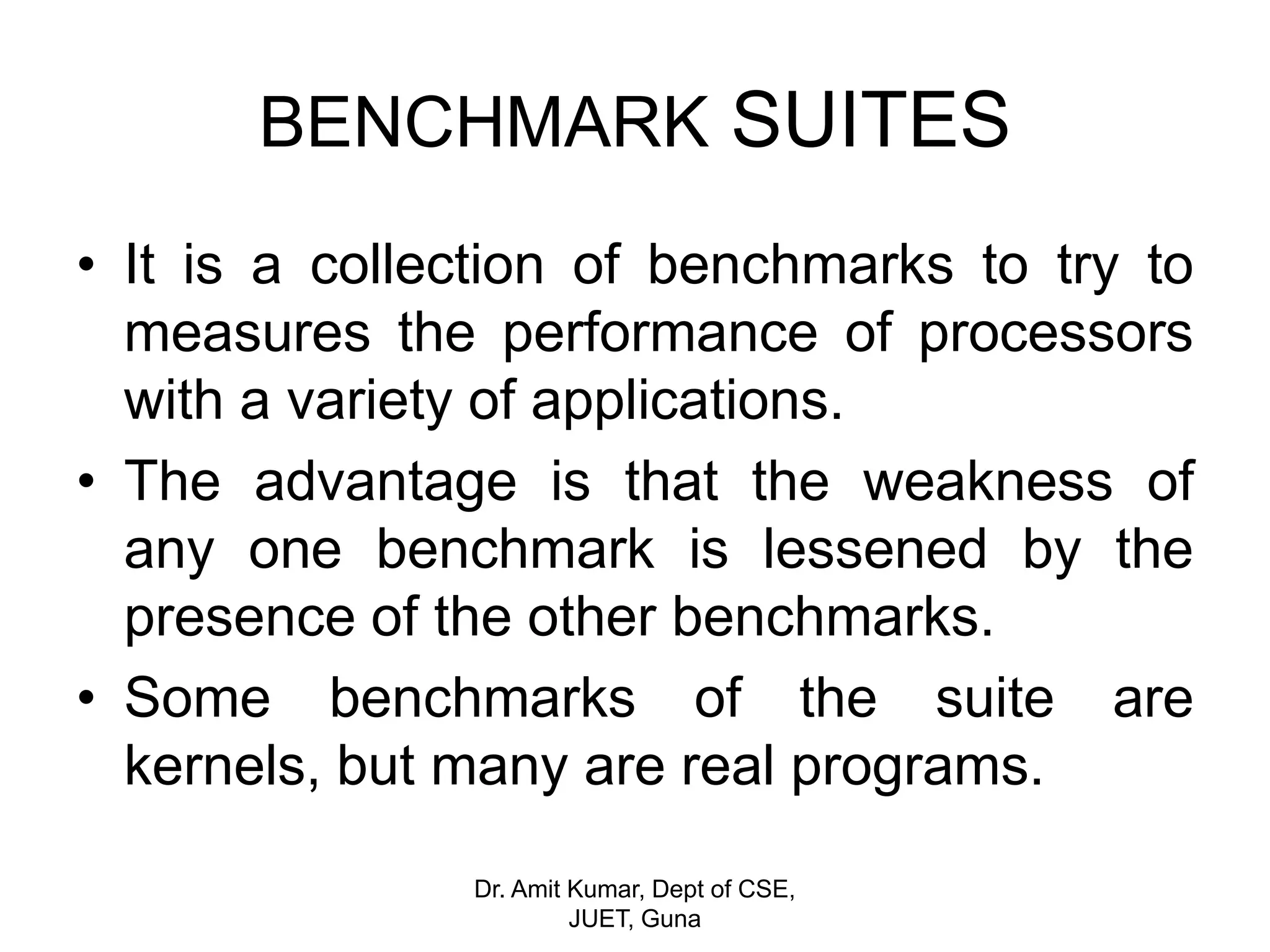

![SPEC

SPEC stands for Standard Performance Evaluation Corporation, a non-profit

organization whose goal is to "establish, maintain and endorse a standardized

set of relevant benchmarks that can be applied to the newest generation of

high performance computers" (from SPEC's bylaws). The SPEC benchmarks

and more information can be obtained from:

SPEC [Standard Performance Evaluation Corporation]

c/o NCGA [National Computer Graphics Association]

2722 Merrilee Drive

Suite 200

Fairfax, VA 22031

USA

The current SPEC benchmark suites are:

CINT92 (CPU intensive integer benchmarks)

CFP92 (CPU intensive floating point benchmarks)

SDM (UNIX Software Development Workloads)

SFS (System level file server (NFS) workload)

Dr. Amit Kumar, Dept of CSE,

JUET, Guna](https://image.slidesharecdn.com/benchmarks-180428050607/75/Benchmarks-34-2048.jpg)

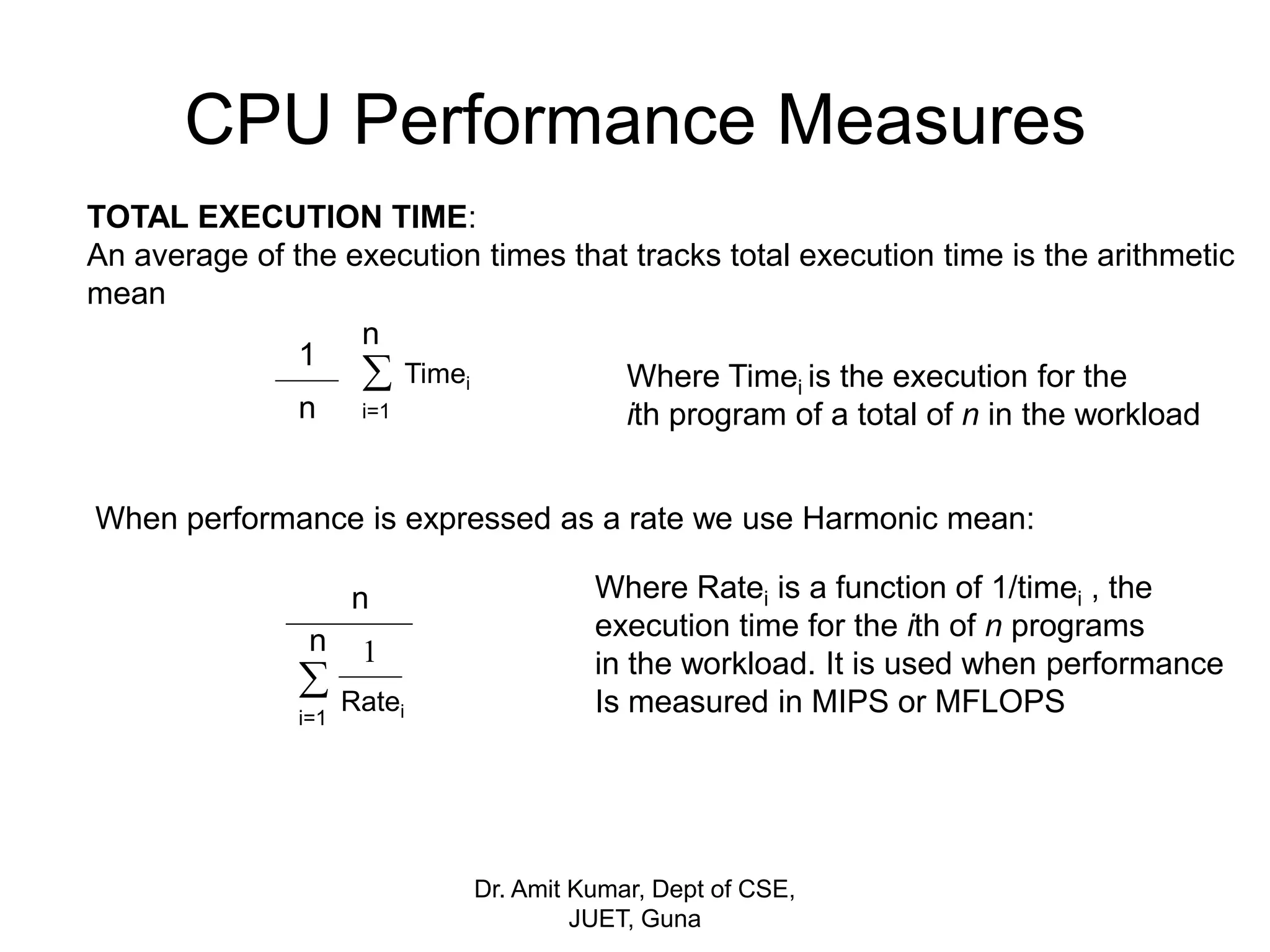

![Stanford

A collection of C routines developed in 1988 at Stanford University

(J. Hennessy, P. Nye). Its two modules, Stanford Integer and Stanford

Floating Point, provide a baseline for comparisons between Reduced

Instruction Set (RISC) and Complex Instruction Set (CISC) processor

architectures

Stanford Integer:

- Eight applications (integer matrix multiplication, sorting algorithm

[quick, bubble, tree], permutation, hanoi, 8 queens puzzle)

Stanford Floating Point:

- Two applications (Fast Fourier Transform [FFT] and matrix multiplication)

The characteristics of the programs vary, but most of them have array

accesses. There seems to be no official publication (only a printing in a

performance report). Secondly, there is no defined weighting of the

results (Sun and MIPS compute the geometric mean).

Dr. Amit Kumar, Dept of CSE,

JUET, Guna](https://image.slidesharecdn.com/benchmarks-180428050607/75/Benchmarks-40-2048.jpg)

![IOBENCH

IOBENCH is a multi-stream benchmark that uses a controlling process

(iobench) to start, coordinate, and measure a number of "user" processes

(iouser); the Makefile parameters used for the SPEC version of IOBENCH

cause ioserver to be built as a "do nothing" process.

IOZONE

This test writes an X MB sequential file in Y byte chunks, then rewinds it

and reads it back. [The size of the file should be big enough to factor out

the effect of any disk cache.] Finally, IOZONE deletes the temporary file.

The file is written (filling any cache buffers), and then read.

If the cache is >= X MB, then most if not all of the reads will be satisfied

from the cache. However, if the cache is <= .5X MB, then NONE of the

reads will be satisfied from the cache. This is because after the file is written,

a .5X MB cache will contain the upper .5 MB of the test file, but we will start

reading from the beginning of the file (data which is no longer in the cache).

In order for this to be a fair test, the length of the test file must be AT LEAST

2X the amount of disk cache memory for your system. If not, you are really

testing the speed at which your CPU can read blocks out of the cache

(not a fair test).

Dr. Amit Kumar, Dept of CSE,

JUET, Guna](https://image.slidesharecdn.com/benchmarks-180428050607/75/Benchmarks-42-2048.jpg)