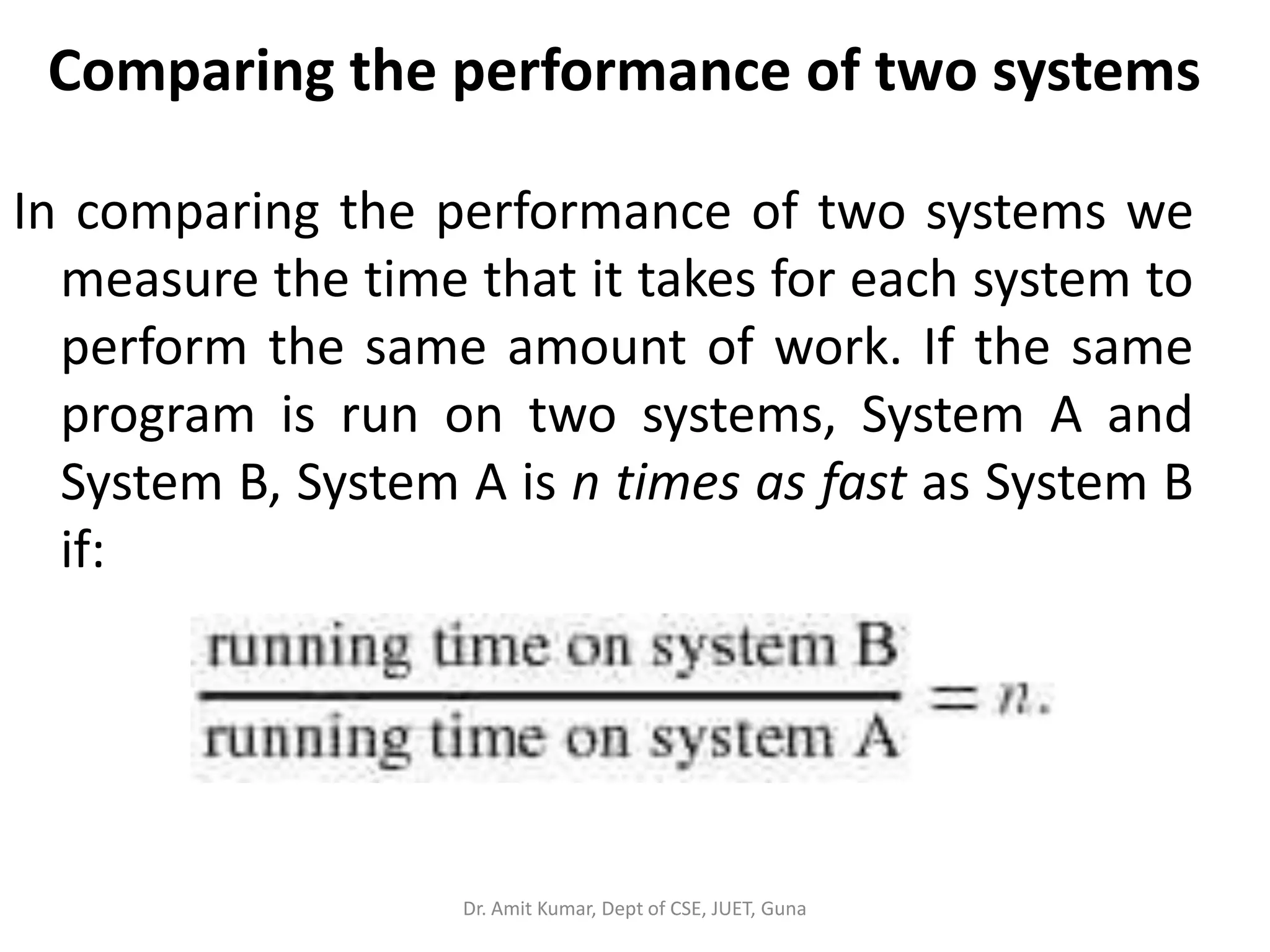

Computer performance is the amount of useful work a system accomplishes relative to the time and resources it uses. It is measured through metrics such as response time, throughput, and utilization, and it depends on hardware, software, memory, and I/O. Benchmarks evaluate performance by measuring how systems execute standardized tasks. Sustaining high performance means tuning each of these components, for example through CPU enhancement, memory improvement, and I/O optimization.
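As a rough illustration of the three metrics named above, the sketch below derives mean response time, throughput, and utilization from a small request log. The `Request` record, the `summarize` helper, and the sample timestamps are all hypothetical, and the utilization figure assumes a single server that handles one request at a time.

```python
from dataclasses import dataclass


@dataclass
class Request:
    arrival: float  # seconds since the start of the observation window
    start: float    # time at which service began
    finish: float   # time at which service completed


def summarize(requests, window):
    """Return (mean response time, throughput, utilization) for one window.

    Assumes a single server, so busy time is the sum of service times.
    """
    n = len(requests)
    # Response time includes both waiting and service time.
    mean_response = sum(r.finish - r.arrival for r in requests) / n
    # Throughput: completed requests per second of observation.
    throughput = n / window
    # Utilization: fraction of the window the server spent doing work.
    busy = sum(r.finish - r.start for r in requests)
    utilization = busy / window
    return mean_response, throughput, utilization


# Made-up sample data: three requests observed over a 10 second window.
reqs = [
    Request(arrival=0.0, start=0.0, finish=2.0),
    Request(arrival=1.0, start=2.0, finish=3.0),  # waited 1 s in the queue
    Request(arrival=4.0, start=4.0, finish=5.0),
]
mean_response, throughput, utilization = summarize(reqs, window=10.0)
print(f"mean response time: {mean_response:.2f} s")
print(f"throughput: {throughput:.2f} requests/s")
print(f"utilization: {utilization:.0%}")
```

The second request shows why response time and service time differ: it is served in one second but spends another second waiting.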

![Basic Performance Metrics

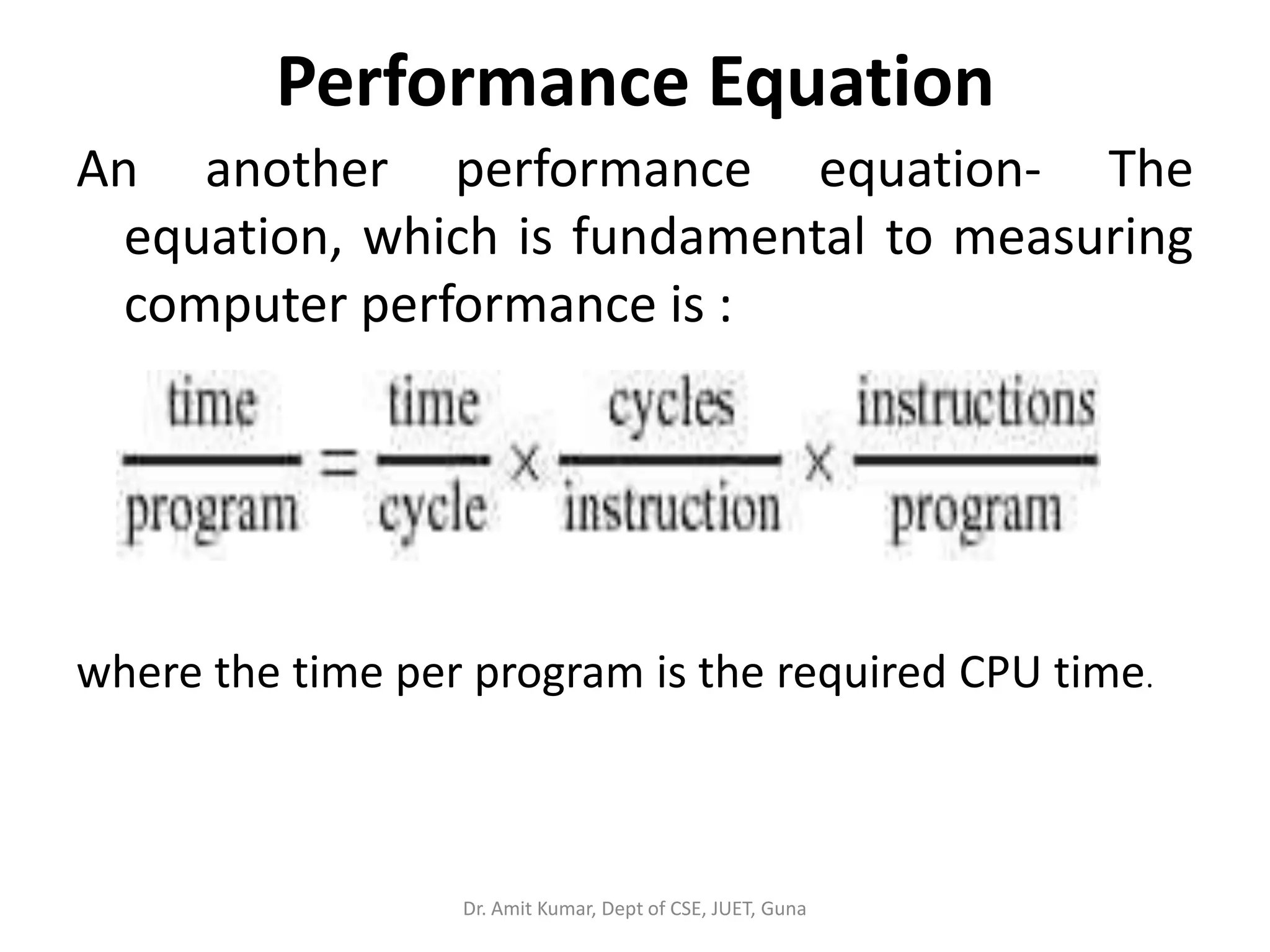

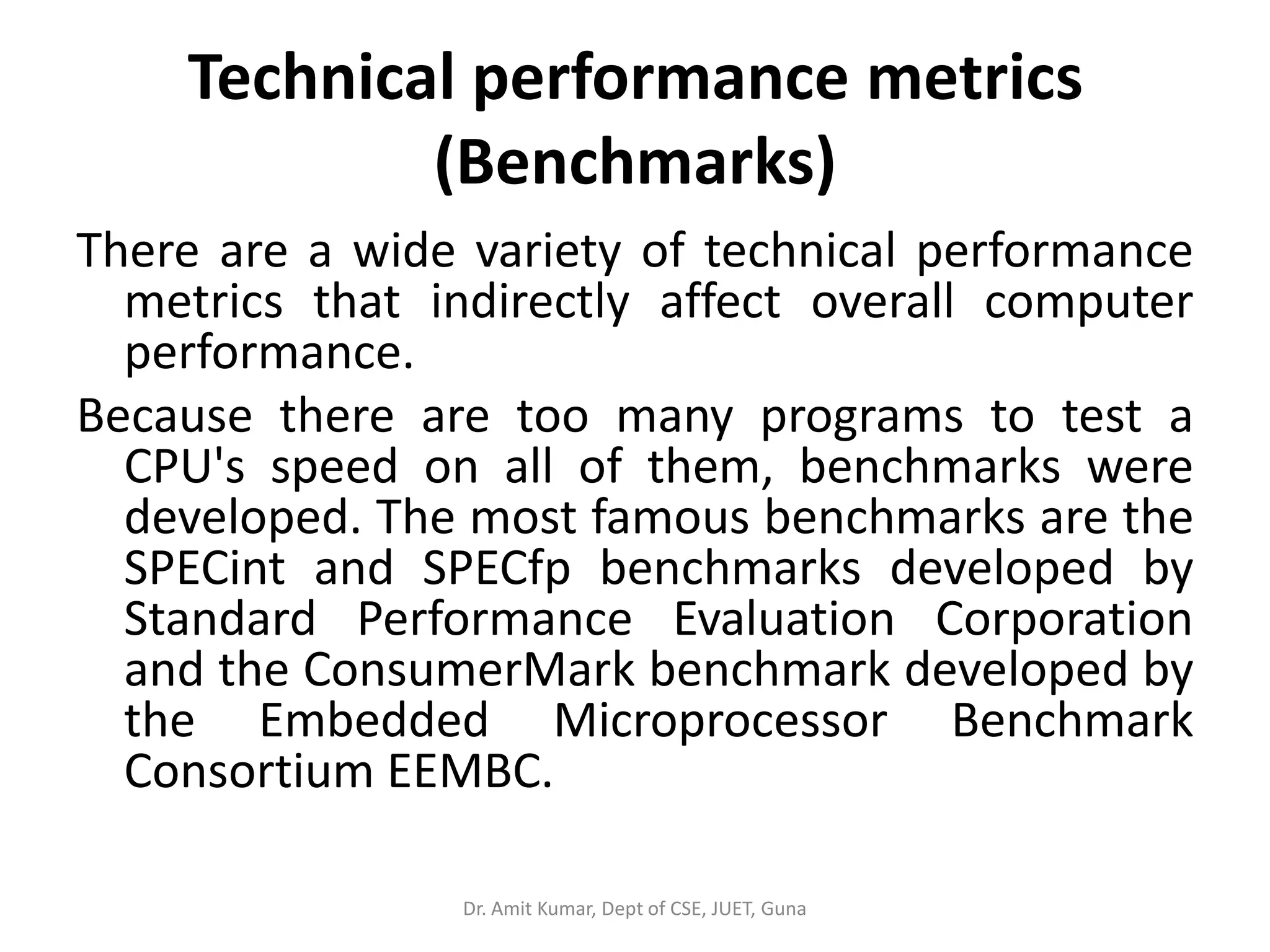

• Time related:

– Execution time [seconds]

• wall clock time

• system and user time

– Latency

– Response time

• Rate related:

– Rate of computation

• floating point operations per second [flops]

• integer operations per second [ops]

– Data transfer (I/O) rate [bytes/second]

• Effectiveness:

– Efficiency [%]

– Memory consumption [bytes]

– Productivity [utility/($*second)]

• Modifiers:

– Sustained

– Peak

– Theoretical peak Dr. Amit Kumar, Dept of CSE, JUET, Guna](https://image.slidesharecdn.com/computerperformance-180428051010/75/Computer-performance-30-2048.jpg)
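The time, rate, and effectiveness metrics on this slide can be measured with the Python standard library, as in the minimal sketch below. The `measure`, `dot`, and `efficiency_percent` helpers are hypothetical names, and `ASSUMED_PEAK_FLOPS` is a placeholder rather than a real hardware figure. In pure Python the interpreter overhead dominates, so the sustained rate will be far below what the hardware can deliver.

```python
import os
import time


def measure(fn, *args):
    """Run fn once; return (result, wall, user, system) times in seconds."""
    cpu_before = os.times()
    wall_before = time.perf_counter()
    result = fn(*args)
    wall = time.perf_counter() - wall_before
    cpu_after = os.times()
    user = cpu_after.user - cpu_before.user        # CPU time in user mode
    system = cpu_after.system - cpu_before.system  # CPU time in the kernel
    return result, wall, user, system


def dot(n):
    """Dot product of two length-n vectors: n multiplies plus n adds."""
    a = [1.0] * n
    b = [2.0] * n
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


def efficiency_percent(sustained, peak):
    """Efficiency [%]: sustained rate as a share of the peak rate."""
    return 100.0 * sustained / peak


n = 200_000
result, wall, user, system = measure(dot, n)

flop_count = 2 * n                   # one multiply and one add per element
sustained_flops = flop_count / wall  # rate of computation [flops]
ASSUMED_PEAK_FLOPS = 1e9             # hypothetical peak, for illustration only

print(f"wall clock: {wall:.4f} s, user: {user:.4f} s, system: {system:.4f} s")
print(f"sustained rate: {sustained_flops:.3e} flops")
print(f"efficiency: {efficiency_percent(sustained_flops, ASSUMED_PEAK_FLOPS):.2f} %")
```

Wall clock time includes any time the process spends waiting, while user and system time count only the CPU time charged to the process, so the two can differ noticeably on a loaded machine.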

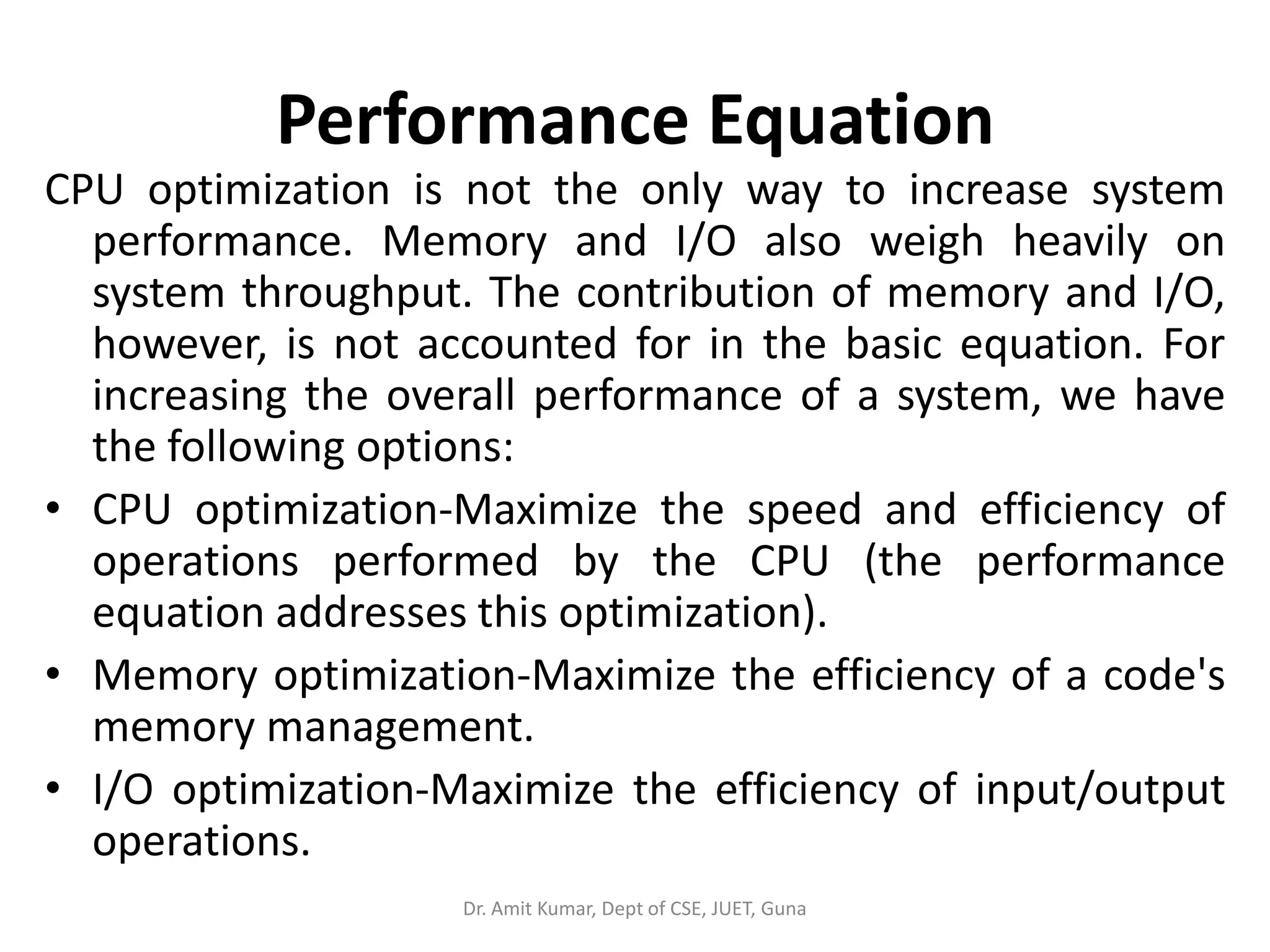

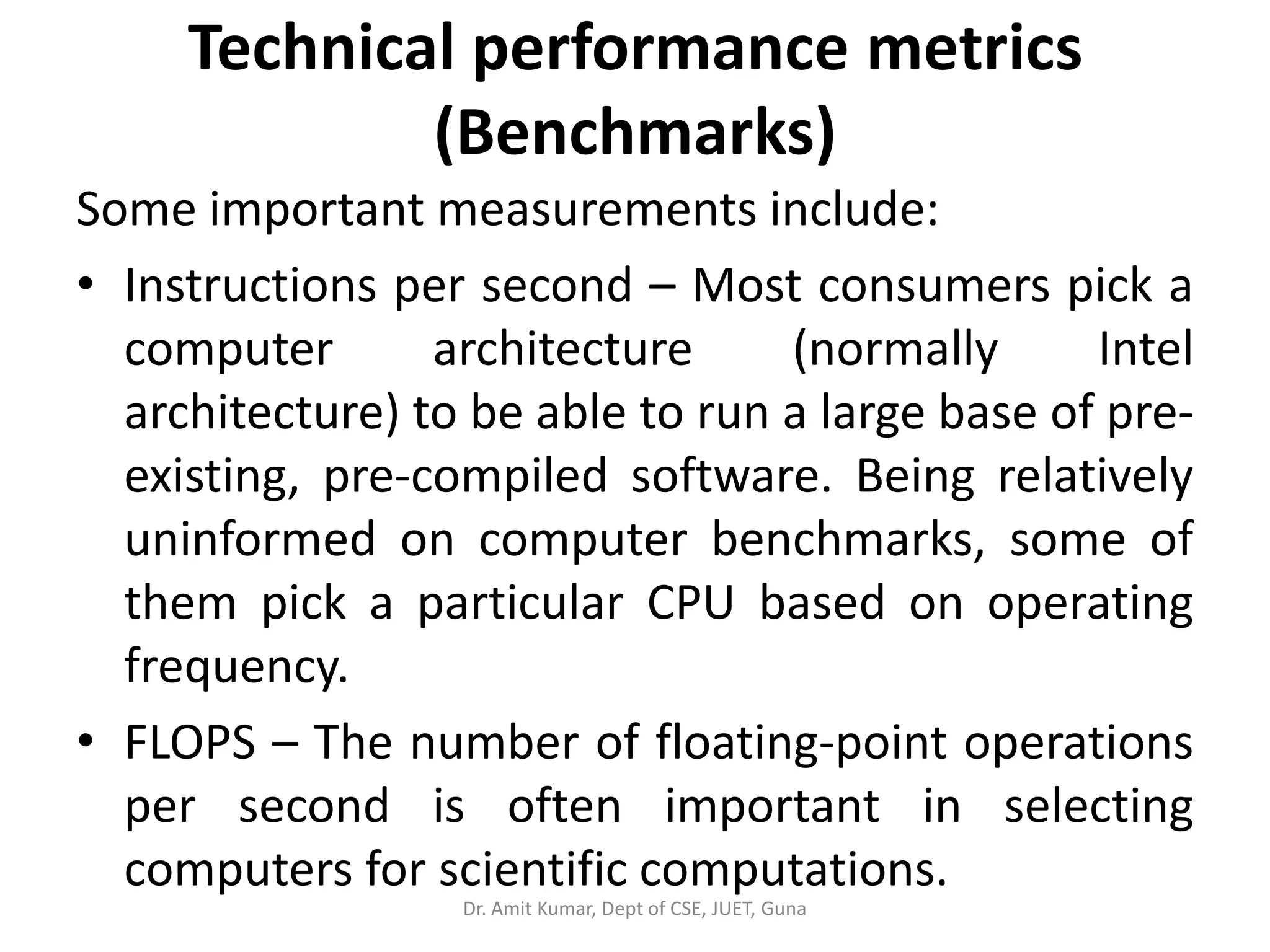

![What Is a Benchmark?](https://image.slidesharecdn.com/computerperformance-180428051010/75/Computer-performance-31-2048.jpg)

What Is a Benchmark?

• Benchmark: a standardized problem or test that serves as a basis for evaluation or comparison (as of computer system performance) [Merriam-Webster]
• The term “benchmark” also commonly applies to specially designed programs used in benchmarking
• A benchmark should:
  – be domain specific (the more general the benchmark, the less useful it is for anything in particular)
  – be a distillation of the essential attributes of a workload
  – avoid using a single metric to express the overall performance
• Computational benchmark kinds:
  – synthetic: specially created programs that impose a load on a specific component in the system
  – application: derived from a real-world application program
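A synthetic benchmark in the sense described above might look like the following sketch. The two kernels and the `run_benchmark` helper are hypothetical: one kernel loads mainly the CPU, the other mainly memory, and each is timed over several repetitions so that the report carries more than one number per kernel.

```python
import statistics
import time


def compute_kernel(n=100_000):
    """Integer arithmetic loop: loads mainly the CPU."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def memory_kernel(size=1_000_000):
    """Buffer copy: loads mainly memory."""
    src = bytearray(size)
    dst = bytes(src)  # copies the whole buffer
    return len(dst)


def run_benchmark(kernel, repeats=5):
    """Time a kernel several times and report several statistics."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        kernel()
        samples.append(time.perf_counter() - start)
    return {
        "best": min(samples),
        "median": statistics.median(samples),
        "worst": max(samples),
    }


report = {k.__name__: run_benchmark(k) for k in (compute_kernel, memory_kernel)}
for name, stats in report.items():
    print(
        f"{name}: best {stats['best']:.6f} s, "
        f"median {stats['median']:.6f} s, worst {stats['worst']:.6f} s"
    )
```

Keeping the kernels separate and reporting best, median, and worst times follows the slide's advice not to collapse overall performance into a single metric.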