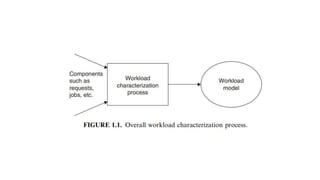

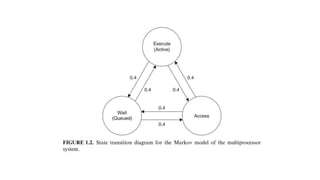

This document covers performance evaluation of computer and telecommunication systems. Performance evaluation aims to predict a system's behavior quantitatively; it is used to compare designs, plan capacity, and debug performance problems. Its approaches (modeling, simulation, and testing) vary in cost and accuracy. Key metrics include counts, times, sizes, productivity, response time, and reliability measures. Workload characterization analyzes how systems are used, while benchmarks compare performance across systems by running the same standardized tests on each.
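As an illustration of the count, time, and response-time metrics mentioned above, the following is a minimal sketch of how they could be derived from a measured trace. The function name `summarize`, the `(arrival, completion)` trace format, and the sample values are assumptions made for this example; they do not come from the document. Throughput (completed requests per second) is used here as one possible reading of a productivity metric.

```python
from statistics import mean


def summarize(requests):
    """Summarize a trace of (arrival_time, completion_time) pairs, in seconds.

    Hypothetical helper: the trace format is an assumption for illustration.
    """
    if not requests:
        raise ValueError("need at least one request")

    # Response time of each request: completion minus arrival.
    response_times = [done - arrived for arrived, done in requests]

    # Observation window: from the first arrival to the last completion.
    window = max(done for _, done in requests) - min(arr for arr, _ in requests)

    return {
        "count": len(requests),
        "mean_response_time": mean(response_times),
        "max_response_time": max(response_times),
        # Completed requests per second over the observation window.
        "throughput": len(requests) / window if window > 0 else float("inf"),
    }


# Made-up sample trace: four requests observed over four seconds.
sample = [(0.0, 0.5), (1.0, 1.5), (2.0, 3.0), (3.0, 4.0)]
stats = summarize(sample)
print(stats)
```

The same summary could be computed for two candidate designs under an identical workload, which is the kind of comparison a benchmark formalizes.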