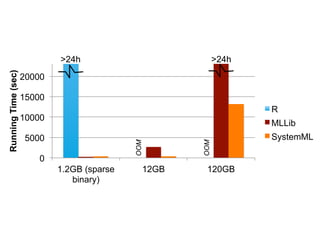

Apache SystemML is a declarative machine learning library that:

1) Allows users to write custom machine learning algorithms declaratively without needing to handle performance or distribution details.

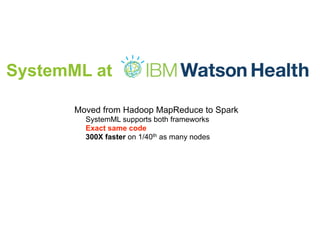

2) Generates and optimizes low-level execution plans to transparently distribute and run algorithms at scale on data-parallel frameworks like Spark.

3) Began as a set of IBM Research projects on running machine learning on Hadoop and on how data scientists build ML solutions, and has since become an Apache open-source project.
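
To make points 1 and 2 more concrete, here is a minimal sketch of what "declarative" means in this context. It is an analogy in plain NumPy, not SystemML code: SystemML has its own R-like language (DML), and the function name `fit_linear_regression` and the `reg` parameter are hypothetical names chosen for illustration. The algorithm is written only as linear algebra over whole matrices, with nothing said about partitioning, caching, or parallelism. In a system like SystemML, those decisions are left to the compiler that produces the execution plan.

```python
import numpy as np


def fit_linear_regression(X, y, reg=1e-8):
    """Least squares via the normal equations, written purely as matrix algebra.

    Hypothetical illustration: the code states *what* to compute
    (solve (X^T X + reg*I) w = X^T y), not *how* to distribute it.
    """
    n_features = X.shape[1]
    A = X.T @ X + reg * np.eye(n_features)  # small ridge term for numerical stability
    b = X.T @ y
    return np.linalg.solve(A, b)


# Synthetic, noise-free data so the fitted weights can be checked.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w

w = fit_linear_regression(X, y)
print(np.round(w, 3))
```

NumPy evaluates these operations eagerly on a single machine. The point of the sketch is only the programming style: the same high-level description could, in principle, be compiled to a local plan for small inputs or to a distributed Spark plan for large ones without changing the algorithm's source.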