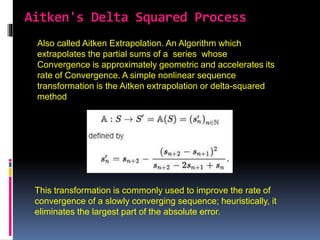

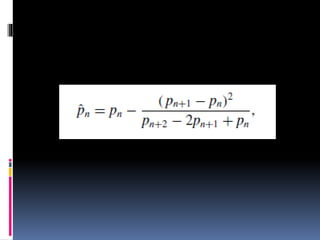

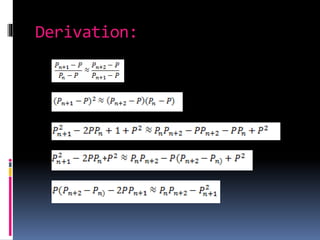

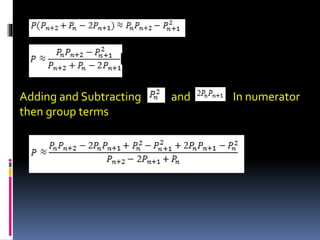

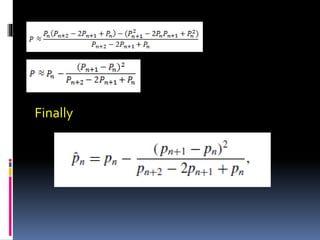

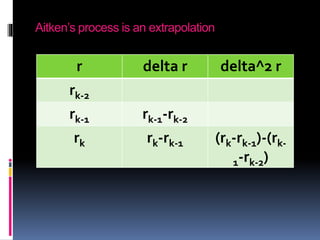

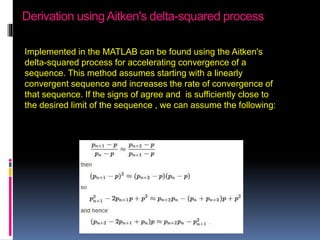

Aitken's delta-squared process is a method for accelerating the convergence of a numerical sequence. It extrapolates from three consecutive terms, such as successive partial sums of a series, on the assumption that the error shrinks approximately geometrically. The extrapolation removes the dominant part of the error, so the accelerated sequence converges faster than the original. Applied to root-finding algorithms and other fixed-point iterations, it speeds up linearly convergent schemes, and when the iteration is restarted from each extrapolated value (Steffensen's method) it can give quadratic convergence.
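As a rough illustration of the extrapolation step, the sketch below applies the standard delta-squared formula, x0 - (x1 - x0)^2 / (x2 - 2*x1 + x0), to every consecutive triple of a sequence. The function name `aitken_delta_squared` and the test sequence are assumptions made for this example and do not come from the slides.

```python
def aitken_delta_squared(seq):
    """Return the Aitken-accelerated values built from consecutive triples of seq."""
    accelerated = []
    for x0, x1, x2 in zip(seq, seq[1:], seq[2:]):
        denom = x2 - 2.0 * x1 + x0  # second forward difference
        if denom == 0.0:
            # Differences have stalled; fall back to the latest term.
            accelerated.append(x2)
        else:
            accelerated.append(x0 - (x1 - x0) ** 2 / denom)
    return accelerated


# Hypothetical test sequence with exactly geometric error: x_n = 1 + 0.5**n.
seq = [1.0 + 0.5 ** n for n in range(5)]
print(aitken_delta_squared(seq))
```

When the error is exactly geometric, as in this test sequence, every accelerated value equals the limit. For sequences that are only approximately geometric, the accelerated values are closer to the limit but not exact.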

Example: find a root of $\cos x - x e^{x} = 0$ with $x_0 = 0.0$.

Let the linear iterative process be

$$x_{i+1} = x_i + \tfrac{1}{2}\left(\cos x_i - x_i e^{x_i}\right), \qquad i = 0, 1, 2, \ldots$$

![Example: root of cos(x) - x exp(x) = 0 by linear iteration](https://image.slidesharecdn.com/aitkensmethod-170829115234/85/Aitken-s-method-16-320.jpg)
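The example above can be tried numerically. The sketch below is one possible arrangement and not necessarily the procedure the slides follow: it takes two steps of the stated linear iteration, applies the delta-squared extrapolation, and restarts from the extrapolated value (the Steffensen variant). The names `g`, `aitken`, and `accelerated_root`, along with the tolerance and iteration cap, are assumptions chosen for illustration.

```python
import math


def g(x):
    """One step of the linear iteration from the example."""
    return x + 0.5 * (math.cos(x) - x * math.exp(x))


def aitken(x0, x1, x2):
    """Delta-squared extrapolation from three consecutive iterates."""
    denom = x2 - 2.0 * x1 + x0
    if denom == 0.0:
        return x2  # iterates have stalled; nothing left to extrapolate
    return x0 - (x1 - x0) ** 2 / denom


def accelerated_root(x0=0.0, tol=1e-10, max_iter=50):
    """Restart the iteration from each extrapolated value (Steffensen variant)."""
    x = x0
    for _ in range(max_iter):
        x1 = g(x)
        x2 = g(x1)
        x_new = aitken(x, x1, x2)
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    return x


root = accelerated_root()
residual = math.cos(root) - root * math.exp(root)
print(root, residual)
```

Printing the residual alongside the root gives a direct check that the returned value satisfies the original equation to within rounding error.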