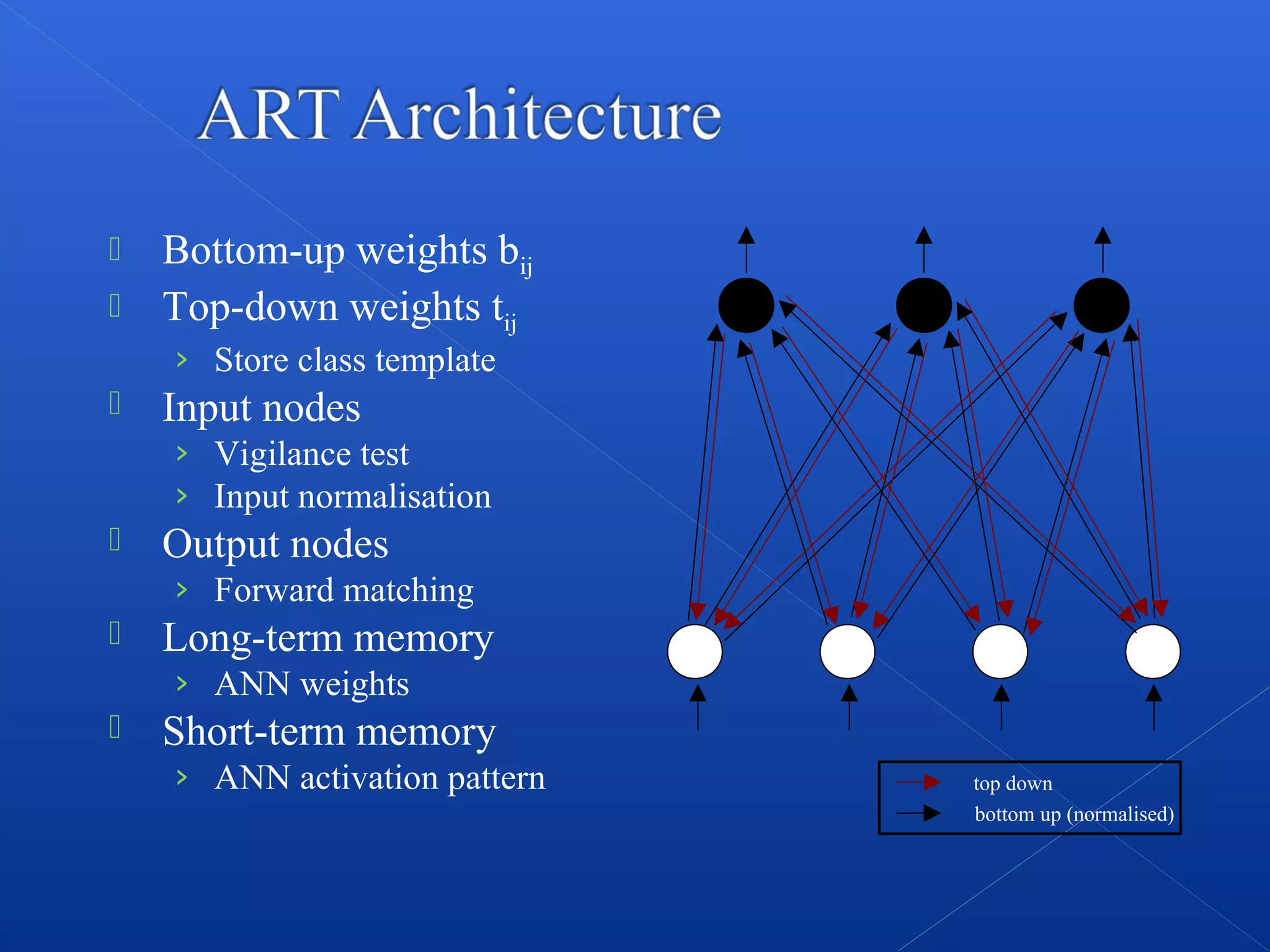

This document gives an overview of Adaptive Resonance Theory (ART), a family of unsupervised neural networks developed by Stephen Grossberg and Gail Carpenter to address the stability-plasticity dilemma: how a learning system can stay plastic enough to learn new patterns while remaining stable enough not to overwrite what it has already learned. It explains the architecture and operation of ART, including how the comparison field, the recognition field, and the vigilance parameter work together to categorize input patterns. It also describes the ART1, ART2, and ART3 variants and their applications in fields such as robotics and medical diagnosis.
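To make the role of the vigilance parameter concrete, here is a minimal sketch of ART1-style clustering on binary input vectors. It is a simplified fast-learning variant written for illustration, not the full network dynamics: the function name `art1_cluster`, the `beta` tie-breaking constant, and the sample patterns are all assumptions introduced here. The recognition step ranks stored categories by a choice score, the comparison step checks the best candidate against the vigilance threshold, and a failed check resets that candidate so the next one is tried.

```python
def art1_cluster(patterns, vigilance=0.7, beta=1.0):
    """Cluster binary patterns with a simplified ART1-style procedure.

    Hypothetical helper for illustration. `patterns` is a list of
    equal-length lists of 0/1 values. Returns (labels, prototypes).
    """
    prototypes = []  # one binary prototype vector per learned category
    labels = []      # category index assigned to each input pattern

    for pattern in patterns:
        active = sum(pattern)  # number of 1-bits in the input

        # Overlap between the input and each stored prototype (bitwise AND).
        overlaps = [
            sum(p & w for p, w in zip(pattern, proto)) for proto in prototypes
        ]

        # Recognition: rank categories by choice score, best first.
        order = sorted(
            range(len(prototypes)),
            key=lambda j: overlaps[j] / (beta + sum(prototypes[j])),
            reverse=True,
        )

        # Comparison: accept the first candidate whose match ratio meets
        # the vigilance threshold; otherwise reset it and try the next.
        chosen = None
        for j in order:
            if active > 0 and overlaps[j] / active >= vigilance:
                chosen = j
                break

        if chosen is None:
            # No category resonates: commit a new one equal to the input.
            prototypes.append(list(pattern))
            chosen = len(prototypes) - 1
        else:
            # Resonance: shrink the prototype to its overlap with the input.
            prototypes[chosen] = [
                p & w for p, w in zip(pattern, prototypes[chosen])
            ]

        labels.append(chosen)

    return labels, prototypes


if __name__ == "__main__":
    samples = [[1, 1, 0, 0], [1, 1, 1, 0], [0, 0, 1, 1], [0, 0, 0, 1]]
    print(art1_cluster(samples, vigilance=0.6))
    print(art1_cluster(samples, vigilance=0.9))
```

In this sketch, raising `vigilance` makes the match test stricter, so the same inputs are split into more, narrower categories; lowering it produces fewer, broader ones. Existing prototypes are only ever narrowed by inputs that already match them, which is one simple way to picture stability alongside the plasticity of adding new categories.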