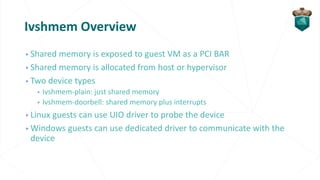

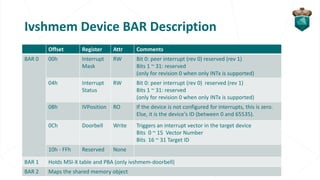

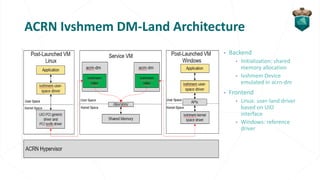

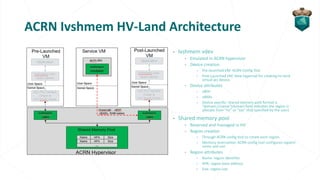

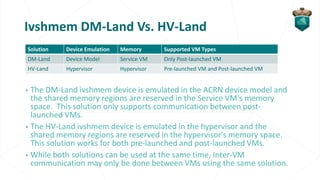

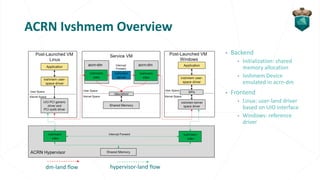

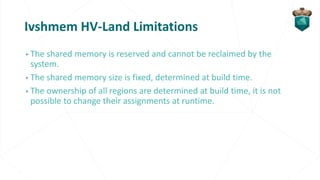

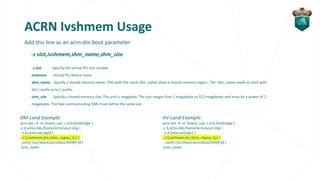

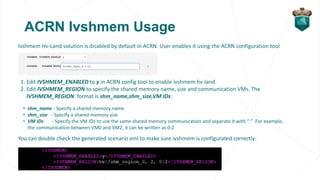

This document describes shared-memory-based inter-VM communication in ACRN using the Inter-VM Shared Memory (Ivshmem) device. It covers four topics:

- An overview of Ivshmem.
- The two ACRN architectures for emulating the Ivshmem device: DM-Land, where the ACRN Device Model emulates the device, and HV-Land, where the hypervisor emulates it.
- The limitations of the HV-Land approach.
- Examples of configuring and using Ivshmem for inter-VM shared memory communication.