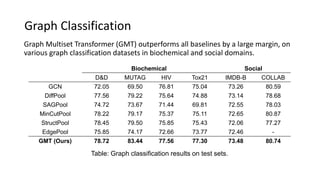

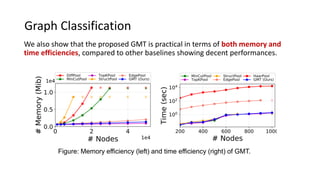

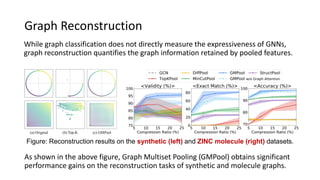

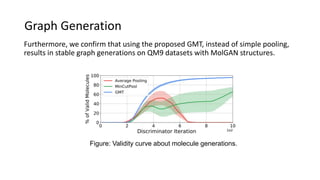

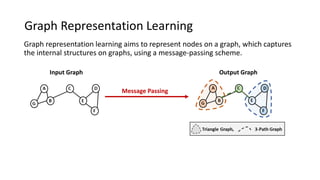

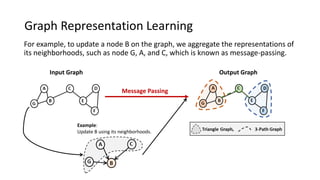

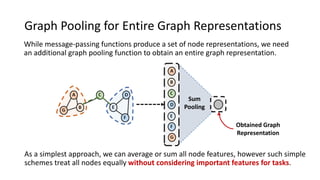

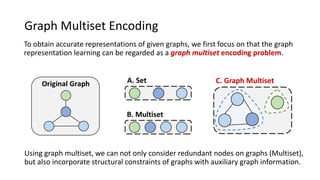

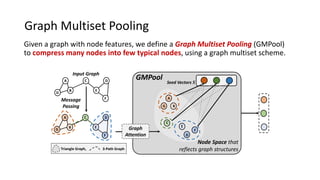

This paper presents a graph representation learning approach based on graph multiset pooling, which encodes a graph while accounting for its structural constraints and the interactions among its nodes. The proposed Graph Multiset Transformer (GMT) substantially outperforms existing methods on graph classification, reconstruction, and generation tasks, while remaining efficient in memory and time. The analysis also suggests that GMT can reach the expressive power of the Weisfeiler-Lehman test.

![Graph Multiset Transformer](https://image.slidesharecdn.com/iclr2021graphmultisettransformer-210514140038/85/Accurate-Learning-of-Graph-Representations-with-Graph-Multiset-Pooling-7-320.jpg)

To further consider the interactions among the 𝑛 original nodes or the 𝑘 condensed nodes, we propose a Self-Attention function (SelfAtt), inspired by the Transformer [1].

[1] Vaswani et al. Attention Is All You Need. NIPS 2017.

Notably, the full structure of our model, the Graph Multiset Transformer (GMT), consists of GMPool for compressing nodes and SelfAtt for considering interactions among them.
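The composition described above, compressing nodes with GMPool and then mixing them with SelfAtt, can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes plain single-head scaled dot-product attention with no learned projections, no graph neural network producing keys and values, and no residual or normalization layers. The final pooling step down to a single vector is also an assumption of this sketch, and the names `gmpool`, `self_att`, and `gmt_sketch` are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x m) list-of-lists by an (m x p) list-of-lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    """Swap rows and columns of a list-of-lists matrix."""
    return [list(col) for col in zip(*a)]


def softmax_rows(scores):
    """Apply a numerically stable softmax to each row."""
    out = []
    for row in scores:
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(queries[0])
    raw = matmul(queries, transpose(keys))
    scaled = [[s / math.sqrt(d) for s in row] for row in raw]
    return matmul(softmax_rows(scaled), values)


def gmpool(seeds, nodes):
    """Compress the node vectors into len(seeds) vectors.

    Assumption: the seed vectors act as queries and the raw node
    features act as both keys and values.
    """
    return attention(seeds, nodes, nodes)


def self_att(nodes):
    """Let every vector attend to every other vector (count unchanged)."""
    return attention(nodes, nodes, nodes)


def gmt_sketch(nodes, seeds_k, seed_1):
    """Pool n nodes to k, mix the k vectors, then pool to one vector."""
    condensed = gmpool(seeds_k, nodes)   # n nodes -> k condensed nodes
    mixed = self_att(condensed)          # interactions among the k nodes
    return gmpool(seed_1, mixed)[0]      # assumed final step: k -> 1


# Usage: 6 nodes with 4 features each, condensed to k = 3 nodes.
random.seed(0)
nodes = [[random.gauss(0, 1) for _ in range(4)] for _ in range(6)]
seeds_k = [[random.gauss(0, 1) for _ in range(4)] for _ in range(3)]
seed_1 = [[random.gauss(0, 1) for _ in range(4)]]
graph_vector = gmt_sketch(nodes, seeds_k, seed_1)
```

Because the seed vectors, not the nodes, supply the queries, the result does not depend on the order in which the nodes are listed, which is the property a pooling function over a multiset of nodes needs.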