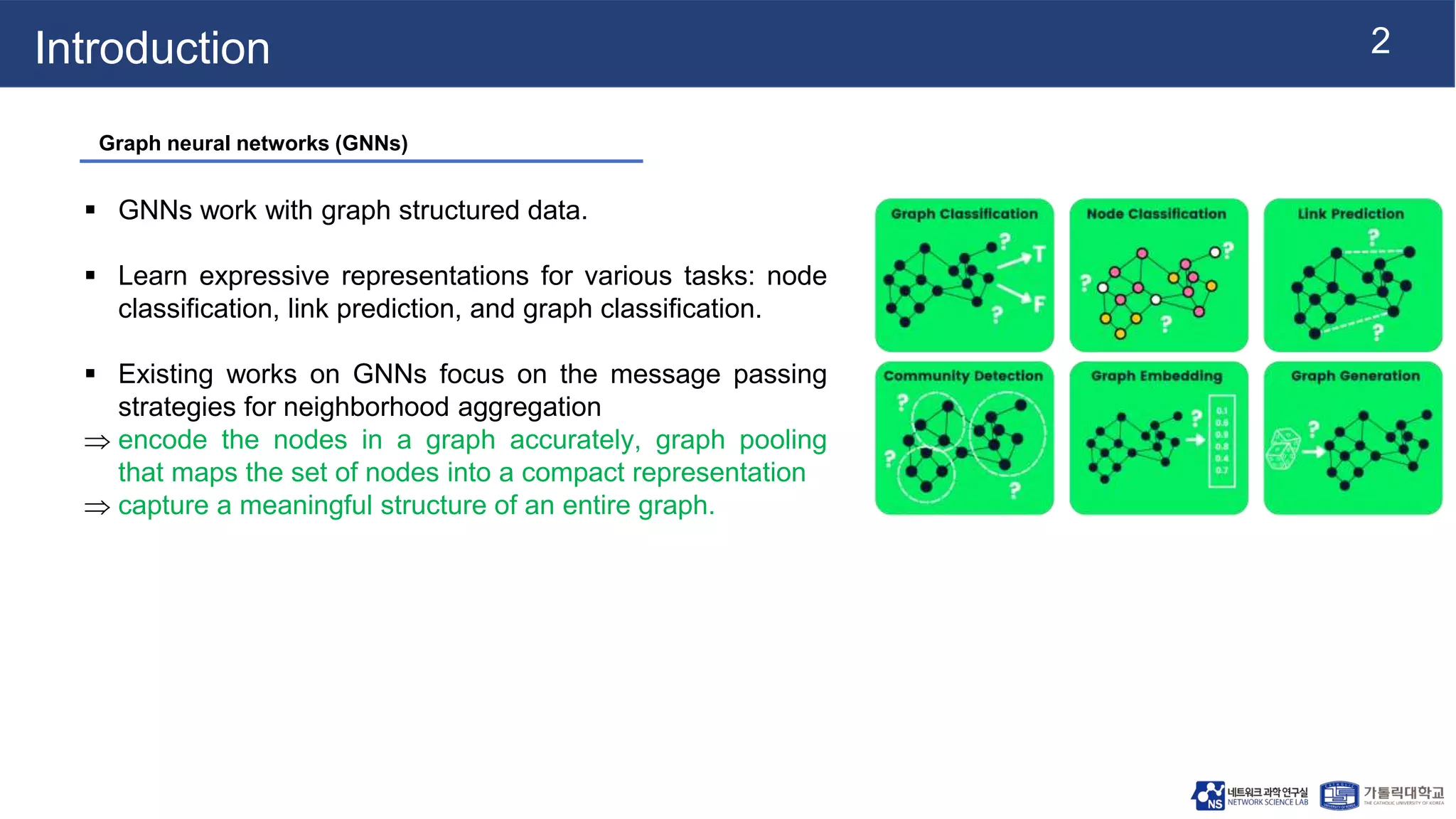

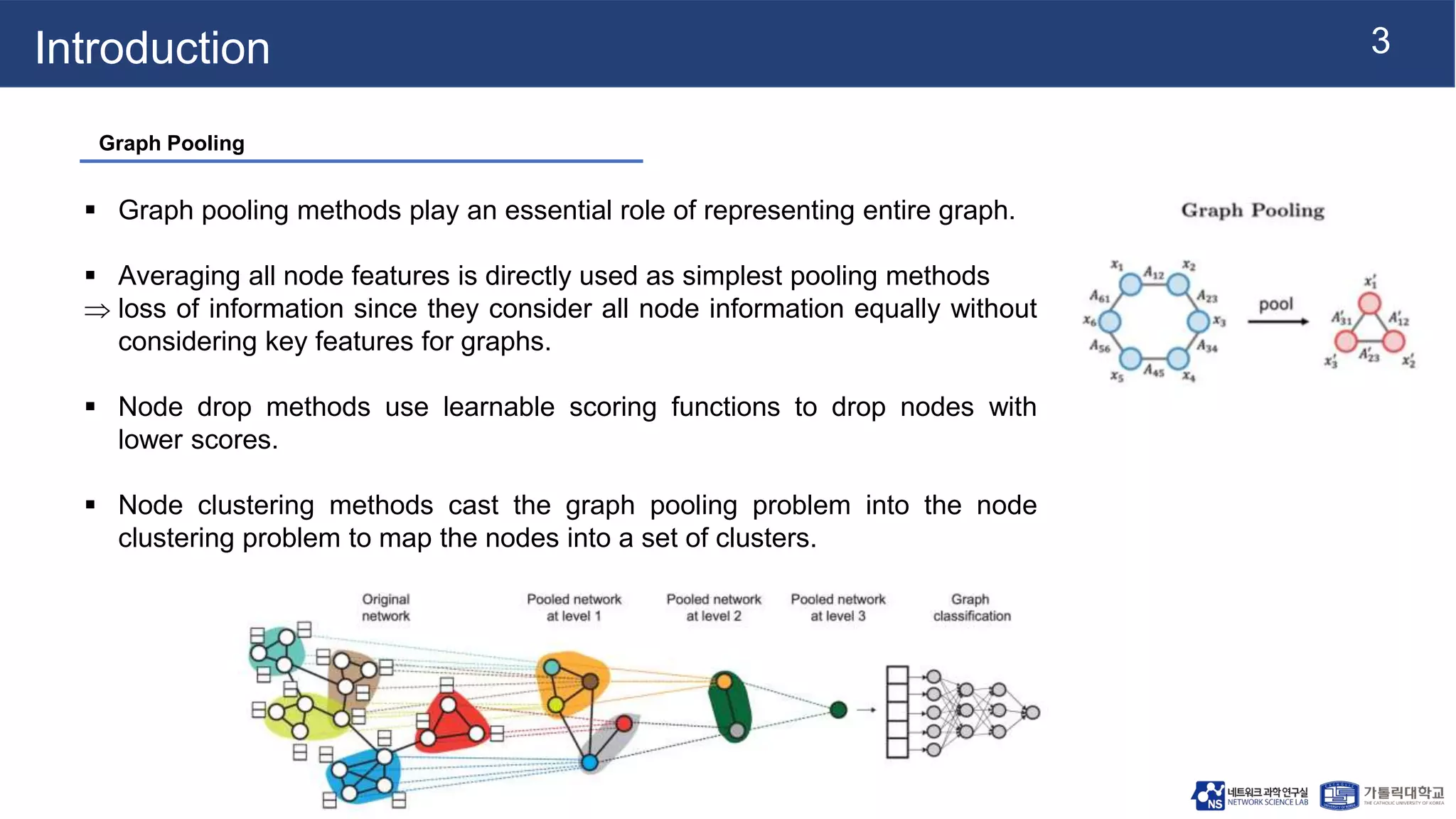

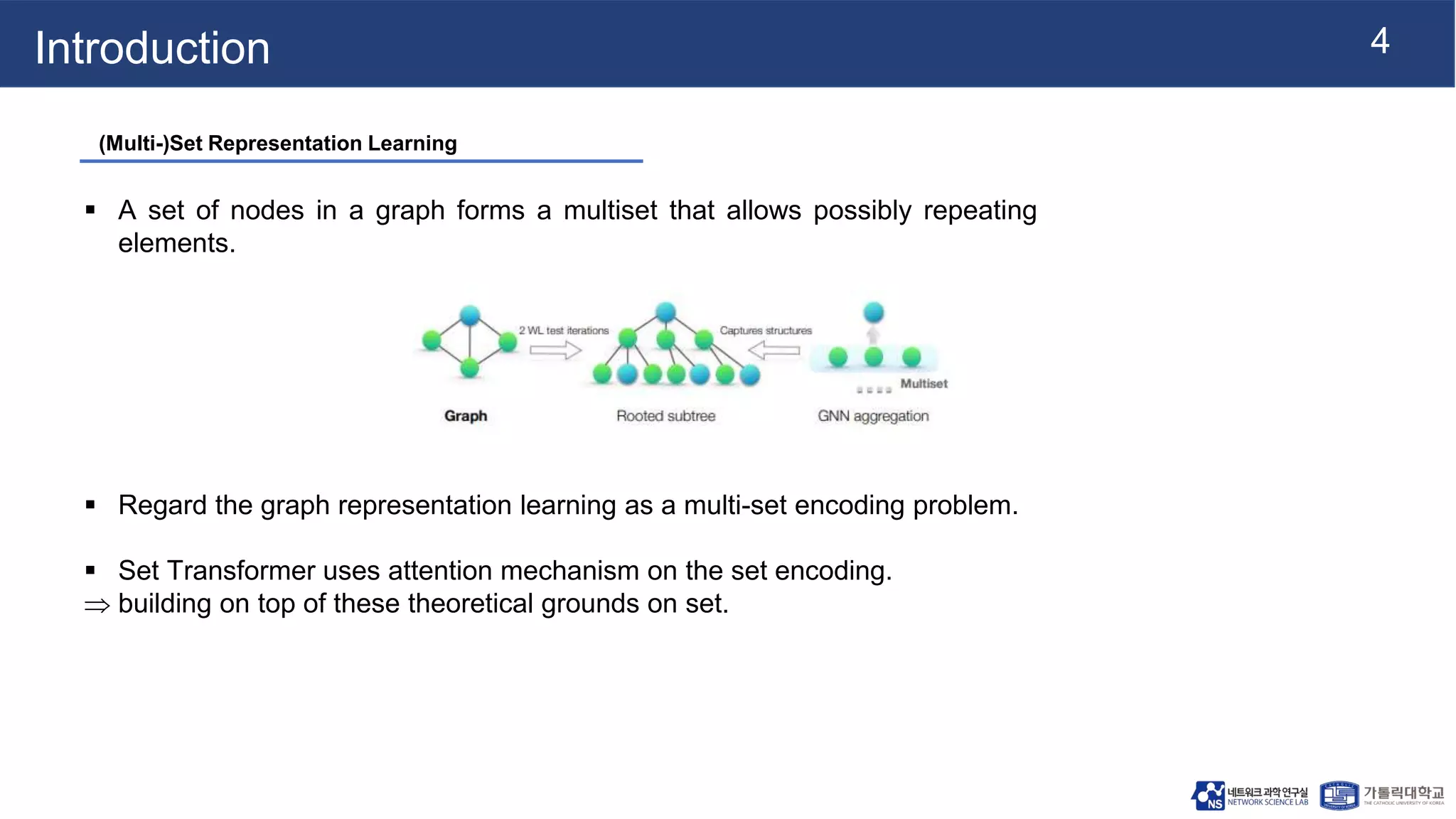

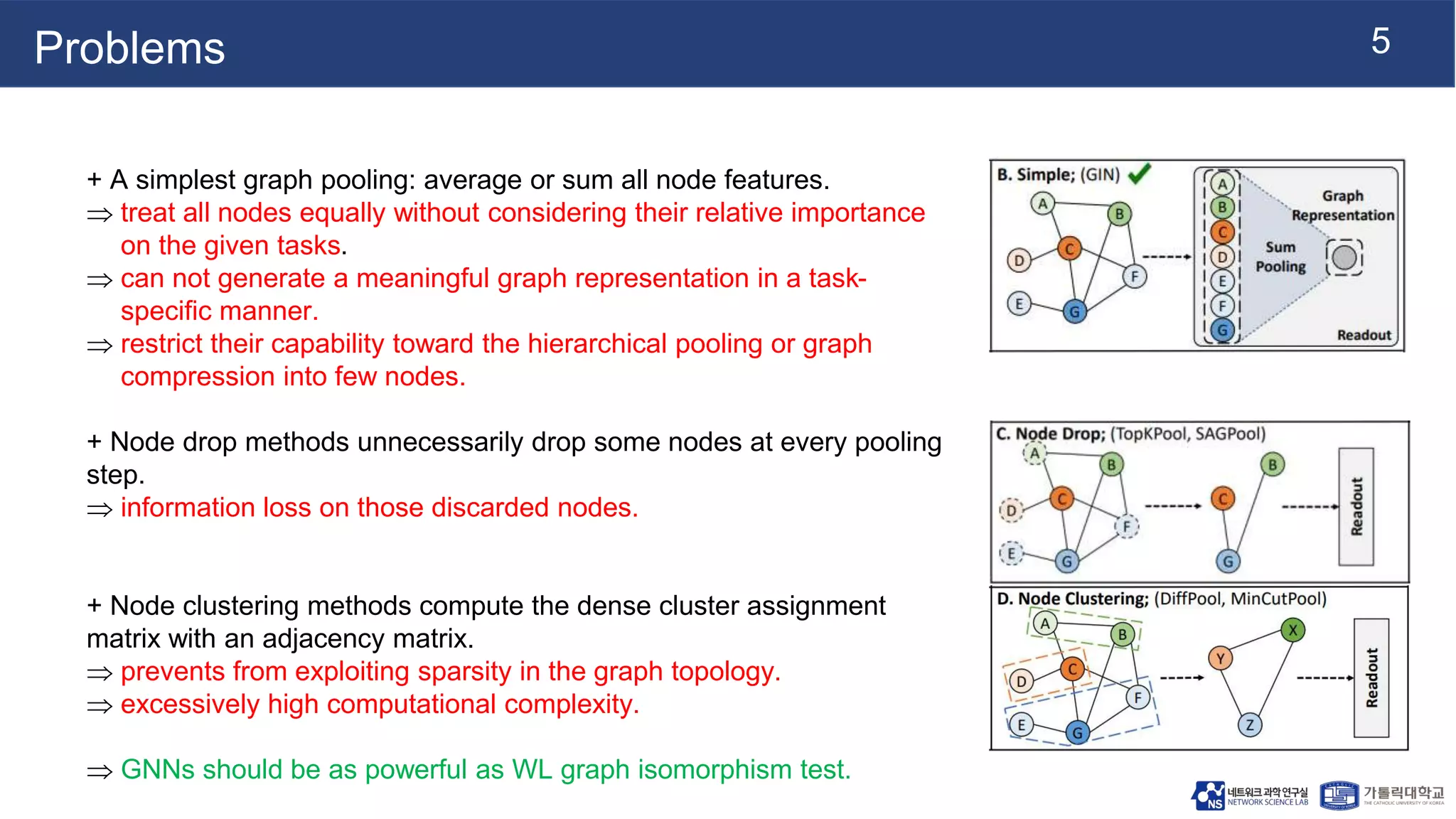

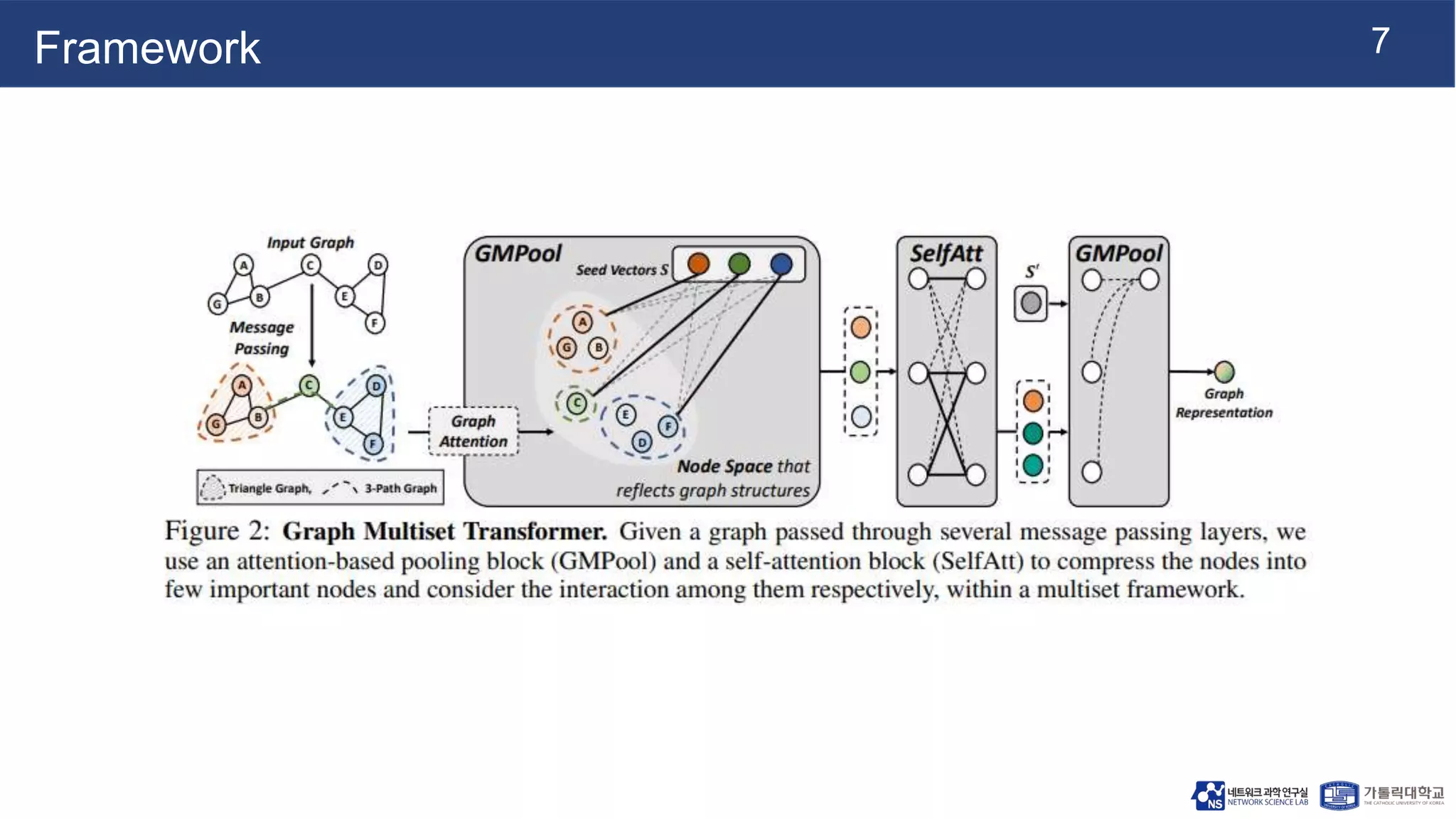

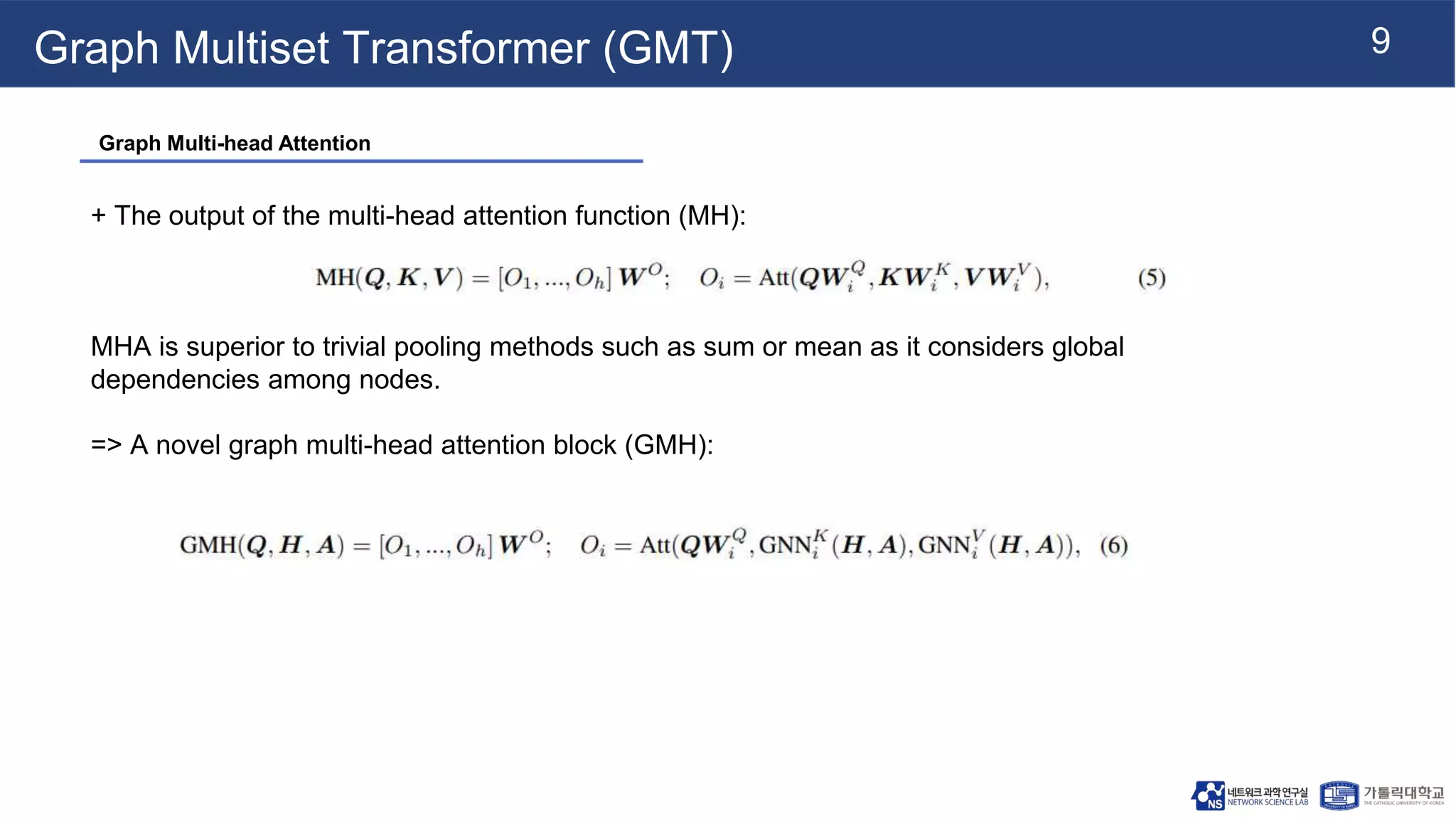

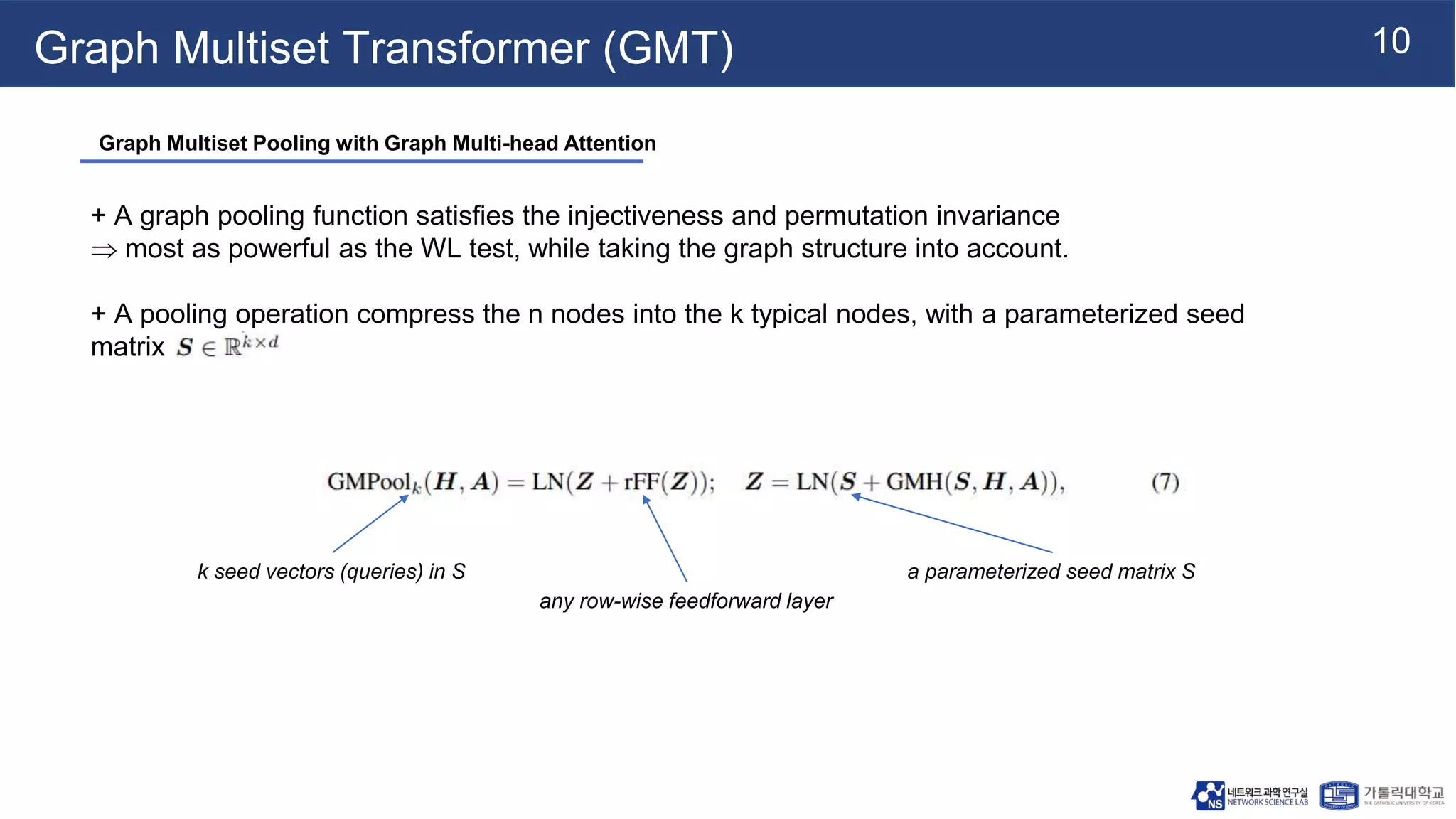

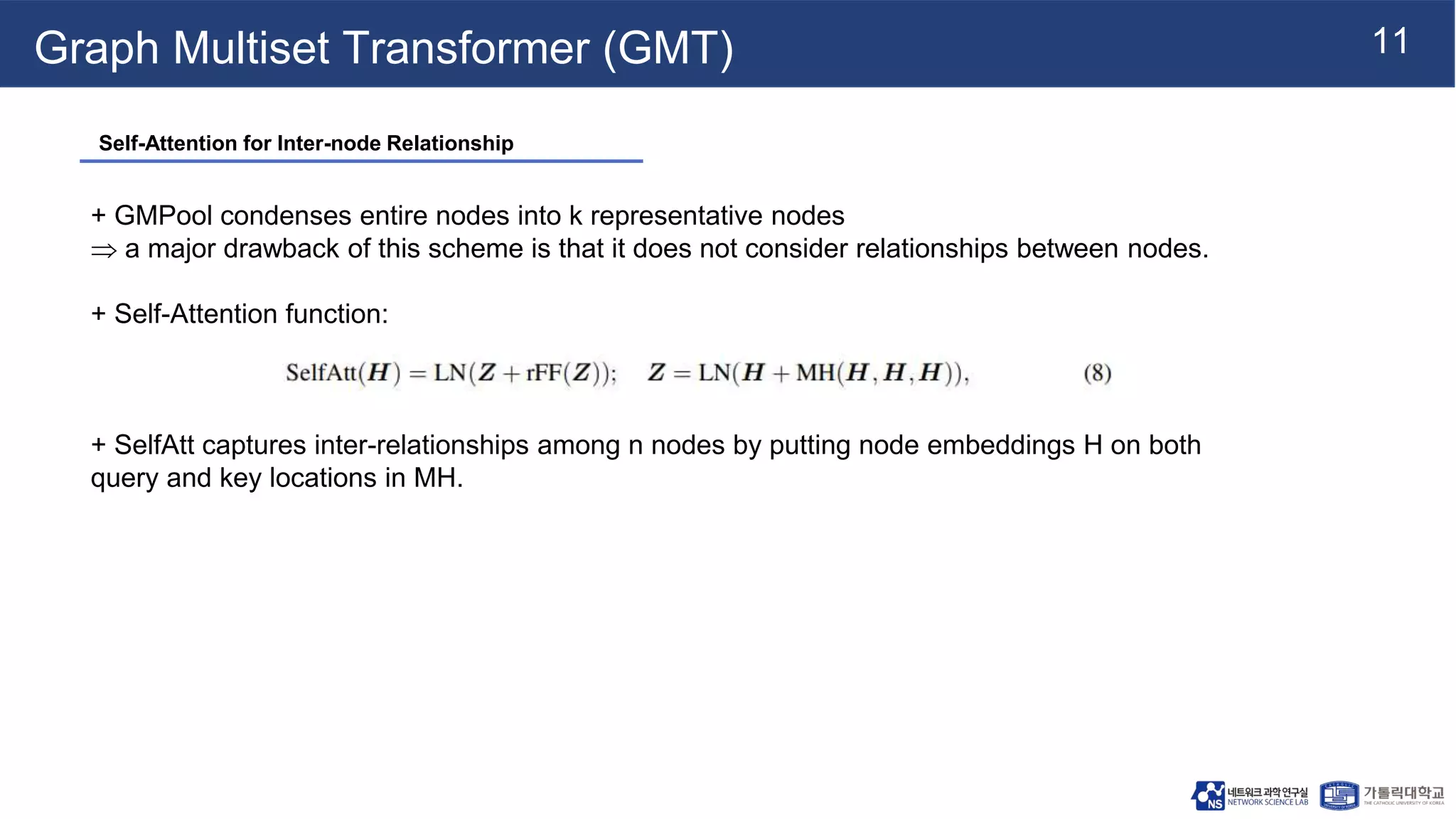

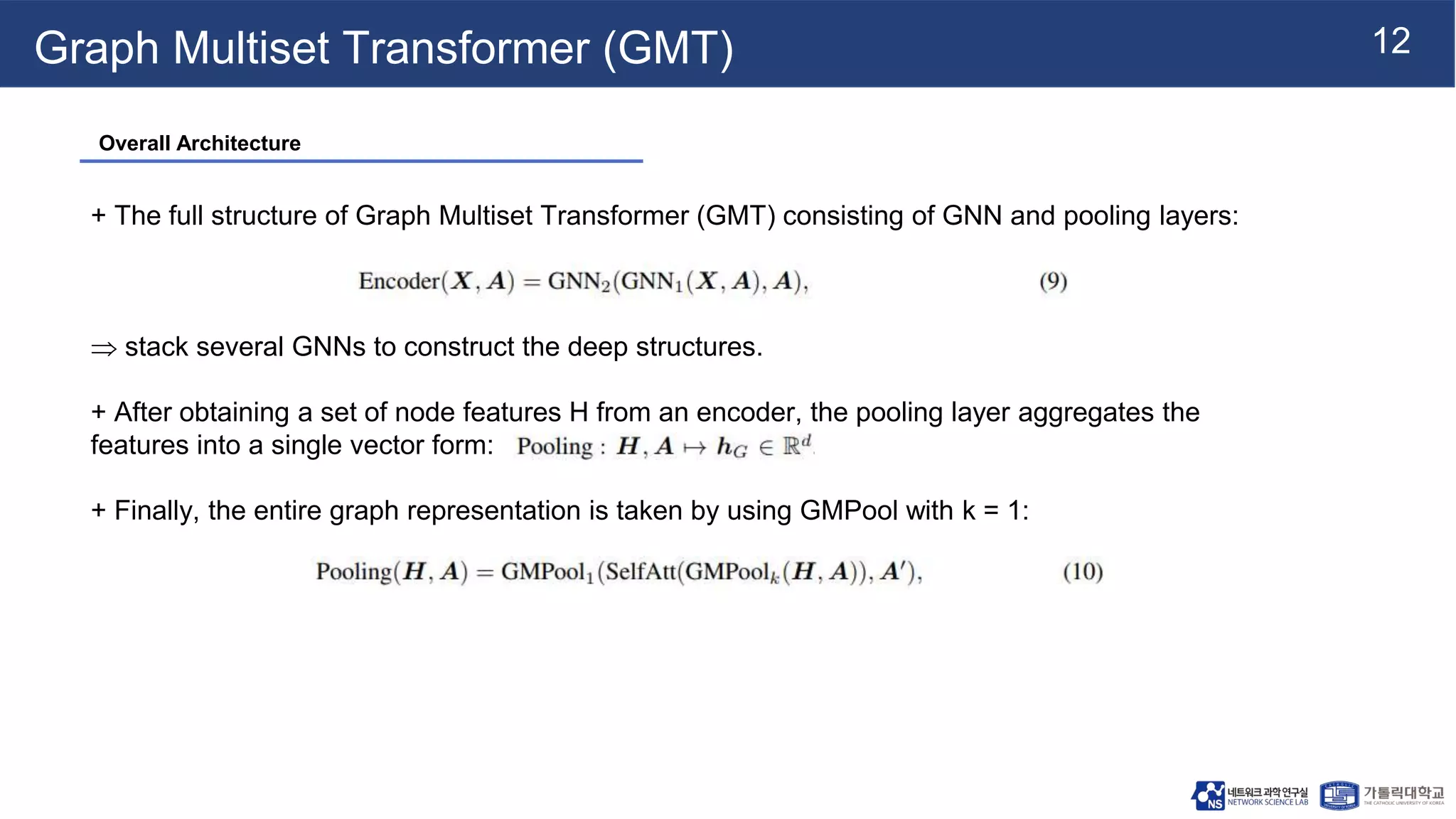

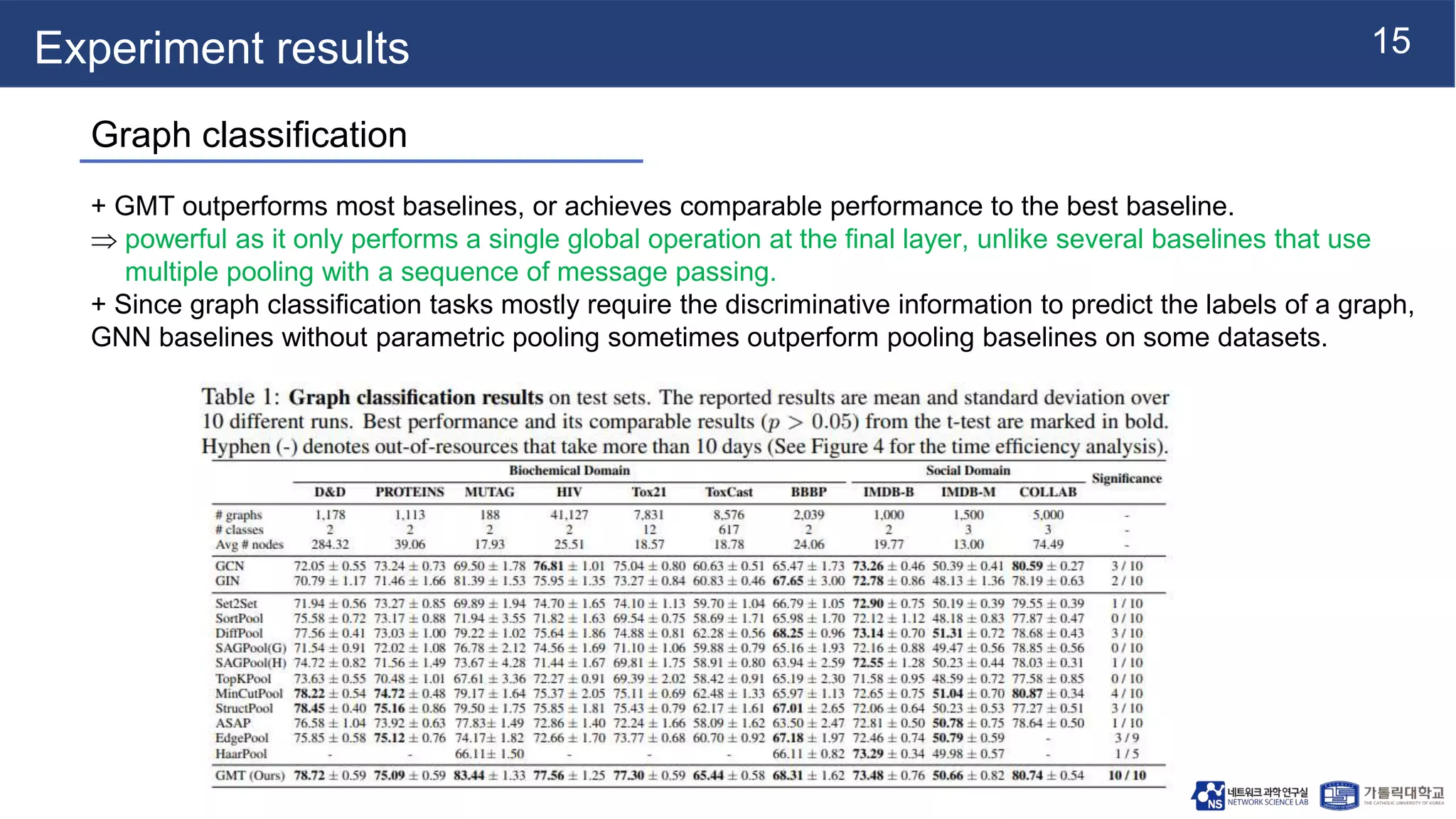

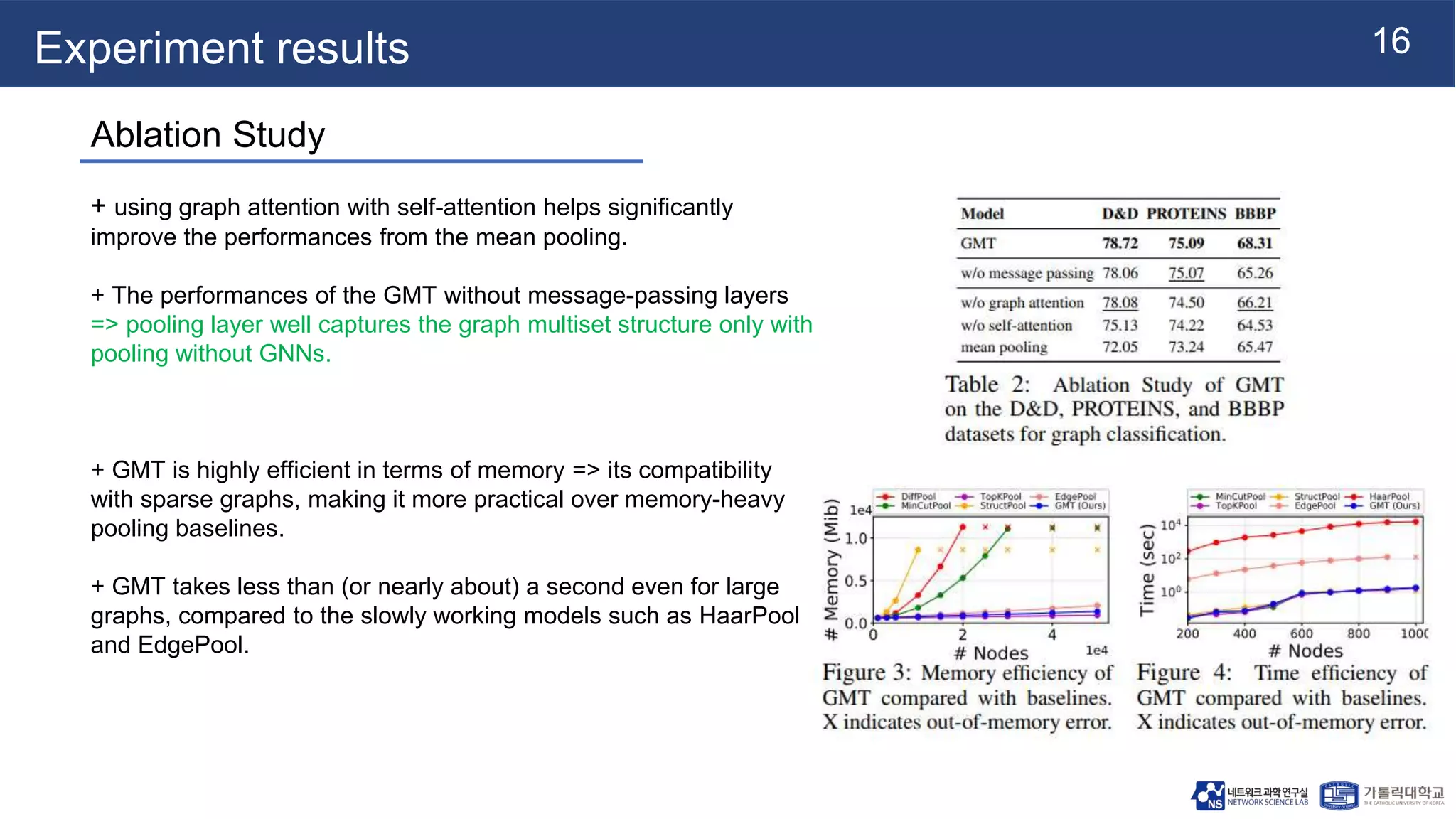

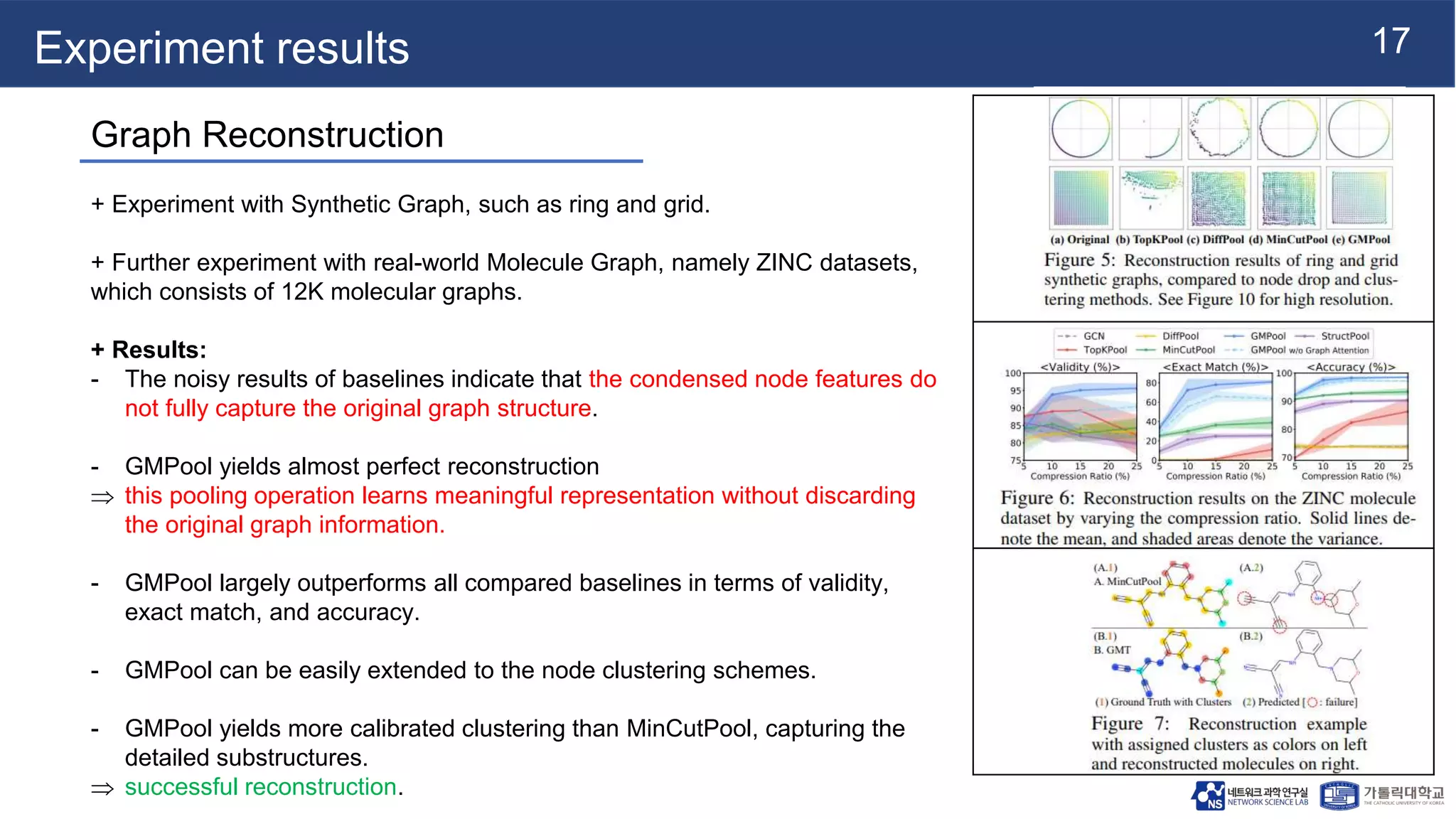

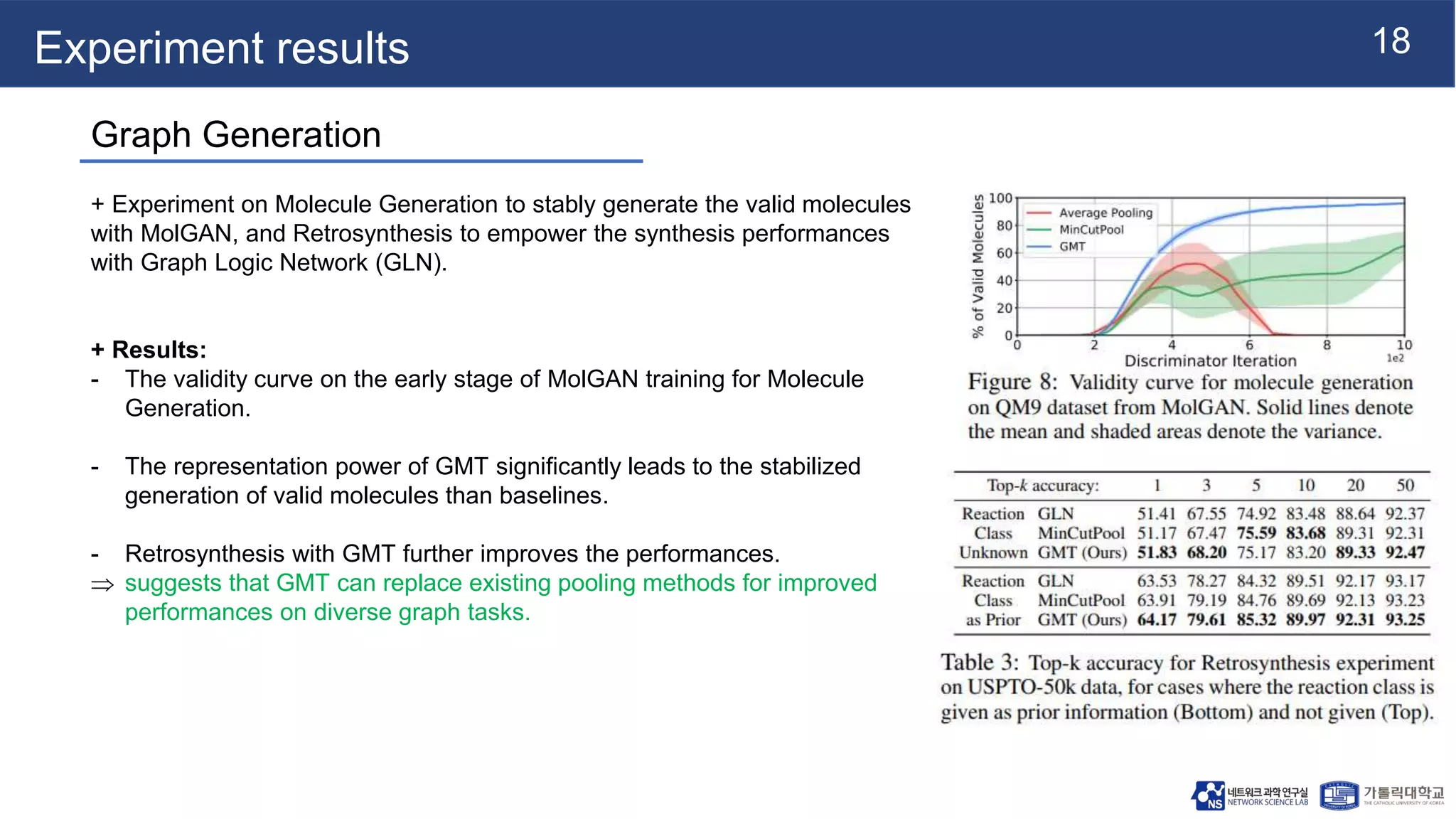

This document summarizes a research paper on Graph Multiset Pooling, a graph pooling method built on the Graph Multiset Transformer (GMT). The GMT frames graph pooling as a multiset encoding problem and uses multi-head attention to capture relationships among nodes. It satisfies both injectiveness and permutation invariance, the two properties a pooling function needs to be as powerful as the Weisfeiler-Lehman graph isomorphism test. In the paper's experiments, the GMT outperforms other pooling methods on graph classification, graph reconstruction, and graph generation. The authors present it as an expressive and efficient way to learn representations of entire graphs.
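
To make the permutation-invariance property concrete, here is a minimal sketch of attention-based pooling over a set of node vectors. It is a simplified illustration, not the paper's GMT: it uses a single attention head, plain dot-product scores with no graph structure, and fixed random query vectors. The names `attention_pool` and `seeds` are hypothetical and chosen only for this example. It demonstrates permutation invariance but does not demonstrate injectiveness.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(node_feats, seeds):
    """Pool n node vectors into len(seeds) vectors.

    Each seed acts as a query; node features serve as both keys and values
    (an assumption made for brevity, with no learned projections).
    """
    dim = len(node_feats[0])
    pooled = []
    for q in seeds:
        # Scaled dot-product score between the query and every node.
        scores = [
            sum(qi * xi for qi, xi in zip(q, x)) / math.sqrt(dim)
            for x in node_feats
        ]
        weights = softmax(scores)
        # Attention-weighted sum of node vectors: order of nodes is irrelevant.
        pooled.append(
            [sum(w * x[d] for w, x in zip(weights, node_feats)) for d in range(dim)]
        )
    return pooled


rng = random.Random(0)
nodes = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(5)]  # 5 nodes, 4 features
seeds = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(2)]  # pool down to 2 vectors

out = attention_pool(nodes, seeds)

# Reordering the nodes should leave the pooled output unchanged
# (up to floating-point rounding).
shuffled = nodes[:]
rng.shuffle(shuffled)
out_shuffled = attention_pool(shuffled, seeds)
```

Because the output is a weighted sum whose weights depend only on each node's own features and the queries, any reordering of the input nodes yields the same pooled vectors. The number of queries sets the size of the pooled representation, so using a single query would compress the whole node set into one vector.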