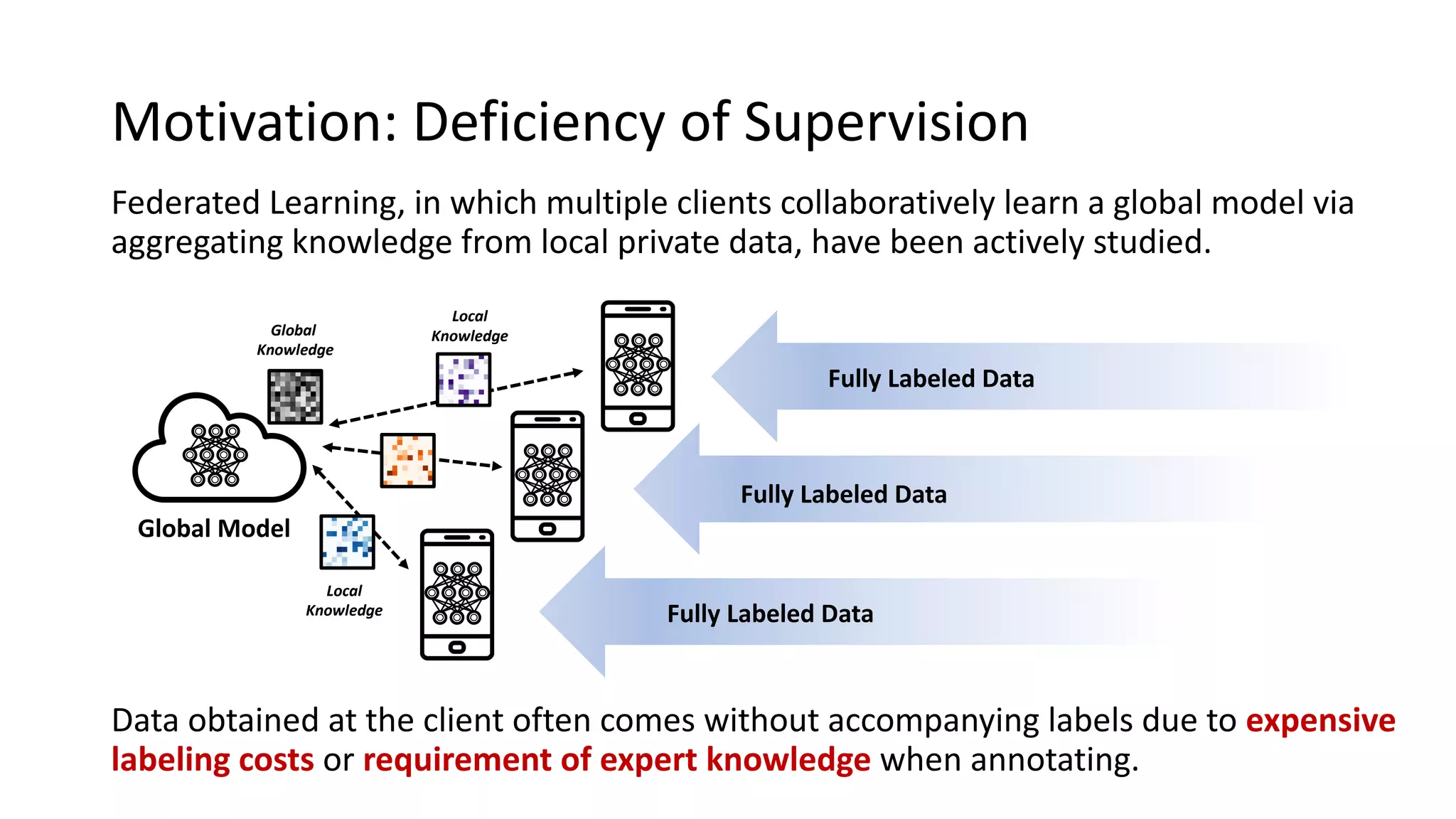

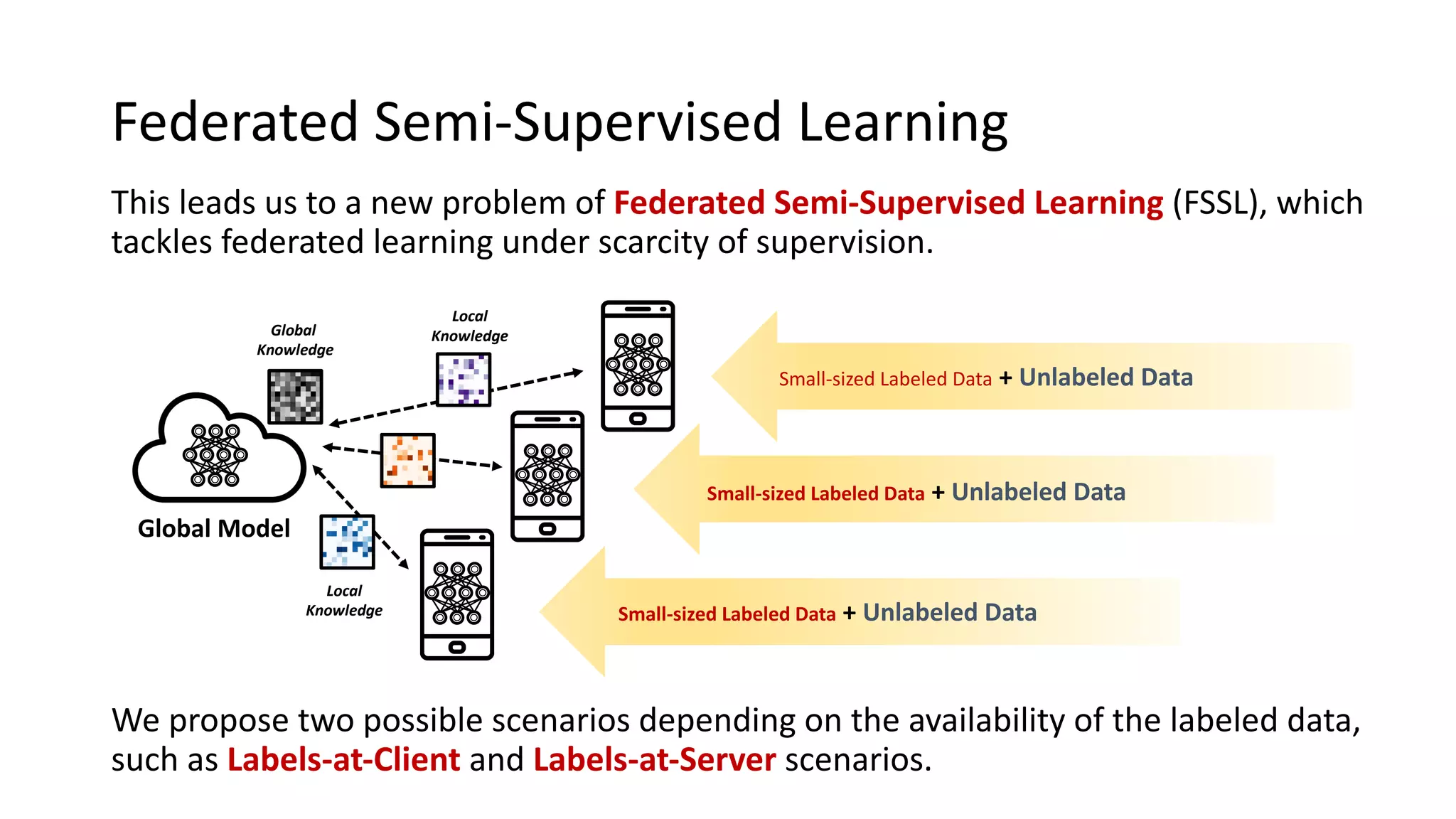

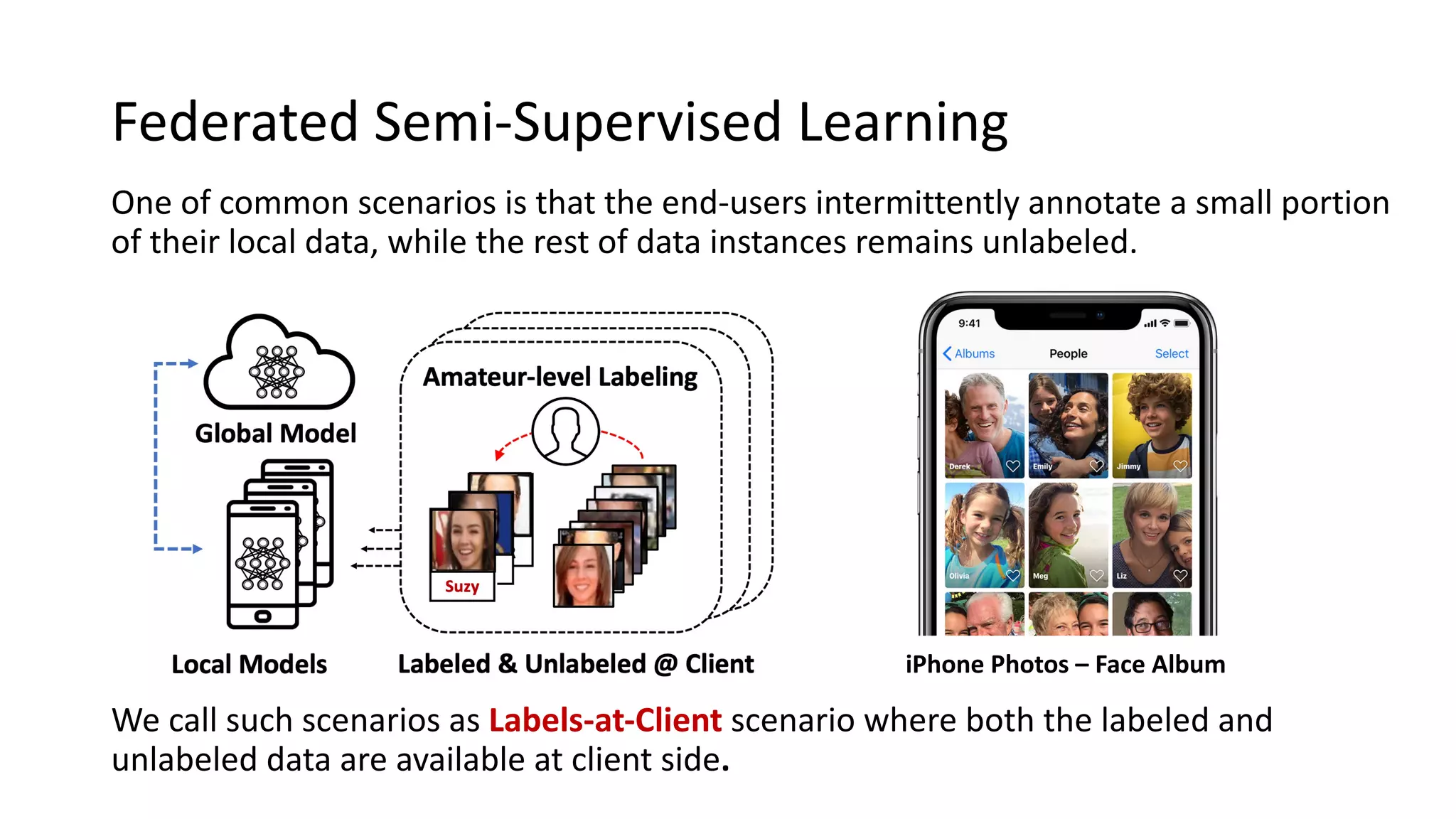

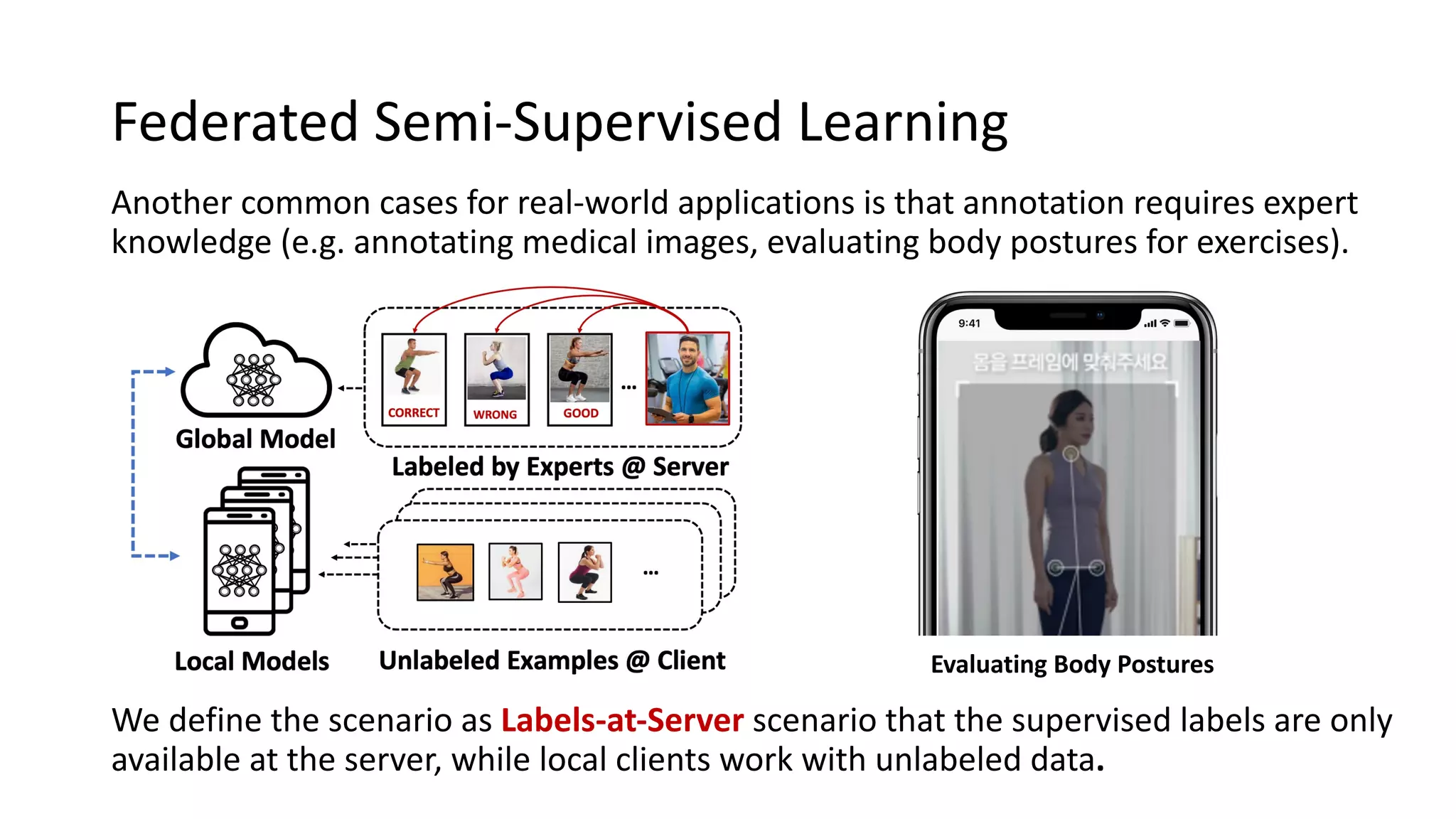

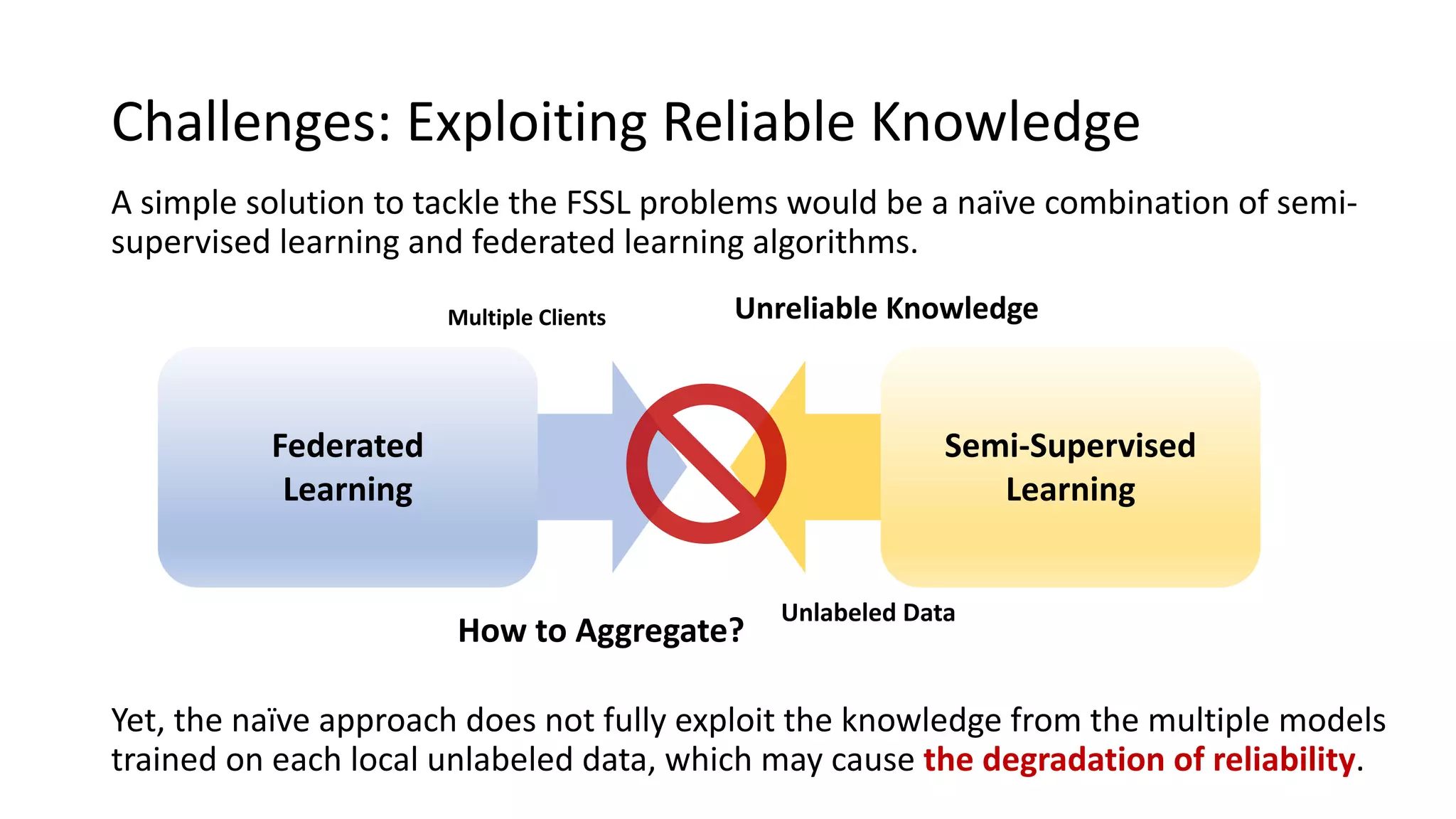

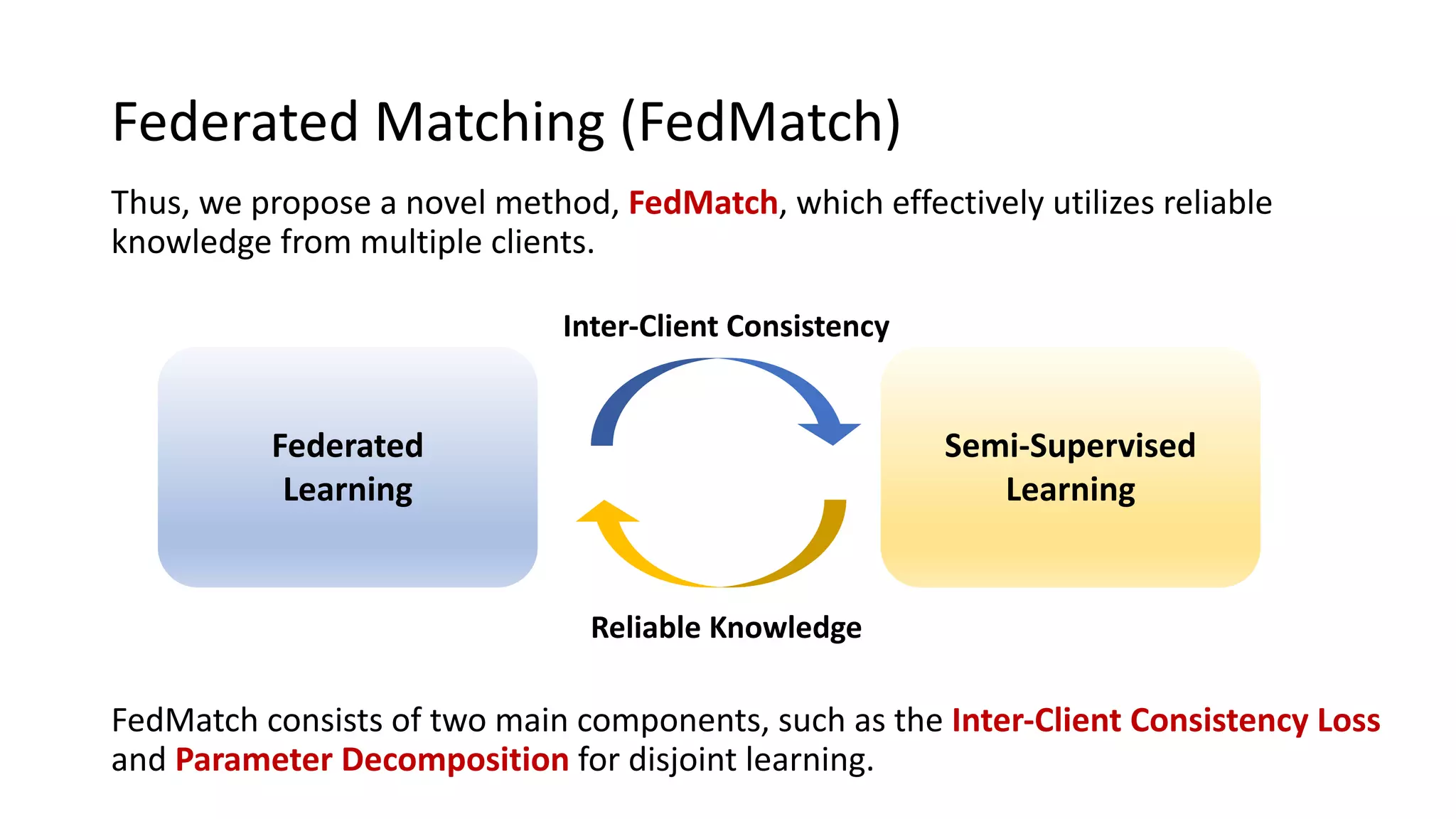

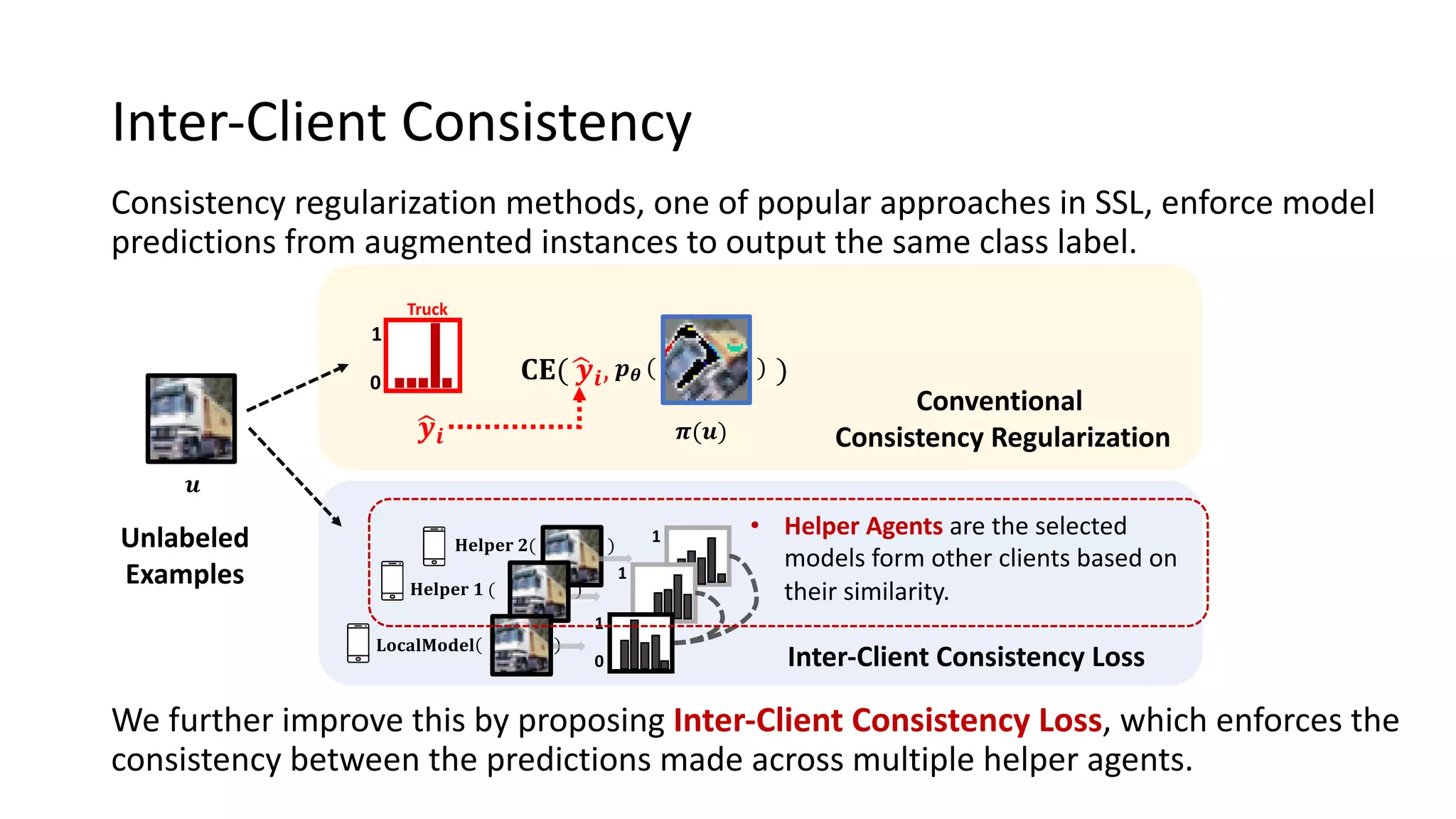

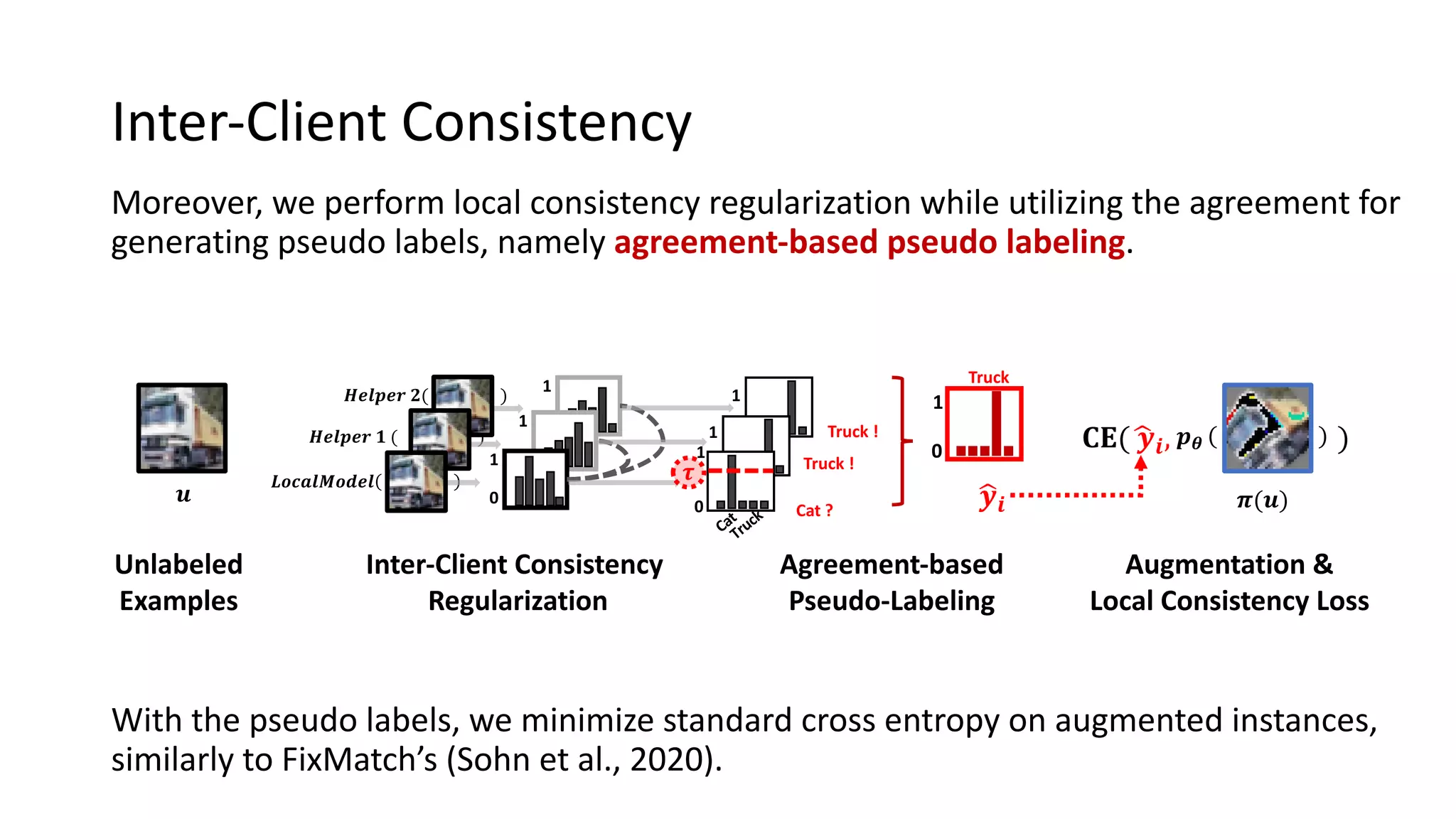

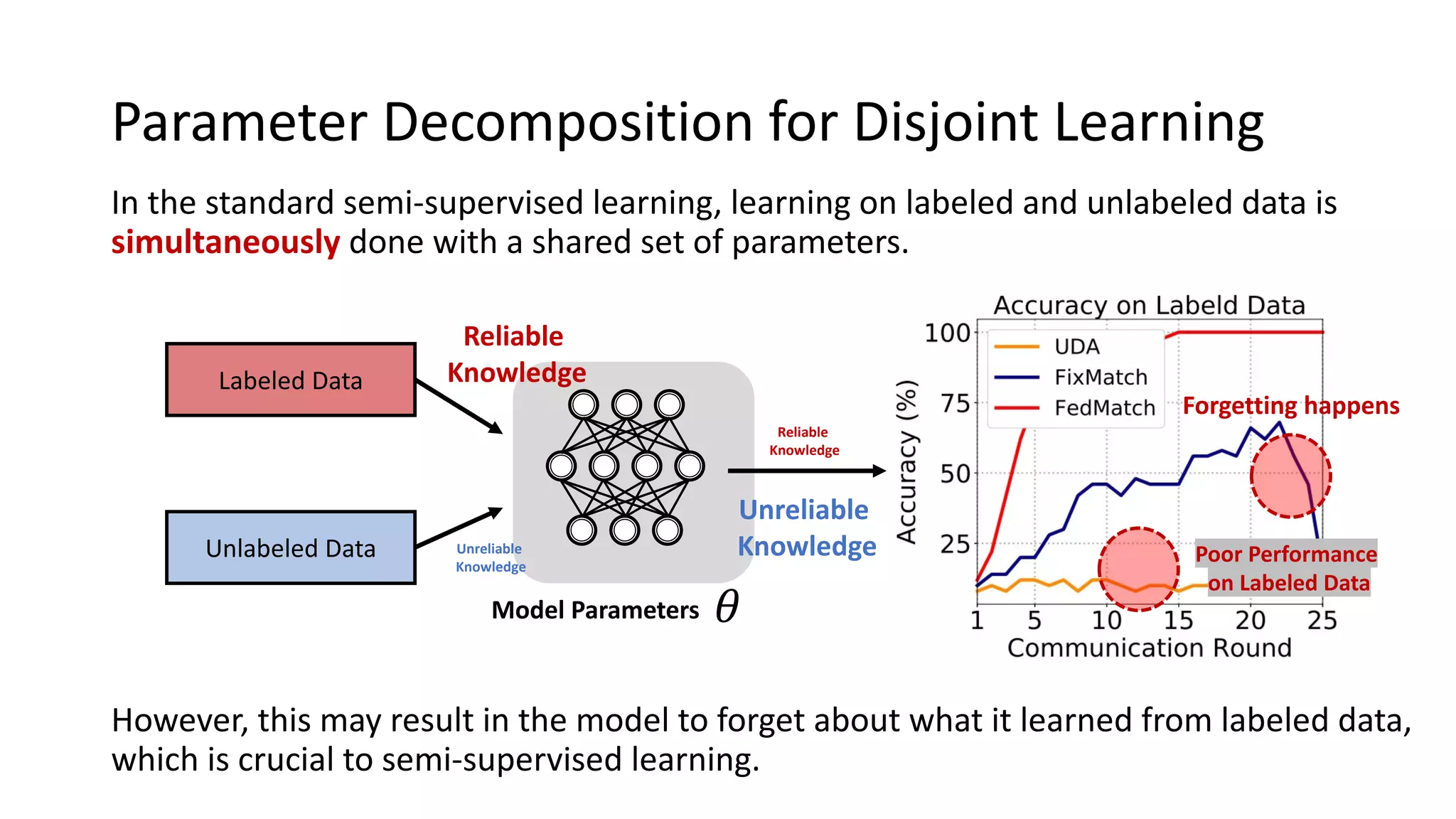

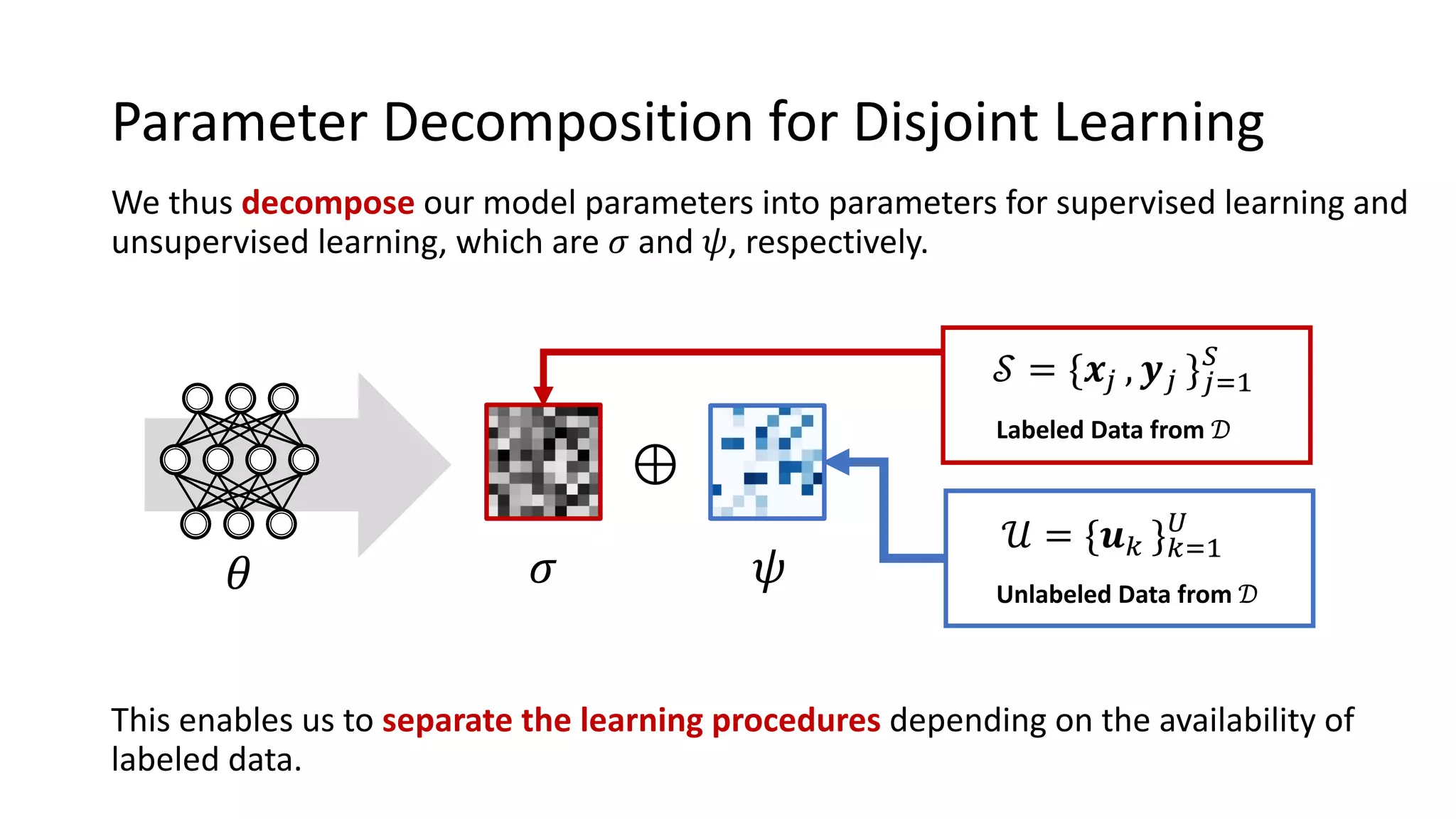

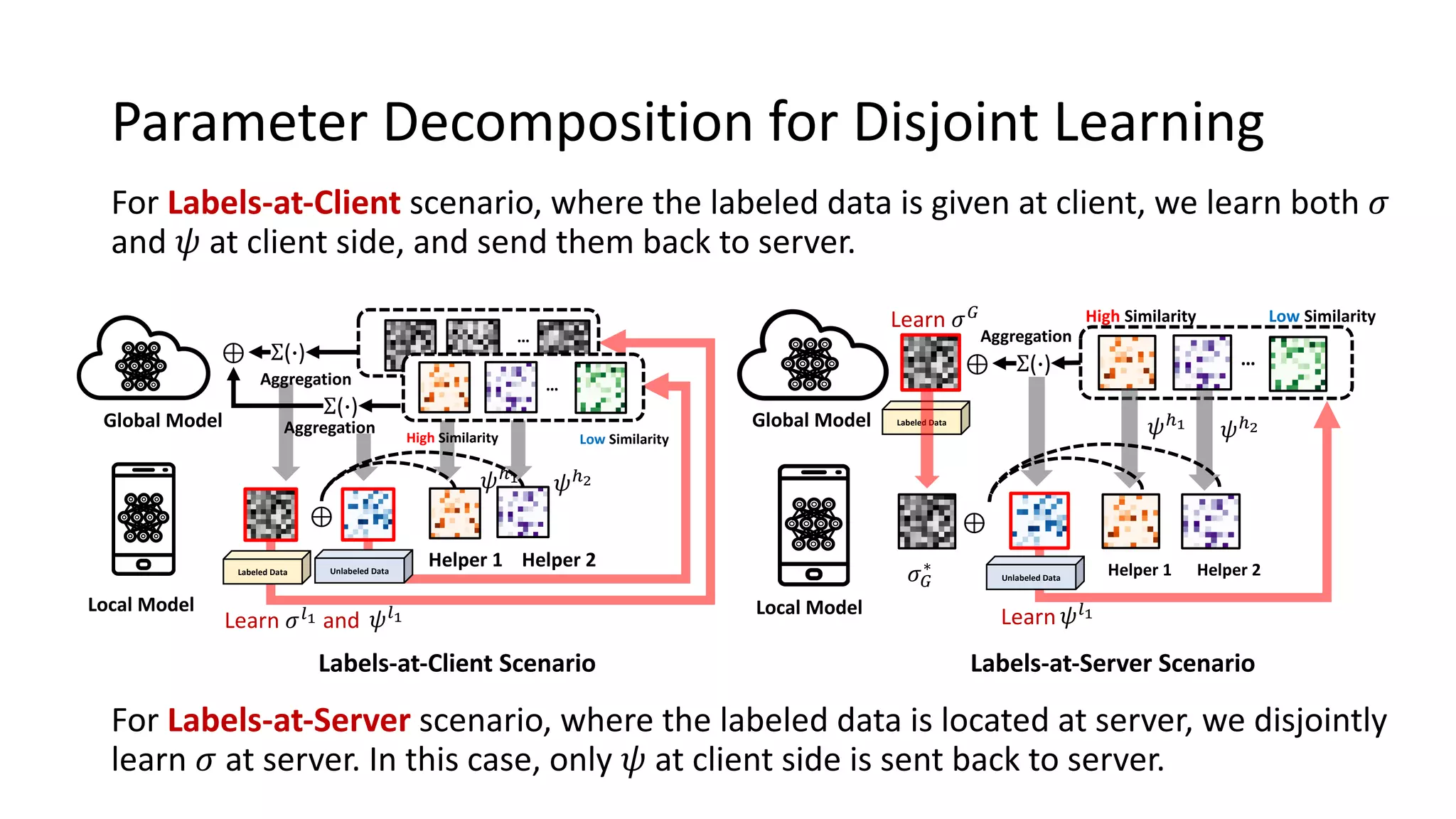

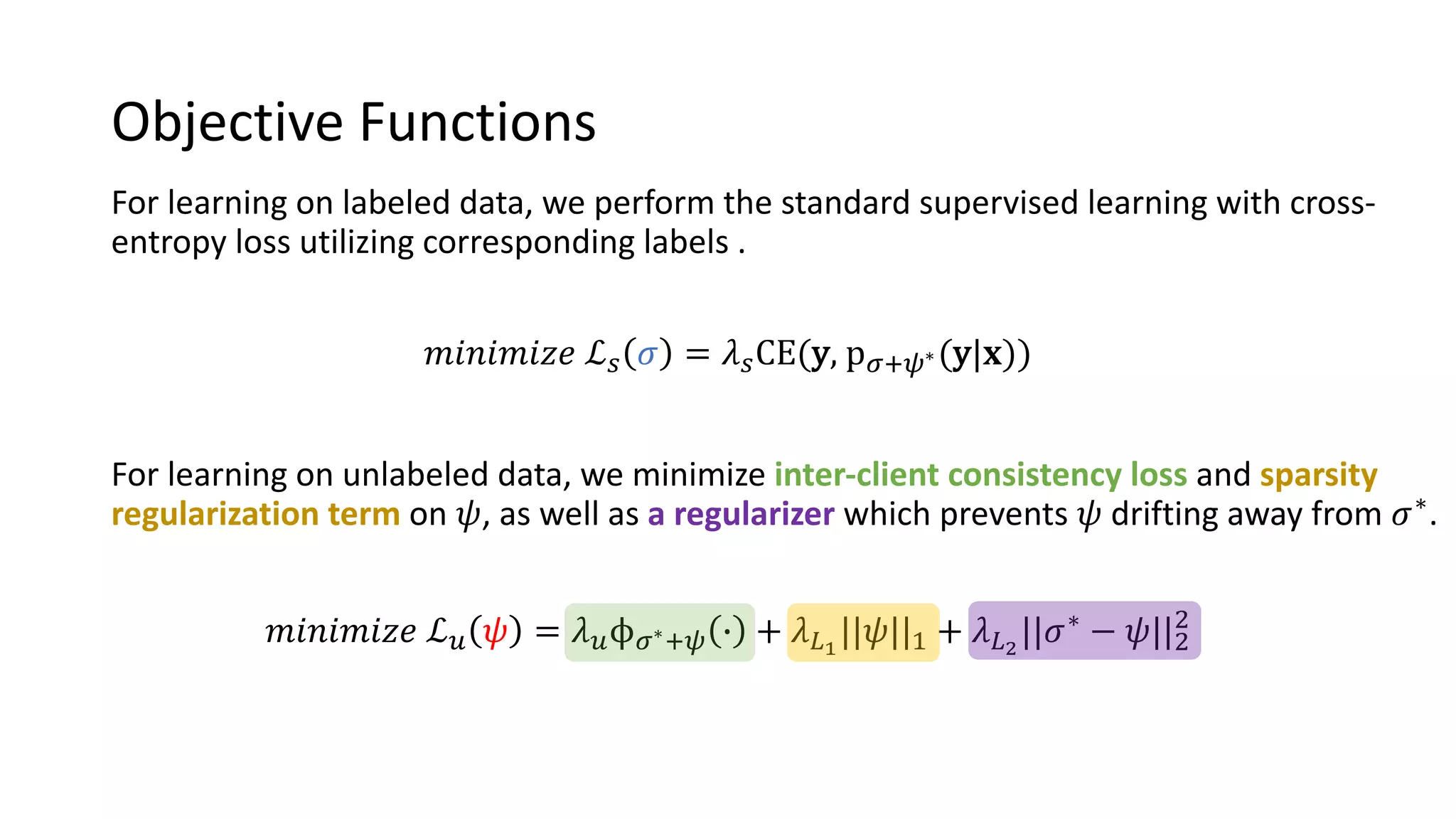

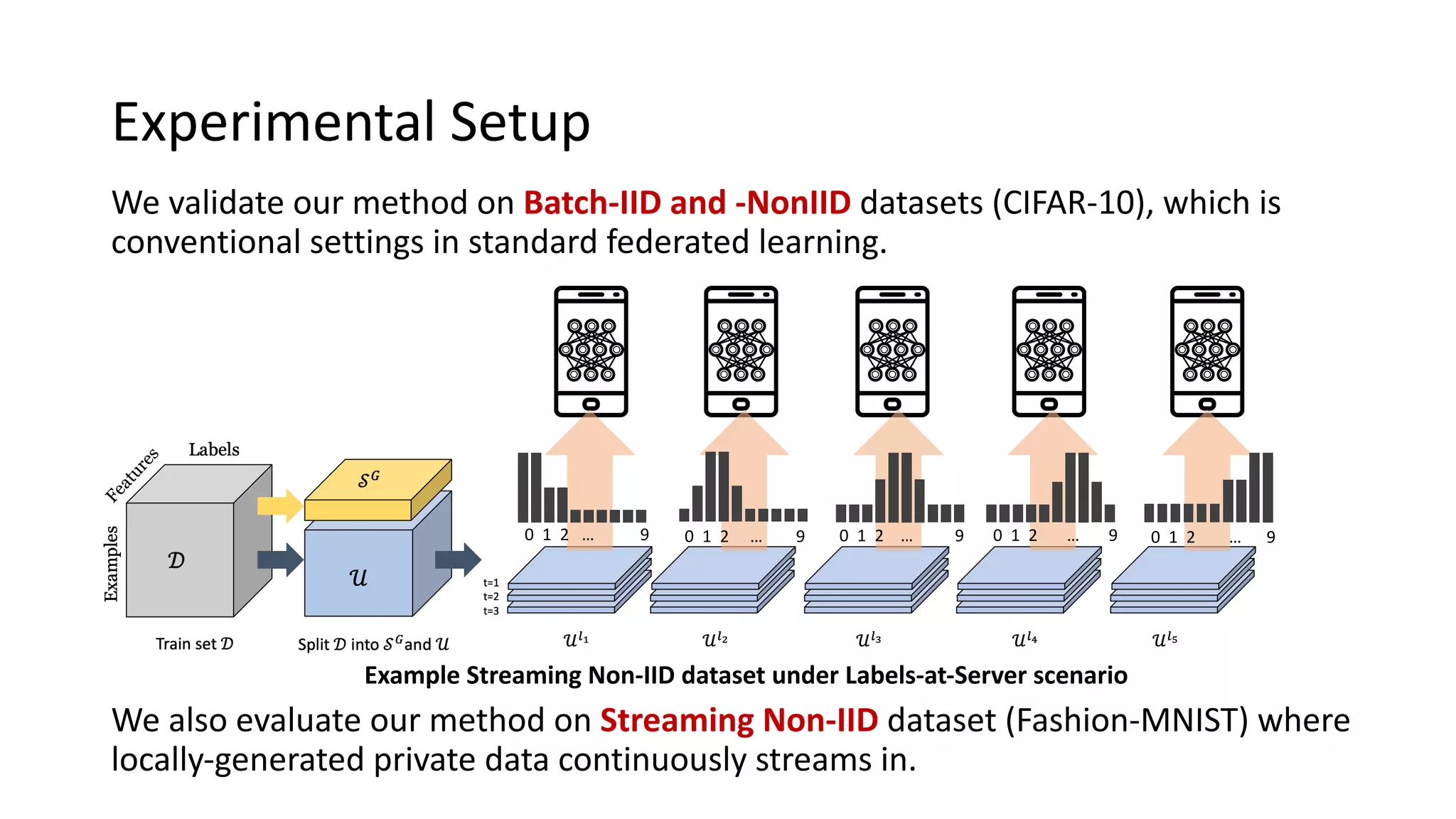

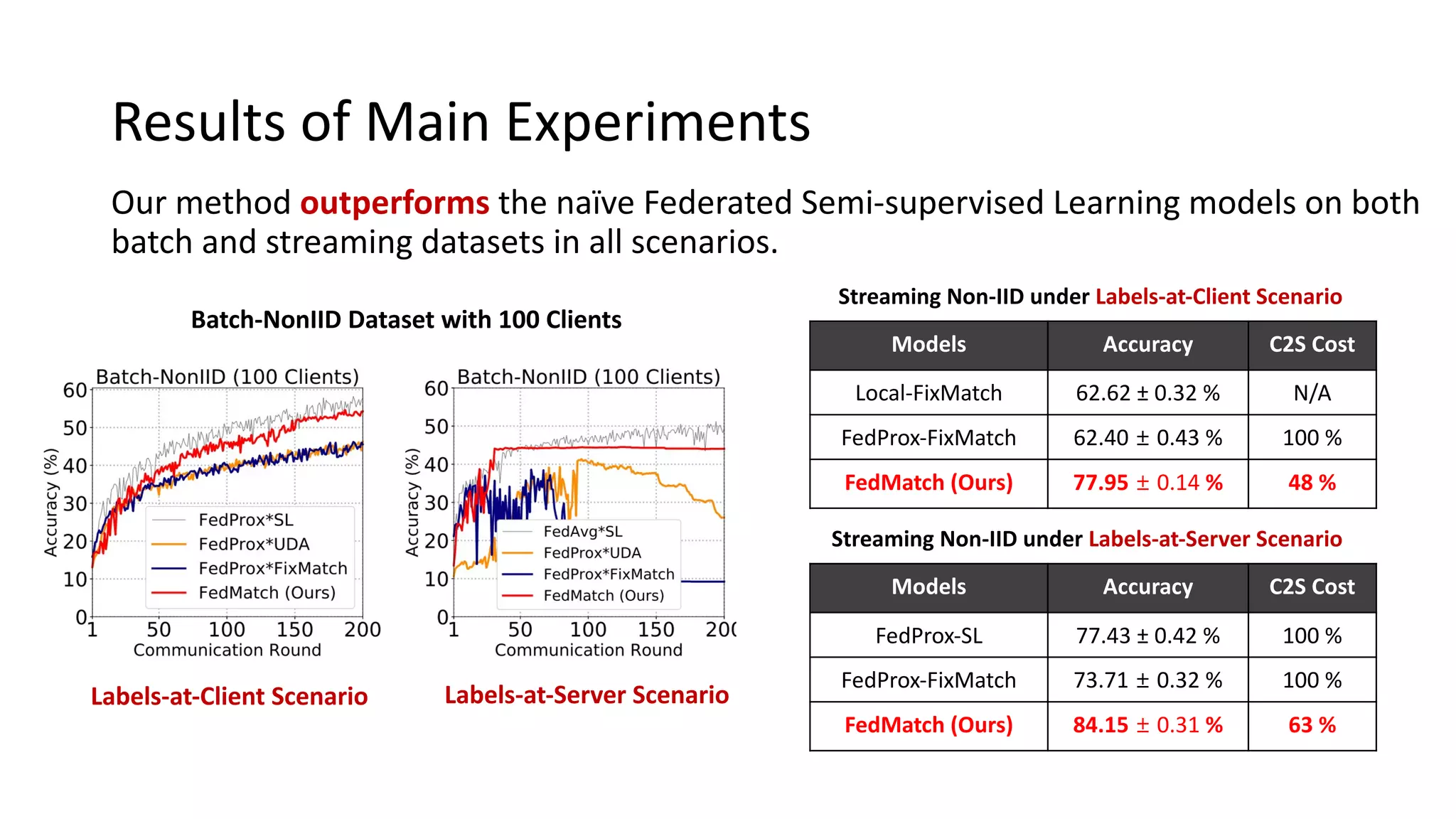

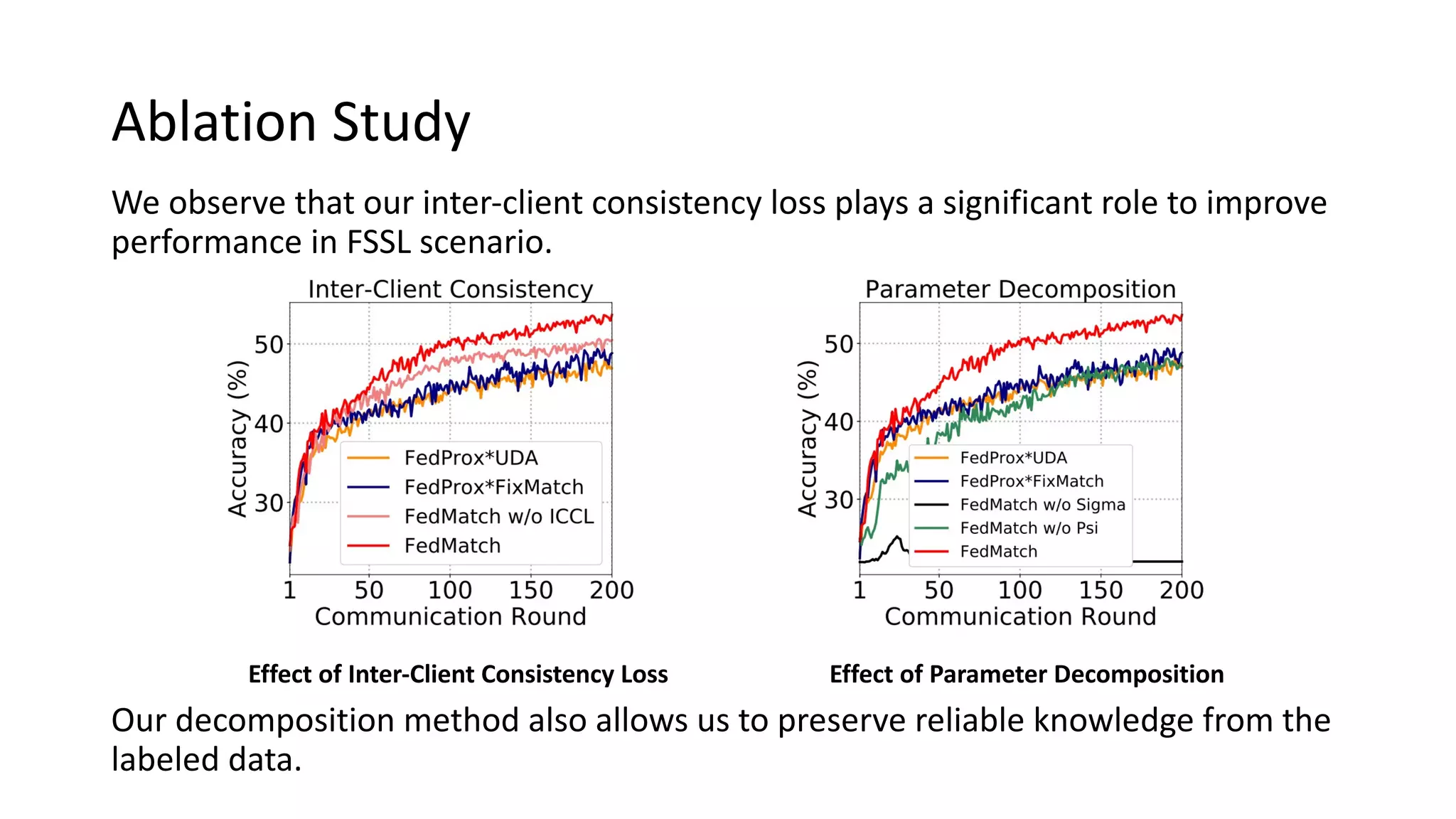

The document addresses federated semi-supervised learning (FSSL), a setting in which clients with limited labeled data share knowledge through federated training. It introduces FedMatch, a method that combines an inter-client consistency loss with parameter decomposition so that learning remains effective in scenarios where client data is either partially labeled or entirely unlabeled. Experimental results show that FedMatch significantly outperforms baseline FSSL approaches on both batch and streaming datasets.
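
The two ingredients named above can be illustrated with a minimal sketch. The summary gives no formulas, so everything here is an assumption made for illustration: the consistency term is taken to be the mean KL divergence between the predictions of a few fixed "helper" client models and the local model on one unlabeled example, and the decomposition is taken to be an additive split of the weights into a part updated from labeled data (`sigma`) and a part updated from unlabeled data (`psi`). All function and variable names are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores to a probability vector (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def inter_client_consistency(local_probs, helper_probs_list):
    """Assumed form: mean KL(helper || local) over the helper models.

    The helper predictions are treated as fixed targets; only the local
    model would receive gradients from this term.
    """
    if not helper_probs_list:
        return 0.0
    total = sum(kl_divergence(h, local_probs) for h in helper_probs_list)
    return total / len(helper_probs_list)


def compose(sigma, psi):
    """Assumed additive decomposition: theta = sigma + psi."""
    return [s + p for s, p in zip(sigma, psi)]


def sgd_step(part, grad, lr):
    """Plain SGD update applied to one part of the parameters only."""
    return [w - lr * g for w, g in zip(part, grad)]


# One unlabeled example, scored by the local model and two helper models.
local = softmax([2.0, 0.5, 0.1])
helpers = [softmax([2.0, 0.5, 0.1]), softmax([0.1, 0.5, 2.0])]
consistency = inter_client_consistency(local, helpers)

# Disjoint updates: a supervised gradient moves sigma while psi is frozen,
# and an unsupervised gradient moves psi while sigma is frozen.
sigma, psi = [0.1, -0.2], [0.0, 0.3]
sigma = sgd_step(sigma, grad=[0.5, 0.5], lr=0.1)    # labeled-data step
psi = sgd_step(psi, grad=[-0.2, 0.1], lr=0.1)       # unlabeled-data step
theta = compose(sigma, psi)
```

Under these assumptions, a helper that agrees with the local model contributes zero to the consistency term, while a disagreeing helper pulls the local prediction toward its own. Keeping `sigma` and `psi` separate means an update driven by unlabeled data never overwrites what was learned from labels.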