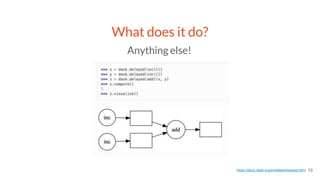

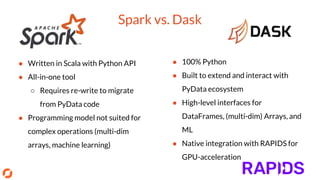

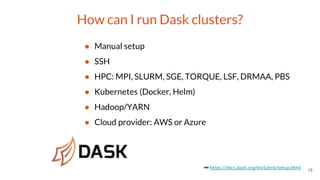

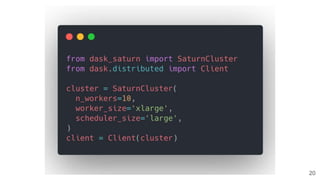

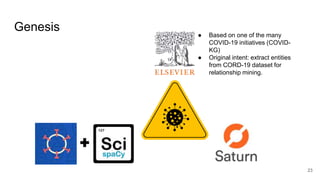

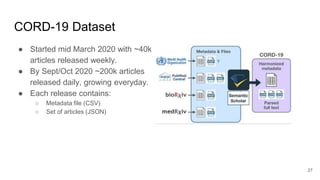

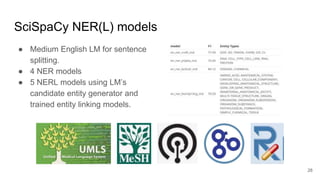

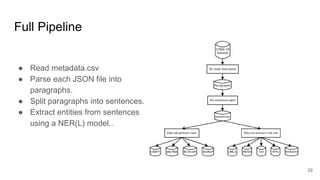

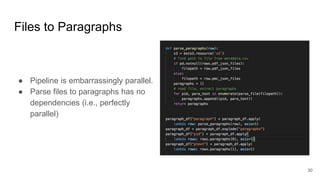

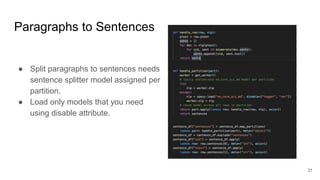

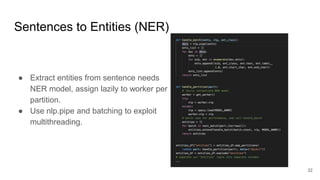

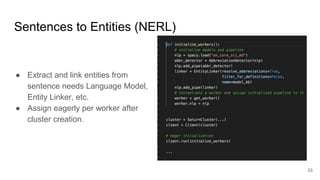

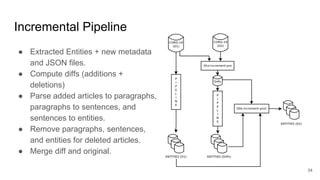

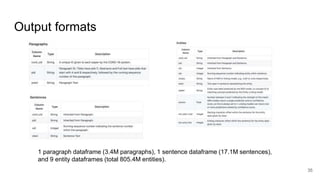

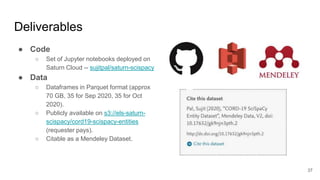

This document provides an overview of a project that extracted entities from the CORD-19 dataset using SciSpaCy named entity recognition (NER) models on a Dask cluster. The goals were to create standoff entity annotations for the CORD-19 papers and to output the data in a structured format. The pipeline parsed papers into paragraphs, split paragraphs into sentences, and then extracted entities from each sentence using several NER models. The output was stored in Parquet files that can be read with Dask or Spark. The project delivered Jupyter notebooks demonstrating the code, along with the entity data in Parquet format (around 70 GB in total).
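The paper → paragraphs → sentences → entities flow can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the project's code. It assumes CORD-19-style JSON input with `paper_id`, `abstract` and `body_text` fields. It uses a naive regex sentence splitter. It also replaces the SciSpaCy models with a hypothetical dictionary matcher (`make_lexicon_ner`) so that it runs without model downloads. The record layout (`EntityRecord`, with offsets relative to the paragraph) is likewise hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import asdict, dataclass
from typing import Callable, Iterator

# A NER callable maps a sentence to (start, end, label) spans relative to that sentence.
NerFn = Callable[[str], list[tuple[int, int, str]]]


@dataclass
class EntityRecord:
    """One standoff annotation (hypothetical layout): the entity is located by
    character offsets into its paragraph rather than embedded in the text."""
    paper_id: str
    paragraph_idx: int
    sentence_idx: int
    start: int   # character offset within the paragraph (inclusive)
    end: int     # character offset within the paragraph (exclusive)
    label: str
    text: str    # copy of the covered text, kept for convenience
    model: str   # name of the NER model that produced the span


def paper_to_paragraphs(paper: dict) -> list[str]:
    """Flatten a CORD-19-style JSON paper into paragraphs: abstract first, then body."""
    blocks = paper.get("abstract", []) + paper.get("body_text", [])
    return [b["text"] for b in blocks if b.get("text")]


# Naive splitter: a run of text up to and including terminal punctuation (or the end).
_SENTENCE = re.compile(r"\S[^.!?]*(?:[.!?]+|$)")


def split_sentences(paragraph: str) -> list[tuple[int, str]]:
    """Return (offset, sentence) pairs; a stand-in for a real sentence splitter."""
    return [(m.start(), m.group().rstrip()) for m in _SENTENCE.finditer(paragraph)]


def make_lexicon_ner(lexicon: dict[str, str]) -> NerFn:
    """Hypothetical dictionary matcher standing in for a SciSpaCy NER model."""
    patterns = [
        (re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE), label)
        for term, label in lexicon.items()
    ]

    def ner(sentence: str) -> list[tuple[int, int, str]]:
        spans = [
            (m.start(), m.end(), label)
            for pat, label in patterns
            for m in pat.finditer(sentence)
        ]
        return sorted(spans)

    return ner


def extract_entities(paper: dict, models: dict[str, NerFn]) -> Iterator[EntityRecord]:
    """Paper -> paragraphs -> sentences -> entities, one record per span per model."""
    pid = paper["paper_id"]
    for p_idx, para in enumerate(paper_to_paragraphs(paper)):
        for s_idx, (s_off, sent) in enumerate(split_sentences(para)):
            for model_name, ner in models.items():
                for start, end, label in ner(sent):
                    # Shift sentence-relative offsets so they are paragraph-relative.
                    a, b = s_off + start, s_off + end
                    yield EntityRecord(pid, p_idx, s_idx, a, b, label, para[a:b], model_name)


if __name__ == "__main__":
    demo_paper = {
        "paper_id": "demo-0001",
        "abstract": [{"text": "SARS-CoV-2 causes COVID-19. Fever is common."}],
        "body_text": [{"text": "Remdesivir was tested in patients."}],
    }
    toy = make_lexicon_ner({"COVID-19": "DISEASE", "fever": "DISEASE", "remdesivir": "CHEMICAL"})
    for row in extract_entities(demo_paper, {"toy_lexicon": toy}):
        print(asdict(row))
```

To use a real SciSpaCy model instead of the toy matcher, the NER callable could wrap a loaded spaCy pipeline. For example, load it with `nlp = spacy.load("en_ner_bc5cdr_md")` and wrap it as `lambda s: [(e.start_char, e.end_char, e.label_) for e in nlp(s).ents]`. To distribute the work, the per-paper function could be mapped over a Dask bag with `dask.bag.from_sequence(papers).map(lambda p: [asdict(r) for r in extract_entities(p, models)]).flatten()`, converted with `.to_dataframe()`, and written with `.to_parquet(...)`. How the original project partitioned and wrote its Parquet files is not described here. Keeping offsets paragraph-relative means each annotation can be checked against the source text with a simple slice.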