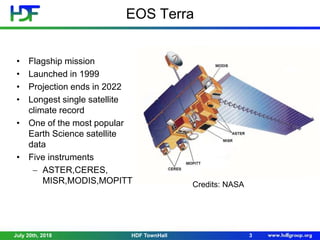

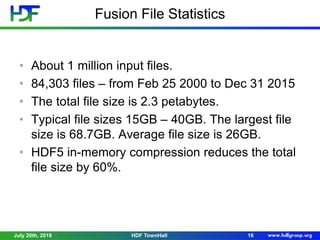

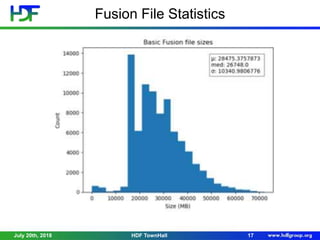

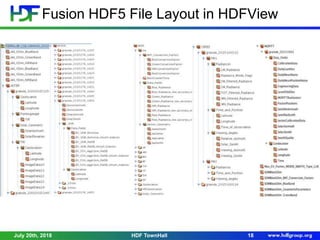

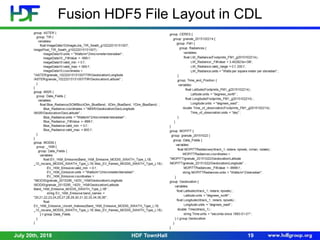

The Terra Data Fusion Project aims to fuse data from the five instruments aboard NASA's Terra satellite (ASTER, CERES, MISR, MODIS, and MOPITT) into a single product. The main challenges are the huge data volumes, input data held at different locations, and differing instrument granularities, data storage methods, and file formats. The project addressed these by using NCSA supercomputing facilities, converting files to HDF5, organizing data by instrument and granule, and adding metadata. The final fused data product draws on over 1 million input files totaling 2.3 petabytes, reduced to 1 petabyte using HDF5 compression.
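As a rough illustration of organizing data by instrument and granule with attached metadata, the sketch below builds an HDF5-style group layout in plain Python. The path scheme, the `group_path` and `build_layout` helpers, the granule IDs, and the file names are all hypothetical and are not taken from the project's actual product. The final lines only redo the arithmetic on the quoted sizes.

```python
from collections import defaultdict

# The five Terra instruments named in the summary.
INSTRUMENTS = ("ASTER", "CERES", "MISR", "MODIS", "MOPITT")


def group_path(instrument: str, granule_id: str) -> str:
    """Return a hypothetical HDF5-style group path for one granule."""
    if instrument not in INSTRUMENTS:
        raise ValueError(f"unknown instrument: {instrument}")
    return f"/{instrument}/{granule_id}"


def build_layout(records):
    """Nest (instrument, granule_id, source_file) records by instrument, then granule.

    Each granule entry carries its group path and a small metadata dict,
    mimicking attributes attached to an HDF5 group.
    """
    layout = defaultdict(dict)
    for instrument, granule_id, source_file in records:
        layout[instrument][granule_id] = {
            "path": group_path(instrument, granule_id),
            "attrs": {"source_file": source_file},  # illustrative metadata only
        }
    return dict(layout)


# Hypothetical input records; real granule IDs and file names will differ.
records = [
    ("MODIS", "granule_0001", "modis_input_a.hdf"),
    ("MODIS", "granule_0002", "modis_input_b.hdf"),
    ("MISR", "granule_0001", "misr_input_a.hdf"),
]
layout = build_layout(records)

# Arithmetic on the sizes quoted in the summary (petabytes).
raw_pb, compressed_pb = 2.3, 1.0
compression_ratio = raw_pb / compressed_pb
space_saved = 1 - compressed_pb / raw_pb
print(f"{compression_ratio:.1f}:1 compression, {space_saved:.0%} space saved")
```

On the quoted figures this works out to a compression ratio of roughly 2.3:1, or a little over half of the original volume saved.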

![Acknowledgements](https://image.slidesharecdn.com/kxy-180802020204/85/NASA-Terra-Data-Fusion-24-320.jpg)

Acknowledgements

This work was supported by NASA ACCESS Grant #NNX16AM07A. Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author[s] and do not necessarily reflect the views of NASA.

July 20th, 2018, HDF TownHall