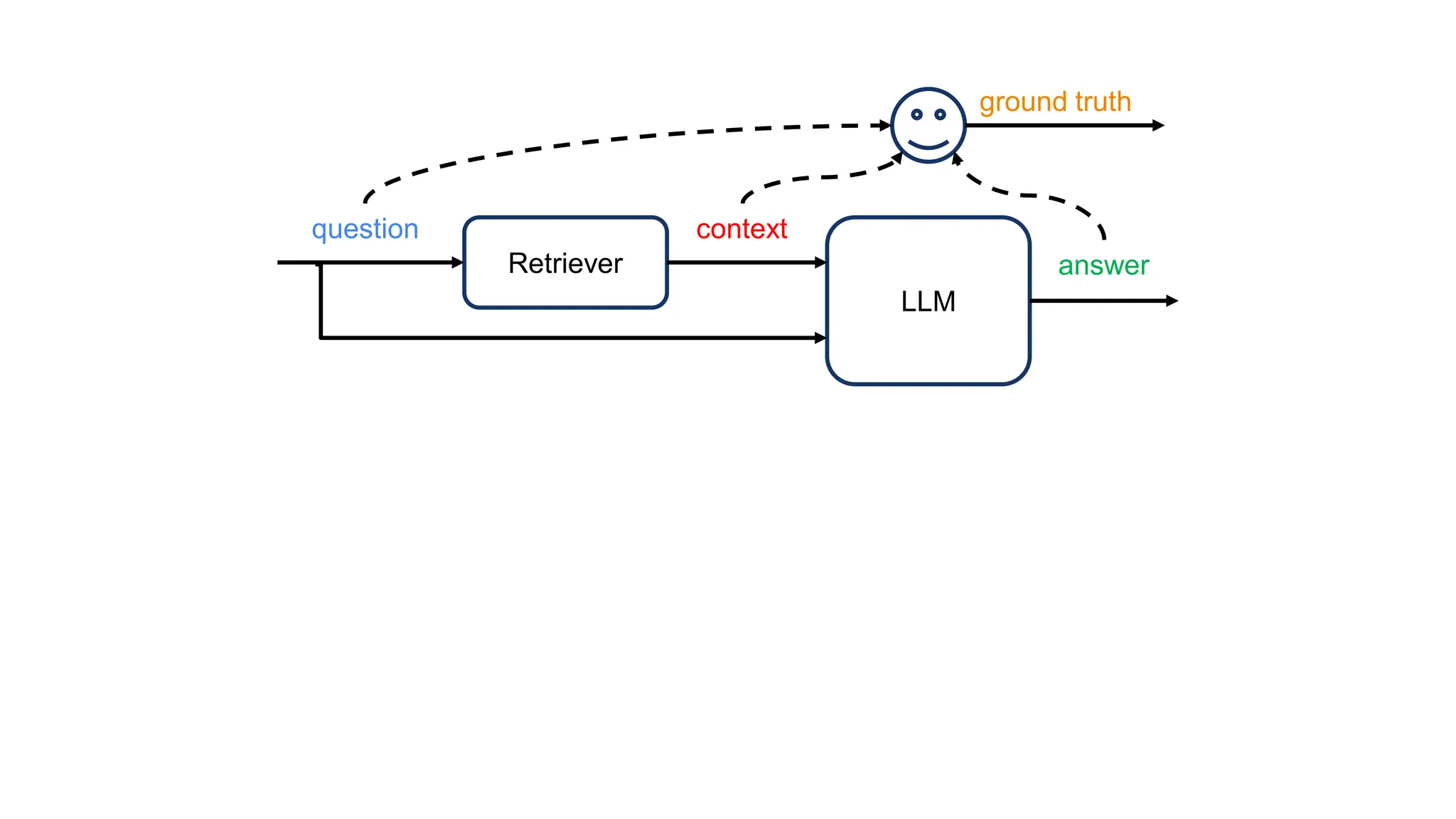

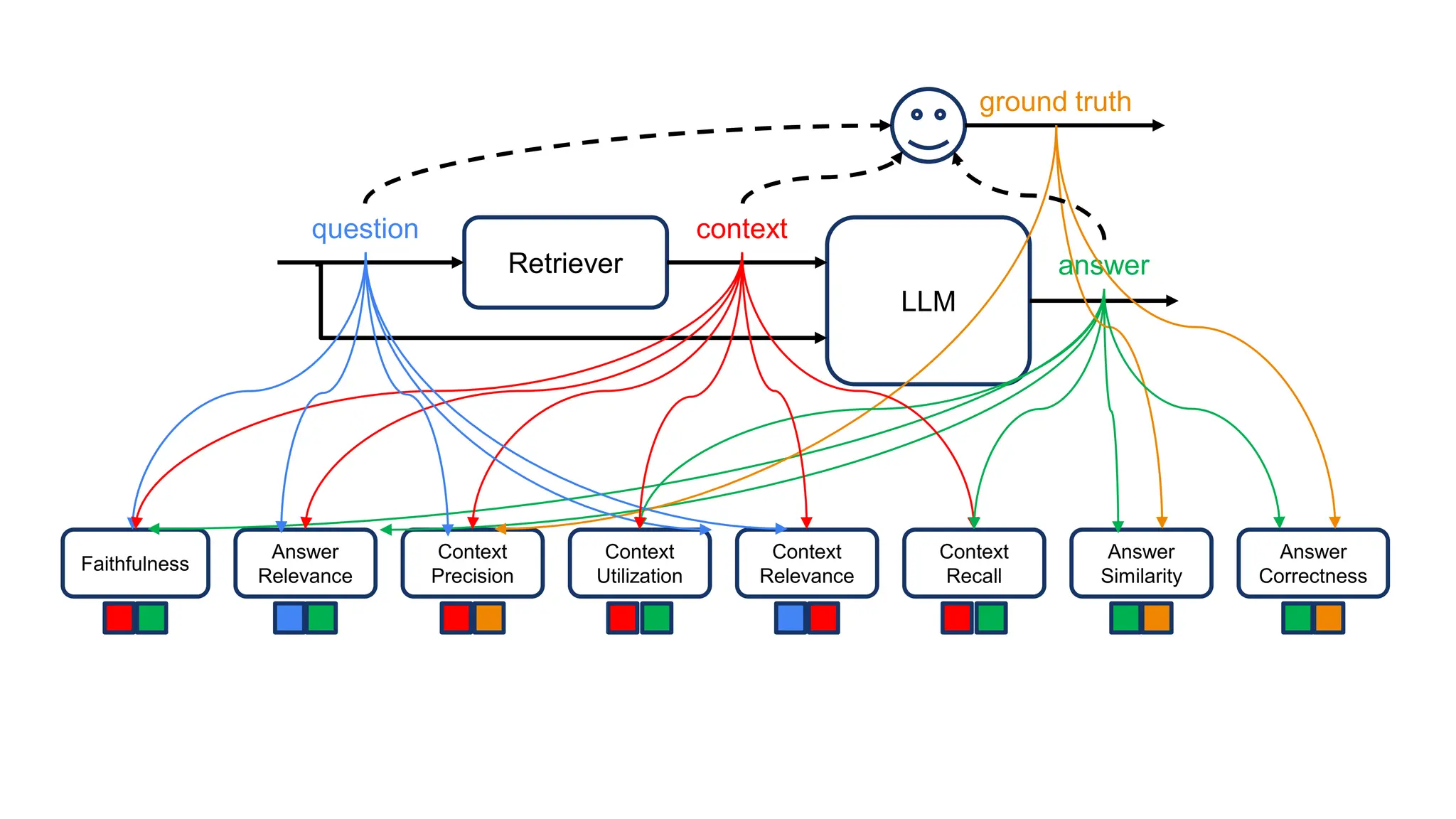

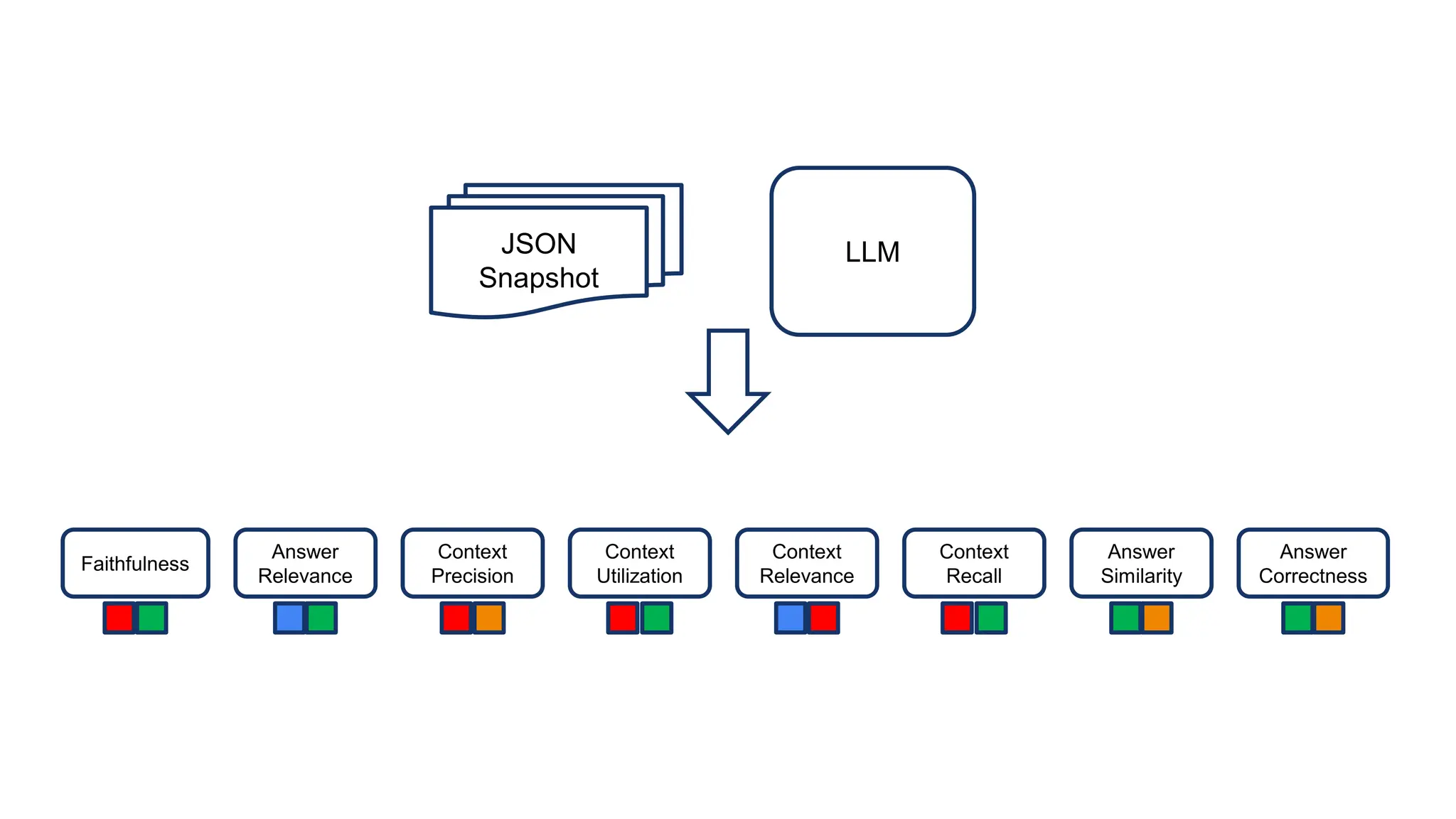

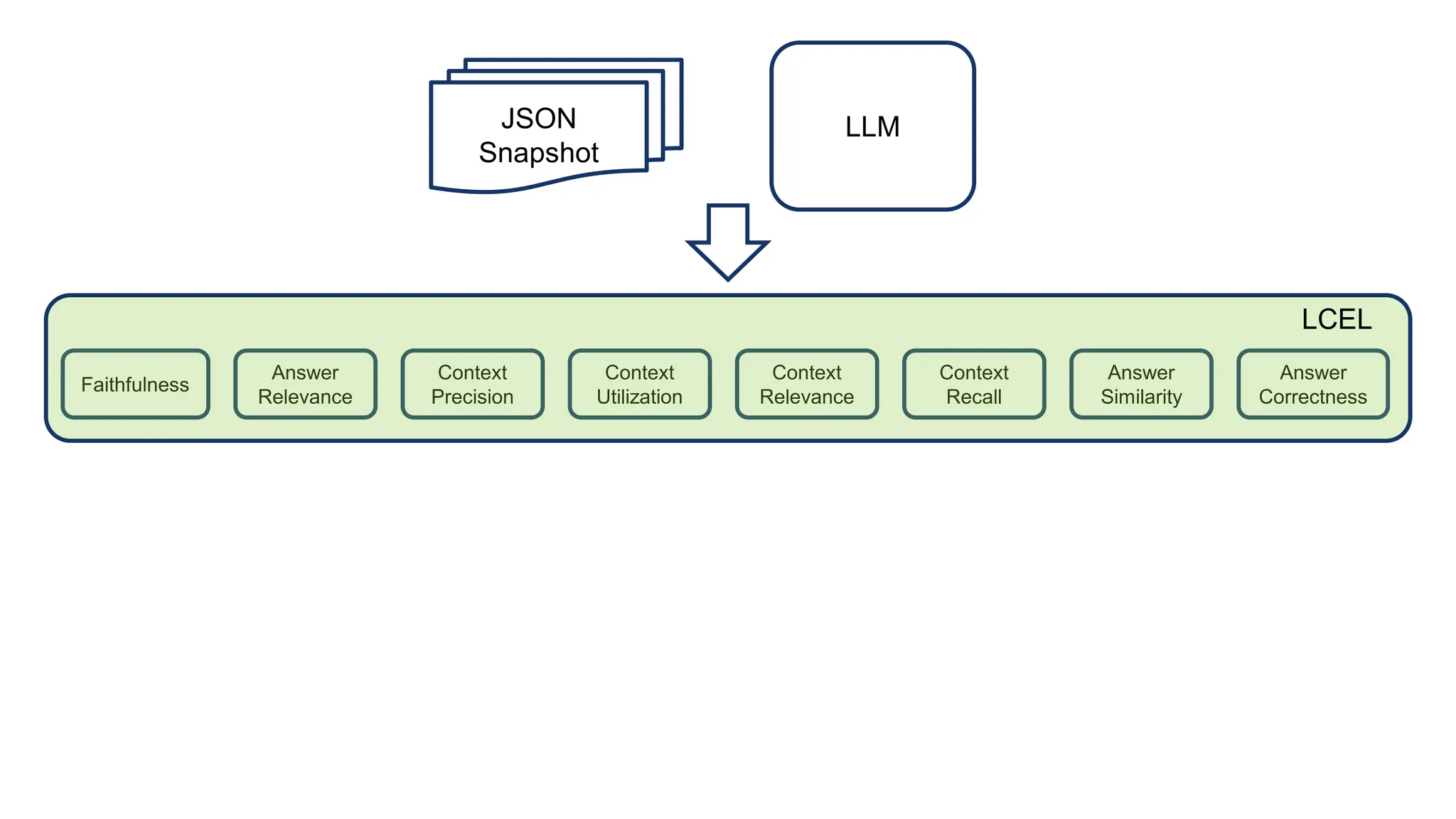

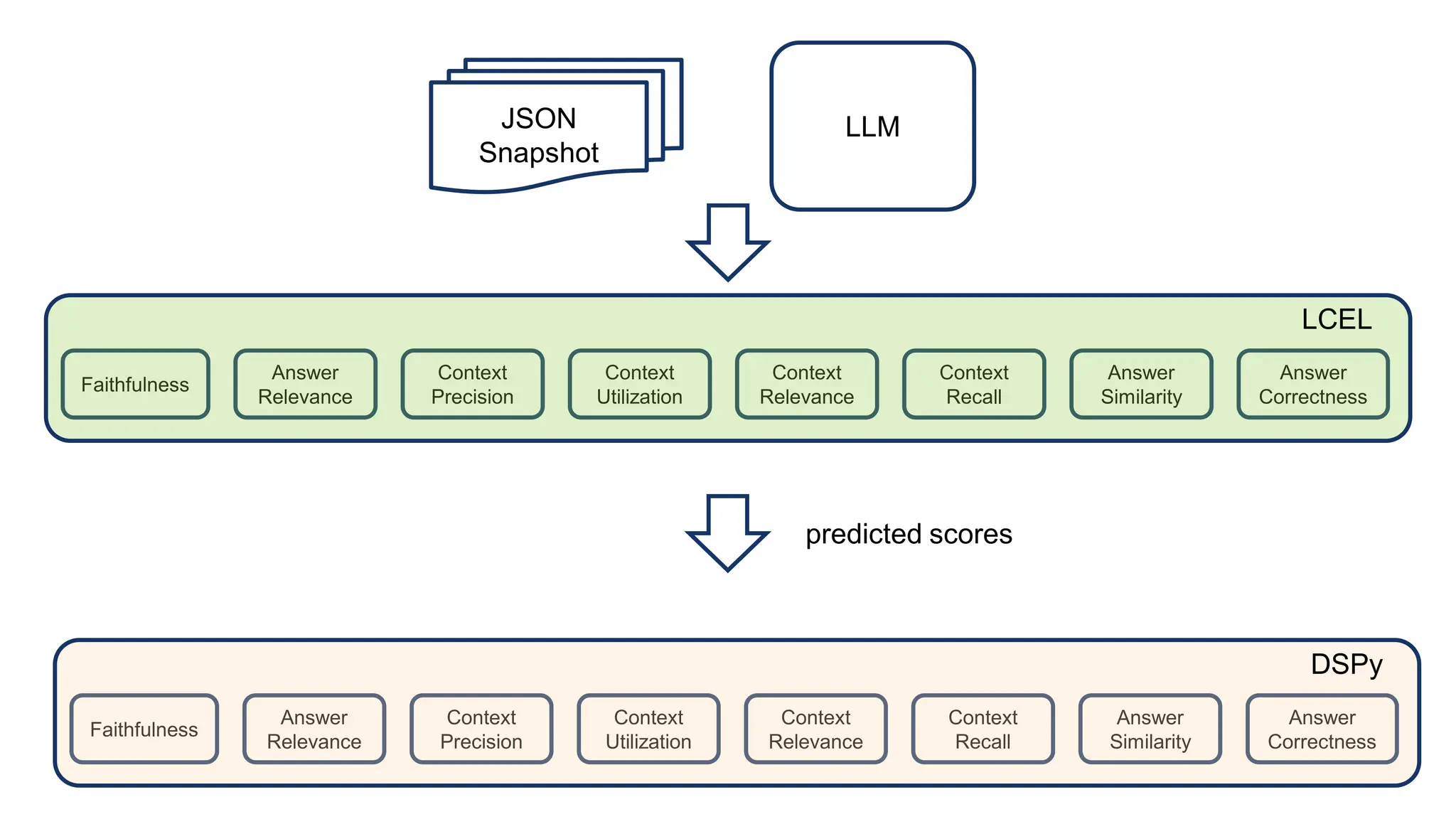

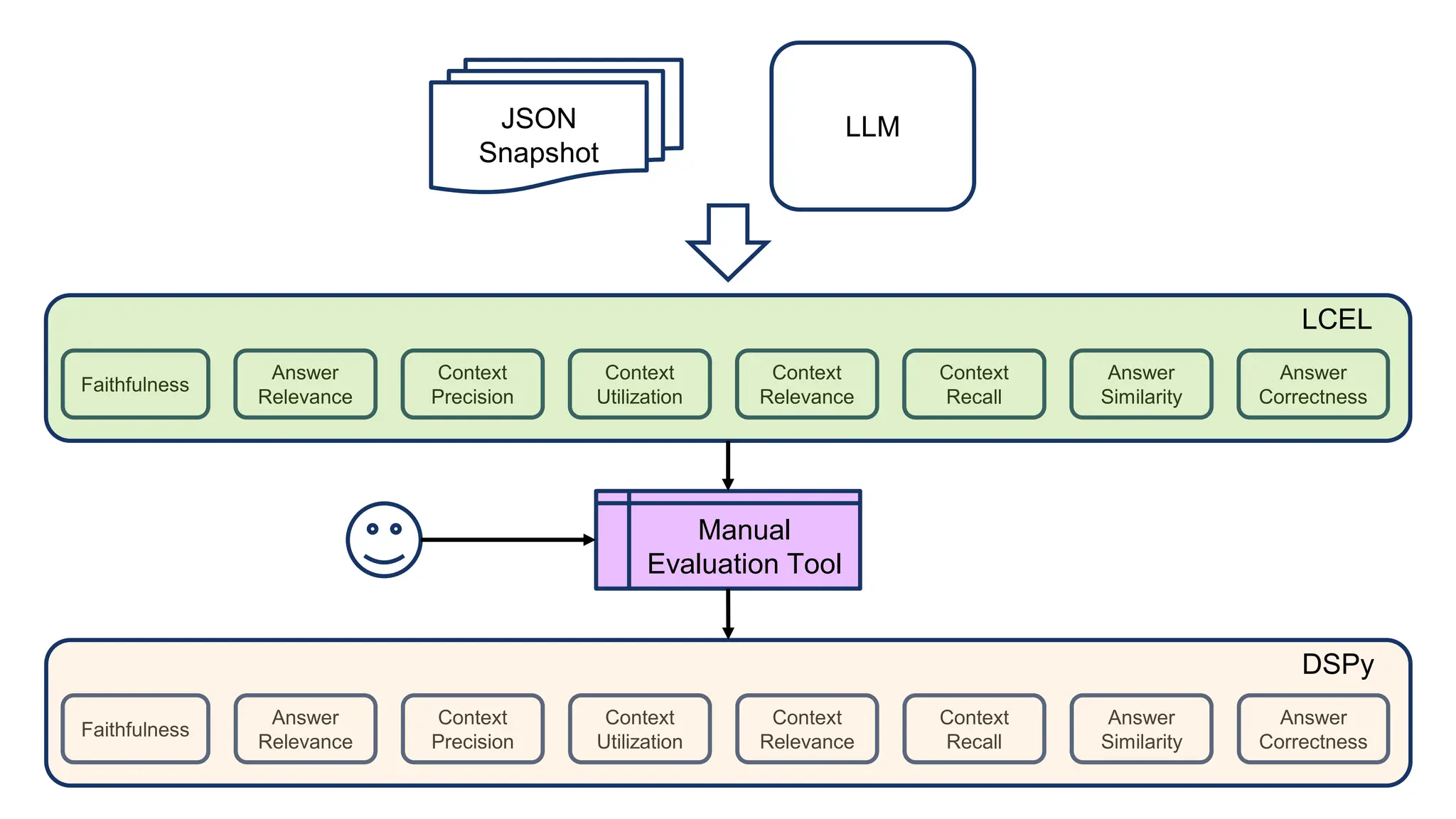

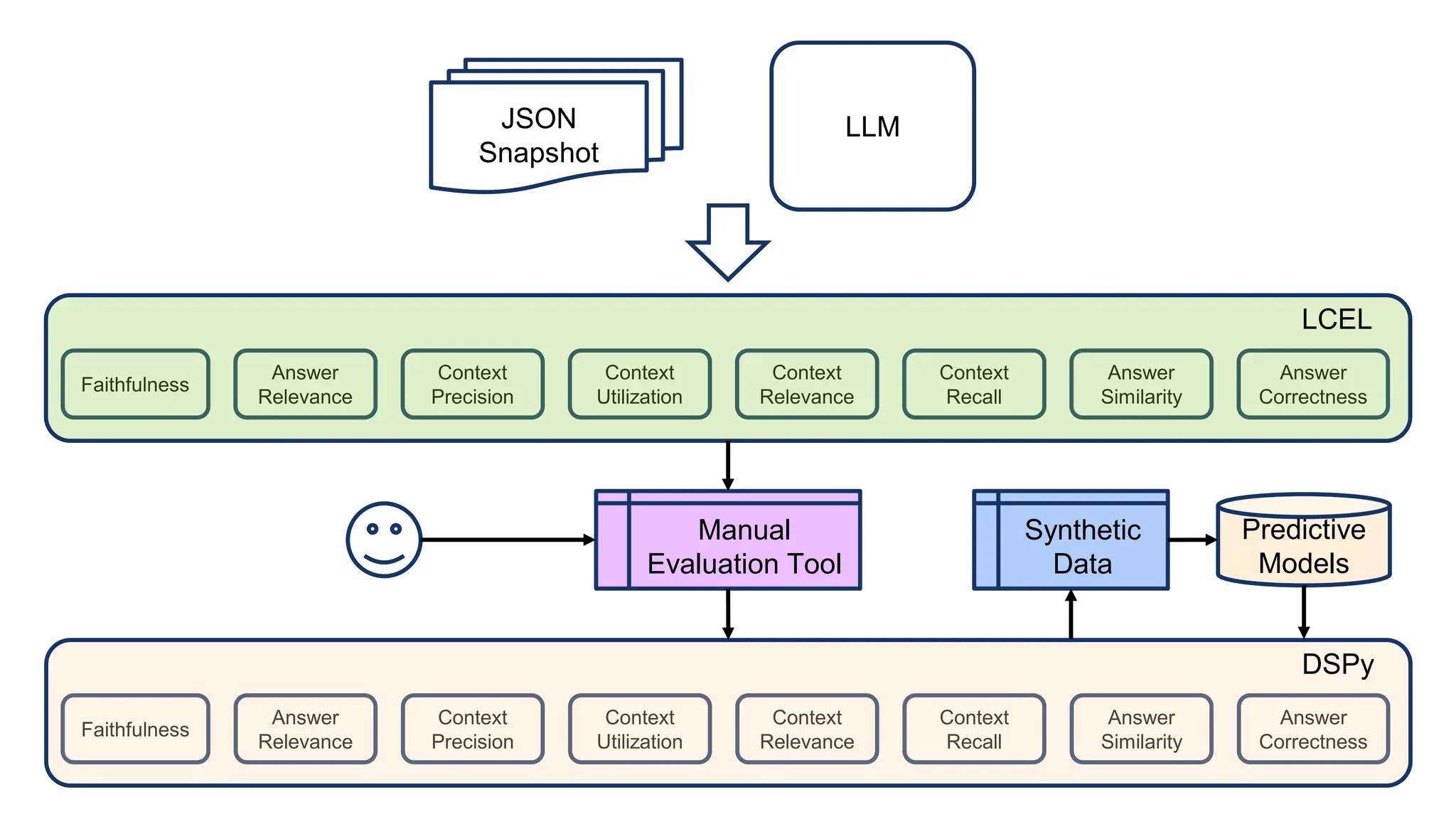

The document describes an LLM-based evaluator for retrieval-augmented generation (RAG). It covers four metrics, faithfulness, answer relevance, precision, and recall, which are used to validate generated answers against ground truth. Evaluation results are captured as JSON snapshots, and the document also mentions tools for manual evaluation. The author provides a GitHub link for further reference to the evaluation models and tools used.
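To make the metrics and the snapshot idea concrete, here is a minimal sketch, not the author's implementation. It assumes plain set-based definitions of precision and recall over retrieved versus ground-truth chunk IDs, which may differ from what the evaluator actually computes. The names `EvalSnapshot`, `precision_recall`, and `make_snapshot` are hypothetical. Faithfulness and answer relevance are left as optional fields, since they would come from an LLM judge that is not shown here.

```python
import json
from dataclasses import dataclass, asdict
from typing import Iterable, Optional, Tuple


@dataclass
class EvalSnapshot:
    """Hypothetical record of one evaluation result."""
    question: str
    precision: float
    recall: float
    # These two would be scored by an LLM judge; None means "not scored".
    faithfulness: Optional[float] = None
    answer_relevance: Optional[float] = None


def precision_recall(retrieved: Iterable[str],
                     relevant: Iterable[str]) -> Tuple[float, float]:
    """Set-based precision and recall over chunk IDs (an assumption)."""
    retrieved_set, relevant_set = set(retrieved), set(relevant)
    hits = len(retrieved_set & relevant_set)
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant_set) if relevant_set else 0.0
    return precision, recall


def make_snapshot(question: str,
                  retrieved: Iterable[str],
                  relevant: Iterable[str],
                  faithfulness: Optional[float] = None,
                  answer_relevance: Optional[float] = None) -> str:
    """Serialize one evaluation result as a JSON string."""
    precision, recall = precision_recall(retrieved, relevant)
    snapshot = EvalSnapshot(question, precision, recall,
                            faithfulness, answer_relevance)
    # sort_keys keeps the output stable, so snapshots diff cleanly.
    return json.dumps(asdict(snapshot), indent=2, sort_keys=True)


if __name__ == "__main__":
    print(make_snapshot(
        question="example question",
        retrieved=["a", "b", "c", "d"],
        relevant=["a", "b", "e"],
    ))
```

Sorting the keys keeps the serialized output deterministic, which makes it practical to compare a new run against a stored snapshot with an ordinary text diff.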