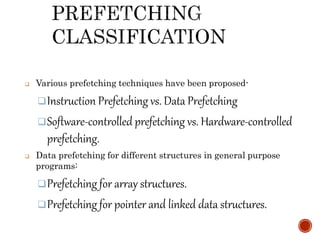

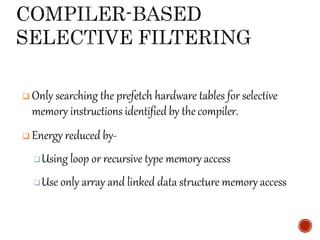

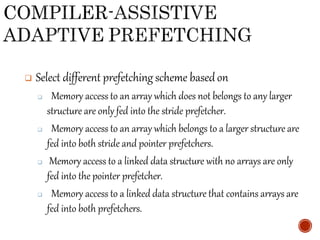

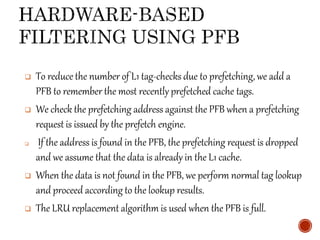

The document discusses energy-efficient hardware data prefetching in advanced computer architecture, motivating it by the performance gap between microprocessors and DRAM. It outlines several prefetching techniques, compares software-controlled and hardware-controlled methods, and highlights the additional energy consumed by aggressive prefetching. It also presents energy-aware prefetching techniques that reduce this energy overhead while improving performance.
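
To make the energy concern concrete, the following is a minimal sketch of a hardware-style stride prefetcher running over a toy address trace. It is not taken from the document: the function name `simulate`, the 64-byte line size, the small LRU cache, and the idea of counting unused prefetches as a stand-in for wasted energy are all illustrative assumptions. The model has no notion of timing, so it only shows how a higher prefetch degree issues more memory requests, not how it affects latency.

```python
from collections import OrderedDict

LINE_BYTES = 64  # assumed cache line size (hypothetical, for illustration)


def simulate(trace, degree, capacity=64):
    """Run a toy stride prefetcher over a list of byte addresses.

    Returns (demand_misses, prefetches_issued, useful_prefetches).
    `degree` is how many lines ahead the prefetcher fetches once it
    sees the same non-zero stride twice in a row.
    """
    # Maps line number -> True if the line was prefetched and not yet used.
    cache = OrderedDict()
    last_line = None
    last_stride = None
    misses = issued = useful = 0

    def insert(line, prefetched):
        cache[line] = prefetched
        if len(cache) > capacity:
            cache.popitem(last=False)  # evict the least recently used line

    for addr in trace:
        line = addr // LINE_BYTES
        if line in cache:
            if cache[line]:
                useful += 1          # first demand hit on a prefetched line
                cache[line] = False
            cache.move_to_end(line)
        else:
            misses += 1
            insert(line, False)

        if last_line is not None:
            stride = line - last_line
            if stride != 0 and stride == last_stride:
                for k in range(1, degree + 1):
                    target = line + k * stride
                    if target not in cache:
                        issued += 1
                        insert(target, True)
            last_stride = stride
        last_line = line

    return misses, issued, useful


# Two short sequential streams at unrelated base addresses.
lines = list(range(8)) + list(range(1000, 1008))
trace = [n * LINE_BYTES for n in lines]

for degree in (1, 4):
    misses, issued, useful = simulate(trace, degree)
    print(f"degree={degree}: misses={misses}, issued={issued}, "
          f"useful={useful}, wasted={issued - useful}")
```

On this trace both configurations take the same 6 demand misses and get 10 useful prefetches, but the degree-4 prefetcher issues 18 prefetches against 12 for degree 1, because it overshoots the end of each stream by four lines instead of one. If each prefetch is assumed to cost a fixed amount of energy, those extra unused requests are pure overhead, which is the kind of cost that energy-aware prefetching tries to filter out.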