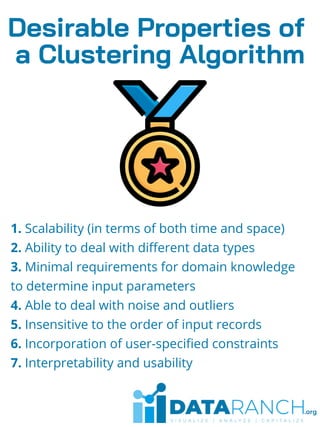

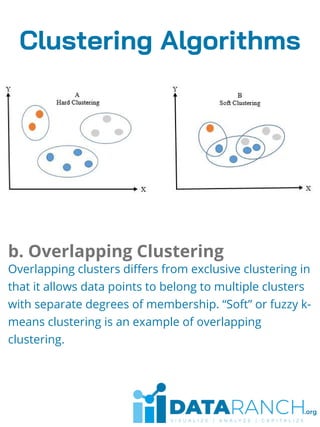

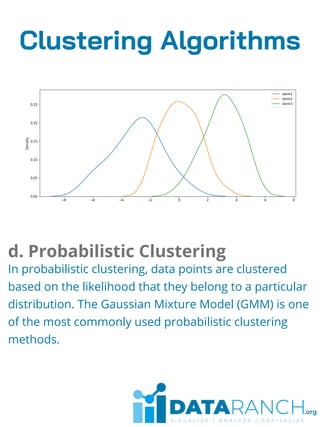

Clustering is an unsupervised learning technique that groups unlabeled data points by similarity. A typical use is segmenting customers into groups so that a business can develop targeted strategies for each. Desirable properties of a clustering algorithm include scalability, the ability to handle different data types and noisy data, and interpretable results. Common algorithms are k-means for exclusive clustering (each point belongs to exactly one cluster), fuzzy c-means for overlapping clusters, hierarchical clustering using either an agglomerative (bottom-up) or divisive (top-down) approach, and Gaussian mixture models for probabilistic clustering. Clustering has applications in marketing, biology, libraries, and city planning. Challenges include computational complexity, long training times, inaccurate results, and a lack of transparency.
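To make the exclusive-clustering idea concrete, here is a minimal sketch of k-means (Lloyd's algorithm) in plain Python. The function name `kmeans`, the random-sample initialisation, the `seed` parameter, and the toy customer data are all illustrative assumptions, not something taken from the source material.

```python
import math
import random


def kmeans(points, k, iterations=100, seed=0):
    """Minimal k-means sketch for a list of equal-length numeric tuples."""
    rng = random.Random(seed)
    # Assumption: initialise centroids by sampling k distinct points.
    # Other schemes (for example k-means++) are common in practice.
    centroids = rng.sample(points, k)
    labels = None
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the centroids are stable
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return labels, centroids


# Hypothetical customers: (monthly visits, average basket size).
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
labels, centroids = kmeans(points, k=2)
print(labels)
print(centroids)
```

On this toy data the first three customers should end up in one cluster and the last three in the other; which cluster receives index 0 depends on the initialisation. For real workloads a library implementation is usually the better choice, since this sketch makes no attempt at efficiency or robust initialisation.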