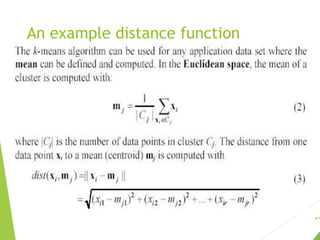

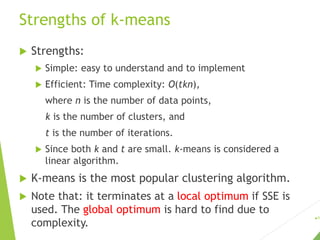

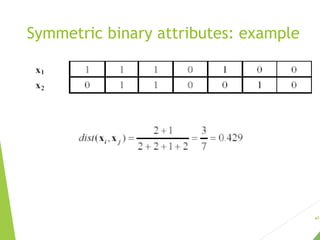

The document covers unsupervised learning, with a focus on clustering techniques such as k-means and hierarchical clustering, and distinguishes supervised from unsupervised approaches. It introduces the basic concepts and algorithms and illustrates clustering's usefulness with applications such as market segmentation and document organization. It also addresses the strengths and weaknesses of k-means, distance functions, and methods for cluster evaluation.
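For orientation, here is a minimal sketch of the standard k-means procedure (Lloyd's algorithm) mentioned in the summary. It is not taken from the document. The function names `assign`, `kmeans`, and `sse`, the choice of Euclidean distance, the random initialization, and the use of the sum of squared errors as an evaluation measure are all assumptions made for illustration.

```python
import random
from math import dist  # Euclidean distance, Python 3.8+


def assign(points, centroids):
    """Group each point with its nearest centroid (Euclidean distance assumed)."""
    clusters = [[] for _ in centroids]
    for p in points:
        nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
        clusters[nearest].append(p)
    return clusters


def kmeans(points, k, max_iter=100, seed=0):
    """Illustrative k-means: alternate assignment and centroid update."""
    rng = random.Random(seed)
    # Assumption: initial centroids are k distinct points drawn at random.
    centroids = rng.sample(points, k)
    for _ in range(max_iter):
        clusters = assign(points, centroids)
        # New centroid = coordinate-wise mean; an empty cluster keeps its old centroid.
        updated = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if updated == centroids:  # no centroid moved: converged
            break
        centroids = updated
    return centroids, assign(points, centroids)


def sse(centroids, clusters):
    """Sum of squared distances from each point to its cluster centroid."""
    return sum(dist(p, c) ** 2 for c, cluster in zip(centroids, clusters) for p in cluster)
```

Calling `kmeans(points, k)` on a list of coordinate tuples returns the final centroids and the clusters assigned to them. A lower `sse` value indicates tighter clusters for a given `k`, although the result can depend on the initial centroids.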