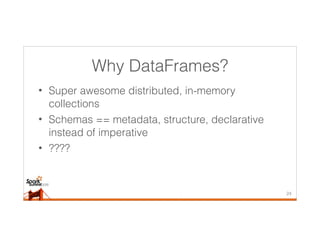

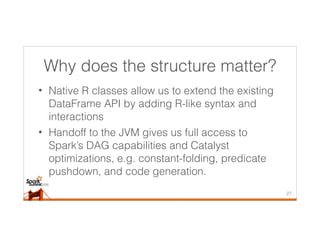

SparkR provides an R interface to Apache Spark that lets R developers use Spark's distributed processing through DataFrames. A DataFrame imposes a schema on the data held in an RDD, which makes that data easier to access and manipulate than a raw RDD. SparkR DataFrames support R-like syntax and interactions with data while still benefiting from Spark's optimizations, because operations are passed to the JVM. The speaker demonstrated SparkR DataFrame features and discussed the project's roadmap, including expanding SparkR to support Spark's machine learning capabilities.

![How can I use Spark to do something simple? (slide 13)](https://image.slidesharecdn.com/02chrisfreeman-150624013446-lva1-app6891/85/A-Data-Frame-Abstraction-Layer-for-SparkR-Chris-Freeman-Alteryx-13-320.jpg)

    peopleRDD <- textFile(sc, "people.txt")

    # Split each line into its comma-separated fields
    lines <- flatMap(peopleRDD,
                     function(line) {
                       strsplit(line, ", ")
                     })

    # Convert the second field of each record to a number
    ageInt <- lapply(lines,
                     function(line) {
                       as.numeric(line[2])
                     })

![How can I use Spark to do something simple? (slide 14)](https://image.slidesharecdn.com/02chrisfreeman-150624013446-lva1-app6891/85/A-Data-Frame-Abstraction-Layer-for-SparkR-Chris-Freeman-Alteryx-14-320.jpg)

    peopleRDD <- textFile(sc, "people.txt")

    # Split each line into its comma-separated fields
    lines <- flatMap(peopleRDD,
                     function(line) {
                       strsplit(line, ", ")
                     })

    # Convert the second field of each record to a number
    ageInt <- lapply(lines,
                     function(line) {
                       as.numeric(line[2])
                     })

    # Add up all the values
    sum <- reduce(ageInt, function(x, y) { x + y })

![How can I use Spark to do something simple? (slide 15)](https://image.slidesharecdn.com/02chrisfreeman-150624013446-lva1-app6891/85/A-Data-Frame-Abstraction-Layer-for-SparkR-Chris-Freeman-Alteryx-15-320.jpg)

    peopleRDD <- textFile(sc, "people.txt")

    # Split each line into its comma-separated fields
    lines <- flatMap(peopleRDD,
                     function(line) {
                       strsplit(line, ", ")
                     })

    # Convert the second field of each record to a number
    ageInt <- lapply(lines,
                     function(line) {
                       as.numeric(line[2])
                     })

    # Add up all the values, then divide by the number of input lines
    sum <- reduce(ageInt, function(x, y) { x + y })
    avg <- sum / count(peopleRDD)
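To see what each step of the slide's pipeline produces, the same arithmetic can be traced on a small local vector in base R, with no Spark involved. This is only a sketch: the sample records are hypothetical stand-ins for `people.txt`, and base `strsplit`, `lapply`, `Reduce`, and `length` stand in for the RDD calls `flatMap`, `lapply`, `reduce`, and `count`.

```r
# Hypothetical stand-in for the contents of people.txt (not from the talk)
people <- c("Alice, 30", "Bob, 25", "Carol, 35")

# flatMap step: strsplit() yields one character vector of fields per line
lines <- strsplit(people, ", ")

# lapply step: take the second field of each record and convert it to a number
ageInt <- lapply(lines, function(line) as.numeric(line[2]))

# reduce step: add the values pairwise (named `total` to avoid masking base::sum)
total <- Reduce(function(x, y) x + y, ageInt)

# count step: divide by the number of input lines
avg <- total / length(people)
print(avg)
```

Each local step maps onto one distributed call on the slide, which is why even a simple average takes four separate operations in the RDD API.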

![How can I use Spark to do something simple? (slide 16)](https://image.slidesharecdn.com/02chrisfreeman-150624013446-lva1-app6891/85/A-Data-Frame-Abstraction-Layer-for-SparkR-Chris-Freeman-Alteryx-16-320.jpg)
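For contrast with the RDD pipeline on these slides, here is a minimal sketch of the same average computed through the DataFrame API. It is not taken from the talk: it assumes SparkR 2.x or later (the `sparkR.session()` entry point postdates the presentation), a local Spark installation, and hypothetical sample data with `name` and `age` columns.

```r
library(SparkR)

# Start a local session (SparkR 2.x+ entry point; an assumption, not from the slides)
sparkR.session(master = "local[1]")

# Hypothetical sample data standing in for people.txt
people <- createDataFrame(data.frame(
  name = c("Alice", "Bob", "Carol"),
  age  = c(30, 25, 35),
  stringsAsFactors = FALSE
))

# One aggregate expression replaces the flatMap / lapply / reduce / count chain
result <- collect(agg(people, avg_age = avg(people$age)))
print(result$avg_age)

sparkR.session.stop()
```

Because the DataFrame carries a schema, the `age` column can be referenced by name instead of being parsed out of each line by position.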

![SparkR DataFrame Features (slide 28)](https://image.slidesharecdn.com/02chrisfreeman-150624013446-lva1-app6891/85/A-Data-Frame-Abstraction-Layer-for-SparkR-Chris-Freeman-Alteryx-28-320.jpg)

- Column access using `$` or `[ ]`, just like in R
- dplyr-like DataFrame manipulation:
  - filter
  - groupBy
  - summarize
  - mutate
- Access to external R packages that extend R syntax
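The features listed on this slide can be sketched as follows. This is an illustration under assumptions rather than code from the talk: it uses the SparkR 2.x+ `sparkR.session()` entry point, requires a local Spark installation, and the `name`, `dept`, and `age` columns are hypothetical sample data.

```r
library(SparkR)

# Start a local session (SparkR 2.x+ entry point; an assumption, not from the slides)
sparkR.session(master = "local[1]")

# Hypothetical sample data
df <- createDataFrame(data.frame(
  name = c("Alice", "Bob", "Carol", "Dan"),
  dept = c("eng", "eng", "ops", "ops"),
  age  = c(30, 25, 35, 41),
  stringsAsFactors = FALSE
))

# Column access with `$` or `[ ]`
ages_only <- select(df, df$age)
name_age  <- df[, c("name", "age")]

# filter: keep rows matching a condition
older <- collect(filter(df, df$age > 28))

# mutate: add a derived column
with_next <- collect(mutate(df, age_next = df$age + 1))

# groupBy + summarize: one row of aggregates per group
by_dept <- collect(summarize(groupBy(df, df$dept),
                             mean_age = avg(df$age),
                             n = n(df$name)))

print(by_dept)

sparkR.session.stop()
```

Each call builds a new distributed DataFrame; `collect` is the point at which results are brought back into an ordinary local R data.frame.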