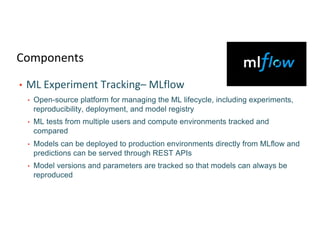

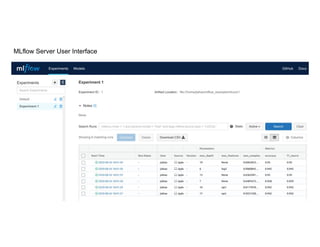

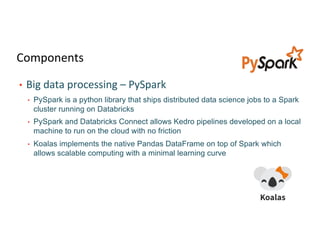

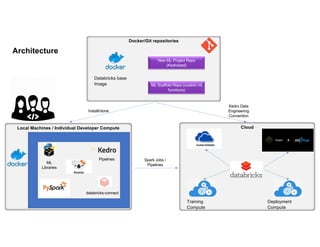

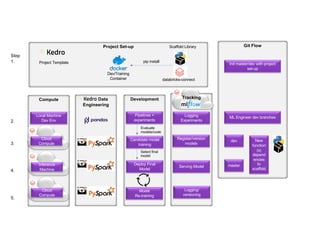

The document outlines a collaborative, scalable machine learning workflow for ML research and development. Its key components are Docker, which keeps environments consistent across machines; Kedro, which structures ML pipelines; MLflow, which tracks experiments; and Databricks, which ties these pieces into the broader model lifecycle. The architecture connects cloud resources to locally developed pipelines so that models are tracked, logged, and deployed consistently.