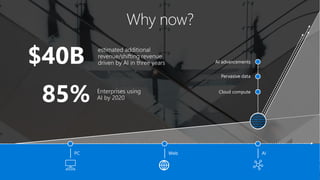

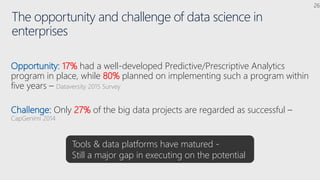

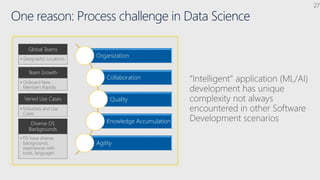

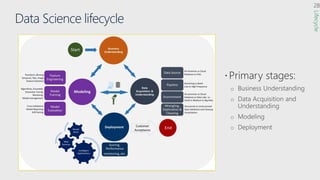

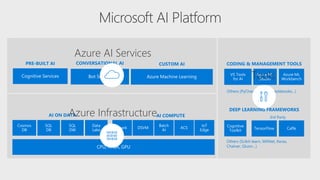

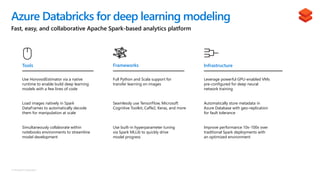

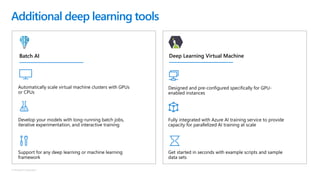

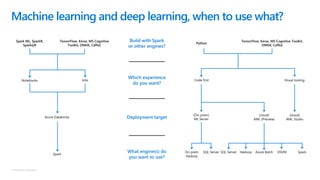

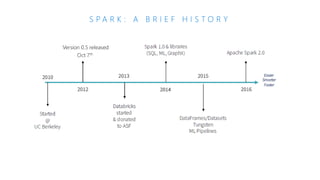

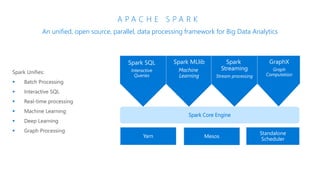

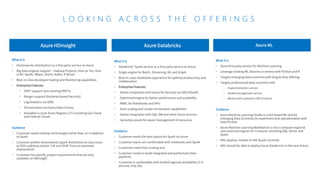

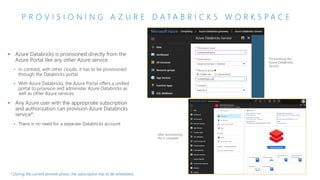

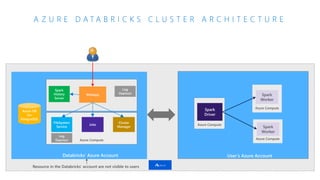

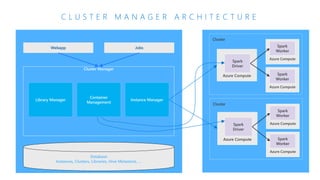

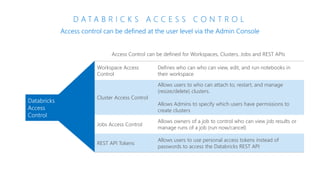

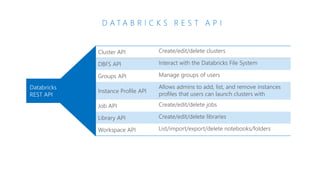

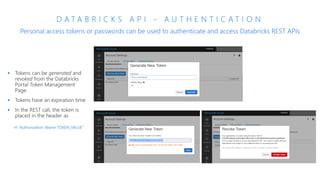

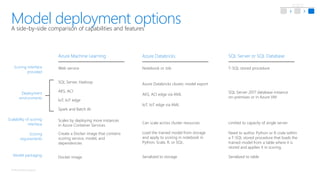

The document discusses the current state of data science in enterprises and the challenges it faces, noting that although many organizations plan to implement advanced analytics programs, a significant number of big data projects fail. It surveys tools and platforms for machine learning and big data analytics, focusing on Azure offerings such as Azure Databricks and Azure ML. It also emphasizes that collaboration and a diverse mix of skills are important for improving data science practices within organizations.