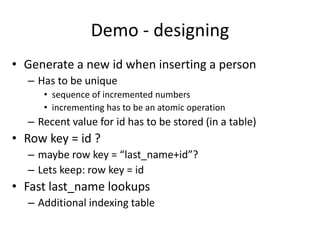

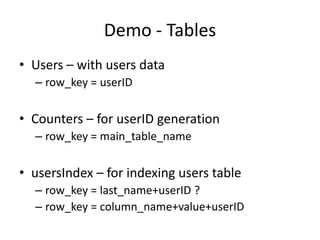

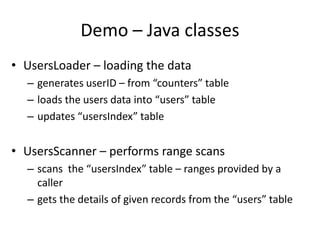

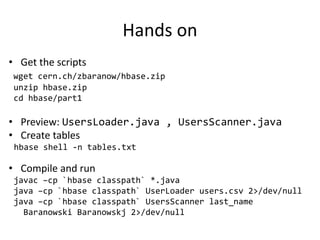

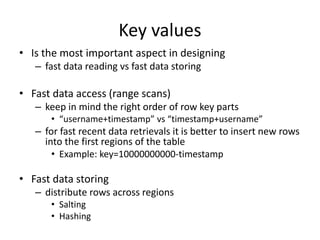

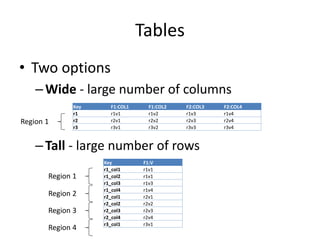

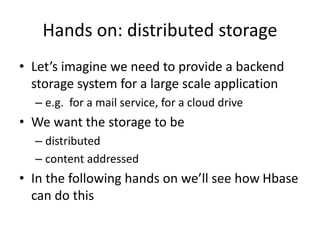

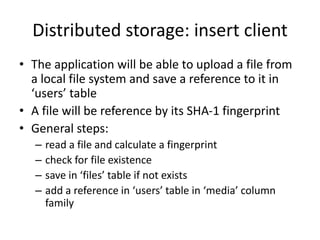

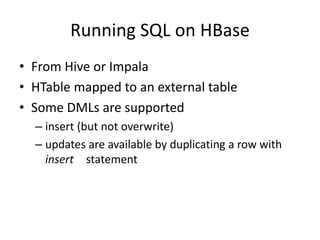

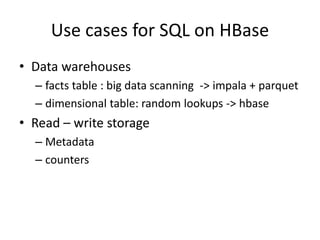

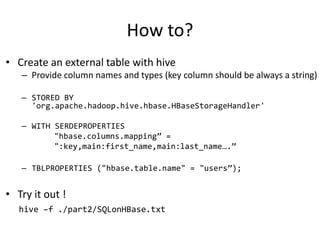

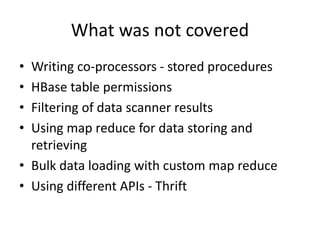

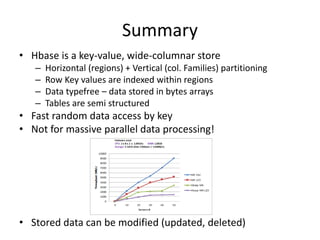

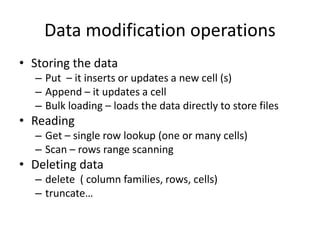

The document outlines a comprehensive guide on using HBase, including Java API interactions for data storage, querying, and bulk data loading techniques. It details hands-on demonstrations for distributed storage and SQL integration, explaining how to design tables and manage data efficiently in a NoSQL environment. Additionally, it highlights the importance of schema design and provides use cases for HBase in large-scale applications, emphasizing its capabilities as a key-value store.

![Getting data with Java API

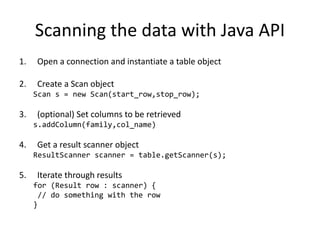

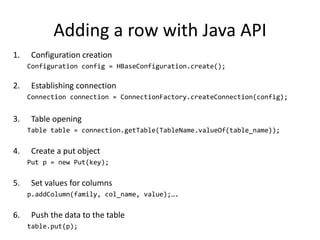

1. Open a connection and instantiate a table object

2. Create a get object

Get g = new Get(key);

3. (optional) specify certain family or column only

g.addColumn(family_name, col_name); //or

g.addFamily(family_name);

4. Get the data from the table

Result result= table.get(g);

5. Get values from the result object

byte [] value = result.getValue(family, col_name); //….](https://image.slidesharecdn.com/hbase-dataoperationsfinal-240505114543-396684a5/85/HBase_-_data_operaet-le-operations-de-calciletions_final-pptx-6-320.jpg)